Extract Interesting Skyline Points in High Dimension

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

An Interview with John Thompson: Community Activist and Community Conversation Participant

An Interview with John Thompson: Community Activist and Community Conversation Participant Interview conducted by Sharon Press1 SHARON: Thanks for letting me interview you. Let’s start with a little bit of background on John Thompson. Tell me about where you grew up. JOHN: I grew up on the south side of Chicago in a predominantly black neighborhood. I at- tended Henry O Tanner Elementary School in Chicago. I know throughout my time in Chicago, I always wanted to be a basketball player, so it went from wanting to be Dr. J to Michael Jordan, from Michael Jordan to Dennis Rodman, and from Dennis Rodman to Charles Barkley. I actu- ally thought that was the path I was gonna go, ‘cause I started getting so tall so fast at a young age. But Chicago was pretty rough and the public-school system in Chicago was pretty rough, so I come here to Minnesota, it’s like a culture shock. Different things, just different, like I don’t think there was one white person in my school in Chicago. SHARON: …and then you moved to Minnesota JOHN: I moved to Duluth Minnesota. I have five other siblings — two sisters, three brothers and I’m the baby of the family. Just recently I took on a program called Safe, and it’s a mentor- ship program for children, and I was asked, “Why would you wanna be a mentor to some of these mentees?” I said, “’Cause I was the little brother all the time.” So when I see some of the youth it was a no brainer, I’d be hypocritical not to help, ‘cause that’s how I was raised… from the butcher on the corner or my next-door neighbor, “You want me to call your mother on you?” If we got out of school early, or we had an early release, my mom would always give us instructions “You go to the neighbor’s house”, which was Miss Hollandsworth, “You go to Miss Hollandsworth’s house, and you better not give her no problems. -

Vipers' Head Coach Resigns Meet

Vipers’ Head Coach Resigns Larry Brown resigns from his position due to medical issues Florence, SC – February 12, 2014 – The Pee Dee Vipers’ Head Coach, Larry Brown, has resigned from his position as a consequence of medical concerns and precautions. Brown and the Vipers took into consideration the long-term health of Brown and productivity of the team when making this decision. Brown does not feel that he can adequately provide the Vipers with the standard of coaching he is accustom to contributing and focus on improving his health at the same time. The entire Vipers Organization backs Brown in his decision and will continue to support him during his journey to good health. Andre Bovain has been named Head Coach. Bovain served as the Vipers’ Assistant Coach under Brown. NBA legend and Columbia, SC native, Xavier McDaniel will be one of the Vipers’ assistant coaches. Bryan Hergenroether will also join the Vipers’ coaching staff. The Vipers’ new coaching staff will leave much of what Brown has developed in place; plays and structure will be similar. Brown and Bovain worked closely to ensure that the coaching transition will be smooth and not dramatically impact the team’s winning style of play. Xavier McDaniel: • Played in college at Wichita State (1981-1985) • 4th overall in the 1985 NBA draft to the Seattle SuperSonics • Played 14 seasons in the NBA (1985-1998) • NBA All-Rookie First Team in 1986 • NBA All-Star in 1988 • Career Stats (NBA): Points – 13,606; Rebounds – 5,313; Assists – 1,775 • Played against names such as Magic Johnson, -

CONGRESSIONAL RECORD—SENATE, Vol. 152, Pt. 9 June 21

June 21, 2006 CONGRESSIONAL RECORD—SENATE, Vol. 152, Pt. 9 12231 The PRESIDING OFFICER. Without The resolution, with its preamble, vided as follows: Senator WARNER in objection, it is so ordered. reads as follows: control of 30 minutes, Senator LEVIN in Mr. CHAMBLISS. Mr. President, first S. RES. 519 control of 15 minutes, Senator KERRY I ask unanimous consent that a mem- Whereas on Tuesday, June 20, 2006, the in control of 15 minutes. ber of my staff, Beth Sanford, be grant- Miami Heat defeated the Dallas Mavericks I further ask unanimous consent that ed floor privileges during the remain- by a score of 95 to 92, in Dallas, Texas; following the 60 minutes, the Demo- der of this bill. Whereas that victory marks the first Na- cratic leader be recognized for up to 15 The PRESIDING OFFICER. Without tional Basketball Association (NBA) Cham- minutes to close, to be followed by the objection, it is so ordered. pionship for the Miami Heat franchise; majority leader for up to 15 minutes to Whereas after losing the first 2 games of f the NBA Finals, the Heat came back to win close. Finally, I ask consent that fol- lowing that time, the Senate proceed CONGRATULATING THE MIAMI 4 games in a row, which earned the team an to the vote on the Levin amendment, HEAT overall record of 69-37 and the right to be named NBA champions; to be followed by a vote in relation to Mr. TALENT. Mr. President, I ask Whereas Pat Riley, over his 11 seasons the Kerry amendment, with no amend- unanimous consent that the Senate with the Heat, has maintained a standard of ment in order to the Kerry amend- now proceed to the consideration of S. -

Fastest 40 Minutes in Basketball, 2012-2013

University of Arkansas, Fayetteville ScholarWorks@UARK Arkansas Men’s Basketball Athletics 2013 Media Guide: Fastest 40 Minutes in Basketball, 2012-2013 University of Arkansas, Fayetteville. Athletics Media Relations Follow this and additional works at: https://scholarworks.uark.edu/basketball-men Citation University of Arkansas, Fayetteville. Athletics Media Relations. (2013). Media Guide: Fastest 40 Minutes in Basketball, 2012-2013. Arkansas Men’s Basketball. Retrieved from https://scholarworks.uark.edu/ basketball-men/10 This Periodical is brought to you for free and open access by the Athletics at ScholarWorks@UARK. It has been accepted for inclusion in Arkansas Men’s Basketball by an authorized administrator of ScholarWorks@UARK. For more information, please contact [email protected]. TABLE OF CONTENTS This is Arkansas Basketball 2012-13 Razorbacks Razorback Records Quick Facts ........................................3 Kikko Haydar .............................48-50 1,000-Point Scorers ................124-127 Television Roster ...............................4 Rashad Madden ..........................51-53 Scoring Average Records ............... 128 Roster ................................................5 Hunter Mickelson ......................54-56 Points Records ...............................129 Bud Walton Arena ..........................6-7 Marshawn Powell .......................57-59 30-Point Games ............................. 130 Razorback Nation ...........................8-9 Rickey Scott ................................60-62 -

The Role Identity Plays in B-Ball Players' and Gangsta Rappers

Vassar College Digital Window @ Vassar Senior Capstone Projects 2016 Playin’ tha game: the role identity plays in b-ball players’ and gangsta rappers’ public stances on black sociopolitical issues Kelsey Cox Vassar College Follow this and additional works at: https://digitalwindow.vassar.edu/senior_capstone Recommended Citation Cox, Kelsey, "Playin’ tha game: the role identity plays in b-ball players’ and gangsta rappers’ public stances on black sociopolitical issues" (2016). Senior Capstone Projects. 527. https://digitalwindow.vassar.edu/senior_capstone/527 This Open Access is brought to you for free and open access by Digital Window @ Vassar. It has been accepted for inclusion in Senior Capstone Projects by an authorized administrator of Digital Window @ Vassar. For more information, please contact [email protected]. Cox playin’ tha Game: The role identity plays in b-ball players’ and gangsta rappers’ public stances on black sociopolitical issues A Senior thesis by kelsey cox Advised by bill hoynes and Justin patch Vassar College Media Studies April 22, 2016 !1 Cox acknowledgments I would first like to thank my family for helping me through this process. I know it wasn’t easy hearing me complain over school breaks about the amount of work I had to do. Mom – thank you for all of the help and guidance you have provided. There aren’t enough words to express how grateful I am to you for helping me navigate this thesis. Dad – thank you for helping me find my love of basketball, without you I would have never found my passion. Jon – although your constant reminders about my thesis over winter break were annoying you really helped me keep on track, so thank you for that. -

Renormalizing Individual Performance Metrics for Cultural Heritage Management of Sports Records

Renormalizing individual performance metrics for cultural heritage management of sports records Alexander M. Petersen1 and Orion Penner2 1Management of Complex Systems Department, Ernest and Julio Gallo Management Program, School of Engineering, University of California, Merced, CA 95343 2Chair of Innovation and Intellectual Property Policy, College of Management of Technology, Ecole Polytechnique Federale de Lausanne, Lausanne, Switzerland. (Dated: April 21, 2020) Individual performance metrics are commonly used to compare players from different eras. However, such cross-era comparison is often biased due to significant changes in success factors underlying player achievement rates (e.g. performance enhancing drugs and modern training regimens). Such historical comparison is more than fodder for casual discussion among sports fans, as it is also an issue of critical importance to the multi- billion dollar professional sport industry and the institutions (e.g. Hall of Fame) charged with preserving sports history and the legacy of outstanding players and achievements. To address this cultural heritage management issue, we report an objective statistical method for renormalizing career achievement metrics, one that is par- ticularly tailored for common seasonal performance metrics, which are often aggregated into summary career metrics – despite the fact that many player careers span different eras. Remarkably, we find that the method applied to comprehensive Major League Baseball and National Basketball Association player data preserves the overall functional form of the distribution of career achievement, both at the season and career level. As such, subsequent re-ranking of the top-50 all-time records in MLB and the NBA using renormalized metrics indicates reordering at the local rank level, as opposed to bulk reordering by era. -

Michael Jordan: a Biography

Michael Jordan: A Biography David L. Porter Greenwood Press MICHAEL JORDAN Recent Titles in Greenwood Biographies Tiger Woods: A Biography Lawrence J. Londino Mohandas K. Gandhi: A Biography Patricia Cronin Marcello Muhammad Ali: A Biography Anthony O. Edmonds Martin Luther King, Jr.: A Biography Roger Bruns Wilma Rudolph: A Biography Maureen M. Smith Condoleezza Rice: A Biography Jacqueline Edmondson Arnold Schwarzenegger: A Biography Louise Krasniewicz and Michael Blitz Billie Holiday: A Biography Meg Greene Elvis Presley: A Biography Kathleen Tracy Shaquille O’Neal: A Biography Murry R. Nelson Dr. Dre: A Biography John Borgmeyer Bonnie and Clyde: A Biography Nate Hendley Martha Stewart: A Biography Joann F. Price MICHAEL JORDAN A Biography David L. Porter GREENWOOD BIOGRAPHIES GREENWOOD PRESS WESTPORT, CONNECTICUT • LONDON Library of Congress Cataloging-in-Publication Data Porter, David L., 1941- Michael Jordan : a biography / David L. Porter. p. cm. — (Greenwood biographies, ISSN 1540–4900) Includes bibliographical references and index. ISBN-13: 978-0-313-33767-3 (alk. paper) ISBN-10: 0-313-33767-5 (alk. paper) 1. Jordan, Michael, 1963- 2. Basketball players—United States— Biography. I. Title. GV884.J67P67 2007 796.323092—dc22 [B] 2007009605 British Library Cataloguing in Publication Data is available. Copyright © 2007 by David L. Porter All rights reserved. No portion of this book may be reproduced, by any process or technique, without the express written consent of the publisher. Library of Congress Catalog Card Number: 2007009605 ISBN-13: 978–0–313–33767–3 ISBN-10: 0–313–33767–5 ISSN: 1540–4900 First published in 2007 Greenwood Press, 88 Post Road West, Westport, CT 06881 An imprint of Greenwood Publishing Group, Inc. -

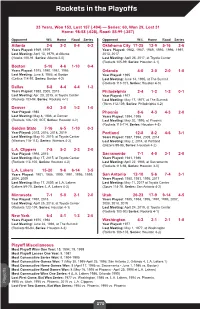

Rockets in the Playoffs

Rockets in the Playoffs 33 Years, Won 153, Lost 157 (.494) — Series: 60, Won 29, Lost 31 Home: 98-58 (.628), Road: 55-99 (.357) Opponent W-L Home Road Series Opponent W-L Home Road Series Atlanta 2-6 2-2 0-4 0-2 Oklahoma City 17-25 12-9 5-16 2-6 Years Played: 1969, 1979 Years Played: 1982, 1987, 1989, 1993, 1996, 1997, Last Meeting: April 13, 1979, at Atlanta 2013, 2017 (Hawks 100-91, Series: Atlanta 2-0) Last Meeting: April 25, 2017, at Toyota Center (Rockets 105-99, Series: Houston 4-1) Boston 5-16 4-6 1-10 0-4 Years Played: 1975, 1980, 1981, 1986 Orlando 4-0 2-0 2-0 1-0 Last Meeting: June 8, 1986, at Boston Year Played: 1995 (Celtics 114-97, Series: Boston 4-2) Last Meeting: June 14, 1995, at The Summit (Rockets 113-101, Series: Houston 4-0) Dallas 8-8 4-4 4-4 1-2 Years Played: 1988, 2005, 2015 Philadelphia 2-4 1-2 1-2 0-1 Last Meeting: Apr. 28, 2015, at Toyota Center Year Played: 1977 (Rockets 103-94, Series: Rockets 4-1) Last Meeting: May 17, 1977, at The Summit (76ers 112-109, Series: Philadelphia 4-2) Denver 4-2 3-0 1-2 1-0 Year Played: 1986 Phoenix 8-6 4-3 4-3 2-0 Last Meeting: May 8, 1986, at Denver Years Played: 1994, 1995 (Rockets 126-122, 2OT, Series: Houston 4-2) Last Meeting: May 20, 1995, at Phoenix (Rockets 115-114, Series: Houston 4-3) Golden State 7-16 6-5 1-10 0-3 Year Played: 2015, 2016, 2018, 2019 Portland 12-8 8-2 4-6 3-1 Last Meeting: May 10, 2019, at Toyota Center Years Played: 1987, 1994, 2009, 2014 (Warriors 118-113), Series: Warriors 4-2) Last Meeting: May 2, 2014, at Portland (Blazers 99-98, Series: Houston 4-2) L.A. -

2012-13 BOSTON CELTICS Media Guide

2012-13 BOSTON CELTICS SEASON SCHEDULE HOME AWAY NOVEMBER FEBRUARY Su MTWThFSa Su MTWThFSa OCT. 30 31 NOV. 1 2 3 1 2 MIA MIL WAS ORL MEM 8:00 7:30 7:00 7:30 7:30 4 5 6 7 8 9 10 3 4 5 6 7 8 9 WAS PHI MIL LAC MEM MEM TOR LAL MEM MEM 7:30 7:30 8:30 1:00 7:30 7:30 7:00 8:00 7:30 7:30 11 12 13 14 15 16 17 10 11 12 13 14 15 16 CHI UTA BRK TOR DEN CHA MEM CHI MEM MEM MEM 8:00 7:30 8:00 12:30 6:00 7:00 7:30 7:30 7:30 7:30 7:30 18 19 20 21 22 23 24 17 18 19 20 21 22 23 DET SAN OKC MEM MEM DEN LAL MEM PHO MEM 7:30 7:30 7:30 7:AL30L-STAR 7:30 9:00 10:30 7:30 9:00 7:30 25 26 27 28 29 30 24 25 26 27 28 ORL BRK POR POR UTA MEM MEM MEM 6:00 7:30 7:30 9:00 9:00 7:30 7:30 7:30 DECEMBER MARCH Su MTWThFSa Su MTWThFSa 1 1 2 MIL GSW MEM 8:30 7:30 7:30 2 3 4 5 6 7 8 3 4 5 6 7 8 9 MEM MEM MEM MIN MEM PHI PHI MEM MEM PHI IND MEM ATL MEM 7:30 7:30 7:30 7:30 7:30 7:00 7:30 7:30 7:30 7:00 7:00 7:30 7:30 7:30 9 10 11 12 13 14 15 10 11 12 13 14 15 16 MEM MEM MEM DAL MEM HOU SAN OKC MEM CHA TOR MEM MEM CHA 7:30 7:30 7:30 8:00 7:30 8:00 8:30 1:00 7:30 7:00 7:30 7:30 7:30 7:30 16 17 18 19 20 21 22 17 18 19 20 21 22 23 MEM MEM CHI CLE MEM MIL MEM MEM MIA MEM NOH MEM DAL MEM 7:30 7:30 8:00 7:30 7:30 7:30 7:30 7:30 8:00 7:30 8:00 7:30 8:30 8:00 23 24 25 26 27 28 29 24 25 26 27 28 29 30 MEM MEM BRK MEM LAC MEM GSW MEM MEM NYK CLE MEM ATL MEM 7:30 7:30 12:00 7:30 10:30 7:30 10:30 7:30 7:30 7:00 7:00 7:30 7:30 7:30 30 31 31 SAC MEM NYK 9:00 7:30 7:30 JANUARY APRIL Su MTWThFSa Su MTWThFSa 1 2 3 4 5 1 2 3 4 5 6 MEM MEM MEM IND ATL MIN MEM DET MEM CLE MEM 7:30 7:30 7:30 8:00 -

MEDIA INFORMATION to the MEDIA: Welcome to the 30Th Season of Kings Basketball in Sacramento

MEDIA INFORMATION TO THE MEDIA: Welcome to the 30th season of Kings basketball in Sacramento. The Kings media relations department will do everything possible to assist in your coverage of the club during the 2014-15 NBA season. If we can ever be of assistance, please do not hesitate to contact us. In an effort to continue to provide a professional working environment, we have established the following media guidelines. CREDENTIALS: Single-game press credentials can be reserved by accredited media members via e-mail (creden- [email protected]) until 48 hours prior to the requested game (no exceptions). Media members covering the Kings on a regular basis will be issued season credentials, but are still required to reserve seating for all games via email at [email protected] to the media relations office by the above mentioned deadline. Credentials will allow working media members entry into Sleep Train Arena, while also providing reserved press seating, and access to both team locker rooms and the media press room. In all cases, credentials are non-transferable and any unauthorized use will subject the bearer to ejection from Sleep Train Arena and forfeiture of the credential. All single-game credentials may be picked-up two hours in advance of tip-off at the media check-in table, located at the Southeast security entrance of Sleep Train Arena. PRESS ROOM: The Kings press room is located on the Southeast side of Sleep Train Arena on the operations level and will be opened two hours prior to game time. Admittance/space is limited to working media members with credentials only. -

Pat Williams Co-Founder and Senior Vice President of the Orlando Magic

Pat Williams Co-Founder and Senior Vice President of the Orlando Magic Leadership. With his vast experience leading NBA teams to the finals and grooming players into great coaches, Pat Williams knows how to identify and develop the universal qualities that all great leaders share. An author of more than 60 books – some of which address leadership and public speaking, Williams is adept at giving his audience the motivation and tools they need in order to affect real change at their organizations. In his presentations, he discusses leadership and the qualities leaders must possess, all while sharing insider sports stories, addressing how he himself became a leader, motivating his audience, and acting as a catalyst for change. Teamwork. Pat Williams has led 23 teams to the NBA playoffs and five to the finals, and largely part of his success has been his ability to meld great talents—like Dr. J and Moses Malone, Charles Barkley and Maurice Cheeks, and Shaquille O’Neal and “Penny” Hardaway—into championship teams and teammates. With this incredible experience (he has spent 49 years as a player and executive in professional baseball and basketball), Williams addresses how to create great teams that act as one and work toward common goals. During his presentations, he address teamwork, how to create and maintain great teams, conflict resolution, and overcoming adversity—all while sharing insider sports stories and offering his audience the necessary tools for affecting real change at their organizations. Winning. Having led 23 teams to the NBA playoffs and five to the finals, Pat Williams knows a thing or two about winning. -

Orlando's Dwight Howard Wins 2009-10 Nba Defensive

ORLANDO’S DWIGHT HOWARD WINS 2009-10 NBA DEFENSIVE PLAYER OF THE YEAR AWARD PRESENTED BY KIA MOTORS NEW YORK , April 20, 2010 – Dwight Howard of the Orlando Magic is the recipient of the 2009-10 NBA Defensive Player of the Year Award presented by Kia Motors, the NBA announced today, marking the second straight season the All-Star has earned the honor. The 6-11 center became the first player to lead the league in rebounding and blocks (1973-74 was the first season blocks were kept as an official statistic) in consecutive seasons, averaging 13.2 rebounds and 2.78 blocks. Howard also paced the league in field goal percentage (.612), becoming the first player to lead the NBA in all three of those categories since the NBA started keeping blocked shots. He also became only the fifth player in NBA history to lead the league in rebounding for at least three consecutive seasons. Howard recorded an NBA-high 64 double- doubles, including three 20-point/20-rebound efforts. As part of its support of the Defensive Player of the Year Award, Kia Motors America will donate a 2011 Kia Sorento CUV on behalf of Howard to the Nap Ford Community School in Parramore, a community in downtown Orlando. Nap Ford's vision is to empower students to maximize their potential to be contributing members of society. Kia Motors will present a brand new Sorento, the Official Vehicle of the NBA, to the charity of choice of each of four 2009-10 season-end award winners as part of the “The NBA Performance Awards Presented by Kia Motors.” Following this season, Kia Motors will have donated a total of 12 new vehicles to charity since the program began in 2008.