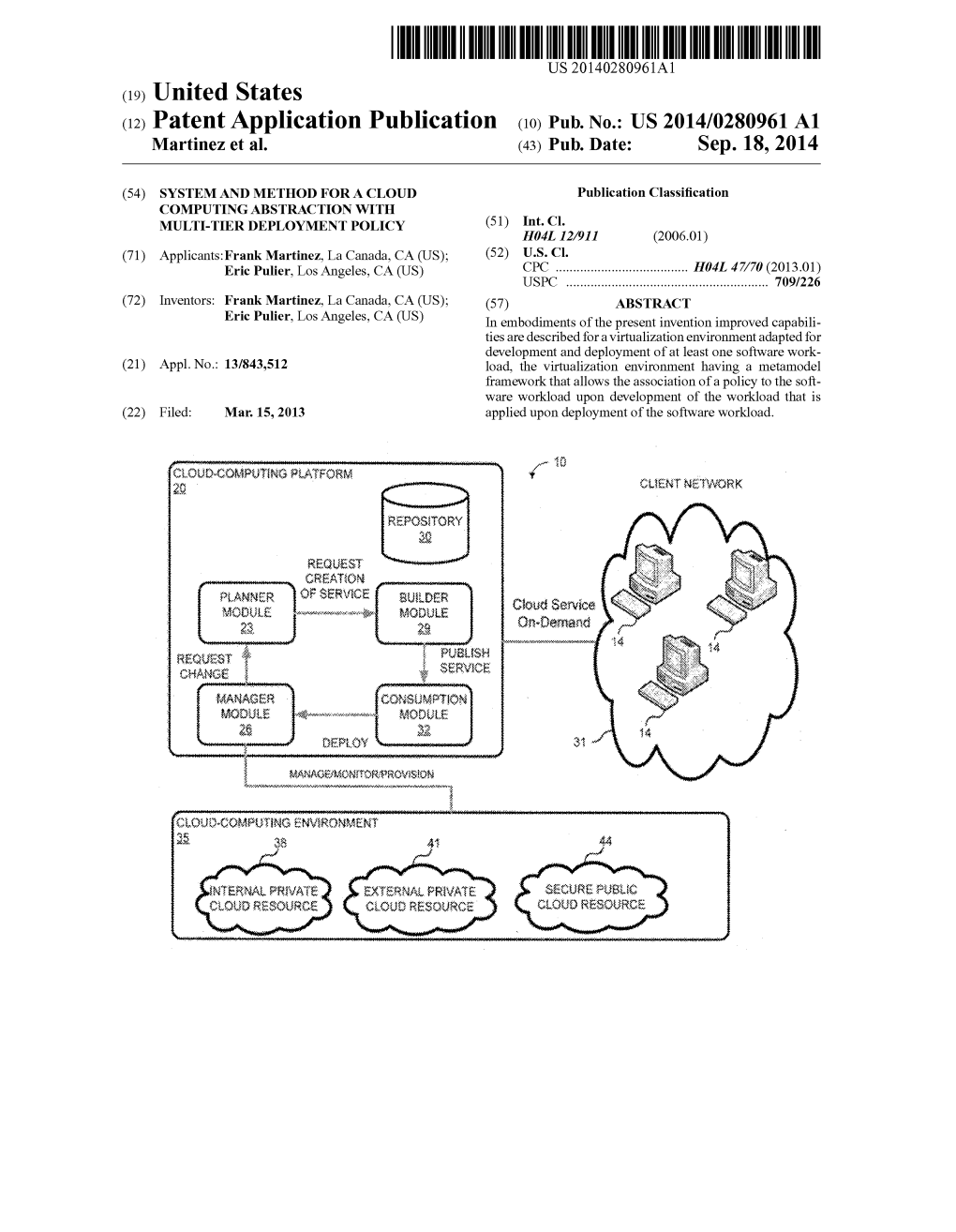

(12) Patent Application Publication (10) Pub. No.: US 2014/0280961 A1 Martinez Et Al

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Openairinterface: Democratizing Innovation in the 5G Era

Computer Networks 176 (2020) 107284 Contents lists available at ScienceDirect Computer Networks journal homepage: www.elsevier.com/locate/comnet OpenAirInterface: Democratizing innovation in the 5G Era Florian Kaltenberger a,∗, Aloizio P. Silva b,c, Abhimanyu Gosain b, Luhan Wang d, Tien-Thinh Nguyen a a Eurecom, France b Northeastern University, Massachusetts, United States c US Ignite Inc. PAWR Project Office, Washington DC, United States d Beijing University of Posts and Telecommunications, Beijing, China a r t i c l e i n f o a b s t r a c t 2019 MSC: OpenAirInterface TM (OAI) is an open-source project that implements the 3rd Generation Partnership Project 00-01 (3GPP) technology on general purpose x86 computing hardware and Off-The-Shelf (COTS) Software Defined 99-00, Radio (SDR) cards like the Universal Software Radio Peripheral (USRP). It makes it possible to deploy and operate Keywords: a 4G Long-Term Evolution (LTE) network today and 5G New Radio (NR) networks in the future at a very low Open Air Interface cost. Moreover, the open-source code can be adapted to different use cases and deployment and new functionality 5G can be implemented, making it an ideal platform for both industrial and academic research. The OAI Software New radio technology Alliance (OSA) is a non-profit consortium fostering a community of industrial as well as research contributors. Network softwarization It also developed the OAI public license which is an open source license that allows contributors to implement LTE their own patented technology without having to relinquish their intellectual property rights. -

NEWCOM# Network of Excellence in Wireless Communications

Ref. Ares(2013)1025853 - 08/05/2013 Network of Excellence NEWCOM# Network of Excellence in Wireless Communications# FP7 Contract Number: 318306 WP2.3 – Flexible communication terminals and net- works D23.1 Open Lab access and experimental setups EuWIn@CNRS/Eurecom and preliminary plan of activities Contractual Delivery Date: April 30, 2013 Actual Delivery Date: April 30, 2013 Responsible Beneficiary: CNRS/Eurecom Contributing Beneficiaries: CNRS/Eurecom, CTTC, CNIT/UniBo Estimated Person Months: 8 Dissemination Level: Public Nature: Report Version: 1.0 PROPRIETARY RIGHTS STATEMENT This document contains information, which is proprietary to the NEWCOM# Consortium. This page is left blank intentionally PROPRIETARY RIGHTS STATEMENT This document contains information, which is proprietary to the NEWCOM# Consortium. FP7 Contract Number: 318306 Deliverable ID: WP2.3 / D23.1 Document Information Document ID: D23.1 Version Date: May 7, 2013 Total Number of Pages: 65 Abstract: This deliverable introduces EuWin, the European Laboratory of Wireless Communications for the Future Internet, which is one of the main activities of Newcom#. In particular this deliverable describes the facilities established at EuWin@Eurecom and how they can be accessed in situ or remotely. Last but not least we present a preliminary plan of activities and policies for Eu- Win. Keywords: Wireless communications, real-time testbed, LTE, open-source, radio experimentation, prototyping, software defined radio, OpenAirInterface Authors IMPORTANT: The information in the following two tables will be directly used for the MPA (Monitoring Partner Activity) procedure. Upon finalisation of the deliverable, please, ensure it is accurate. Use multiple pages if need- ed. Besides, please, adhere to the following rules: • Beneficiary/Organisation: For multi-party beneficiaries (CNIT) and beneficiaries with Third Parties (CNRS and CTTC), please, indicate beneficiary and organisation (e.g., CNIT/Pisa, CNRS/Supelec). -

Linux Infrared HOWTO

Linux Infrared HOWTO Werner Heuser <wehe[AT]tuxmobil.org> Linux Mobile Edition Edition Version 3.8 TuxMobil Berlin Copyright © 2000-2011 Werner Heuser $Date: 2011-10-31 15:06:07 $ The Infrared-HOWTO provides an introduction to Linux and infrared devices and how to use the software provided by the Linux/IrDA project. This package uses IrDA(TM) compliant standards. IrDA(TM) is an industrial standard for infrared wireless communication, and most laptops made after January 1996 are equipped with an IrDA(TM) compliant infrared transceiver. Infrared ports let you communicate with printers, modems, fax machines, LANs, and other laptops or PDAs. Speed ranges from 2400bps to 4Mbps. The Linux/IrDA stack supports IrLAP, IrLMP, IrIAS, IrIAP, IrLPT, IrCOMM, IrOBEX, and IrLAN. Several of the protocols are implemented as both clients and servers. There is also support for multiple IrLAP connections, via several IrDA(TM) devices at once. The Linux/IrDA project started at the end of 1997 and experienced some major rewrites since then. Please don't expect every feature working straight yet. As far as I know Linux/IrDA is the only open source IrDA implementation available. Remote Control (RC) via infrared is the aim of the Linux Infrared Remote Control - LIRC project, and also described in this HOWTO. Copyright (c) 2000-2011 Werner Heuser. For all chapters permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with the Invariant Sections being "Preface" and "Credits", with the Front-Cover Texts being "Linux Infrared HOWTO", and with the Back-Cover Texts being the section "About the Document and the Author". -

HP Compaq 8100 Elite Series Overview

QuickSpecs HP Compaq 8100 Elite Series Overview HP Compaq 8100 Elite Small Form Factor Business PC 1 Rear I/O includes (6) USB 2.0 ports, serial port, PS/2 mouse and keyboard ports, RJ-45 network interface, DisplayPort and VGA video interfaces, and audio in/out jacks 2 Low profile expansion slots include (1) PCI slot, (1) PCI Express x1 slots and (2) PCI Express x16 graphics slot 3 Front I/O includes (4) USB 2.0 ports, dedicated headphone output, and a microphone/headphone jack 4 HP Optical Mouse 5 HP Keyboard 6 3.5” external drive bay supporting a media card reader or a secondary hard disk drive 7 5.25” external drive bay supporting an optical disk drive 8 3.5” internal drive bay supporting primary hard disk drive 9 240W standard or 89% high efficiency Power Supply 10 HP Monitor (sold separately) DA - 13524 North America — Version 19 — December 17, 2010 Page 1 QuickSpecs HP Compaq 8100 Elite Series Overview HP Compaq 8100 Elite Convertible Minitower Business PC 1 (3) 5.25” external drive bays supporting optical disk drives, removable hard disk drives, or the HP Media Card Reader 2 320W standard or 89% high efficiency Power Supply 3 Rear I/O includes (6) USB 2.0 ports, serial port, PS/2 mouse and keyboard ports, RJ-45 network interface, DisplayPort and VGA video interfaces, and audio in/out jacks 4 Front I/O includes (4) USB 2.0 ports, dedicated headphone output, and a microphone/headphone jack 5 (3) 3.5” internal drive bays supporting multiple hard disk drives 6 Full height expansion slots include (3) full-length PCI slots, (1) PCI Express x1 slot, and (2) full-length PCI Express x16 graphics slots NOTE: Second PCIe x16 slot has x4 connectivity. -

The World's Leading Modems

The World’s Leading Modems All USRobotics modems have fax capabilities and are RoHS Compliant. USR controller based modems work with most operating systems and with 3 different form factors and a variety of functionality there is a modem available for every application. Product Part Form Type/OS Product Product Description Number Factor Applications 56K PCIe USR5638 PCIe Soft modem Point of Sale Single lane fits any PCIe Faxmodem PCI Express Dialup Internet slot, full and low profile PCI 3.3V brackets included, and Win 7, Svr 08/11, POSr common driver across OSs 56K PCI USR5670 Standard, Soft modem Dialup Internet Cost-effective, full featured Faxmodem Low Profile* convertible to low profile fax PCI Internal modem. 3.3V or 5V Win 7, Server 03/08/11 *Low Profile PCI bracket in box 56K Performance USR5610C Standard PCI Controller Dialup Internet Ideal internal for Windows Pro Modem Internal Point of Sale and Linux, firmware up- 5V gradeable. Win 7, Server 03/08/11 Low Profile 56K USR2980- Low Profile, Controller Point of Sale ONLY low profile controller PCI Faxmodem OEM Standard* Dialup Internet based internal modem! PCI Internal Broadband Backup 3.3V and 5V Win 7, Server 03/08/11 *Standard PCI bracket in box 56K USB Faxmodem USR5637 USB Controller Dialup Internet Extensive OS compatibility. with Voice External Broadband Backup Small and light. V.22 Fast Point of Sale Connect for POS. No Power Win 7, Server 03/08/11 Data Capture supply needed. Retail box. 56K USB Softmodem USR5639 USB Soft modem Dialup Internet Small and light, perfect External Point of Sale laptop accessory. -

E4k and RTL2832U Based Software Defined Radio –

E4k and RTL2832U based Software Defined Radio { SDR) J.-M Friedt, G. Goavec-M´erou, 3 d´ecembre 2012 The wide diffusion of radio communication in consumer electronics yields the availability of components with capabilities compatible with these communication modes (operating at least in the 50 to 2500 MHz range, several MHz bandwidth) and affordable thanks to mass production. This trend is the opportunity to present the use of a digital broadcast televi- sion (DVB) receiver { based on the Elonics E4000 chip { whose receiving stage is so simple (zero-intermediate frequency) that it appears compat- ible with many analog and digital radiofrequency transmission modes. The raw data flow generated by the receiver is processed using classical algorithms implemented as blocs in the gnuradio software and the as- sociated graphical user interface gnuradio-companion. We will demon- strate, beyond the use of the available signal processing blocs, how to implement our own algorithms for decoding the content of the incoming data flow. We will particularly focus on the packet communication pro- tocol, ACARS and radiomodems communicating using FSK and AFSK modulation. 1 Introduction to software defined radio (SDR) Software Defined Radio (SDR) aims at processing radiofrequency (RF) signals by using as much software as possible instead of being dependent upon hardware [1, 2, 3, 4]. Considering the frequencies and the data flow when communicating through wireless RF media, most signal processing steps had to rely on dedicated hardware, with software only handling the resulting low bandwidth data flow, usually in the audio range (<100 kHz). FM and AM receivers are amongst such usual receivers, in which no software at all is required, while several digital communication modes including the commonly used amateur radio (packet) mode is decoded by processing the digitized audio output (sound card input) of the RF receiver 1. -

GNU/Linux Support for the Toshiba Satellite Pro 6000 Series

GNU/Linux support for the Toshiba Satellite Pro 6000 series Wouter Verhelst First version: 17 February 2002. This version: 27 June 2002 Updated link to ’most recent’ version at 30 May 2003 Contents 1 Introduction 3 2 Framebuffer 3 3 The X Window System 4 4 Sound 6 5 Network 6 6 Power Management 7 7 USB 8 8 PCMCIA 9 9 Wireless LAN 9 10 Floppy disk drive 9 11 IDE Chipset 10 12 SlimSelect bay 10 13 IrDA 11 1 14 Other hardware 12 14.1 Modem . 12 14.2 SD Card . 12 14.3 FireWire? . 12 15 lazy files 13 15.1 /proc/pci . 13 15.2 /proc/cpuinfo . 14 15.3 /proc/interrupts . 15 15.4 outpu of ‘toshset -q’ . 15 16 Other sites of interest 16 17 Legal stuff 16 2 1 Introduction Employers rule. Especially if they give you a laptop and allow you to install GNU/Linux on it. Since not everything instantly worked the way I hoped under GNU/Linux, I wrote this document so that other people interested enough in running GNU/Linux on a Satellite Pro 6000 can profit from my findings. Luckily, I found that most hardware is supported, and even some of the special features of the laptop are, too. Unfortunately, That doesn’t mean it all works perfectly well, and even some (very nice) features are not (yet) supported. If you get something to work in a different and/or better way than me, please be so kind to let me know so that I can include it here (and use it too, of course ;-). -

Survey on System I/O Hardware Transactions and Impact on Latency, Throughput, and Other Factors

Survey on System I/O Hardware Transactions and Impact on Latency, Throughput, and Other Factors Larsen, S., & Lee, B. (2014). Survey on System I/O Hardware Transactions and Impact on Latency, Throughput, and Other Factors. Advances in Computers, 92, 67-104. doi:10.1016/B978-0-12-420232-0.00002-7 10.1016/B978-0-12-420232-0.00002-7 Elsevier Accepted Manuscript http://cdss.library.oregonstate.edu/sa-termsofuse Survey on System I/O Hardware Transactions and Impact on Latency, Throughput, and Other Factors Steen Larsen†‡ and Ben Lee† †School of Electrical and Engineering Computer Science Oregon State University Corvallis, OR 97331 [email protected], [email protected] ‡Intel Corporation 124 NE Shannon St Hillsboro OR 97124 - 1 - I. INTRODUCTION ................................................................................................................................. 4 II. BACKGROUND AND GENERAL DISCUSSION .......................................................................... 5 III. MEASUREMENTS AND QUANTIFICATIONS ............................................................................ 9 III.A Latency .......................................................................................................................................... 9 III.B Throughput and Bandwidth Efficiency ....................................................................................... 11 IV. SURVEY OF EXISTING METHODS AND TECHNIQUES ........................................................ 13 IV.A Simple Systems - Direct I/O access and -

Latitude D630c User's Guide

Dell™ Latitude™ D630/D630c User’s Guide Model PP24L www.dell.com | support.dell.com Notes, Notices, and Cautions NOTE: A NOTE indicates important information that helps you make better use of your computer. NOTICE: A NOTICE indicates either potential damage to hardware or loss of data and tells you how to avoid the problem. CAUTION: A CAUTION indicates a potential for property damage, personal injury, or death. If you purchased a Dell™ n Series computer, any references in this document to Microsoft® Windows® operating systems are not applicable. ____________________ Information in this document is subject to change without notice. © 2007–2008 Dell Inc. All rights reserved. Trademarks used in this text: Dell, the DELL logo, Latitude, ExpressCharge, TravelLite, Strike Zone, Wi-Fi Catcher, and Client Manager are trademarks of Dell Inc.; Core, Active Management Technology, Centrino, and Intel are registered trademarks of Intel Corporation; Microsoft, Outlook, Windows, Windows Vista are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries; Bluetooth is a registered trademark owned by Bluetooth SIG, Inc. and is used by Dell under license; TouchStrip is a trademark of UPEK, Inc.; EMC is a registered trademark of EMC Corporation; ENERGY STAR is a registered trademark of the U.S. Environmental Protection Agency. As an ENERGY STAR partner, Dell Inc. has determined that this product meets the ENERGY STAR guidelines for energy efficiency. Other trademarks and trade names may be used in this document to refer to either the entities claiming the marks and names or their products. Dell Inc. disclaims any proprietary interest in trademarks and trade names other than its own. -

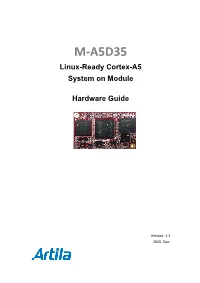

Hardware Guide

M-A5D35 Linux-Ready Cortex-A5 System on Module Hardware Guide Version: 1.1 2020 Dec. M-A5D35 User Guide Trademarks The Artila logo is a registered trademark of Artila Inc. All other trademarks or registered marks in this manual belong to their respective manufacturers. Disclaimer Information in this document is subject to change without notice and does not represent a commitment on the part of Artila. Artila provides this document as is, without warranty of any kind, either expressed or implied, including, but not limited to, its particular purpose. Artila reserves the right to make improvements and/or changes to this manual, or to the products and/or the programs described in this manual, at any time. Information provided in this manual is intended to be accurate and reliable. However, Artila assumes no responsibility for its use, or for any infringements on the rights of third parties that may result from its use. This product might include unintentional technical or typographical errors. Changes are periodically made to the information herein to correct such errors, and these changes are incorporated into new editions of the publication. ARTILA 2 M-A5D35 User Guide Document Amendment History Revision Date Remark V 0.1 2017 Jan. Initial V 1.0 2017 Jul. Hardware Guide V 1.1 2020 Dec. Kernel version updated ARTILA 3 M-A5D35 User Guide Table of Contents 1. Introduction .................................................................................................. 5 1.1 Features .............................................................................................