Optimality Theory and the Semiotic Triad: a New Approach for Songwriting, Sound Recording, and Artistic Analysis

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Clarky List 50 31/07/2012 13:06 Page 1

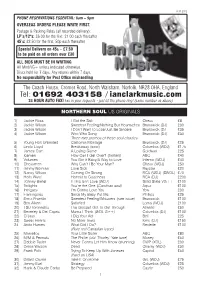

JULY 2012

PHONE RESERVATIONS ESSENTIAL: 8am – 9pm. OVERSEAS ORDERS PLEASE WRITE FIRST.

Postage & Packing Rates (all recorded delivery):
LPs/12"s: £6.00 for the first, £1.00 each thereafter
45s: £2.50 for the first, 50p each thereafter
Special Delivery on 45s – £7.50 to be paid on all orders over £30

ALL BIDS MUST BE IN WRITING. All Mint/VG+ unless indicated otherwise. Discs held for 7 days. Any returns within 7 days. No responsibility for Post Office mishandling.

The Coach House, Cromer Road, North Walsham, Norfolk, NR28 0HA, England
Tel: 01692 403158 / ianclarkmusic.com
24 HOUR AUTO FAX! Fax in your requests - just let the phone ring! (same number as above)

NORTHERN SOUL US ORIGINALS

1) Jackie Ross, I Got the Skill, Chess, £8
2) Jackie Wilson, Sweetest Feeling/Nothing But Heartaches, Brunswick (DJ), £30
3) Jackie Wilson, I Don’t Want to Lose/Just Be Sincere, Brunswick (DJ), £35
4) Jackie Wilson, Who Who Song, Brunswick (DJ), £30
Three rare promos of these soul classics
5) Young Holt Unlimited, California Montage, Brunswick (DJ), £25
6) Linda Lloyd, Breakaway (swol), Columbia (WDJ), £175
7) James Carr, A Losing Game, Goldwax, £25
8) Icemen, How Can I Get Over? (brilliant), ABC, £40
9) Volumes, You Got it Baby/A Way to Love, Inferno (WDJ), £40
10) Chessmen, Why Can’t I Be Your Man?, Chess (WDJ), £50
11) Jimmy Norman, Love Sick, Raystar, £20
12) Nancy Wilcox, Coming On Strong, RCA (WDJ) (SWOL), £70
13) Herb Ward, Honest to Goodness, RCA (DJ), £200
14) Johnny Bartel, If This Isn’t Love (WDJ), Solid State, VG++, £100
15) Twilights

Bob Marley Poems

Marley's Birthday redirects here. For other uses, see Marley (disambiguation).

Jamaican singer-songwriter

The Honourable Bob Marley, OM (pictured: Marley performs in 1980)

Born: Robert Nesta Marley, February 6, 1945, Nine Mile, Saint Ann Parish, Colony of Jamaica
Died: May 11, 1981 (aged 36), Miami, Florida, USA
Cause of death: Melanoma (skin cancer)
Other names: Donald Marley, Tuff Gong
Profession: Singer
Spouse: Rita Anderson (m. 1966)
Partner: Cindy Breakspeare (1977-1978)
Children: 11 (Sharon, Cedella, David "Ziggy", Stephen, Robert, Rohan, Karen, Stephanie, Julian, Ky-Mani, Damian)
Parents: Norval Marley, Cedella Booker
Relatives: Skip Marley (grandson), Nico Marley (grandson)

Musical career
Genres: Reggae, rock
Instruments: Vocals, guitar, drums
Years active: 1962-1981
Labels: Beverley's, Studio One, JAD, Wail'n Soul'm, Upsetter, Tuff Gong, Island
Associated acts: Bob Marley and the Wailers
Website: bobmarley.com

Robert Nesta Marley, OM (February 6, 1945 - May 11, 1981) was a Jamaican singer, songwriter and musician. Considered one of the pioneers of reggae, his musical career was marked by a fusion of elements of reggae, ska, and rocksteady, as well as his distinctive vocal and songwriting style. Marley's contribution to music increased the visibility of Jamaican music around the world and made him a global figure in popular culture for more than a decade. During his career, Marley became known as a Rastafari icon, and he imbued his music with a sense of spirituality. He is also considered a global symbol of Jamaican music, culture and identity, and was controversial in his outspoken support for marijuana legalization, while he also advocated for Pan-Africanism.

Rock & Roll

ROCK & ROLL

ART ADAMS
ROCK CRAZY BABY, CD CL 4477, € 15.50
· contains his single releases plus alternates & rehearsals and a TV interview from 1959 - 30 tracks with 16 page booklet!

BILLY ADAMS
LEGACY, CD BA 0200, € 19.50
· Fantastic rough new recordings from the Sun Studio - this record is a must for every self-professed rocker - thanks Billy, where have you been? 17 killer, no filler (21.03.2000 SK)
ROCKIN’ THRU THE YEARS 1955-2002, CD CMR 581, € 12.90

CHARLIE ADAMS
CATTIN’ AROUND, BCD 16312, € 15.34

ADDRISI BROS
CHERRY STONE, CD DEL 71254, € 17.90

HASIL ADKINS
OUT TO HUNCH...PLUS (50S RECORDINGS), CD CED 201, € 18.50
POULTRY IN MOTION - THE CHICKEN COLLECTION 55-99, CD CED 281, € 18.50

PAUL ANKA
ITALIANO, CD CNR 13050, € 15.50
PAUL ANKA / FEELINGS, CD COL 2849, € 20.50
VOL.2, SINGS HIS BIG 10, CD D2 77558, € 11.90
GERMAN-AMERICAN LINE OF HITS, CD RCA 84444, € 17.90
ESSENTIAL RCA ROCK & ROLL RECORDINGS 62-68, CD TAR 1057, € 18.90
Love Me Warm And Tender- I’d Like To Know- A Steel Guitar And A Glass Of Wine- I Never Knew Your Name- Every Night (Without You)- Eso Beso (That Kiss)- Love (Makes The World Go ‘Round)- Remember Diana- At Night- Hello Jim- It Doesn’t Matter Anymore- The Longest Day- Hurry Up And Tell Me- Did You Have A Happy Birthday- From Rocking Horse To Rocking Chair- My Baby’s Comin’ Home- In My Imagination- Ogni Volta (Every Time)- Every Day A Heart Is Broken- As If There Were No Tomorrow- I Can’t Help Loving You- When We Get There
· brand new US compilation in superb Sound!

ANN MARGRET
THE VERY BEST OF, CD BMG 69389, € 14.50

Nightshift: Oxford’s Music Magazine, Issue 285, April 2019

NIGHTSHIFT, Oxford’s Music Magazine. Issue 285, April 2019. Free every month.

[email protected] @NightshiftMag NightshiftMag nightshiftmag.co.uk

“The mask probably is a defence mechanism. Focussing on this character of Tiger Mendoza rather than me as a person removes the notion of personality or race or even gender.”

tiger mendoza: Oxford’s collaboration king talks masks, movies & remixes (photo: Helen Messenger)

Also in this issue: THE CELLAR - RIP; WHEATSHEAF in peril?; Introducing MEANS OF PRODUCTION; FESTIVAL NEWS - the latest on Truck, Cornbury, Supernormal, Tandem & more; plus all your Oxford music news, previews, reviews and five pages of gigs for April.

NIGHTSHIFT: PO Box 312, Kidlington, OX5 1ZU. Phone: 01865 372255. email: [email protected] Online: nightshiftmag.co.uk

NEWS

THE CELLAR CLOSED ITS doors for the last time on the 11th March. The venue, which has been at the heart of the Oxford music scene for 40 years under the ownership of the Hopkins family, was forced to shut after it failed to reach an agreement with landlords The St Michael’s and All Saints “charity”, over a new rent deal. The closure comes at the end of an 18-month period that saw The Cellar survive an application for a

agreed, there were no guarantees on the time frame of the building work, which required access to the shop above and various structural considerations. Essentially, the whole process took far longer than we were expecting, and we simply could not keep operating under these conditions. “We are grateful to the landlords for recognising the cultural importance of the venue, and we hope that we have saved this space from becoming a store room.

Biographical Description for The HistoryMakers® Video Oral History with H. B. Barnum

PERSON
Barnum, H. B.
Alternative Names: H. B. Barnum
Life Dates: July 15, 1936-
Place of Birth: Houston, Texas, USA
Residence: Los Angeles, CA
Occupations: Music Producer

Biographical Note

Legendary music producer and arranger H. B. Barnum has worked with an extraordinary cross-spectrum of performers in his long career. Barnum was born Hidle Brown Barnum, Jr., on July 15, 1936, in Houston, Texas. At age four, he won a nationwide talent contest for his singing and piano playing, which launched a film and radio career that included appearances on Amos ‘n’ Andy and The Jack Benny Program. Barnum recorded his first solo album at the age of fourteen as Pee Wee Barnum. He attended Manual Arts High School in Los Angeles, California. In 1955, Barnum co-founded the short-lived doo-wop group, The Dootones, at the request of Dootone label owner Dootsie Williams. When the group broke up, he joined another doo-wop group, The Robins. Barnum began producing for The Robins in 1958, and also recorded a single on his own. Barnum had his first major hit as a producer when Dodie Stevens’ “Tan Shoes and Pink Shoelaces” reached the U.S. Top 5 in 1959. Although he recorded three albums during the 1960s (The Record; The Big Voice of Barnum – H. B., That Is; and Everyone Loves H. B. – Barnum, That Is) as well as the hit single “Lost Love,” his work as a producer and an arranger began to outpace his musical career.

Extra Special Supplement to the Great R&B Files Includes Updated

The Great R&B Pioneers: Extra Special Supplement to the Great R&B Files, 2020. The R&B Pioneers Series, edited by Claus Röhnisch.

Is this the Top Ten “Super Chart” of R&B Hits?

Ranking decisions are based on information from Big Al Pavlow’s, Joel Whitburn’s, and Bill Daniels’ R&B popularity charts from the time of the songs’ original release, and on the editor’s studies of the songs’ capabilities to “hold” in quality, to endure the test of time, and to have “improved” to become “classic representatives” of the era (you may well have your own thoughts about this, but take it as a subjective opinion with a serious attempt at objectivity).

Note: Songs are listed in order of issue date, not in ranking order.

Host: Roy Brown - “Good Rocking Tonight” (DeLuxe) 1947 (youtube links)

1. 1943: Don’t Cry, Baby (Bluebird) - Erskine Hawkins and his Orchestra. Vocal refrain by Jimmy Mitchell (sic). Written by Saul Bernie, James P. Johnson and Stella Unger (sometimes listed as by Erskine Hawkins or Jimmy Mitchelle with arranger Sammy Lowe). Originally recorded by Bessie Smith in 1929. Jimmy Mitchell actually was named Mitchelle and was Hawkins’ alto sax player. Brothers Paul (tenor sax) and Dud Bascomb (trumpet) played with Hawkins on this. A relaxed piano gives extra smoothness to it. Erskine Hawkins was born in Birmingham, Alabama, and played trumpet. He was a very successful Savoy Ballroom “resident” bandleader in New York for many years.

Foals to Release Two Albums in 2019: First Single “Exits” Out Now!

Foals, 2019-01-22 13:53 CET

Foals to release two albums in 2019: first single “Exits” out now!

On 8 March, British band Foals release the first of two albums coming in 2019, “Everything not saved will be lost – Part 1”. Part 2 follows later in autumn 2019. The first song from the new album, “Exits”, is already out. “They’re two halves of the same locket,” says frontman Yannis Philippakis. “They can be listened to and appreciated individually, but fundamentally, they are companion pieces.”

Since 2015, Foals have been streamed more than half a billion times on Spotify. The band has gone from rowdy house parties to headline bookings at some of the world’s biggest festivals. Foals have also been nominated for the BRIT Awards, the Mercury Prize and the Novello awards, among others, and have won prizes at the NME and Q Awards.

A little over ten years after forming, members Yannis Philippakis (frontman), Jimmy Smith (guitar), Jack Bevan (drums), Edwin Congreave (keyboards) and Walter Gervers (bass) have created two full-length albums for 2019: “Everything not saved will be lost – Part 1” and “Everything not saved will be lost – Part 2”.

The first single from the album, “Exits”, embodies the theme of the two upcoming albums: the focus is on a disoriented world, accompanied by melodic lyrics. “There's a definite idea about the world being no longer habitable in the way that it was,” Yannis continues. “A kind of perilousness, lack of predictability and a feeling of being overwhelmed by the magnitudes of the problems we face. What's the response? And what’s the purpose of any response that one individual can have?”

“Lyrically, there are resonances with what's going on in the world at the moment,” Yannis sums up.

J2P and P2J Ver 1

June 9, 1962. BILLBOARD MUSIC WEEK. Coin Machine Operating · Music-Phonograph Merchandising · Radio-TV Programming

Los Angeles Still On Sizzling Streak

Los Angeles continued to be one of the hottest record markets in the country last week when dealers reported business again on the rise. Some dealers reported sales up as much as 100 per cent. For the past month Los Angeles has been on a hot sales streak, with records moving briskly across dealer counters. Business was spotty in other sections of the country, though Boston, New Orleans and Nashville all reported good business.

Bobby Vinton appeared to have one of the hottest of the new singles records with his "Roses Are Red" on the Epic label. Disk jumped to the No. 68 slot on BMW's "Hot 100" this week. The new Philips label appeared to have both a hot singles and a hot LP among its first releases. Single getting action was Ruth Brown's "Shake a Hand." The LP moving out on a sales wave to stereo classical buyers was the Sviatoslav Richter waxing of the Liszt Piano Concertos Nos. 1 and 2.

Last week's hot single, Emilio Pericoli's "Al Di La" on

PAGE ONE RECORDS

SINGLES
* NATIONAL BREAKOUTS *
ROSES ARE RED, Bobby Vinton, Epic 9509
* REGIONAL BREAKOUTS *
These new records, not yet on BMW's Hot 100, have been reported getting strong sales action by dealers in major market(s) listed in parenthesis.

ALBUMS
* NATIONAL BREAKOUTS *
MONO
NO BREAKOUTS THIS WEEK
STEREO
YOUNG WORLD, Lawrence Welk, Dot DLP 25428
CHAPEL BY THE SEA, Billy Vaughn, Dot DLP

The Coasters

The Coasters are an American rhythm and blues/rock and roll vocal group who had a string of hits in the late 1950s. Beginning with "Searchin'" and "Young Blood", their most memorable songs were written by the songwriting and producing team of Leiber and Stoller.[1] Although the Coasters originated outside of mainstream doo-wop, their records were so frequently imitated that they became an important part of the doo-wop legacy through the 1960s.

1 History

The Coasters were formed in October 1955 as a spin-off of the Robins, a Los Angeles-based rhythm and blues group that included Carl Gardner and Bobby Nunn. The

1957 (all were recorded in Los Angeles).

"Yakety Yak" (recorded in New York), featuring King Curtis on tenor saxophone, included the famous lineup of Gardner, Guy, Jones, and Gunter, became the act’s only national #1 single, and also topped the R&B chart. The next single, "Charlie Brown", reached #2 on both charts. It was followed by "Along Came Jones", "Poison Ivy" (#1 for a month on the R&B chart), and "Little Egypt (Ying-Yang)".

Changing popular tastes and a couple of line-up changes contributed to a lack of hits in the 1960s. During this time, Billy Guy was also working on solo projects, so New York singer Vernon Harrell was brought in to replace him for stage performances. Later members included Earl “Speedo” Carroll (lead of the Cadillacs), Ronnie Bright (the bass voice on Johnny Cymbal's "Mr. Bass Man"), Jimmy Norman, and guitarist Thomas “Curley” Palmer.

The Top Ten Vocal Groups of the Golden ‘50s

THE Top Ten Vocal Groups of the Golden ‘50s: Rhythm and Blues Harmony. Presented by Claus Röhnisch. The Great R&B Files (# 6 of 12); The R&B Pioneers Series, Volume Six of twelve. Updated December 27, 2018.

Also read:
- Top Rhythm & Blues Records - The Top R&B Hits from 30 classic years of R&B
- The John Lee Hooker Session Discography with Year-By-Year Recap
- The Blues Giants of the 1950s - Twelve Great Legends
- Ten Sepia Super Stars of Rock ‘n’ Roll – Idols making Music History
- Transitions from Rhythm to Soul – Twelve Original Soul Icons
- The True R&B Pioneers – Twelve Hit-Makers from the Early Years
- Predecessors of the Soul Explosion in the 1960s – Twelve Famous Favorites
- The R&B Pioneers Series – The Top 30 Favorites
- Clyde McPhatter - the Original Soul Star
- The Clown Princes of Rock and Roll: The Coasters
- Those Hoodlum Friends – The Coasters

The Great R&B-files, created by Claus Röhnisch. Find them all at http://www.rhythm-and-blues.info

Two great “reference” books on Doo-Wop:
- The Doo-Wop Decades, 1945-1965 by B. Lee Cooper and Frank W. Hoffman (CSIPP, US 2017)
- The Top 1000 Doo-Wop Songs by Anthony Gribin and Matthew Schiff (Ttgpress, 2014)

Introduction

Of all the countless (and mostly black) vocal groups, who gave us that exciting and wonderful harmony singing in the 1950s, I have selected ten outstanding pioneer R&B groups.

EDDIE PALMIERI NEA Jazz Master (2013)

Funding for the Smithsonian Jazz Oral History Program NEA Jazz Master interview was provided by the National Endowment for the Arts.

EDDIE PALMIERI, NEA Jazz Master (2013)

Interviewee: Eddie Palmieri (December 15, 1936 - )
Interviewer: Anthony Brown with recording engineer Ken Kimery
Date: July 8, 2012
Depository: Archives Center, National Museum of American History
Description: Transcript, 50 pp.

For additional information contact the Archives Center at 202.633.3270 or [email protected]

[BEGINNING OF DISK 1, TRACK 1]

Brown: Today is July 8, 2012, and this is the Smithsonian Jazz Oral History Interview with NEA Jazz Master, arranger-pianist-composer-cultural hero-cultural icon, and definitely an inspiration to all musicians everywhere, Eddie Palmieri, in the Omni Berkshire in New York City. Good afternoon, Mr. Palmieri.

Palmieri: Good afternoon, Anthony, and good afternoon, Ken.

Brown: This interview is being conducted by Anthony Brown and Ken Kimery. And we just want to begin by saying thank you, Mr. Palmieri...

Palmieri: Thank YOU, gentlemen.

Brown: ...for all the music, all the inspiration, all the joy you have brought to everyone who’s had the opportunity and the privilege of hearing your music, and particularly if they’ve had the chance to dance to it—that especially.

Palmieri: [LAUGHS]

Brown: I’d like to start from the beginning. If you could start with giving your full birth-name, birth-place, and birth-date.

Palmieri: Well, Edward Palmieri. I was born in 60 East 112th Street in Manhattan, known as the barrio, and at about 5 or 6 years old... That was 1936.

Position, Artist, Song: 1 Pearl Jam, Black; 2 Foo Fighters

| Position | Artist | Song |
|---|---|---|
| 1 | Pearl Jam | Black |
| 2 | Foo Fighters | Everlong |
| 3 | Muse | Knights of Cydonia |
| 4 | Nirvana | Smells Like Teen Spirit |
| 5 | Rage Against The Machine | Killing in the Name Of |
| 6 | Metallica | Master of Puppets |
| 7 | Cure | A Forest |
| 8 | Queens Of The Stone Age | No One Knows |
| 9 | Editors | Papillon |
| 10 | Killers | Mr. Brightside |
| 11 | Pearl Jam | Alive |
| 12 | Metallica | One |
| 13 | Muse | Plug in Baby |
| 14 | Radiohead | Paranoid Android |
| 15 | Alice in Chains | Would? |
| 16 | Foo Fighters | The Pretender |
| 17 | De Staat | Witch Doctor |
| 18 | Arctic Monkeys | I Bet You Look Good On The Dancefloor |
| 19 | Linkin' Park | In The End |
| 20 | Underworld | Born Slippy |
| 21 | Oasis | Champagne Supernova |
| 22 | Rammstein | Deutschland |
| 23 | Blur | Song 2 |
| 24 | System of a Down | Chop Suey! |
| 25 | Boxer Rebellion | Diamonds |
| 26 | War On Drugs | Under The Pressure |
| 27 | Radiohead | Street Spirit (Fade Out) |
| 28 | Beastie Boys | Sabotage |
| 29 | Soundgarden | Black Hole Sun |
| 30 | Radiohead | Creep |
| 31 | David Bowie | Heroes |
| 32 | Korn | Freak On A Leash |
| 33 | Arcade Fire | Rebellion (Lies) |
| 34 | Johnny Cash | Hurt |
| 35 | Pearl Jam | Jeremy |
| 36 | Tame Impala | Let It Happen |
| 37 | Pearl Jam | Just Breathe |
| 38 | Editors | Smokers Outside The Hospital Doors |
| 39 | Nirvana | Lithium |
| 40 | Tool | Schism |
| 41 | Metallica | Enter Sandman |
| 42 | Queens Of The Stone Age | Go With The Flow |
| 43 | AC/DC | Thunderstruck |
| 44 | Green Day | Basket Case |
| 45 | A Perfect Circle | Judith |
| 46 | Pearl Jam | Even Flow |
| 47 | Smashing Pumpkins | Disarm |
| 48 | Limp Bizkit | Break Stuff |
| 49 | Nirvana | Heart-Shaped Box |
| 50 | Joy Division | Love Will Tear Us Apart |
| 51 | 16 Horsepower | Black Soul Choir |
| 52 | Muse | Psycho |
| 53 | Slayer | Raining Blood |
| 54 | Nirvana | Come As You Are |
| 55 | Radiohead | Exit Music (For A Film) |
| 56 | Pixies | Where Is My |