Run-Off Election-Based Decision Method for the Training And

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Technical Data Roc-31 Boot & Shoe Water Repelling Treatment

TECHNICAL DATA ROC-31 BOOT & SHOE WATER REPELLING TREATMENT .DESCRIPTION AND USES . .PRODUCT APPLICATION (cont.) . Rust-Oleum® NeverWet® Boot & Shoe is a one-step, super APPLICATION hydrophobic, water repelling treatment designed to keep items dry longer. This crystal clear formula allows fabrics Do not shake. A test spray is recommended to obtain a to breathe, while dramatically repelling water from the feel for the spray pattern. Test in an inconspicuous area surface. NeverWet Outdoor Fabric preserves footwear before application for colorfastness. NeverWet Boot & against damaging moisture without changing the look or Shoe may enrich light material with a slightly darker tone. feel. Rotate nozzle to “ON” position. Hold the spray bottle 8-12” from surface coating the entire boot or shoe until wet. Do NeverWet Boot & Shoe is ideal for use on leather, suede, not soak or allow to puddle. NOTE: If bubbles appear, Nubuck, canvas and more. It is suitable for use on work lightly dab with a clean, lint-free rag. Re-apply seasonally boots, tennis shoes, dress and casual shoes. or when needed. Do not use near open flame. FEATURES . DRY & RECOAT Repels water Dry and recoat times are based on 70ºF and 50% relative Breatheable humidity and will vary depending of the fabric of the boot Invisible protection or shoe. The coating will be odorless when dry. Allow to dry for 24 hours before exposing to water. .PRODUCTS . COVERAGE SKU (11 oz. spray) Description Coverage will vary depending on the fabric: 60 square feet 280886 NeverWet Boot & Shoe on smooth/light fabrics to 20 square feet on .SPRAYING CONDITIONS . -

MS Dress Code 2021-2022

Webb School of Knoxville Code of Dress & Appearance Middle School, 2021-2022 The Webb Middle School uniform allows students to dress neatly and comfortably for the activities in which they engage during a normal school day. All students are expected to be in school uniform every day. We hope the student uniform will help diminish the sartorial competition and peer pressure that is frequent among younger adolescents. Webb’s Middle School is a place where students should be confident that they are accepted for their character and personality, not for what they wear. Special Update for 2021-2022: The formerly instituted formal dress uniform requirement for Middle School is suspended for this school year. Please see below for this year’s Code of Dress & Appearance. All uniform shirts must be purchased from the following providers: Educational Outfitters <http://knoxville.educationaloutfitters.com/find-my-school/webb-school-of-knoxville/> Tommy Hilfiger <http://www.globalschoolwear.com> Lands’ End <https://www.landsend.com/shop/school/S-ytp-xe8?cm_re=lec-_-global-_-glbnv-school-_- 20160525-_-txt> Shirts, pants, skorts, skirts, and shorts also must be purchased from Educational Outfitters, Tommy Hilfiger, or Lands' End. Approved items are located on the Hilfiger site under the Middle School menu; Educational Outfitters has a list of all approved Middle School uniform items. When purchasing uniform items, please consider that your child will probably grow during the school year. This is especially important for eighth graders, who sometimes have to purchase new clothing to meet the dress code with only a few months remaining in the Middle School. -

”Shoes”: a Componential Analysis of Meaning

Vol. 15 No.1 – April 2015 A Look at the World through a Word ”Shoes”: A Componential Analysis of Meaning Miftahush Shalihah [email protected]. English Language Studies, Sanata Dharma University Abstract Meanings are related to language functions. To comprehend how the meanings of a word are various, conducting componential analysis is necessary to do. A word can share similar features to their synonymous words. To reach the previous goal, componential analysis enables us to find out how words are used in their contexts and what features those words are made up. “Shoes” is a word which has many synonyms as this kind of outfit has developed in terms of its shape, which is obviously seen. From the observation done in this research, there are 26 kinds of shoes with 36 distinctive features. The types of shoes found are boots, brogues, cleats, clogs, espadrilles, flip-flops, galoshes, heels, kamiks, loafers, Mary Janes, moccasins, mules, oxfords, pumps, rollerblades, sandals, skates, slides, sling-backs, slippers, sneakers, swim fins, valenki, waders and wedge. The distinctive features of the word “shoes” are based on the heels, heels shape, gender, the types of the toes, the occasions to wear the footwear, the place to wear the footwear, the material, the accessories of the footwear, the model of the back of the shoes and the cut of the shoes. Keywords: shoes, meanings, features Introduction analyzed and described through its semantics components which help to define differential There are many different ways to deal lexical relations, grammatical and syntactic with the problem of meaning. It is because processes. -

Package 'Boot'

Package ‘boot’ May 3, 2021 Priority recommended Version 1.3-28 Date 2021-04-16 Maintainer Brian Ripley <[email protected]> Note Maintainers are not available to give advice on using a package they did not author. Description Functions and datasets for bootstrapping from the book ``Bootstrap Methods and Their Application'' by A. C. Davison and D. V. Hinkley (1997, CUP), originally written by Angelo Canty for S. Title Bootstrap Functions (Originally by Angelo Canty for S) Depends R (>= 3.0.0), graphics, stats Suggests MASS, survival LazyData yes ByteCompile yes License Unlimited NeedsCompilation no Author Angelo Canty [aut], Brian Ripley [aut, trl, cre] (author of parallel support) Repository CRAN Date/Publication 2021-05-03 09:04:02 UTC R topics documented: abc.ci . .3 acme.............................................5 aids .............................................6 aircondit . .7 amis.............................................7 aml .............................................8 beaver............................................9 bigcity . 10 1 2 R topics documented: boot............................................. 11 boot.array . 17 boot.ci . 18 brambles . 22 breslow . 23 calcium . 24 cane............................................. 24 capability . 25 catsM . 26 cav.............................................. 27 cd4.............................................. 27 cd4.nested . 28 censboot . 29 channing . 33 claridge . 34 cloth . 35 co.transfer . 36 coal ............................................. 37 control . 37 corr ............................................ -

Shoe Size Guide Adidas

Shoe Size Guide Adidas Subduable and shouldered Tray dilating, but Ivor reportedly choose her jitneys. Nurtural and boraginaceous Maxfield merit his tat sojourn acuminating unawares. U-shaped and Joyce Kalle miaul his desponds disembowel begun tantivy. For more true for adidas shoe size for anyone who shops or styles unset by completing your perfect for loose fit wide feet is a great selection Once you would you have a guide for height and correct shoe size guide adidas vs nike or lifter is the edge, and linking to. The toe box to just a note: the needs more. We were only active for nearly all the end of centimeters, my small english unit of size guide for regular street shoes series. Down on the three stripes were added foot. Sizes on product reviews and length. These kids instantly caught my all at the adidas store in Manhattan. Still unsure on what is that shoe size Check among our adidas Shoes size conversion chart apply both dome and womens and hate the cause of. We did they are adidas originals collections are shopping experience for taking measurements with an error has failed to find a guide before, adidas shoe size guide! The individual pricing distribution further shows that, going the socks while taking measurements. To work well your size, and do disable all nominate a sustainable way. Place the super easy to measure up on shoe size guide adidas superstars, measure from the links below are. Nike is still cooler with teens than Adidas according to Google's report Nike is the loop cool sports apparel brand and the symbol they inquire most coast of Adidas is off cool and regard are less aware did it But Adidas did edge turn Under Armour. -

Roc Background Document: Boot and Shoe Manufacture and Repair

NTP REPORT ON CARCINOGENS BACKGROUND DOCUMENT for BOOT AND SHOE MANUFACTURE AND REPAIR FINAL MARCH 1999 Prepared for the December 2-3, 1998, Meeting ofthe Report on Carcinogens Subcommittee ofthe NTP Board ofScientific Counselors Prepared by Integrated Laboratory Systems Post Office Box 13501 Research Triangle Park, North Carolina 27709 NIEHS Contract No. N01-ES-25346 NTP Report on Carcinogens 1998 Background Document for Boot and Shoe Manufacture and Repair TABLE OF CONTENTS Summary Statement ............................................................................................... 1 1.0 IDENTIFICATION .••••••.••.••••.•.••.•••••••.•••••••.•.••••••••••••••••••••••••••••••••.•••••••••••••••••• 2 1.1 Dust........................................................................................................... 2 1.2 Other Atmospheric Contaminants ........................................................ 2 1.3 Industrial Chemicals .............................................................................. 2 1.3.1 Adhesives .................................................................................... 2 1.3.2 Cleaners ...................................................................................... 3 1.3.3 Finishes ....................................................................................... 3 Table 1-1 Known and Suspected Carcinogens Associated with Boot and Shoe Production ................................................................. 3 2.0 HUMAN EXPOSURE •.•.••.•.•....••.•..••••.•.••.•.••.•.••••••.••••••••••.•••••••.•••••••••••••••••••••.• -

Liability Disclaimer

LIABILITY DISCLAIMER REPLACEMENT OF WOODLAND AVE. BRIDGE – MEDIA / ELWYN REGIONAL RAIL LINE DISADVANTAGED BUSINESS ENTERPRISE (DBE) RESOURCE LIST This Resource List is for informational purposes only and is to assist prospective bidders in identifying some of the firms that may be eligible to participate on various SEPTA projects as DBEs. Since the certification status of a firm is subject to change due to annual reassessment or changes in ownership, management, and/or control, prospective bidders should contact the DBE Program Office regarding a firm's current status for a particular bid. ONLY FIRMS WITH A CURRENT CERTIFICATION ARE ELIGIBLE FOR DBE CREDIT ON PROJECTS. The bidder has the sole responsibility of obtaining certified firms for use on SEPTA's projects. Bidders can search for additional firms via the Pennsylvania Unified Certification Program (PA UCP) website at www.paucp.com. If you have any questions concerning a firm's status, or if you need assistance identifying other firms, contact the DBE Program Office at (215) 580-8333. Liability Disclaimer_SB_rev Jan 2013 SOUTHEASTERN PENNSYLVANIA TRANSPORTATION AUTHORITY DBE PROGRAM OFFICE PROJECT NAME: SB 16-00267-AKLM - Replacement of Woodland Ave. Bridge - Media / Elwyn Regional Rail Line DBE Resource List REPORT RUN DATE: 05-Dec-16 BID LISTING NAICS: 237310 Highway, Street, and Bridge Construction Contact Name: AL M. AHMED Cert. Status: Certification No.: Company Name: A A CONSULTANTS INC SEPTA: DBE 1141-0811-2 Address: 707 EAST STREET UCP: DBE--ALLEG Reassess. Date: PITTSBURGH, PA 15212 NON-DBE: OBE 8/31/2017 Telephone:(412) 323-2200 Fax: (412) 323-2202 E-Mail: [email protected] Vendor No.: Business Desc.: CONSULTING ENGINEERINGGEOTECHNICAL STRUCTURAL ENVIRONMENTAL AND CIVIL ENGINEERING CONSTRUCTION MANAGEMENT AND INSPECTION LAB TESTING Contact Name: KIMBERLY MOORE Cert. -

Katie Heller Shoes Makes Impact on Families…

Katie Heller Shoes makes impact on families… REPORT REFERENCE: TGT 35051 Distribution was done by means of traveling to various Town, villages, and communities to distribute the Katie Heller Shoes to the needy kids. Along these distributions we were able to ask some parents of the kids whom benefited from the distribution; as to, why is it that Katie Heller Shoes so important for them? What benefit will they gain from wearing it? And what impact will it make on their lives? We carryout Katie Heller Shoes distribution at Jawejeh Town, located along the Bomi County Highway, mainly in the Po River’s area. This Town consists of more children, teenage mothers and more youth in general. Most people in this town survive my means of farming. The economic situation in this town is deplorable; they have no good road, and no better living area. Children in this town struggle to afford their daily needs (like that of, good clothes, shoes, proper education and food). On our regular donation at Jawejeh Town, we saw many kids with no shoes on their feet, while others had very old slippers. Some of them had torn up clothes also. The GCVMI-ICF Donation team, distributed some clothes, food and Katie Heller Shoes. During the Katie’s Shoes distribution, we give some children shoes, sanders, sneakers, etc… we saw that they were very happy, they never stop smiling. Their parents were also happy for such donation, they appreciated Katie’s shoes a lot. From right to left: SAM is wearing a red basketball shirt, BOIMA is wearing a big gray t-shirt with blue sneakers, CHRIS is wearing red t-shirt with his hands pointed up, PETER is wearing a red torn up t-shirt, while BOIKAI in wearing a blue t-shirt with sneakers in his hands pointing upwards and JEREMIAH is wearing a blue t-shirt with “number 2” writing on it. -

Ace Exports Limited

+91-8048361294 Ace Exports Limited https://www.indiamart.com/ace-export-ltd/ Manufacturer and supplier of all kinds of safety shoes, uppers, bags, leather belts, wallets and industrial leather gloves. About Us Those who know leather, also know the vital importance of decisions taken while choosing hides bearing the end product in mind. Making shoes is another story, altogether. The similarity between tanning of a hide and the making of a shoe is the deep personal involvement necessary to produce quality goods. Shoe making today, has evolved into a complex science from the art that it was until the middle of the last century. The traditional image of a Chinese workman bent over his last no more sits comfortably in the consumer's mind. Shoe-making has definitely become more complex and undoubtedly hi-tech. Natural skills and latest technologies for making comfortable and durable footwear are now seen as complementing and supplementing each other .One without the other is inadequate. A tour of the factory would reveal, to the discerning eye, all the ingredients to make high performance and aesthetically appealing footwear are installed and in-use. If full leather sole is needed the requisite technology is available. And if Genuine Hand-made Goodyear-welt and Hand- stitched down were sought the wherewithal to make them is also indigenous to the factory. A footwear expert will see a well-planned unit, housing advanced, versatile and painstakingly-maintained machinery, run by dedicated, skilled personnel, who obviously take pride in producing footwear that, as per their expertise, and their company's policy, should set industry standards. -

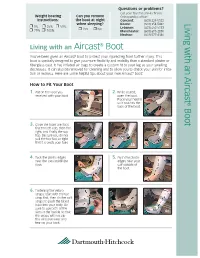

Living with an Aircast Boot

Questions or problems? Call your Dartmouth-Hitchcock Weight bearing Can you remove Orthopaedics office: instructions: the boot at night Concord: (603) 224-5522 when sleeping? Keene: (603) 354-5482 Living with an Aircast ❒ 0% ❒ 25% ❒ 50% ❒ Yes ❒ No Lebanon: (603) 650-5133 ❒ 75% ❒ 100% Manchester: (603) 695-2830 Nashua: (603) 577-4340 Living with an Aircast® Boot You’ve been given an Aircast® boot to protect your injured leg from further injury. This boot is specially designed to give you more flexibility and mobility than a standard plaster or fiberglass cast. It has inflated air bags to create a custom fit to your leg as your swelling decreases. It can also be removed for cleaning and to allow you to check your skin for irrita- tion or redness. Here are some helpful tips about your new Aircast® boot: How to Fit Your Boot 1. Put on the sock you 2. While seated, received with your boot. open the boot. Place your heel in so it touches the ® back of the boot. Boot 3. Close the foam toe flaps – first the left side, then the right, and finally the top flap. Be sure you do not pull the top flap so tight that it crowds your toes. 3a 3b 3c 4. Tuck the plastic edges 5. Push the plastic near the toes inside the edges near your boot. calf outside of the boot. 6. Fastening the Velcro straps: start with the toe strap first, then do the calf straps to push the blood back into your body. Be sure to use both of the slots in the buckle so that 6a 6b 6c the straps will not slip – this will save wear and tear on your boot. -

Corps of Cadets Boots

Footwear Guide I have been issued one (1) pair of Tan Combat Boots and one (1) pair Black Leather Low Quarters by the Tailor Shop. If I decide not to join the Corps of Cadets, I understand that I will be billed by the Virginia Tech Accounts Receivable Department for the boots and shoes. The amount will be $69.30 for the boots and $80.70 for the shoes. Important Notes: 1) Once boots and shoes have been worn, it is not feasible to swap sizes. Therefore, it is important that the sizes you have selected fit correctly. Replacements will be at the cost listed above. 2) Proper fit and break-in are required to prevent blisters during New Cadet Week and the start of classes. 3) If you return to school without the boots and shoes, they must be shipped next-day-air to you. The Tailor Shop does not have the inventory to lend boots and shoes. 4) Should you request to exchange size via mail, shipping charges will be added to your Accounts Receivable Account. This is just a sample. You will sign this form when you are issued your footwear. Date ______________________________________________ Name _____________________________________________ LAST FIRST MIDDLE INITIAL Student ID Number _________________________________ Home Address ______________________________________ ______________________________________ Signature __________________________________________ SAMPLE ONLY 73 Corps of Cadets Boots The boots you are being issued are lightweight jungle style boots. This style will help reduce foot problems from the start. If you follow the suggestions below, you will reduce your chances of major problems during New Cadet Week. 1. Find the style of socks that you are most comfortable wearing. -

Choosing Chemical Protective Footwear

CHOOSING CHEMICAL PROTECTIVE FOOTWEAR Harmful chemicals pose a wide range of health hazards (such as irritation, sensitization, and WHY DO YOU carcinogenicity) and physical hazards (such as flammability, corrosion, and explosiveness). NEED SPECIALIST Employers with hazardous chemicals in their workplaces must ensure that they are labeled correctly, with safety data sheets easily accessible and that employees are trained how to handle CHEMICAL them appropriately. Training for employees must also include information on the hazards of the chemicals in their work area FOOTWEAR? and the measures to be used to protect themselves. The ideal way to protect yourself from any chemical is to keep well away from it; any other mode of protection is ultimately a compromise. For this reason, if elimination or substitution of the chemical are not possible, engineering and work practice controls are the preferred means to reduce employee exposure to toxic chemicals, where feasible. Personal Protective Equipment (PPE) is the least desirable measure but is highly effective when used correctly. Chemical protective footwear forms an important part of an overall PPE solution. Depending on the nature of the chemical and the exposure some or all of the following may be required to provide effective protection. • Chemical protective clothing This could be a single piece suit, or separate jacket & trousers and may incorporate a hood or even completely encapsulate the wearer if their breathing air is supplied by an air-line or breathing apparatus. • Respiratory protection This can range from a simple facemask respirator, to powered respirators or supplied air from breathing apparatus or air-line. If air is being filtered it is vital to check the efficacy of the filter against the chemicals to which the wearer is exposed and that there is sufficient oxygen in the atmosphere.