Usability Themes in Open Source Software

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Desktop Migration and Administration Guide

Red Hat Enterprise Linux 7: Desktop Migration and Administration Guide. GNOME 3 desktop migration planning, deployment, configuration, and administration in RHEL 7. Last Updated: 2021-05-05. Marie Doleželová, Red Hat Customer Content Services, [email protected]; Petr Kovář, Red Hat Customer Content Services, [email protected]; Jana Heves, Red Hat Customer Content Services. Legal Notice: Copyright © 2018 Red Hat, Inc. This document is licensed by Red Hat under the Creative Commons Attribution-ShareAlike 3.0 Unported License. If you distribute this document, or a modified version of it, you must provide attribution to Red Hat, Inc. and provide a link to the original. If the document is modified, all Red Hat trademarks must be removed. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, the Red Hat logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux® is the registered trademark of Linus Torvalds in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries.

The GNOME Desktop Environment

The GNOME desktop environment. Miguel de Icaza ([email protected]), Instituto de Ciencias Nucleares, UNAM; Elliot Lee ([email protected]); Federico Mena ([email protected]), Instituto de Ciencias Nucleares, UNAM; Tom Tromey ([email protected]). April 27, 1998. Abstract: We present an overview of the free GNU Network Object Model Environment (GNOME). GNOME is a suite of X11 GUI applications that provides joy to users and hackers alike. It has been designed for extensibility and automation by using CORBA and scripting languages throughout the code. GNOME is licensed under the terms of the GNU GPL and the GNU LGPL and has been developed on the Internet by a loosely-coupled team of programmers. 1 Motivation. Free operating systems are excellent at providing server-class services, and so are often the ideal choice for a server machine. However, the lack of a consistent user interface and of consumer-targeted applications has prevented free operating systems from reaching the vast majority of users: the desktop users. As such, the benefits of free software have only been enjoyed by the technically savvy computer user community. Most users are still locked into proprietary solutions for their desktop environments. By using GNOME, free operating systems will have a complete, user-friendly desktop which will provide users with powerful and easy-to-use graphical applications. Many people have suggested that the cause for the lack of free user-oriented applications is that these do not provide enough excitement to hackers, as opposed to system-level programming. Since most of the GNOME code had to be written by hackers, we kept them happy: the magic recipe here is to design GNOME around an adrenaline response by trying to use exciting models and ideas in the applications.

Encryption Introduction to Using 7-Zip

IT Services Training Guide: Encryption, Introduction to using 7-Zip. IT Services Training Team, The University of Manchester. Email: [email protected] www.itservices.manchester.ac.uk/trainingcourses/coursesforstaff Version: 5.3. Table of Contents: Introduction; Compress/encrypt individual files; Email compressed/encrypted files; Decrypt an encrypted file; Create a self-extracting encrypted file; Decrypt/un-zip a file; Appendix A: Downloading and installing 7-Zip; Help and Further Reference. Introduction: 7-Zip is an application that allows you to: Compress a file (for example, a file that is 5MB can be compressed to 3MB) Secure the

Important FreeDOS Operating System Information

Important FreeDOS Operating System Information. This PC has the FreeDOS operating system preinstalled, which provides only limited DOS-based functionality until another operating system is installed. Software License and Warranty: HP is not responsible for support of the FreeDOS operating system, and it is important to note that some features of FreeDOS may not function on this system. Some hardware options ordered and delivered with this PC may not be supported under the FreeDOS operating system, nor will HP be responsible for providing driver support for such hardware. Please refer to the standard warranty document included with your PC to learn about software technical support. A copy of the General Public License for FreeDOS can be found on the PC in the directory C:\FDOS\SOURCE\FREECOM\ and can be viewed by entering on the command line: c:\fdos\source\freecom\license The Documentation and Utilities CD supports one or more languages. Because the Documentation and Utilities CD does not autorun on some operating systems, you have to explore the directory of the CD to access the documentation files. Browse the documentation folder on the CD, locate the appropriate language subfolder, then open the product folder to the Safety & Comfort Guide. NOTE: Before you can view the contents of the Documentation and Utilities CD, you must install a licensed operating system, as well as a compatible version of Adobe Acrobat Reader, available at: http://www.adobe.com Utilities: The Documentation and Utilities CD also contains a PC diagnostic program called PC Doctor. PC Doctor either runs automatically when you start your PC with this CD in the optical drive, or when you install it to your hard drive after you have installed a licensed operating system.

Pack, Encrypt, Authenticate Document Revision: 2021 05 02

PEA: Pack, Encrypt, Authenticate. Document revision: 2021 05 02. Author: Giorgio Tani. Translation: Giorgio Tani. This document refers to: PEA file format specification version 1 revision 3 (1.3); PEA file format specification version 2.0; PEA 1.01 executable implementation. The present documentation is released under the GNU GFDL License. The PEA executable implementation is released under the GNU LGPL License; please note that all units provided by the Author are released under LGPL, while Wolfgang Ehrhardt’s crypto library units used in PEA are released under the zlib/libpng License. The PEA file format and PCOMPRESS specifications are hereby released into the PUBLIC DOMAIN: the Author neither has, nor is aware of, any patents or pending patents relevant to this technology and does not intend to apply for any patents covering it. As far as the Author knows, the PEA file format in all of its parts is free and unencumbered for all uses. PEA is on the PeaZip project official sites: https://peazip.github.io , https://peazip.org , and https://peazip.sourceforge.io For more information about the licenses: GNU GFDL License, see http://www.gnu.org/licenses/fdl.txt GNU LGPL License, see http://www.gnu.org/licenses/lgpl.txt Content: Section 1: PEA file format; Description; PEA 1.3 file format details; Differences between 1.3 and older revisions; PEA 2.0 file format details; PEA file format’s and implementation’s limitations; PCOMPRESS compression scheme; Algorithms used in PEA format; PEA security model; Cryptanalysis of PEA format; Data recovery from

Reactos-Devtutorial.Pdf

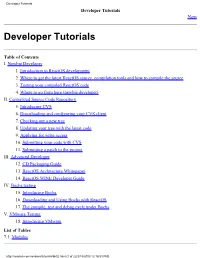

Developer Tutorials. Table of Contents: I. Newbie Developer: 1. Introduction to ReactOS development; 2. Where to get the latest ReactOS source, compilation tools and how to compile the source; 3. Testing your compiled ReactOS code; 4. Where to go from here (newbie developer). II. Centralized Source Code Repository: 5. Introducing CVS; 6. Downloading and configuring your CVS client; 7. Checking out a new tree; 8. Updating your tree with the latest code; 9. Applying for write access; 10. Submitting your code with CVS; 11. Submitting a patch to the project. III. Advanced Developer: 12. CD Packaging Guide; 13. ReactOS Architecture Whitepaper; 14. ReactOS WINE Developer Guide. IV. Bochs testing: 15. Introducing Bochs; 16. Downloading and Using Bochs with ReactOS; 17. The compile, test and debug cycle under Bochs. V. VMware Testing: 18. Introducing VMware. List of Tables: 7.1. Modules. http://reactos.com/rosdocs/tutorials/bk02.html (1 of 2) [3/18/2003 12:16:53 PM] Part I. Newbie Developer. http://reactos.com/rosdocs/tutorials/bk02pt01.html [3/18/2003 12:16:54 PM] Chapter 1.

7Z Zip File Download How to Open 7Z Files

7z zip file download. How to Open 7z Files. This article was written by Nicole Levine, MFA. Nicole Levine is a Technology Writer and Editor for wikiHow. She has more than 20 years of experience creating technical documentation and leading support teams at major web hosting and software companies. Nicole also holds an MFA in Creative Writing from Portland State University and teaches composition, fiction-writing, and zine-making at various institutions. There are 8 references cited in this article, which can be found at the bottom of the page. This article has been viewed 292,786 times. If you’ve come across a file that ends in “.7z”, you’re probably wondering why you can’t open it. These files, known as “7z” or “7-Zip files,” are archives of one or more files in one single compressed package. You’ll need to install an unzipping app to extract files from the archive. These apps are usually free for any operating system, including iOS and Android. Learn how to open 7z files with iZip on your mobile device, 7-Zip or WinZip on Windows, and The Unarchiver in Mac OS X. 7z zip file download. If you are looking for 7-Zip, you have come to the right place. We explain what 7-Zip is and point you to the official download. What is 7-Zip? 7-Zip is compression and extraction software, similar to WinZip and WinRAR, that can read from and write to .7z archive files. It can also open other compressed archives such as .zip and .rar, among others, and offers limited access to the contents of .msi (Microsoft Installer) and .exe (executable) files.

WinZip 12 Reviewer's Guide

Introducing WinZip® 12. WinZip® is the most trusted way to work with compressed files. No other compression utility is as easy to use or offers the comprehensive and productivity-enhancing approach that has made WinZip the gold standard for file-compression tools. With the new WinZip 12, you can quickly and securely zip and unzip files to conserve storage space, speed up e-mail transmission, and reduce download times. State-of-the-art file compression, strong AES encryption, compatibility with more compression formats, and new intuitive photo compression make WinZip 12 the complete compression and archiving solution. Building on the favorite features of a worldwide base of several million users, WinZip 12 adds new features for image compression and management, support for new compression methods, improved compression performance, support for additional archive formats, and more. Users can work smarter, faster, and safer with WinZip 12. Who will benefit from WinZip® 12? The simple answer is anyone who uses a PC. Any PC user can benefit from the compression and encryption features in WinZip to protect data, save space, and reduce the time to transfer files on the Internet. There are, however, some PC users to whom WinZip is an even more valuable and essential tool. Digital photo enthusiasts: As the average file size of their digital photos increases, people are looking for ways to preserve storage space on their PCs. They have lots of photos, so they are always seeking better ways to manage them. Sharing their photos is also important, so they strive to simplify the process and reduce the time of e-mailing large numbers of images.

Virtualization

Print Date: 21.06.2013. Transfer Files to FreeDOS Guest OS with ISO Image. Oracle VM VirtualBox. Brainboxes Limited, 18 Hurricane Drive, Liverpool International Business Park, Speke, Liverpool, L24 8RL, UK. Tel: +44 (0)151 220 2500 Fax: +44 (0)151 252 0446 Web: www.brainboxes.com Email: [email protected] Contents: 1. Version History. © Copyright Brainboxes Limited 2013 Page 2 of 10 This document demonstrates how to transfer files from the ISO disc image to the FreeDOS guest operating system using the Oracle VM VirtualBox application. 1. Start the FreeDOS guest operating system by clicking “Start” as shown below: 2. Once the guest operating system has booted successfully, click “Devices -> CD/DVD Devices -> Choose a virtual CD/DVD disk file…” as shown below: 3. Browse to “Desktop” where the ISO image file is located, select the “PDS.iso” image file, and then click “Open” when you are presented with the following: 4. Type “md PDS” at the DOS prompt, and then press “Enter” in order to create a PDS directory under the C: drive as shown below: 5. Type “D:” at the DOS prompt, and then press “Enter” when you are presented with the following: 6. Type “dir” at the DOS prompt; the listing shows the folder “PDS” in the CD/DVD drive of the guest operating system, with the same contents as the ISO disc image file created previously, as shown in the following: 7.

Enterprise Desktop at Home with FreeIPA and GNOME

Enterprise desktop at home with FreeIPA and GNOME. Alexander Bokovoy ([email protected]). January 30th, 2016, FOSDEM’16. Enterprise? * almost: local office network is not managed by a company’s IT department; company services’ hosting is cloudy; there is no one cloud to rule them all. I have FEW identities: A corporate identity for services sign-on; Home-bound identity to access local resources; Cloud-based (social networking) identities; Free Software hats to wear; Certificates and smart cards to present myself legally; Private data to protect and share. I want them to be usable at the same time. Enterprise desktop

Installing a Real-Time Linux Kernel for Dummies

Real-Time Linux for Dummies. Jeroen de Best, Roel Merry. DCT 2008.103. Eindhoven University of Technology, Department of Mechanical Engineering, Control Systems Technology group, P.O. Box 513, WH -1.126, 5600 MB Eindhoven, the Netherlands. Phone: +31 40 247 42 27 Fax: +31 40 246 14 18 Email: [email protected], [email protected] Website: http://www.dct.tue.nl Eindhoven, January 5, 2009. Contents: 1 Introduction. 2 Installing a Linux distribution: 2.1 Ubuntu 7.10; 2.2 Mandriva 2008 ONE; 2.3 Knoppix 3.9. 3 Installing a real-time kernel: 3.1 Automatic (Ubuntu only); 3.1.1 CPU Scaling Settings; 3.2 Manually; 3.2.1 Startup/shutdown problems. 4 EtherCAT for Unix: 4.1 Build Sources; 4.1.1 Alternative timer in the EtherCAT Target. 5 TUeDACs: 5.1 Download software; 5.2 Configure and build software; 5.3 Test program. 6 Miscellaneous: 6.1 Installing ps2 and ps4 printers; 6.1.1 In Ubuntu 7.10; 6.1.2 In Mandriva 2008 ONE; 6.2 Configure the internet connection; 6.3 Installing Matlab2007b for Unix; 6.4 Installing JAVA; 6.5 Installing SmartSVN; 6.6 Ubuntu 7.10, Gutsy Gibbon freezes every 10 minutes for approximately 10 sec; 6.7 Installing Syntek Semicon DC1125 Driver. Bibliography. A Menu.lst HP desktop computer DCT lab WH -1.13. Chapter 1 Introduction. This document describes the steps needed in order to obtain a real-time operating system based on a Linux distribution.

How Do You Download Driver From 7 Zip Download Arduino and Install Arduino Driver

how do you download driver from 7 zip. Download Arduino and install Arduino driver. You can download the latest version directly from this page: http://arduino.cc/en/Main/Software. When the download finishes, unzip the downloaded file. Make sure to preserve the folder structure. Double-click the folder to open it. There should be a few files and sub-folders inside. Connect Seeeduino to PC. Connect the Seeeduino board to your computer using the USB cable. The green power LED (labeled PWR) should go on. Install the driver. Installing drivers for the Seeeduino with Windows 7. Plug in your board and wait for Windows to begin its driver installation process. After a few moments, the process will fail. Open the Device Manager by right-clicking “My Computer” and selecting Control Panel. Look under Ports (COM & LPT). You should see an open port named "USB Serial Port". Right-click on the "USB Serial Port" and choose the "Update Driver Software" option. Next, choose the "Browse my computer for Driver software" option. Finally, select the driver file named "FTDI USB Drivers", located in the "Drivers" folder of the Arduino Software download. Note: the FTDI USB Drivers are from Arduino. When you install drivers for other controllers, such as Xadow Main Board, Seeeduino Clio, or Seeeduino Lite, you need to download the corresponding driver file, save it, and then select the driver file you have downloaded. The dialog box below appears automatically if you have installed the driver successfully. You can check that the drivers have been installed by opening the Windows Device Manager. Look for a "USB Serial Port" in the Ports section.