Video Streaming

Recommended publications

What Can Apple TV™ Teach Us About Digital Signage?

Mark Hemphill | @ScreenScape, July 2016
Executive Summary
As a savvy communicator, you could be increasing revenue by building dynamic media channels into every one of your business locations via digital signage, yet something is holding you back. The cost and complexity associated with such technology is still too high for the average business owner to tackle. Or is it?
Some of the traditional vendors in the digital signage industry make the task of connecting and controlling a few screens out to be a momentous challenge, a task for highly paid experts. On the other hand, a new class of technologies has arrived in the home entertainment world that strips the exercise down to basics. And it’s surging in popularity.
Apple TV™ and similar streaming Internet devices have eliminated the need for complicated hardware and complex installation processes, allowing average non-technical homeowners to bring the Internet to their television screens. This trend in consumer entertainment offers insights we can adapt to the commercial world: lessons for digital signage vendors and their customers that lead towards simpler, more cost-effective, more user-friendly, and more scalable solutions.
This article explores some of the following lessons in detail:
• Simple appliances outperform PCs and Macs as digital media players
• Sourcing and mounting a TV is not the critical challenge
• In order to scale, digital signage networks must be designed for average non-technical users to operate
• Creating an epic viewing experience isn’t as important as enabling a large and diverse network
• Simplicity and cost-effectiveness go hand in hand
By making it easier to connect and control screens, tomorrow’s digital signage solutions will help more businesses to reap the benefits of place-based media.

Digital TV “Laboratory” Using Open Source Software

Objectives
Carry out a survey of open source software, in particular what is available on Sourceforge.net, related to implementing the audiovisual signal processing operations that typically exist in digital TV production systems. Applications should be identified for:
• video, sound and image acquisition
• encoding in different formats (MPEG-2, MPEG-4, JPEG, etc.)
• conversion between formats
• pre- and post-processing (such as filtering)
• editing
• annotation
Installation of the programs and testing of their functionality.
Linux
Acquisition Filters Encoding :: VLC :: Xine :: Ffmpeg :: Kino (DV) :: VLC :: Transcode :: Tvtime Television Viewer (TV) :: Video4Linux Grab Editing :: Mpeg4IP :: Kino (DV) Conversion :: Jashaka :: Kino :: Cinelerra :: VLC Playback :: Freej :: VLC :: FFMpeg :: Effectv :: MJPEG Tools :: PlayerYUV :: Lives :: Videometer :: MPlayer Annotation :: Xmovie :: Agtoolkit :: Video Squirrel
VLC (VideoLan Client)
VLC - the cross-platform media player and streaming server. VLC media player is a highly portable multimedia player for various audio and video formats (MPEG-1, MPEG-2, MPEG-4, DivX, mp3, ogg, ...) as well as DVDs, VCDs, and various streaming protocols. It can also be used as a server to stream in unicast or multicast in IPv4 or IPv6 on a high-bandwidth network. http://www.videolan.org/
Kino (DV)
Kino is a non-linear DV editor for GNU/Linux. It features excellent integration with IEEE-1394 for capture, VTR control, and recording back to the camera. It captures video to disk in Raw DV and AVI format, in both type-1 DV and type-2 DV (separate audio stream) encodings. http://www.kinodv.org/
Tvtime Television Viewer (TV)
Tvtime is a high quality television application for use with video capture cards on Linux systems.

OM-Cube Project

V. Hiribarren, N. Marchand, N. Talfer
[email protected] - [email protected] - [email protected]
Abstract. The OM-Cube project is composed of several components, such as a minimal operating system, a multimedia player, an LCD display and an infra-red controller. They should be chosen to fit the hardware of an embedded system. Several similar projects can provide information on the software that can be chosen. This paper aims to examine the different available tools to build the OM-Multimedia machine. The main purpose is to explore different ways to build an embedded system that fits the hardware and fulfills the project.
1 A Minimal Operating System
The operating system is the core of the embedded system, and therefore should be chosen with care. Because of its popularity, a Linux based system seems the best choice, but other open systems exist and should be considered. Once a system has been selected, all unnecessary components may be removed to get a minimal operating system.
1.1 A Linux Operating System
Using a Linux kernel has several advantages. As it’s a popular kernel, many drivers and much documentation are available. Linux is an open source kernel; therefore anyone can modify its sources and recompile it. Using Linux in an embedded system requires adapting the kernel to the hardware and to the system needs. A simple method for building a Linux embedded system is to create a partition on a development host and to mount it on a temporary mount point. This partition is filled as one goes along, and then the final distribution is put on the target host [Fich02] [LFS].

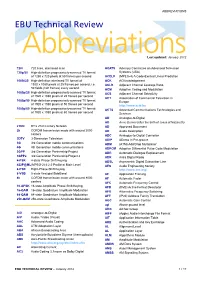

ABBREVIATIONS EBU Technical Review

Abbreviations (last updated: January 2012)
720i: 720 lines, interlaced scan
720p/50: High-definition progressively-scanned TV format of 1280 x 720 pixels at 50 frames per second
1080i/25: High-definition interlaced TV format of 1920 x 1080 pixels at 25 frames per second, i.e. 50 fields (half frames) every second
1080p/25: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 25 frames per second
1080p/50: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 50 frames per second
1080p/60: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 60 frames per second
21CN: BT’s 21st Century Network
2k: COFDM transmission mode with around 2000 carriers
3DTV: 3-Dimension Television
3G: 3rd Generation mobile communications
4G: 4th Generation mobile communications
3GPP: 3rd Generation Partnership Project
3GPP2: 3rd Generation Partnership
ACATS: Advisory Committee on Advanced Television Systems (USA)
ACELP: (MPEG-4) A Code-Excited Linear Prediction
ACK: ACKnowledgement
ACLR: Adjacent Channel Leakage Ratio
ACM: Adaptive Coding and Modulation
ACS: Adjacent Channel Selectivity
ACT: Association of Commercial Television in Europe, http://www.acte.be
ACTS: Advanced Communications Technologies and Services
AD: Analogue-to-Digital
AD: Anno Domini (after the birth of Jesus of Nazareth)
AD: Approved Document
AD: Audio Description
ADC: Analogue-to-Digital Converter
ADIP: ADress In Pre-groove
ADM: (ATM) Add/Drop Multiplexer
ADPCM: Adaptive Differential Pulse Code Modulation
ADR: Automatic Dialogue Replacement

Nomadik Application Processor Andrea Gallo Giancarlo Asnaghi ST Is #1 World-Wide Leader in Digital TV and Consumer Audio

Andrea Gallo, Giancarlo Asnaghi
January 14, 2009
ST is #1 world-wide leader in Digital TV and Consumer Audio (MP3 portable player, digital satellite radio, set top box, digital car radio, DVD player). MMDSP+ inside more than 200 million produced chips.
ST leader in mobile phone chips
Nomadik is based on this heritage, providing:
– Unrivalled multimedia performance
– Very low power consumption
– Scalable performance
Best Application Processor 2004
• Lowest power consumption
• Scalable performance
• Video/Audio quality
• Cost-effective
Nominees: Intel XScale PXA260, NeoMagic MiMagic 6, Nvidia MQ-9000, STMicroelectronics Nomadik STn8800, Texas Instruments OMAP 1611
Nomadik is a family of Application Processors
– Distributed processing architecture: ARM9 + multiple Smart Accelerators
– Support of a wide range of OS and applications
– Seamless integration in the OS through standard API drivers and MM framework
Roadmap ...
Some Nomadik products on the market...
STn8815 block diagram
Nomadik: a true real time multiprocessor platform
[Figure: platform block diagram. Labels that survive extraction: ARM926 (L1 + L2), general purpose, master OS; SDRAM; SRAM (Level 2 cache system for video); DMA; memory controller; peripherals; multi-layer AHB bus; MMDSP+ Video and MMDSP+ Audio (RTOS, multi-thread scheduler FSM; 66 MHz, 16-bit; 133 MHz, 24-bit); NAND Flash (mass storage); annotations "unlimited space", "limited bandwidth" and "“no” bandwidth".]

The Top 10 Open Source Music Players Scores of Music Players Are Available in the Open Source World, and Each One Has Something That Is Unique

For U & Me | Overview
98 | FEBRUARY 2014 | OPEN SOURCE FOR YoU | www.LinuxForU.com
Scores of music players are available in the open source world, and each one has something that is unique. Here are the top 10 music players for you to check out.
Everybody likes to use a music player that is hassle-free and easy to operate, besides having plenty of features to enhance the music experience. The open source community has developed many music players. This article lists the features of the ten best open source music players, which will help you to select the player most suited to your musical tastes. The article also helps those who wish to explore the features and capabilities of open source music players.
Amarok
Amarok is a part of the KDE project and is the default music player in Kubuntu. Mark Kretschmann started this project. The Amarok experience can be enhanced with custom scripts or by using scripts contributed by other developers. Its first release was on June 23, 2003. Amarok has been developed in C++ using Qt (the toolkit for cross-platform application development). Its tagline, ‘Rediscover your Music’, is indeed true, considering its long list of features.
Table 1: Features at a glance
Columns: Name/Feature; Fade/gapless playback; iPod sync and USB device support; Radio and podcasts; Remotely controlled; Last.fm integration; Playback resume; Track info and lyrics lookup; Smart/dynamic playlist
Amarok (values in extracted order): Crossfade (Xine), Gapless (Gstreamer); Both; Yes; Both; Yes; Both; Yes; Yes
aTunes: Fade only

MPLAYER-10 Mplayer-1.0Pre7-Copyright

MPlayer was originally written by Árpád Gereöffy and has been extended and worked on by many more since then, see the AUTHORS file for an (incomplete) list. You are free to use it under the terms of the GNU General Public License, as described in the LICENSE file. MPlayer as a whole is copyrighted by the MPlayer team. Individual copyright notices can be found in the file headers. Furthermore, MPlayer includes code from several external sources:
Name: FFmpeg
Version: CVS snapshot
Homepage: http://www.ffmpeg.org
Directory: libavcodec, libavformat
License: GNU Lesser General Public License, some parts GNU General Public License, GNU General Public License when combined
Name: FAAD2
Version: 2.1 beta (20040712 CVS snapshot) + portability patches
Homepage: http://www.audiocoding.com
Directory: libfaad2
License: GNU General Public License
Name: GSM 06.10 library
Version: patchlevel 10
Homepage: http://kbs.cs.tu-berlin.de/~jutta/toast.html
Directory: libmpcodecs/native/
License: permissive, see libmpcodecs/native/xa_gsm.c
Name: liba52
Version: 0.7.1b + patches
Homepage: http://liba52.sourceforge.net/
Directory: liba52
License: GNU General Public License
Name: libdvdcss
Version: 1.2.8 + patches
Homepage: http://developers.videolan.org/libdvdcss/
Directory: libmpdvdkit2
License: GNU General Public License
Name: libdvdread
Version: 0.9.3 + patches
Homepage: http://www.dtek.chalmers.se/groups/dvd/development.shtml
Directory: libmpdvdkit2
License: GNU General Public License
Name: libmpeg2
Version: 0.4.0b + patches

Technology Ties: Getting Real After Microsoft

icarus, Winter/Spring 2005
Scott Sher, Wilson Sonsini Goodrich & Rosati, Reston, VA, [email protected]
The D.C. Circuit’s 2001 decision in United States v. Microsoft opened the door for high-tech monopolists to tie products together if they can demonstrate that the procompetitive justification for their practice outweighs the competitive harm. Although not yet endorsed by the Supreme Court, the Microsoft decision poses a significant threat to stand-alone application providers selling products in dominant operating environments where the operating systems (“OS”) provider also sells competitive stand-alone applications. In the recently-filed private lawsuit, Real Networks v. Microsoft¹, Real Networks included in its complaint tying allegations against Microsoft, a dominant OS provider, claiming that the defendant tied the sale of its OS to the purchase of (allegedly less desirable) applications for its operating system. The fate of lawsuits such as Real is almost assuredly contingent upon whether courts follow the Microsoft holding and resulting legal standard or adhere to a more traditional tying analysis, which requires per se condemnation of such practices.
These cases and the development of the law of technology ties are likely to have a profound effect on the development of future operating systems and the long-term viability of many independent application providers who offer products that function in such OSs. With dominant OS providers like Microsoft reaching further into the application world, through the development and/or acquisition of applications that function on their dominant OS environments, OS providers increasingly are becoming competitors to independent application providers, while at the same time providing the industry-standard OS on which these applications run.

Review: a Digital Video Player to Support Music Practice and Learning Emond, Bruno; Vinson, Norman; Singer, Janice; Barfurth, M

NRC Publications Archive
ReView: a digital video player to support music practice and learning
Emond, Bruno; Vinson, Norman; Singer, Janice; Barfurth, M. A.; Brooks, Martin
This publication could be one of several versions: author’s original, accepted manuscript or the publisher’s version.
Publisher’s version: Journal of Technology in Music Learning, 4, 1, 2007
NRC Publications Record: https://nrc-publications.canada.ca/eng/view/object/?id=2573dfda-4fa0-4a7f-b8e0-4014b537e486 (English), https://publications-cnrc.canada.ca/fra/voir/objet/?id=2573dfda-4fa0-4a7f-b8e0-4014b537e486 (French)
Access and use of this website and the material on it are subject to the Terms and Conditions set forth at https://nrc-publications.canada.ca/eng/copyright (French version: https://publications-cnrc.canada.ca/fra/droits). Read these terms and conditions carefully before using this website.
Questions? Contact the NRC Publications Archive team at [email protected]. If you wish to email the authors directly, please see the first page of the publication for their contact information.

Cutting the Cable Cord

3/11/2021
• March 11, 6:30-7:30 PM
• FREE! NO REGISTRATION REQUIRED
Thinking of cutting the cord? What are your options? Why and why not do it, alternatives and more sources of entertainment: ChromeCast, Apple TV, Roku, Sling, Hulu, Netflix.
To log in live from home go to: https://kslib.zoom.us/j/561178181
Watch the recorded presentation anytime after the 15th: https://kslib.info/1180/Digital-Literacy---Tech-Talks
Presenter: Nathan, IT Supervisor, at the Newton Public Library
The views, opinions, and information expressed during this webinar are those of the presenter and are not the views or opinions of the Newton Public Library. The Newton Public Library makes no representation or warranty with respect to the webinar or any information or materials presented therein. Users of webinar materials should not rely upon or construe the information or resource materials contained in this webinar as legal or other professional advice and should not act or fail to act based on the information in these materials without seeking the services of a competent legal or other specifically specialized professional.
1. Protect your computer
• A computer should always have the most recent updates installed for spam filters, anti-virus and anti-spyware software and a secure firewall.
What is “Cutting the Cord”?
Cord Cutting (verb): The act of canceling your cable or satellite subscription in favor of a more affordable television viewing option.
Cable TV
Cable is the immediate answer and obvious choice with the most

Software Installation

Please note students should not uninstall any software from this list.
Software: Rationale
• Windows 8.1 Professional/Windows 10 (64 Bit): Nb Microsoft Edge Browser is not compatible with some websites and they will require opening in IE V11
• Acrobat Reader (https://get.adobe.com/reader/): To open pdf documents
• Adobe Digital Editions (http://www.adobe.com/au/solutions/ebook/digital-editions/download.html): Software program used to read ebooks
• Pearson Bookshelf (https://www.pearsonplaces.com.au/help/ctl/article/mid/6911.aspx?articleId=34): EBook reader for school textbooks
• Java Runtime Environment (https://java.com/en/download/): Enables execution of Java applications
• Adobe Shockwave Player (https://get.adobe.com/shockwave/; not required for Windows 10): Allows execution of Director applications in web browsers
• Adobe Flash Player (https://helpx.adobe.com/flash-player.html): Used to view animations and movies in web browsers
• Microsoft Office 2016 Pro Plus including Word, Excel, PowerPoint, OneNote, Publisher & OneNote plugin Learning Tools for OneNote (https://www.onenote.com/learningtools) and PowerPoint plugin Office Mix (https://mix.office.com/en-us/Home): Nb: This is available at no cost via the school’s Office365 Subscription.
  Word: Word processor
  Excel: Spreadsheet application
  Publisher: Desktop publishing application
  PowerPoint: Presentation application
  OneNote: Electronic note making and multimedia container. Learning Tools allows support for English
  Office Mix: interactivity + screen video for PowerPoint
  Outlook: email and personal information

Digital Media Players

2011-2012 Texas 4-H Study Guide - Additional Resources
DIGITAL MEDIA PLAYERS
Digital media players have evolved to provide a wide range of applications and uses. They come in a range of shapes and sizes, use different types of memory, and support a variety of file formats. In addition, digital media players interface differently with computers as well as the user. Consideration of these variables is the key in selecting the best digital media player. In this case, one size does not fit all. This guide is intended to provide you, the consumer, with information that will assist you in making the best choice.
Key Terms
• Digital Media Player – a portable consumer electronic device that is capable of storing and playing digital media. The data is typically stored on a hard drive, microdrive, or flash memory.
• Data – information that may take the form of audio, music, images, video, photos, and other types of computer files that are stored electronically in order to be recalled by a digital media player or computer.
• Flash Memory – a solid-state memory chip that stores data; having no moving parts makes it much less likely to fail. It is generally very small (about the size of a postage stamp), which makes it lightweight, and it requires very little power.
• Hard Drive – a type of data storage consisting of a collection of spinning platters and a roving head that reads data that is magnetically imprinted on the platters. Hard drives hold large amounts of data, which is useful for storing large quantities of music, video, audio, photos, files, and other data.
• Audio Format – the file format in which music or audio is available for use on the digital media player.