Non-Adaptive Adaptive Sampling on Turnstile Streams


Recommended publications


Tt-Satisfiable

CMPSCI 601: Recall From Last Time (Lecture 6)

Boolean Syntax

Boolean variables: A boolean variable represents an atomic statement that may be either true or false. There may be infinitely many of these available.

Boolean expressions:
atomic: a variable x, ⊤ ("top"), ⊥ ("bottom")
¬α, α ∧ β, α ∨ β, α → β, α ↔ β, for Boolean expressions α and β

Note that any particular expression is a finite string, and thus may use only finitely many variables.

A literal is an atomic expression or its negation, such as x or ¬x.

As you may know, the choice of operators is somewhat arbitrary as long as we have a complete set, one that suffices to simulate all boolean functions. On HW#1 we argued that a smaller set of operators is already a complete set.

CMPSCI 601: Boolean Logic: Semantics (Lecture 6)

A boolean expression has a meaning, a truth value of true or false, once we know the truth values of all the individual variables.

A truth assignment is a function A : V → {true, false}, where V is the set of all variables. An assignment A is appropriate to an expression φ if it assigns a value to all variables used in φ.

The double-turnstile symbol ⊨ (read as "models") denotes the relationship between a truth assignment and an expression. The statement "A ⊨ φ" (read as "A models φ") simply says "φ is true under A".

If A is appropriate to φ, we define when A ⊨ φ is true by induction on the structure of φ:
⊤ is true and ⊥ is false for any A,
A variable x is true iff A says that it is,
If φ = α ∧ β, A ⊨ φ iff both A ⊨ α and A ⊨ β,
If φ = α ∨ β, A ⊨ φ iff either A ⊨ α or A ⊨ β or both,
If φ = α → β, A ⊨ φ unless A ⊨ α and A ⊭ β,
If φ = α ↔ β, A ⊨ φ iff α and β are both true or both false.

Definition 6.1 A boolean expression φ is satisfiable iff there exists A such that A ⊨ φ.
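The inductive definition of "models" in this excerpt can be sketched as a small evaluator. This is only an illustration under stated assumptions: the tuple encoding of expressions and the names `variables`, `models`, and `satisfiable` are hypothetical and do not come from the lecture notes, and satisfiability is checked by brute force over all assignments.

```python
from itertools import product

# Hypothetical encoding (not from the lecture notes): an expression is a tuple
#   ("var", name), ("top",), ("bot",), ("not", e),
#   ("and", a, b), ("or", a, b), ("implies", a, b), ("iff", a, b)

def variables(expr):
    """Collect the variable names used in an expression."""
    if expr[0] == "var":
        return {expr[1]}
    # Every other node is (tag, subexpr, ...); "top" and "bot" have no children.
    return set().union(*(variables(sub) for sub in expr[1:]))

def models(assignment, expr):
    """Return True iff the truth assignment (a dict) makes expr true."""
    tag = expr[0]
    if tag == "top":
        return True
    if tag == "bot":
        return False
    if tag == "var":
        return assignment[expr[1]]
    if tag == "not":
        return not models(assignment, expr[1])
    left, right = (models(assignment, sub) for sub in expr[1:])
    if tag == "and":
        return left and right
    if tag == "or":
        return left or right
    if tag == "implies":
        return (not left) or right
    if tag == "iff":
        return left == right
    raise ValueError(f"unknown connective: {tag}")

def satisfiable(expr):
    """Brute force: try every assignment to the variables used in expr."""
    names = sorted(variables(expr))
    return any(
        models(dict(zip(names, values)), expr)
        for values in product([False, True], repeat=len(names))
    )

p, q = ("var", "p"), ("var", "q")
print(satisfiable(("and", p, ("not", q))))   # some assignment works
print(satisfiable(("and", p, ("not", p))))   # no assignment works
```

The brute-force search visits 2^n assignments for n variables, so it is only practical for small expressions.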

Chapter 9: Initial Theorems About Axiom System

Initial Theorems about Axiom System AS1

1. Theorems in Axiom Systems versus Theorems about Axiom Systems
2. Proofs about Axiom Systems
3. Initial Examples of Proofs in the Metalanguage about AS1
4. The Deduction Theorem
5. Using Mathematical Induction to do Proofs about Derivations
6. Setting up the Proof of the Deduction Theorem
7. Informal Proof of the Deduction Theorem
8. The Lemmas Supporting the Deduction Theorem
9. Rules R1 and R2 are Required for any DT-MP-Logic
10. The Converse of the Deduction Theorem and Modus Ponens
11. Some General Theorems About
12. Further Theorems About AS1
13. Appendix: Summary of Theorems about AS1

Chapter 13: Formal Proofs and Quantifiers

§ 13.1 Universal quantifier rules

Universal Elimination (∀ Elim)
    ∀x S(x)
    ▷ S(c)
Here x stands for any variable, c stands for any individual constant, and S(c) stands for the result of replacing all free occurrences of x in S(x) with c.

Example
    1. ∀x ∃y (Adjoins(x, y) ∧ SameSize(y, x))
    2. ∃y (Adjoins(b, y) ∧ SameSize(y, b))        ∀ Elim: 1

General Conditional Proof (∀ Intro)
    [c] P(c)
        Q(c)
    ▷ ∀x (P(x) → Q(x))
Where c does not occur outside the subproof where it is introduced.

There is an important bit of new notation here: [c], the "boxed constant" at the beginning of the assumption line. This says, in effect, "let's call it c." To enter the boxed constant in Fitch, start a new subproof and click on the downward pointing triangle ▾. This will open a menu that lets you choose the constant you wish to use as a name for an arbitrary object. Your subproof will typically end with some sentence containing this constant. In giving the justification for the universal generalization, we cite the entire subproof (as we do in the case of → Intro). Notice that although c may not occur outside the subproof where it is introduced, it may occur again inside a subproof within the original subproof.

Universal Introduction (∀ Intro)
    [c]
        P(c)
    ▷ ∀x P(x)
Where c does not occur outside the subproof where it is introduced.

Remember, any time you set up a subproof for ∀ Intro, you must choose a "boxed constant" on the assumption line of the subproof, even if there is no sentence on the assumption line.

Logic and Proof Release 3.18.4

Logic and Proof, Release 3.18.4
Jeremy Avigad, Robert Y. Lewis, and Floris van Doorn
Sep 10, 2021

Contents

1 Introduction
1.1 Mathematical Proof
1.2 Symbolic Logic
1.3 Interactive Theorem Proving
1.4 The Semantic Point of View
1.5 Goals Summarized
1.6 About this Textbook

2 Propositional Logic
2.1 A Puzzle
2.2 A Solution
2.3 Rules of Inference
2.4 The Language of Propositional Logic
2.5 Exercises

3 Natural Deduction for Propositional Logic
3.1 Derivations in Natural Deduction
3.2 Examples
3.3 Forward and Backward Reasoning
3.4 Reasoning by Cases
3.5 Some Logical Identities
3.6 Exercises

4 Propositional Logic in Lean
4.1 Expressions for Propositions and Proofs
4.2 More commands

1 Symbols (2286)

USV    Symbol   Macro(s)                     Description
0009            \textHT                      <control>
000A            \textLF                      <control>
000D            \textCR                      <control>
0022   "        \textquotedbl                QUOTATION MARK
0023   #        \texthash, \textnumbersign   NUMBER SIGN
0024   $        \textdollar                  DOLLAR SIGN
0025   %        \textpercent                 PERCENT SIGN
0026   &        \textampersand               AMPERSAND
0027   '        \textquotesingle             APOSTROPHE
0028   (        \textparenleft               LEFT PARENTHESIS
0029   )        \textparenright              RIGHT PARENTHESIS
002A   *        \textasteriskcentered        ASTERISK
002B   +        \textMVPlus                  PLUS SIGN
002C   ,        \textMVComma                 COMMA
002D   -        \textMVMinus                 HYPHEN-MINUS
002E   .        \textMVPeriod                FULL STOP
002F   /        \textMVDivision              SOLIDUS
0030   0        \textMVZero                  DIGIT ZERO
0031   1        \textMVOne                   DIGIT ONE
0032   2        \textMVTwo                   DIGIT TWO
0033   3        \textMVThree                 DIGIT THREE
0034   4        \textMVFour                  DIGIT FOUR
0035   5        \textMVFive                  DIGIT FIVE
0036   6        \textMVSix                   DIGIT SIX
0037   7        \textMVSeven                 DIGIT SEVEN
0038   8        \textMVEight                 DIGIT EIGHT
0039   9        \textMVNine                  DIGIT NINE
003C   <        \textless                    LESS-THAN SIGN
003D   =        \textequals                  EQUALS SIGN
003E   >        \textgreater                 GREATER-THAN SIGN
0040   @        \textMVAt                    COMMERCIAL AT
005C   \        \textbackslash               REVERSE SOLIDUS
005E   ^        \textasciicircum             CIRCUMFLEX ACCENT
005F   _        \textunderscore              LOW LINE
0060   `        \textasciigrave              GRAVE ACCENT
0067   g        \textg                       LATIN SMALL LETTER G
007B   {        \textbraceleft               LEFT CURLY BRACKET
007C   |        \textbar                     VERTICAL LINE
007D   }        \textbraceright              RIGHT CURLY BRACKET
007E   ~        \textasciitilde              TILDE
00A0            \nobreakspace                NO-BREAK SPACE
00A1   ¡        \textexclamdown              INVERTED EXCLAMATION MARK
00A2   ¢        \textcent                    CENT SIGN
00A3   £        \textsterling                POUND SIGN
00A4   ¤        \textcurrency                CURRENCY SIGN
00A5   ¥        \textyen                     YEN SIGN
00A6

Data Stream Algorithms Lecture Notes

CS49: Data Stream Algorithms
Lecture Notes, Fall 2011
Amit Chakrabarti, Dartmouth College
Latest Update: October 14, 2014
DRAFT

Acknowledgements

These lecture notes began as rough scribe notes for a Fall 2009 offering of the course "Data Stream Algorithms" at Dartmouth College. The initial scribe notes were prepared mostly by students enrolled in the course in 2009. Subsequently, during a Fall 2011 offering of the course, I edited the notes heavily, bringing them into presentable form, with the aim being to create a resource for students and other teachers of this material.

I would like to acknowledge the initial effort by the 2009 students that got these notes started: Radhika Bhasin, Andrew Cherne, Robin Chhetri, Joe Cooley, Jon Denning, Alina Djamankulova, Ryan Kingston, Ranganath Kondapally, Adrian Kostrubiak, Konstantin Kutzkow, Aarathi Prasad, Priya Natarajan, and Zhenghui Wang.

Contents

0 Preliminaries: The Data Stream Model
0.1 The Basic Setup
0.2 The Quality of an Algorithm's Answer
0.3 Variations of the Basic Setup

1 Finding Frequent Items Deterministically
1.1 The Problem
1.2 The Misra-Gries Algorithm
1.3 Analysis of the Algorithm

2 Estimating the Number of Distinct Elements
2.1 The Problem
2.2 The Algorithm
2.3 The Quality of the Algorithm's Estimate

1. Latex and Symbolic Logic: an Introduction

Symbolic Logic and LaTeX
David W. Agler
June 21, 2013

1 Introduction

This document introduces some features of LaTeX, the special symbols you will need in Symbolic Logic (PHIL012), and some reasons why you should use LaTeX over traditional word processing programs. This document also accompanies several video tutorials on how to use LaTeX in the Symbolic Logic (PHIL012) course at Penn State.

2 LaTeX is not a word processor

LaTeX is a typesetting system that was originally designed to produce documents with special symbols. LaTeX is not a word processor, which coordinates text with styling simultaneously. Instead, the LaTeX system separates text from styling: it consists of (i) a text document (or *.tex file), which is text that does not contain any formatting, and (ii) a compiler that takes the *.tex file and turns it into a readable and professionally stylized document. In other words, producing a document with LaTeX begins with specifying what you want to say and the structure of what you want to say; this is then processed by a compiler, which styles your document. To get a clearer idea of what some of this means, let's look at a very simple LaTeX source document:

    \documentclass[12pt]{article}
    \begin{document}
    \title{Symbolic Logic and \LaTeX\ }
    \author{David W. Agler}
    \date{\today}
    \maketitle
    \section{Introduction}
    Hello Again World!
    \end{document}

The above specifies the class (or kind) of document you want to produce (an article, rather than a book or letter), commands for beginning the document, the title of the article, the author of the article, the date, a command to make the title, a section that will automatically be numbered, some content that will appear as text ("Hello World"), and finally a command to end the document.

Basics of Type Theory and Coq

Basics of type theory and Coq
Michael Shulman
January 31, 2012

Type-theoretic foundations

Set theory                          Type theory
Logic: ∧, ∨, ⇒, ¬, ∀, ∃             Types: ×, +, →, ∏, ∑
Sets: ×, +, →, ∏, ∑                 Logic: ∧, ∨, ⇒, ¬, ∀, ∃
x ∈ A is a proposition              x : A is a typing judgment

Type theory is programming

For now, think of type theory as a programming language.
• Closely related to functional programming languages like ML, Haskell, Lisp, Scheme.
• More expressive and powerful.
• Can manipulate "mathematical objects".

Typing judgments

Type theory consists of rules for manipulating judgments. The most important judgment is a typing judgment:

    x1 : A1, x2 : A2, …, xn : An ⊢ b : B

The turnstile ⊢ binds most loosely, followed by commas. This should be read as: In the context of variables x1 of type A1, x2 of type A2, …, and xn of type An, the expression b has type B.

Examples
    ⊢ 0 : N
    x : N, y : N ⊢ x + y : N
    f : R → R, x : R ⊢ f(x) : R
    f : C^∞(R, R), n : N ⊢ f^(n) : C^∞(R, R)

Type constructors

The basic rules tell us how to construct valid typing judgments, i.e. how to write programs with given input and output types. This includes:
1 How to construct new types (judgments Γ ⊢ A : Type).
2 How to construct terms of these types.
3 How to use such terms to construct terms of other types.

Example (Function types)
1 If A : Type and B : Type, then A → B : Type.

Derivations

We write these rules as follows.

    ⊢ A : Type    ⊢ B : Type
    ------------------------
        ⊢ A → B : Type

        x : A ⊢ b : B
    ---------------------
    ⊢ λx^A. b : A → B
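The typing judgments in this excerpt can be checked mechanically in a proof assistant. The sketch below uses Lean 4 rather than Coq, which is a substitution made here for illustration and not something the slides do. Each `example` binds the context as arguments and the conclusion as the declared type; `Nat` stands in for N, and the real-number examples are omitted because they would need an additional library.

```lean
-- ⊢ 0 : N
example : Nat := 0

-- x : N, y : N ⊢ x + y : N
example (x y : Nat) : Nat := x + y

-- If A : Type and B : Type, then A → B : Type.
example (A B : Type) : Type := A → B

-- From a term b that depends on x : A, form the function λx. b : A → B.
example (A B : Type) (b : A → B) : A → B := fun x => b x

-- A concrete lambda term; Lean reports that it has type Nat → Nat.
#check fun (x : Nat) => x + 1
```

If any of these declarations were ill-typed, Lean would reject the file, which is the sense in which a typing judgment "may or may not be provable".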

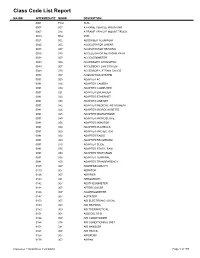

Class Code List Report

Printed on 11/5/2010 at 1:23:00PM

MAJOR INTERMEDIATE MINOR DESCRIPTION
0001 FOU 3676
0007 007 A FRAME VEHICLE MOUNTING
0007 014 A FRAME VEHICLE MOUNT TRUCK
0010 BAC 0555
0021 002 ABSORBER ALUMINUM
0035 005 ACCELERATOR LINEAR
0035 007 ACCELERATOR READING
0035 015 ACCELERATOR NETWORK PATH
0038 007 ACCELEROMETER
0049 004 ACCESSORY CRYOGENIC
0049 007 ACCESSORY LAN SYSTEM
0049 010 ACCESSORY, STRAIN GAUGE
0058 007 ACQUISITION SYSTEM
0091 003 ADAPTER AC
0091 014 ADAPTER CAMERA
0091 018 ADAPTER COMPUTER
0091 021 ADAPTER ENLARGER
0091 023 ADAPTER ETHERNET
0091 038 ADAPTER LINE SET
0091 042 ADAPTER MEDICAL INSTRUMENT
0091 044 ADAPTER MICROCASSETTE
0091 045 ADAPTER MICROPHONE
0091 049 ADAPTER MICROSCOPE
0091 056 ADAPTER MONITOR
0091 058 ADAPTER PLAYBACK
0091 060 ADAPTER PROJECTOR
0091 062 ADAPTER RADIO
0091 063 ADAPTER RECORDING
0091 070 ADAPTER SLIDE
0091 075 ADAPTER STATIC RAM
0091 090 ADAPTER TELEVISION
0091 095 ADAPTER TERMINAL
0091 100 ADAPTER TRANSPARENCY
0105 007 ADDRESSOGRAPH
0119 007 AERATOR
0126 007 AERIFIER
0140 021 AEROGRAPH
0142 007 AESTHESIOMETER
0144 007 AFTERCOOLER
0146 007 AGGREGOMETER
0147 007 AGITATOR
0150 007 AID ELECTRONIC VOCAL
0150 008 AID HEARING
0150 009 AID THERAPUTICAL
0151 004 AIDE DELTOID
0154 007 AIR CONDITIONER
0154 014 AIR CONDITIONING UNIT
0154 021 AIR HANDLER
0161 007 AIR TRACK
0168 007 AIRBRUSH
0179 007 AIRPAK
0182 007 AIRPLANE
0189 003 ALARM AUTO
0189 007 ALARM BURGLAR
0189 014 ALARM FIRE
0189 021 ALARM INTRUDER
0189 022 ALARM SYSTEM ERROR

An Introduction to Type Theory Outline

Dan Christensen
UWO, May 8, 2014

Outline:
A brief history of types
Type theory as set theory
Type theory as logic

History of Type Theory

Initial ideas due to Bertrand Russell in early 1900's, to create a foundation for mathematics that avoids Russell's paradox.

Studied by many logicians and later computer scientists, particularly Church, whose λ-calculus (1930's and 40's) is a type theory.

In 1972, Per Martin-Löf extended type theory to include dependent types. It is this form of type theory that we will focus on. One of the key features is that it unifies set theory and logic!

In 2006, Awodey and Warren, and Voevodsky, discovered that type theory has homotopical models. Understanding this interpretation will be the goal of the overall seminar, but not of this talk.

2012-2013: a special year at the IAS, which led to a book.

Last week: $7.5M Dept of Defense grant for a group led by Awodey.

Type theory as set theory: Notation

Type theory studies things called types, which can be thought of as sets. The analog of elements of a set are called terms. We write a : A to indicate that a is a term of type A. And we write A : Type to indicate that A is a type.

A judgement is a string of symbols that may or may not be provable from the rules of type theory. A judgement always contains the turnstile symbol ⊢, separating context from the conclusion.

Examples (using things we haven't defined yet):
    ⊢ 0 : N
    x : N, y : N ⊢ x + y : N
    f : R → R, x : R ⊢ f(x) : R

Type theory as set theory: more judgements

Judgements can also involve asserting that something is a type.

A Package for Logical Symbols

Rett Bull
October 10, 2009

The package logicsym supplies logical symbols for classes like Pomona College's Math 123, CS 80, and CS 81.

Known conflicts: the logicsym package redefines existing symbols \mp (minus-plus) and \Re.

1 Options and Customization

1.1 Compatibility
This version introduces a number of name changes, for consistency. Use the option oldnames to add the previous names.

1.2 Truth Constants
There are three options for truth constants: topbottom, tfletters, and tfwords. The commands \False and \True may be used in any mode. The default font for words and letters is italic, more specifically \mathit, but it may be changed by redefining \Truthconstantfont. The default is topbottom. Only one of the three options may be used.

1.3 Conjunction
The options for conjunction are wedge and ampersand. The default is wedge.

1.4 Implication
The choices for arrows are singlearrows or doublearrows. The default is doublearrows. The "if and only if" connective matches the arrow style but can be replaced with ≡ by specifying the option equivalence.

1.5 Turnstiles
There is no option for turnstiles, but their size (relative to other symbols) may be adjusted by redefining \TurnstileScale. The default is 1.0.

1.6 Crossing Out
In natural deduction inferences, we often want to show that an assumption has been discharged by crossing it out. A slash often works well for single letters, but it is too narrow for longer formulas. We provide a macro \Crossbox to make a wide slash, but unfortunately it is difficult to draw a simple diagonal across a TeX box.

Introducing the Double Turnstile

1. The lifeblood of logic is entailment. This is the familiar phenomenon of certain statements or sentences following from (or being a consequence of) other statements. Affirming some statements commits or obliges one to accept others. For example, the statement "Las Vegas is to the west of Pittsburgh." follows from "Pittsburgh is to the east of Las Vegas." One may not affirm the latter without committing oneself to accepting the former. [And, it so happens, vice versa.] This entailment is secured by virtue of what it means for one location to be 'east' (or 'west') of another. Similarly (though not equivalently) the statement "Aristotle is a logician." follows jointly from "Aristotle is a student of Plato.", "Any student of Plato is a Philosopher.", and "All Philosophers are logicians." Here, the entailment seems secured by the meanings of 'is' and 'all', as well as by the form (or order) that these sentences take. Those who study this phenomenon (that is, logicians!) often use the double turnstile (|=) to symbolize or to stand for this phenomenon. In a sense, then, we can say that the primary (if not sole) concern of logic resides with the double turnstile.

2. Syntax of |=: The double turnstile participates in expressions that we in the logic trade call sequents. The double turnstile is an infix that is placed between expressions standing for either single statements or sentences and sets of sentences or statements. By convention, we symbolize sets of statements with capital Greek letters and individual statements with lower-case Greek letters. Thus sequent expressions will typically take the following forms: Γ |= α, Φ |= ω, Π |= Ρ, or Δ,φ |= ψ.
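To make a sequent such as Γ |= α concrete, here is a minimal sketch that tests entailment by exhaustive truth tables. It assumes the standard propositional reading (every assignment that makes all of Γ true also makes α true), which the excerpt itself does not spell out. The function name `entails`, the representation of statements as Python functions over an assignment dictionary, and the sample statements are all hypothetical.

```python
from itertools import product

def entails(premises, conclusion, names):
    """Check premises |= conclusion over all assignments to `names`.

    Each statement is a function from an assignment dict to bool.
    Entailment fails as soon as one assignment makes every premise
    true while making the conclusion false.
    """
    for values in product([False, True], repeat=len(names)):
        assignment = dict(zip(names, values))
        if all(p(assignment) for p in premises) and not conclusion(assignment):
            return False
    return True

# Gamma = {p -> q, p}, alpha = q
gamma = [lambda a: (not a["p"]) or a["q"], lambda a: a["p"]]
alpha = lambda a: a["q"]

print(entails(gamma, alpha, ["p", "q"]))                      # Gamma |= alpha
print(entails([lambda a: a["p"] or a["q"]], alpha, ["p", "q"]))  # p or q does not entail q
```

This only covers propositional form. The Aristotle example in the text depends on the meanings of 'is' and 'all', so it would need a first-order treatment that this sketch does not attempt.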