The Monad.Reader Issue 6 by Bernie Pope, Dan Piponi, and Russell O’Connor

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

A Relatively Small Turing Machine Whose Behavior Is Independent of Set Theory

A Relatively Small Turing Machine Whose Behavior Is Independent of Set Theory. Adam Yedidia and Scott Aaronson, MIT. May 17, 2016. Abstract: Since the definition of the Busy Beaver function by Radó in 1962, an interesting open question has been the smallest value of n for which BB(n) is independent of ZFC set theory. Is this n approximately 10, or closer to 1,000,000, or is it even larger? In this paper, we show that it is at most 7,910 by presenting an explicit description of a 7,910-state Turing machine Z with 1 tape and a 2-symbol alphabet that cannot be proved to run forever in ZFC (even though it presumably does), assuming ZFC is consistent. The machine is based on work of Harvey Friedman on independent statements involving order-invariant graphs. In doing so, we give the first known upper bound on the highest provable Busy Beaver number in ZFC. To create Z, we develop and use a higher-level language, Laconic, which is much more convenient than direct state manipulation. We also use Laconic to design two Turing machines, G and R, that halt if and only if there are counterexamples to Goldbach’s Conjecture and the Riemann Hypothesis, respectively. 1 Introduction. 1.1 Background and Motivation. Zermelo-Fraenkel set theory with the axiom of choice, more commonly known as ZFC, is an axiomatic system invented in the twentieth century which has since been used as the foundation of most of modern mathematics. It encodes arithmetic by describing natural numbers as increasing sets of sets.
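The idea behind the machine G can be illustrated outside the Turing-machine setting. The following is a minimal Python sketch, not the paper's Laconic program: a search loop that returns only if it finds an even number that is not the sum of two primes. The function names and the optional `limit` parameter are assumptions added here so that the loop can also be run over a bounded range.

```python
from typing import Optional


def is_prime(n: int) -> bool:
    """Trial division; slow but sufficient for a sketch."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def is_goldbach_sum(n: int) -> bool:
    """True if the even number n >= 4 is a sum of two primes."""
    return any(is_prime(p) and is_prime(n - p) for p in range(2, n // 2 + 1))


def first_counterexample(limit: Optional[int] = None) -> Optional[int]:
    """Search even numbers 4, 6, 8, ... for a Goldbach counterexample.

    With limit=None the loop halts if and only if a counterexample exists,
    which mirrors the halting behaviour described for G. With a finite limit
    (a hypothetical convenience, not part of the paper) it returns None when
    no counterexample is found up to that bound.
    """
    n = 4
    while limit is None or n <= limit:
        if not is_goldbach_sum(n):
            return n
        n += 2
    return None
```

Calling `first_counterexample(limit=2000)` checks every even number up to 2000; calling it without a limit would run until a counterexample turns up, and whether that ever happens is exactly Goldbach's Conjecture.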

Programming in XML”

Growing a Language from the Inside Out. Theo D’Hondt, Software Languages Lab, Faculty of Sciences, Vrije Universiteit Brussel, http://soft.vub.ac.be. Francqui Chair 2010, Inaugural Lecture, 19 March 2010. A Simple Experiment: Converting to MP3; Compiling a big project; Compiling a small project; Application with green threads.

Recovery, Convergence and Documentation of Languages

Recovery, Convergence and Documentation of Languages by Vadim Zaytsev, September 14, 2010. VRIJE UNIVERSITEIT. Recovery, Convergence and Documentation of Languages. ACADEMIC DISSERTATION for the degree of Doctor at the Vrije Universiteit Amsterdam, by authority of the rector magnificus prof.dr. L.M. Bouter, to be defended in public before the doctoral committee of the Faculty of Sciences (faculteit der Exacte Wetenschappen) on Wednesday 27 October 2010 at 15:45 in the auditorium of the university, De Boelelaan 1105, by Vadim Valerievich Zaytsev, born in Rostov-on-Don, Russia. Supervisors: prof.dr. R. Lämmel and prof.dr. C. Verhoef. This research has been sponsored by the Dutch Organisation of Scientific Research (Nederlandse Organisatie voor Wetenschappelijk Onderzoek) via NWO 612.063.304 LPPR: Language-Parametric Program Restructuring. Acknowledgements: Working on a PhD is supposed to be an endeavour completed in seclusion, but in practice one cannot survive without the help and support from others, fruitful scientific discussions, collaborative development of tools and papers and valuable pieces of advice. My work was supervised by Prof. Dr. Ralf Lämmel and Prof. Dr. Chris Verhoef, who often believed in me more than I did and were always open to questions and ready to give expert advice. They have made my development possible. LPPR colleagues (Jan Heering, Prof. Dr. Paul Klint, Prof. Dr. Mark van den Brand) have been a rare yet useful source of new research ideas. All thesis reading committee members have dedicated a lot of attention to my work and delivered exceptionally useful feedback on the late stage of the research: Prof.

DM820 Advanced Topics in Programming Languages, Peter Schneider-Kamp: COURSE ORGANIZATION

DM820 Advanced Topics in Programming Languages. Peter Schneider-Kamp, http://imada.sdu.dk/~petersk/DM820/. COURSE ORGANIZATION (slides dated June 2009). Course Elements: 7 lectures Thursday 08-10 (Weeks 15–17, 19–22); 3 lectures Monday 16-18 (Weeks 16–18); 7 discussion sections Wednesday 08-10 (Weeks 16–22); 4 presentation sessions Monday 16-18 (Weeks 19–22); exam = oral presentation + practical project; oral presentation is internal pass/fail; practical project is external with grades. Course Goals: Learn more ways to program! To this end, you will learn to use advanced concepts in standard programming languages, to use non-standard programming languages, and to use programming in widely differing contexts. Focus of the course on broadly covering (parts of) the field. Depth added through individually assigned topics. Course Contract: I am offering you the following: 1. I will help you in picking a topic. 2. I am always willing to help you. From you I expect the following: 1. You give a good presentation of your assigned topic. 2. You have FUN programming! You and I have the right and duty to call upon the contract! Topics for Lectures 1–6: Mostly up to you! Lectures can be picked from the following topics: a) scripting languages, b) constraint programming, c) multi-paradigm programming languages, d) string/term/graph rewriting, e) domain specific languages, f) program verification, g) extensible programming, h) aspect-oriented programming, i) parser generation. Today (& next time): A little history of programming languages. Topics for Lectures 7–10: Up to me! Selection criteria: Topics must supplement your chosen topics in order to guarantee a broad overview; Topics must be fun (in some weird way). Individually Assigned Topics 1/3: Specific Languages: A.

The Cognitive Programming Paradigm - the Next Programming Structure

American Journal of Software Engineering and Applications 2013; 2(2): 54-67. Published online April 2, 2013 (http://www.sciencepublishinggroup.com/j/ajsea). doi: 10.11648/j.ajsea.20130202.15. The cognitive programming paradigm - the next programming structure. Benjamin Odei Bempong, Information and Communication Technology/Mathematics Department, Presbyterian University College, Ghana, Abetifi-Kwahu, Eastern Region. To cite this article: Benjamin Odei Bempong. The Cognitive Programming Paradigm the Next Programming Structure, American Journal of Software Engineering and Applications. Vol. 2, No. 2, 2013, pp. 54-67. doi: 10.11648/j.ajsea.20130202.15. Abstract: The development of computer programming started with the development of the switching logic, because the computer hardware is made up of millions of digital switches. The activation and deactivation of these switches are through codified instructions (the program) which trigger the switches to function. The computer programming languages have gone through a revolution, from the machine code language, through assembly mnemonics to the high level programming languages like FORTRAN, ALGOL, COBOL, LISP, BASIC, ADA and C/C++. It is a fact that these programming languages are not the exact codes that microprocessors understand and work with, because through compiler and interpreter programs, these high level programming languages that are easily understood by people are converted to machine code languages for the microprocessor to understand and do the work human knowledge has instructed it to do. The various programming languages stem from the difficulties man has in using one programming language to solve different problems on the computer.

Recovery, Convergence and Documentation of Languages Zaytsev, V.V

VU Research Portal. Recovery, Convergence and Documentation of Languages. Zaytsev, V.V. 2010. Document version: Publisher's PDF, also known as Version of record. Link to publication in VU Research Portal. Citation for published version (APA): Zaytsev, V. V. (2010). Recovery, Convergence and Documentation of Languages. General rights: Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. • Users may download and print one copy of any publication from the public portal for the purpose of private study or research. • You may not further distribute the material or use it for any profit-making activity or commercial gain. • You may freely distribute the URL identifying the publication in the public portal. Take down policy: If you believe that this document breaches copyright please contact us providing details, and we will remove access to the work immediately and investigate your claim. Download date: 25. Sep. 2021. VRIJE UNIVERSITEIT. Recovery, Convergence and Documentation of Languages. ACADEMIC DISSERTATION for the degree of Doctor at the Vrije Universiteit Amsterdam, by authority of the rector magnificus prof.dr. L.M. Bouter, to be defended in public before the doctoral committee of the Faculty of Sciences (faculteit der Exacte Wetenschappen) on Wednesday 27 October 2010 at 15:45 in the auditorium of the university, De Boelelaan 1105, by Vadim Valerievich Zaytsev, born in Rostov-on-Don, Russia. Supervisors: prof.dr.

Xcoax 2017 Lisbon: Unusable for Programming

UNUSABLE FOR PROGRAMMING. DANIEL TEMKIN, Independent, New York, USA. 2017.xCoAx.org, Lisbon: Computation, Communication, Aesthetics & X. Abstract: Esolangs are programming languages designed for non-practical purposes, often as experiments, parodies, or experiential art pieces. A common goal of esolangs is to achieve Turing Completeness: the capability of implementing algorithms of any complexity. They just go about getting to Turing Completeness with an unusual and impractical set of commands, or unexpected ways of representing code. However, there is a much smaller class of esolangs that are entirely “Unusable for Programming.” These languages explore the very boundary of what a programming language is; producing unstable programs, or for which no programs can be written at all. This is a look at such languages and how they function (or fail to). Keywords: Esolangs, Programming languages, Code art, Oulipo, Tangible Computing. 1. INTRODUCTION Esolangs (for “esoteric programming languages”) include languages built as thought experiments, jokes, parodies critical of language practices, and artefacts from imagined alternate computer histories. The esolang Unlambda, for example, is both an esolang masterpiece and typical of the genre. Written by David Madore in 1999, Unlambda gives us a version of functional programming taken to its most extreme: functions can only be applied to other functions, returning more functions, all of them unnamed. It’s a cyberlinguistic puzzle intended as a challenge and an experiment at the extremes of a certain type of language design. The following is a program that calculates the Fibonacci sequence. The code is nearly opaque, with little indication of what it’s doing or how it works.

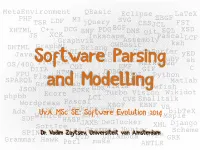

UvA MSc SE: Software Evolution 2014

UvA MSc SE: Software Evolution 2014. Dr. Vadim Zaytsev, Universiteit van Amsterdam. [Title slide: word cloud of language, format, and tool names.] What is parsing? What is modelling? Parsing? if (/^<s:.*>\/\/.*$/ := x) {…} awk '{print $1}' file.txt sed '/^$/d;G' file.txt Parsing techniques: LR • Bottom-up • Deterministic • Operations: shift, reduce • Left recursion is preferred (cited: Knuth, On the Translation of Languages from Left to Right, IC). Parsing techniques: LL • Top-down • Backtracking • Needed: stack, parsing table • Left recursion is deadly (cited: Lewis, Stearns, Syntax-Directed Transduction, JACM). Parsing techniques: … • Recursive descent: ↓ & accept() • Cocke-Younger-Kasami: ↑ & dynamic programming • Earley: ↓ & dynamic programming • Packrat: recursive descent
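The recursive descent entry in the list above (top-down, driven by an `accept()` helper) can be sketched as follows. This is a minimal illustration in Python, not code from the slides: the grammar (integers, `+`, `-`, `*`, parentheses) and all names are assumptions chosen for the example. Because left recursion is fatal for top-down parsers, the left-recursive rule `expr → expr + term` is written as a loop instead.

```python
import re


class Parser:
    """Recursive descent parser and evaluator for a tiny expression grammar.

    expr   -> term (('+' | '-') term)*
    term   -> factor ('*' factor)*
    factor -> NUMBER | '(' expr ')'
    """

    def __init__(self, text: str) -> None:
        # Simplistic tokenizer: characters outside the grammar are skipped.
        self.tokens = re.findall(r"\d+|[-+*()]", text)
        self.pos = 0

    def peek(self):
        """Return the current token, or None at end of input."""
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def accept(self, token: str) -> bool:
        """Consume the current token if it equals `token`."""
        if self.peek() == token:
            self.pos += 1
            return True
        return False

    def expr(self) -> int:
        value = self.term()
        # Iteration replaces the left-recursive rule expr -> expr '+' term.
        while True:
            if self.accept("+"):
                value += self.term()
            elif self.accept("-"):
                value -= self.term()
            else:
                return value

    def term(self) -> int:
        value = self.factor()
        while self.accept("*"):
            value *= self.factor()
        return value

    def factor(self) -> int:
        if self.accept("("):
            value = self.expr()
            if not self.accept(")"):
                raise SyntaxError("expected ')'")
            return value
        token = self.peek()
        if token is None or not token.isdigit():
            raise SyntaxError(f"expected a number, got {token!r}")
        self.pos += 1
        return int(token)


def parse(text: str) -> int:
    """Parse and evaluate `text`, rejecting trailing tokens."""
    parser = Parser(text)
    value = parser.expr()
    if parser.peek() is not None:
        raise SyntaxError(f"unexpected token {parser.peek()!r}")
    return value


print(parse("2*(3+4)-5"))  # 9
```

Each grammar rule maps to one method, which is what makes the technique easy to write by hand; the loop in `expr` also gives `-` its usual left associativity, so `parse("1-2-3")` evaluates as `(1-2)-3`.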

The Joy of Clojure by Michael Fogus and Chris Houser

Michael Fogus and Chris Houser. Foreword by Steve Yegge. Manning. The Joy of Clojure by Michael Fogus and Chris Houser, Chapter 1. Copyright 2011 Manning Publications. Brief contents: PART 1 FOUNDATIONS: 1 Clojure philosophy; 2 Drinking from the Clojure firehose; 3 Dipping our toes in the pool. PART 2 DATA TYPES: 4 On scalars; 5 Composite data types. PART 3 FUNCTIONAL PROGRAMMING: 6 Being lazy and set in your ways; 7 Functional programming. PART 4 LARGE-SCALE DESIGN: 8 Macros; 9 Combining data and code; 10 Java.next; 11 Mutation. PART 5 TANGENTIAL CONSIDERATIONS: 12 Performance; 13 Clojure changes the way you think. Clojure philosophy. This chapter covers: The Clojure way; Why a(nother) Lisp?; Functional programming; Why Clojure isn’t especially object-oriented. Learning a new language generally requires significant investment of thought and effort, and it is only fair that programmers expect each language they consider learning to justify that investment. Clojure was born out of creator Rich Hickey’s desire to avoid many of the complications, both inherent and incidental, of managing state using traditional object-oriented techniques. Thanks to a thoughtful design based in rigorous programming language research, coupled with a fervent look toward practicality, Clojure has blossomed into an important programming language playing an undeniably important role in the current state of the art in language design.
On one side of the equation, Clojure utilizes Software Transactional Memory (STM), agents, a clear distinction between identity and value types, arbitrary polymorphism, and functional programming to provide an environment conducive to making sense of state in general, and especially in the face of concurrency.

A Relatively Small Turing Machine Whose Behavior Is Independent of Set Theory

A Relatively Small Turing Machine Whose Behavior Is Independent of Set Theory. Adam Yedidia and Scott Aaronson, Massachusetts Institute of Technology. Since the definition of the busy beaver function by Rado in 1962, an interesting open question has been the smallest value of n for which BB(n) is independent of Zermelo–Fraenkel set theory with the axiom of choice (ZFC). Is this n approximately 10, or closer to 1 000 000, or is it even larger? In this paper, we show that it is at most 7910 by presenting an explicit description of a 7910-state Turing machine Z with one tape and a two-symbol alphabet that cannot be proved to run forever in ZFC (even though it presumably does), assuming ZFC is consistent. The machine is based on work of Harvey Friedman on independent statements involving order-invariant graphs. In doing so, we give the first known upper bound on the highest provable busy beaver number in ZFC. To create Z, we develop and use a higher-level language, Laconic, which is much more convenient than direct state manipulation. We also use Laconic to design two Turing machines, G and R, that halt if and only if there are counterexamples to Goldbach’s conjecture and the Riemann hypothesis, respectively. 1. Introduction. 1.1 Background and Motivation. Zermelo–Fraenkel set theory with the axiom of choice, more commonly known as ZFC, is an axiomatic system invented in the twentieth century that has since been used as the foundation of most of modern mathematics.

Logical Reconstruction of Programming Language Paradigms

Volume 4, No. 10, September-October 2013. ISSN No. 0976-5697. International Journal of Advanced Research in Computer Science. RESEARCH PAPER. Available online at www.ijarcs.info. Logical reconstruction of programming language paradigms. Davor Lauc, Faculty of Humanities and Social Sciences, University of Zagreb, Zagreb, Croatia. Abstract: The concept of programming language paradigms is one of the fundamental concepts of computing, but the present usage of the term is quite chaotic. Using the method of logical reconstruction, programming paradigms are modeled by original logical models of computation that are considered paradigms of programming language paradigms. The space of the programming languages is visualized as a prism, with edges of imperative, functional and logical paradigms corresponding to basic models of computation, and the depth axis as the degree of modularity of languages. Actual programming languages are represented as occupying some space in such a model. Finally, the model is evaluated for completeness with regard to existing programming languages. Keywords: programming language paradigms, models of computations, logical reconstruction. I. INTRODUCTION The concept of programming language paradigm is one of the most elusive concepts of computing. It was introduced by Floyd [1] when there already were a large number of programming languages and programming styles. The number of programming languages has now increased manifold, with new languages designed almost every day. … outnumbered by computational languages in the order of magnitude. Also, models of computation are much more elegant and basic than programming languages that evolved facing complex real-world problems. Hence, foundational concepts, styles and features of programming languages should be easier to discover in the models of computation than in the vast number of programming languages.

A Relatively Small Turing Machine Whose Behavior Is Independent of Set Theory

A Relatively Small Turing Machine Whose Behavior Is Independent of Set Theory. Adam Yedidia and Scott Aaronson, MIT. May 16, 2016. Abstract: Since the definition of the Busy Beaver function by Radó in 1962, an interesting open question has been the smallest value of n for which BB(n) is independent of ZFC set theory. Is this n approximately 10, or closer to 1,000,000, or is it even larger? In this paper, we show that it is at most 7,910 by presenting an explicit description of a 7,910-state Turing machine Z with 1 tape and a 2-symbol alphabet that cannot be proved to run forever in ZFC (even though it presumably does), assuming ZFC is consistent. The machine is based on work of Harvey Friedman on independent statements involving order-invariant graphs. In doing so, we give the first known upper bound on the highest provable Busy Beaver number in ZFC. To create Z, we develop and use a higher-level language, Laconic, which is much more convenient than direct state manipulation. We also use Laconic to design two Turing machines, G and R, that halt if and only if there are counterexamples to Goldbach’s Conjecture and the Riemann Hypothesis, respectively. 1 Introduction. 1.1 Background and Motivation. Zermelo-Fraenkel set theory with the axiom of choice, more commonly known as ZFC, is an axiomatic system invented in the twentieth century which has since been used as the foundation of most of modern mathematics. It encodes arithmetic by describing natural numbers as increasing sets of sets.