Classification of Soils Into Hydrologic Groups Using Machine Learning

Recommended publications

Port Silt Loam Oklahoma State Soil

PORT SILT LOAM, Oklahoma State Soil. SOIL SCIENCE SOCIETY OF AMERICA. Introduction: Many states have a designated state bird, flower, fish, tree, rock, etc. Many states also have a state soil, one that has significance or is important to the state. The Port Silt Loam is the official state soil of Oklahoma. Let’s explore how the Port Silt Loam is important to Oklahoma. History: Soils are often named after an early pioneer, town, county, community or stream in the vicinity where they are first found. The name “Port” comes from the small community of Port located in Washita County, Oklahoma. The name “silt loam” is the texture of the topsoil. This texture consists mostly of silt size particles (.05 to .002 mm), and when the moist soil is rubbed between the thumb and forefinger, it is loamy to the feel, thus the term silt loam. In 1987, recognizing the importance of soil as a resource, the Governor and Oklahoma Legislature selected Port Silt Loam as the official State Soil of Oklahoma. What is Port Silt Loam Soil? Every soil can be separated into three separate size fractions called sand, silt, and clay, which make up the soil texture. They are present in all soils in different proportions and say a lot about the character of the soil. Port Silt Loam has a silt loam texture and is usually reddish in color, varying from dark brown to dark reddish brown. The color is derived from upland soil materials weathered from reddish sandstones, siltstones, and shales of the Permian Geologic Era.

Silt Fence (1056)

Silt Fence (1056). Wisconsin Department of Natural Resources Technical Standard. I. Definition: Silt fence is a temporary sediment barrier of entrenched permeable geotextile fabric designed to intercept and slow the flow of sediment-laden sheet flow runoff from small areas of disturbed soil. II. Purpose: The purpose of this practice is to reduce slope length of the disturbed area and to intercept and retain transported sediment from disturbed areas. III. Conditions Where Practice Applies: A. This standard applies to the following applications: 1. Erosion occurs in the form of sheet and rill erosion. There is no concentration of water flowing to the barrier (channel erosion). 2. Where adjacent areas need protection from sediment-laden runoff. 3. Where effectiveness is required for one year or less. 4. Where conditions allow for silt fence to be properly entrenched and staked as outlined in the Criteria Section V. IV. Federal, State, and Local Laws: Users of this standard shall be aware of applicable federal, state, and local laws, rules, regulations, or permit requirements governing the use and placement of silt fence. This standard does not contain the text of federal, state, or local laws. V. Criteria: This section establishes the minimum standards for design, installation and performance requirements. A. Placement: 1. When installed as a stand-alone practice on a slope, silt fence shall be placed on the contour. The parallel spacing shall not exceed the maximum slope lengths for the appropriate slope as specified in Table 1. Table 1 (slope: fence spacing): < 2%: 100 feet; 2 to 5%: 75 feet; 5 to 10%: 50 feet; 10 to 33%: 25 feet; > 33%: 20 feet.
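
The spacing limits in Table 1 of the excerpt above can be read as a simple lookup. The following Python sketch is illustrative only: the function name `max_fence_spacing_ft` is hypothetical, and because the table's ranges share endpoints (5% and 10% each appear in two rows), it assumes the shorter, more conservative spacing applies at a shared boundary. The standard itself does not state how ties are resolved.

```python
def max_fence_spacing_ft(slope_percent: float) -> int:
    """Return the maximum parallel silt fence spacing, in feet, for a slope.

    Values come from Table 1 of the excerpt. Assumption (not stated in the
    source): where two rows share an endpoint, the shorter spacing is used.
    """
    if slope_percent < 0:
        raise ValueError("slope_percent must be non-negative")
    if slope_percent < 2:
        return 100  # row "< 2%"
    if slope_percent < 5:
        return 75   # row "2 to 5%"
    if slope_percent < 10:
        return 50   # row "5 to 10%" (includes exactly 5% by assumption)
    if slope_percent <= 33:
        return 25   # row "10 to 33%" (includes exactly 10% by assumption)
    return 20       # row "> 33%"


if __name__ == "__main__":
    for slope in (1.5, 4, 8, 20, 40):
        print(f"{slope}% slope: fences at most {max_fence_spacing_ft(slope)} ft apart")
```

Encoding the table as ordered threshold checks keeps each row visible in the code, so the tie-breaking assumption can be changed in one place if the governing standard says otherwise.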

Types of Landslides.Indd

Landslide Types and Processes. Landslides in the United States occur in all 50 States. The primary regions of landslide occurrence and potential are the coastal and mountainous areas of California, Oregon, and Washington, the States comprising the intermountain west, and the mountainous and hilly regions of the Eastern United States. Alaska and Hawaii also experience all types of landslides. Landslides in the United States cause approximately $3.5 billion (year 2001 dollars) in damage, and kill between 25 and 50 people annually. Casualties in the United States are primarily caused by rockfalls, rock slides, and debris flows. Worldwide, landslides occur and cause thousands of casualties and billions in monetary losses annually. The information in this publication provides an introductory primer on understanding basic scientific facts about landslides: the different types of landslides, how they are initiated, and some basic information about how they can begin to be managed as a hazard. TYPES OF LANDSLIDES: The term “landslide” describes a wide variety of processes that result in the downward and outward movement of slope-forming materials including rock, soil, artificial fill, or a combination of these. The materials may move by falling, toppling, sliding, spreading, or flowing. Figure 1 shows a graphic illustration [...] porate additional variables, such as the rate of movement and the water, air, or ice content of the landslide material. Although landslides are primarily associated with mountainous regions, they can also occur in areas of generally low relief. In low-relief areas, landslides occur as cut-and-fill failures (roadway and building excava- [Photo caption: La Conchita, coastal area of southern California.]

Silt Fences: an Economical Technique for Measuring Hillslope Soil Erosion

United States Department of Agriculture, Forest Service, Rocky Mountain Research Station. General Technical Report RMRS-GTR-94, August 2002. Silt Fences: An Economical Technique for Measuring Hillslope Soil Erosion. Peter R. Robichaud, Robert E. Brown. Robichaud, Peter R.; Brown, Robert E. 2002. Silt fences: an economical technique for measuring hillslope soil erosion. Gen. Tech. Rep. RMRS-GTR-94. Fort Collins, CO: U.S. Department of Agriculture, Forest Service, Rocky Mountain Research Station. 24 p. Abstract: Measuring hillslope erosion has historically been a costly, time-consuming practice. An easy to install low-cost technique using silt fences (geotextile fabric) and tipping bucket rain gauges to measure onsite hillslope erosion was developed and tested. Equipment requirements, installation procedures, statistical design, and analysis methods for measuring hillslope erosion are discussed. The use of silt fences is versatile; various plot sizes can be used to measure hillslope erosion in different settings and to determine effectiveness of various treatments or practices. Silt fences are installed by making a sediment trap facing upslope such that runoff cannot go around the ends of the silt fence. The silt fence is folded to form a pocket for the sediment to settle on and reduce the possibility of sediment undermining the silt fence. Cleaning out and weighing the accumulated sediment in the field can be accomplished with a portable hanging or platform scale at various time intervals depending on the necessary degree of detail in the measurement of erosion (that is, after every storm, quarterly, or seasonally). Silt fences combined with a tipping bucket rain gauge provide an easy, low-cost method to quantify precipitation/hillslope erosion relationships.

Summarization and Comparison of Engineering Properties of Loess in the United States

Summarization and Comparison of Engineering Properties of Loess in the United States. J. B. SHEELER, Associate Professor of Civil Engineering, Iowa State University. Large deposits of loess are found in many parts of the United States, but published values of the engineering properties of loess are relatively scarce. The data in this paper were gathered to indicate similarities and compare the properties of loess from one area with another. Loess is composed primarily of rather loosely arranged angular grains of sand, silt, and clay. Silt is usually the dominant size. Calcite is also generally present in amounts ranging from zero to more than 10 percent of the total soil. The aeolian hypothesis of loess deposition is compatible with the physical characteristics of undisturbed loess masses. This hypothesis states that fine-grained material was transported, sorted, and redeposited by wind action and thus became loess. During deposition, moisture and clay minerals are believed responsible for cementing the coarser grains together to form a loose structure. The loess is therefore subject to loss of shear strength due to water softening the clay bonds and to severe consolidation caused by a combination of loading and moisture. Loess is usually thought of as an aeolian material that was deposited thousands of years ago and has remained in place since the time of deposition. Loess that has been eroded and redeposited is often referred to as redeposited loess, reworked loess or more simply as a silt deposit. This implies that the word loess indicates an aeolian soil, undisturbed since deposition. Certain engineering properties of loess, such as shear strength, are quite drastically changed by erosion and redeposition.

Step 2-Soil Mechanics

Step 2 – Soil Mechanics. Introduction: Webster defines the term mechanics as a branch of physical science that deals with energy and forces and their effect on bodies. Soil mechanics is the branch of mechanics that deals with the action of forces on soil masses. The soil that occurs at or near the surface of the earth is one of the most widely encountered materials in civil, structural and architectural engineering. Soil ranks high in degree of importance when compared to the numerous other materials (e.g., steel, concrete, masonry) used in engineering. Soil is a construction material used in many structures, such as retaining walls, dams, and levees. Soil is also a foundation material upon which structures rest. All structures, regardless of the material from which they are constructed, ultimately rest upon soil or rock. Hence, the load capacity and settlement behavior of foundations depend on the character of the underlying soils, and on their action under the stress imposed by the foundation. Based on this, it is appropriate to consider soil as a structural material, but it differs from other structural materials in several important aspects. Steel is a manufactured material whose physical and chemical properties can be very accurately controlled during the manufacturing process. Soil is a natural material, which occurs in infinite variety and whose engineering properties can vary widely from place to place, even within the confines of a single construction project. Geotechnical engineering practice is devoted to the location of various soils encountered on a project, the determination of their engineering properties, correlating those properties to the project requirements, and the selection of the best available soils for use with the various structural elements of the project.

Undrained Pore Pressure Development on Cohesive Soil in Triaxial Cyclic Loading

Applied Sciences, Article. Undrained Pore Pressure Development on Cohesive Soil in Triaxial Cyclic Loading. Andrzej Głuchowski 1,*, Emil Soból 2, Alojzy Szymański 2 and Wojciech Sas 1. 1 Water Centre-Laboratory, Faculty of Civil and Environmental Engineering, Warsaw University of Life Sciences-SGGW, 02787 Warsaw, Poland. 2 Department of Geotechnical Engineering, Faculty of Civil and Environmental Engineering, Warsaw University of Life Sciences-SGGW, 02787 Warsaw, Poland. * Correspondence: [email protected]; Tel.: +48-225-935-405. Received: 5 July 2019; Accepted: 5 September 2019; Published: 12 September 2019. Abstract: Cohesive soils subjected to cyclic loading in undrained conditions respond with pore pressure generation and plastic strain accumulation. The article focuses on the pore pressure development of soils tested in isotropic and anisotropic consolidation conditions. Due to the consolidation differences, soil response to cyclic loading is also different. Analysis of the cyclic triaxial test results in terms of pore pressure development produces some indication of the relevant mechanisms at the particulate level. Test results show that the greater susceptibility of cohesive soil to accumulate plastic strain during cyclic loading is connected with the pore pressure generation pattern. The value of excess pore pressure required for soil sample failure differs as a consequence of different consolidation pressure and anisotropic stress state. Effective stresses and pore pressures are the main factors that govern the soil behavior in undrained conditions. Therefore, the pore pressure generated in the first few cycles plays a key role in the accumulation of plastic strains and constitutes the major amount of excess pore water pressure. Soil samples consolidated in the anisotropic and isotropic stress states respond differently to cyclic loading.

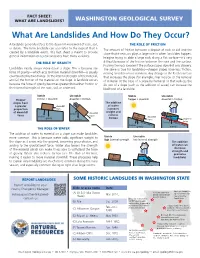

What Are Landslides and How Do They Occur?

FACT SHEET: WHAT ARE LANDSLIDES? WASHINGTON GEOLOGICAL SURVEY. What Are Landslides And How Do They Occur? [Figure: a stable slope (friction > downhill force) and an unstable slope (downhill force > friction), with gravity, friction, and downhill force labeled.] A landslide generally refers to the downhill movement of rock, soil, or debris. The term landslide can also refer to the deposit that is created by a landslide event. This fact sheet is meant to provide general information only; real landslides have many variables. THE ROLE OF GRAVITY: Landslides nearly always move down a slope. This is because the force of gravity, which acts to move material downhill, is usually counteracted by two things: (1) the internal strength of the material, and (2) the friction of the material on the slope. A landslide occurs because the force of gravity becomes greater than either friction or the internal strength of the rock, soil, or sediment. THE ROLE OF FRICTION: The amount of friction between a deposit of rock or soil and the slope that it rests on plays a large role in when landslides happen. Imagine trying to slide a large rock along a flat surface: it's very difficult because of the friction between the rock and the surface. Pushing the rock is easier if the surface slopes downhill or is slippery. The same is true for landslides: steeper slopes have less friction, making landslides more common. Any change to the Earth's surface that increases the slope (for example, river incision or the removal of material at the base of a slope by humans) or that reduces the friction of a slope (such as the addition of water) can increase the
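
The stable versus unstable comparison in the fact sheet excerpt can be illustrated with the textbook model of a rigid block resting on an inclined plane. This Python sketch is a simplification under stated assumptions, not the Washington Geological Survey's method: it assumes dry Coulomb friction with a single coefficient `mu`, ignores the internal strength of the material, cohesion, and pore water pressure, and all function names are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def downhill_force(mass_kg: float, slope_deg: float) -> float:
    """Component of the block's weight acting down the slope, in newtons."""
    return mass_kg * G * math.sin(math.radians(slope_deg))


def max_friction_force(mass_kg: float, slope_deg: float, mu: float) -> float:
    """Largest friction force the slope can supply, in newtons.

    Assumes Coulomb friction: mu times the normal force. The normal force
    shrinks as the slope steepens, so available friction shrinks too.
    """
    return mu * mass_kg * G * math.cos(math.radians(slope_deg))


def is_stable(slope_deg: float, mu: float) -> bool:
    """True when available friction is at least the downhill force.

    Mass cancels out, so this is equivalent to tan(slope) <= mu.
    """
    return max_friction_force(1.0, slope_deg, mu) >= downhill_force(1.0, slope_deg)


if __name__ == "__main__":
    print(is_stable(20, 0.6))  # gentle slope, high friction
    print(is_stable(40, 0.6))  # same friction, steeper slope
    print(is_stable(20, 0.3))  # same gentle slope, reduced friction
```

In this toy model, both changes named in the excerpt push a slope toward failure: steepening raises the downhill force while lowering the available friction, and a smaller `mu` (for instance, a hypothetical stand-in for added water) lowers the friction directly.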

Effect of Environmental Factors on Pore Water Pressure

Examensarbete vid Institutionen för geovetenskaper / Degree Project at the Department of Earth Sciences, ISSN 1650-6553, Nr 416. Effect of Environmental Factors on Pore Water Pressure in River Bank Sediments, Sollefteå, Sweden (Påverkan av miljöfaktorer på porvattentryck i flodbanksediment, Sollefteå, Sverige). Hanna Fritzson. Copyright © Hanna Fritzson. Published at Department of Earth Sciences, Uppsala University (www.geo.uu.se), Uppsala, 2017. Abstract: Pore water pressure in a silt slope in Sollefteå, Sweden, was measured from 2009 to 2016. The results from 2009 to 2012 were presented and evaluated in a publication by Westerberg et al. (2014) and this report is an extension of that project. In a silt slope the pore water pressures are generally negative, contributing to the stability of the slope. In this report the pore water pressure variations are analyzed using basic statistics, and a connection between the pore water pressure variations, the geology and parameters such as temperature, precipitation and soil moisture is discussed. The soils in the slope at Nipuddsvägen consist of sandy silt, silt, clayey silt and silty clay. The main findings were that at 2, 4 and 6 m depth there are significant increases and decreases in the pore water pressure that can be linked with the changing of the seasons; for example, there is a significant increase in the spring when the ground frost melts.

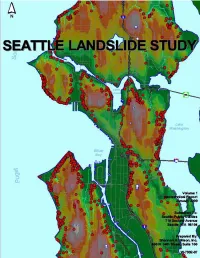

Landslide Study

Department of Planning and Development Seattle Landslide Study TABLE OF CONTENTS VOLUME 1. GEOTECHNICAL REPORT EXECUTIVE SUMMARY PREFACE 1.0 INTRODUCTION 1.1 Purpose 1.2 Scope of Services 1.3 Report Organization 1.4 Authorization 1.5 Limitations PART 1. LANDSLIDE INVENTORY AND ANALYSES 2.0 GEOLOGIC CONDITIONS 2.1 Topography 2.2 Stratigraphy 2.2.1 Tertiary Bedrock 2.2.2 Pre-Vashon Deposits 2.2.3 Vashon Glacial Deposits 2.2.4 Holocene Deposits 2.3 Groundwater and Wet Weather 3.0 METHODOLOGY 3.1 Data Sources 3.2 Data Description 3.2.1 Landslide Identification 3.2.2 Landslide Characteristics 3.2.3 Stratigraphy (Geology) 3.2.4 Landslide Trigger Mechanisms 3.2.5 Roads and Public Utility Impact 3.2.6 Damage and Repair (Mitigation) 3.3 Data Processing 4.0 LANDSLIDES 4.1 Landslide Types 4.1.1 High Bluff Peeloff 4.1.2 Groundwater Blowout 4.1.3 Deep-Seated Landslides 4.1.4 Shallow Colluvial (Skin Slide) 4.2 Timing of Landslides 4.3 Landslide Areas 4.4 Causes of Landslides 4.5 Potential Slide and Steep Slope Areas PART 2. GEOTECHNICAL EVALUATIONS 5.0 PURPOSE AND SCOPE 5.1 Purpose of Geotechnical Evaluations 5.2 Scope of Geotechnical Evaluations 6.0 TYPICAL IMPROVEMENTS RELATED TO LANDSLIDE TYPE 6.1 Geologic Conditions that Contribute to Landsliding and Instability 6.2 Typical Approaches to Improve Stability 6.3 High Bluff Peeloff Landslides 6.4 Groundwater Blowout Landslides 6.5 Deep-Seated Landslides 6.6 Shallow Colluvial Landslides 7.0 DETAILS REGARDING IMPROVEMENTS 7.1 Surface Water Improvements 7.1.1 Tightlines 7.1.2 Surface Water Systems - Maintenance -

Liquefaction Potential of Silty Soils D. Erteif, MH Maher

Transactions on the Built Environment vol 14, © 1995 WIT Press, www.witpress.com, ISSN 1743-3509. Liquefaction potential of silty soils. D. Erteif (a), M.H. Maher (b). (a) Lockwood-Singh and Associates, 1944 Cotner Avenue; (b) Department of Civil and Environmental Engineering, Rutgers University, Piscataway NJ 08855-0909, USA. Abstract: Cyclic stress controlled tests are performed to investigate the degree of liquefaction of sand containing cohesionless and low plasticity fines such as those found in tailing materials. The experimental parameters varied consist of sand void ratio, silt content, and plasticity of silt. The failure is defined as 5% and 10% peak to peak axial strain, since the pore pressure does not fully develop and stops building up when it reaches a value equal to 90-95% of the initial confining stress. 1 Introduction: Liquefaction of soils is a complex problem on which a great deal of experimental and numerical research has been done (NRC 8). Much of the research done involved liquefaction of sands. However, soils containing some fines are frequently encountered rather than clean sands, especially in alluvial or reclaimed deposits where soil liquefaction becomes extremely important for evaluating the stability of the ground during earthquakes. The phenomenon of liquefaction is considered to be the main cause of slope failures in saturated deposits of fine silty sands. Many natural and artificial soil deposits contain silty layers, and the permeability of the soil depends on the silt content. For example, the Mid-Chiba Earthquake shook the area of Tokyo Bay in 1980 with a magnitude of 6.1. Records of time change of pore pressures indicated that there were differences in the rate of pore pressure dissipation in different layers which contained different amounts of fines (Ishihara).

Infiltration Through Disturbed Urban Soils

Infiltration Through Disturbed Urban Soils. Robert Pitt and Janice Lantrip. Prior research by Pitt (1987) examined runoff losses from paved and roofed surfaces in urban areas and showed significant losses at these surfaces during the small and moderate sized events of most interest for water quality evaluations. However, Pitt and Durrans (1995) also examined runoff and pavement seepage on highway pavements and found that very little surface runoff entered typical highway pavement. During earlier research, it was also found that disturbed urban soils do not behave as indicated by stormwater models. Early unpublished double-ring infiltration tests conducted by the Wisconsin Department of Natural Resources (DNR) in Oconomowoc, Wisconsin (shown in Table …), indicated highly variable infiltration rates for soils that were generally sandy (NRCS A and B hydrologic group soils) and dry. The median initial rate was about 75 mm/h (3 in/h), but ranged from 0 to 600 mm/h (0 to 25 in/h). The final rates also had a median value of about 75 mm/h (3 in/h) after at least two hours of testing, but ranged from 0 to 400 mm/h (0 to 15 in/h). Many infiltration rates actually increased with time during these tests. In about one third of the cases, the observed infiltration rates remained very close to zero, even for these sandy soils. Areas that experienced substantial disturbances or traffic (such as school playing fields), and siltation (such as in some grass swales) had the lowest infiltration rates. It was hoped that more detailed testing could explain some of the large variations observed.