Computer Graphics & Image Processing


Recommended publications

ARTG-2306-CRN-11966-Graphic Design I Computer Graphics

Course Information Graphic Design 1: Computer Graphics ARTG 2306, CRN 11966, Section 001 FALL 2019 Class Hours: 1:30 pm - 4:20 pm, MW, Room LART 411 Instructor Contact Information Instructor: Nabil Gonzalez E-mail: [email protected] Office: Office Hours: Instructor Information Nabil Gonzalez is your instructor for this course. She holds an Associate of Arts degree from El Paso Community College, a double BFA degree in Graphic Design and Printmaking from the University of Texas at El Paso and an MFA degree in Printmaking from the Rhode Island School of Design. As a studio artist, Nabil’s work has focused on social and political views affecting the borderland as well as the exploration of identity, repetition and erasure. Her work has been shown throughout the United States, Mexico, Colombia and China. Her artist books and prints are included in museum collections in the United States. Course Description Graphic Design 1: Computer Graphics is an introduction to graphics, illustration, and page layout software on Macintosh computers. Students scan, generate, import, process, and combine images and text in black and white and in color. Industry standard desktop publishing software and imaging programs are used. The essential applications taught in this course are: Adobe Illustrator, Adobe Photoshop and Adobe InDesign. Course Prerequisite Information Course prerequisites include ARTF 1301, ARTF 1302, and ARTF 1304 each with a grade of “C” or better. Students are required to have a foundational understanding of the elements of design, the principles of composition, style, and content. Additionally, students must have developed fundamental drawing skills. These skills and knowledge sets are provided through the Department of Art’s Foundational Courses.

Statement by the Association of Moving Image Archivists (AMIA) to

Statement by the Association of Moving Image Archivists (AMIA) to the National Film Preservation Board, in reference to the National Film Preservation Act of 1992, submitted by Dr. Jan-Christopher Horak, President. The Association of Moving Image Archivists was founded in November 1991 in New York by representatives of over eighty American and Canadian film and television archives. Previously grouped loosely together in an ad hoc organization, Film Archives Advisory Committee/Television Archives Advisory Committee (FAAC/TAAC), it was felt that the field had matured sufficiently to create a national organization to pursue the interests of its constituents. According to the recently drafted by-laws of the Association, AMIA is a non-profit corporation, chartered under the laws of California, to provide a means for cooperation among individuals concerned with the collection, preservation, exhibition and use of moving image materials, whether chemical or electronic. The objectives of the Association are: a.) To provide a regular means of exchanging information, ideas, and assistance in moving image preservation. b.) To take responsible positions on archival matters affecting moving images. c.) To encourage public awareness of and interest in the preservation and use of film and video as an important educational, historical, and cultural resource. d.) To promote moving image archival activities, especially preservation, through such means as meetings, workshops, publications, and direct assistance. e.) To promote professional standards and practices for moving image archival materials. f.) To stimulate and facilitate research on archival matters affecting moving images. Given these objectives, the Association applauds the efforts of the National Film Preservation Board, Library of Congress, to hold public hearings on the current state of film preservation in the United States, as a necessary step in implementing the National Film Preservation Act of 1992.

4.3 Discovering Fractal Geometry in CAAD

4.3 Discovering Fractal Geometry in CAAD Francisco Garcia, Angel Fernandez*, Javier Barrallo* Facultad de Informatica. Universidad de Deusto. Bilbao. SPAIN * E.T.S. de Arquitectura. Universidad del Pais Vasco. San Sebastian. SPAIN Fractal geometry provides a powerful tool to explore the world of non-integer dimensions. Very short, easily comprehensible programs can generate an extensive range of shapes and colors that can help us to understand the world we live in. These shapes are especially interesting in the simulation of plants, mountains, clouds and any kind of landscape, from deserts to rain-forests. Environment design, whether random or conditioned, is one of the most important contributions of fractal geometry to CAAD. On a small scale, the design of fractal textures makes possible the simulation, in a very concise way, of wood, vegetation, water, minerals and a long list of materials very useful in photorealistic modeling. Introduction Fractal geometry is today one of the most fertile areas of investigation. Practically all the branches of scientific knowledge, such as biology, mathematics, geology, chemistry, engineering and medicine, have applied fractals to simulate and explain behaviors that are difficult to understand through traditional methods. In the world of computer aided design, too, fractal sets have made a strong appearance, with numerous software applications using design tools based on fractal techniques. These techniques basically allow the effective and realistic reproduction of any kind of forms and textures that appear in nature: trees and plants, rocks and minerals, clouds, water, etc. For modern computer graphics, access to these techniques, combined with ray tracing, makes it possible to create incredible landscapes and effects.
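The excerpt's remark that very short programs can generate a wide range of fractal shapes can be illustrated with a minimal sketch. The Mandelbrot escape-time method used here is a choice made for illustration only, not a technique named in the excerpt, and the function names, viewport bounds, and character ramp are all hypothetical.

```python
def escape_time(c: complex, max_iter: int = 30) -> int:
    """Count iterations of z -> z*z + c before |z| exceeds 2 (capped at max_iter)."""
    z = 0j
    for n in range(max_iter):
        if abs(z) > 2.0:
            return n
        z = z * z + c
    return max_iter


def render(width: int = 60, height: int = 24, max_iter: int = 30) -> list[str]:
    """Render the region [-2, 1] x [-1.2, 1.2] of the complex plane as text rows."""
    ramp = " .:-=+*#%@"  # illustrative shading ramp, sparse to dense
    rows = []
    for row in range(height):
        y = -1.2 + 2.4 * row / (height - 1)
        line = ""
        for col in range(width):
            x = -2.0 + 3.0 * col / (width - 1)
            n = escape_time(complex(x, y), max_iter)
            # Map the iteration count onto the shading ramp.
            line += ramp[n * (len(ramp) - 1) // max_iter]
        rows.append(line)
    return rows


if __name__ == "__main__":
    print("\n".join(render()))
```

Points that never escape are drawn with the densest character, so the familiar cardioid outline appears even at this coarse text resolution.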

The Uses of Animation 1

1 The Uses of Animation ANIMATION Animation is the process of making the illusion of motion and change by means of the rapid display of a sequence of static images that minimally differ from each other. The illusion, as in motion pictures in general, is thought to rely on the phi phenomenon. Animators are artists who specialize in the creation of animation. Animation can be recorded on either analogue media, such as a flip book, motion picture film, or video tape, or digital media, including formats such as animated GIF, Flash animation and digital video. To display animation, a digital camera, computer, or projector is used, along with newer technologies as they are produced. Animation creation methods include the traditional animation creation method and those involving stop motion animation of two and three-dimensional objects, paper cutouts, puppets and clay figures. Images are displayed in rapid succession, usually at 24, 25, 30, or 60 frames per second. THE MOST COMMON USES OF ANIMATION Cartoons The most common use of animation, and perhaps the origin of it, is cartoons. Cartoons appear all the time on television and in the cinema and can be used for entertainment, advertising, presentations and many more applications that are limited only by the imagination of the designer. The most important factors in making cartoons on a computer are reusability and flexibility. The system that will actually do the animation needs to be such that all the actions that are going to be performed can be repeated easily, without much fuss on the part of the animator.

Volume Rendering

Volume Rendering 1.1. Introduction Rapid advances in hardware have been transforming revolutionary approaches in computer graphics into reality. One typical example is the shift to raster graphics that took place in the seventies, when hardware innovations enabled the transition from vector graphics to raster graphics. Another example with similar potential is currently taking shape in the field of volume graphics. This trend is rooted in the extensive research and development effort in scientific visualization in general and in volume visualization in particular. Visualization is the use of computer-supported, interactive, visual representations of data to amplify cognition. Scientific visualization is the visualization of physically based data. Volume visualization is a method of extracting meaningful information from volumetric datasets through the use of interactive graphics and imaging, and is concerned with the representation, manipulation, and rendering of volumetric datasets. Its objective is to provide mechanisms for peering inside volumetric datasets and to enhance visual understanding. Traditional 3D graphics is based on surface representation. The most common form is polygon-based surfaces, for which affordable special-purpose rendering hardware has been developed in recent years. Volume graphics has the potential to greatly advance the field of 3D graphics by offering a comprehensive alternative to conventional surface representation methods. The object of this thesis is to examine the existing methods for volume visualization and to find a way of efficiently rendering scientific data with commercially available hardware, such as PCs, without requiring dedicated systems. 1.2. Volume Rendering Our display screens are composed of a two-dimensional array of pixels, each representing a unit area.

Animated Presentation of Static Infographics with Infomotion

Eurographics Conference on Visualization (EuroVis) 2021 Volume 40 (2021), Number 3 R. Borgo, G. E. Marai, and T. von Landesberger (Guest Editors) Animated Presentation of Static Infographics with InfoMotion Yun Wang1, Yi Gao1,2, Ray Huang1, Weiwei Cui1, Haidong Zhang1, and Dongmei Zhang1 1Microsoft Research Asia 2Nanjing University Figure 1: (a) An example infographic design. (b) The animated presentations for this infographic show the start time, duration, and animation effects applied to the visual elements. InfoMotion automatically generates animated presentations of static infographics. Abstract By displaying visual elements logically in temporal order, animated infographics can help readers better understand layers of information expressed in an infographic. While many techniques and tools target the quick generation of static infographics, few support animation designs. We propose InfoMotion that automatically generates animated presentations of static infographics. We first conduct a survey to explore the design space of animated infographics. Based on this survey, InfoMotion extracts graphical properties of an infographic to analyze the underlying information structures; then, animation effects are applied to the visual elements in the infographic in temporal order to present the infographic. The generated animations can be used in data videos or presentations. We demonstrate the utility of InfoMotion with two example applications, including mixed-initiative animation authoring and animation recommendation.

Information Graphics Design Challenges and Workflow Management Marco Giardina, University of Neuchâtel, Switzerland, Pablo Medi

Online Journal of Communication and Media Technologies Volume: 3 – Issue: 1 – January - 2013 Information Graphics Design Challenges and Workflow Management Marco Giardina, University of Neuchâtel, Switzerland, Pablo Medina, Sensiel Research, Switzerland Abstract Infographics, though still in its infancy in the digital world, may offer an opportunity for media companies to enhance their business processes and value creation activities. This paper describes research about the influence of infographics production and dissemination on media companies’ workflow management. Drawing on infographics examples from the New York Times print and online versions, this contribution empirically explores the evolution from static to interactive multimedia infographics, the possibilities and design challenges of this emerging journalistic field and its impact on media companies’ activities in relation to technology changes and media-use patterns. Findings highlight some explorative ideas about the required workflow and journalism activities for a successful inception of infographics into online news dissemination practices of media companies. Conclusions suggest that delivering infographics represents a not yet fully tapped opportunity for media companies, but its successful inception into news production routines requires skilled professionals in audiovisual journalism and revised business models. Keywords: newspapers, visual communication, infographics, digital media technology During this time of unprecedented change in journalism, media practitioners and scholars find themselves mired in a new debate on the storytelling potential of data visualization narratives. News organizations including the New York Times, Washington Post and The Guardian are at the fore of innovation and experimentation and regularly incorporate dynamic graphics into their journalism products (Segel, 2011).

Lab 11: the Compound Microscope

OPTI 202L - Geometrical and Instrumental Optics Lab LAB 9: THE COMPOUND MICROSCOPE The microscope is a widely used optical instrument. In its simplest form, it consists of two lenses (Fig. 9.1). An objective forms a real inverted image of an object, which is a finite distance in front of the lens. This image in turn becomes the object for the ocular, or eyepiece. The eyepiece forms the final image, which is virtual and magnified. The overall magnification is the product of the individual magnifications of the objective and the eyepiece. Figure 9.1. Images in a compound microscope. To illustrate the concept, use a 38 mm focal length lens (KPX079) as the objective, and a 50 mm focal length lens (KBX052) as the eyepiece. Set them up on the optical rail and adjust them until you see an inverted and magnified image of an illuminated object. Note the intermediate real image by inserting a piece of paper between the lenses. Q1 ● Can you demonstrate the final image by holding a piece of paper behind the eyepiece? Why or why not? The eyepiece functions as a magnifying glass, or simple magnifier. In effect, your eye looks into the eyepiece, and in turn the eyepiece looks into the optical system, be it a compound microscope, a spotting scope, telescope, or binocular. In all cases, the eyepiece doesn't view an actual object, but rather some intermediate image formed by the "front" part of the optical system. With telescopes, this intermediate image may be real or virtual. With the compound microscope, this intermediate image is real, formed by the objective lens.
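The statement that the overall magnification is the product of the objective and eyepiece magnifications can be worked through numerically. The sketch below assumes ideal thin lenses, an eyepiece used as a simple magnifier with a relaxed eye and a 250 mm near point, and an object placed 50 mm in front of the objective; none of these assumptions comes from the lab handout, which gives only the two focal lengths (38 mm and 50 mm). All function names are hypothetical.

```python
def image_distance(f_mm: float, s_obj_mm: float) -> float:
    """Solve the thin-lens equation 1/f = 1/s_o + 1/s_i for the image distance s_i."""
    if s_obj_mm == f_mm:
        raise ValueError("object at the focal point: image forms at infinity")
    return 1.0 / (1.0 / f_mm - 1.0 / s_obj_mm)


def lateral_magnification(f_mm: float, s_obj_mm: float) -> float:
    """Lateral magnification m = -s_i / s_o; a negative value means an inverted image."""
    return -image_distance(f_mm, s_obj_mm) / s_obj_mm


def magnifier_power(f_mm: float, near_point_mm: float = 250.0) -> float:
    """Angular magnification of a simple magnifier (assumed: relaxed eye, 250 mm near point)."""
    return near_point_mm / f_mm


def microscope_magnification(f_obj_mm: float, f_eye_mm: float, s_obj_mm: float) -> float:
    """Overall magnification as the product of objective and eyepiece magnifications."""
    return lateral_magnification(f_obj_mm, s_obj_mm) * magnifier_power(f_eye_mm)


if __name__ == "__main__":
    # 38 mm objective and 50 mm eyepiece from the lab; the 50 mm object distance is assumed.
    print(image_distance(38.0, 50.0))
    print(microscope_magnification(38.0, 50.0, 50.0))
```

Under these assumptions the intermediate real image forms about 158 mm behind the objective, and the negative sign of the result reflects the inverted image described in the lab text.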

Image Compression Using Discrete Cosine Transform Method

Qusay Kanaan Kadhim, International Journal of Computer Science and Mobile Computing, Vol.5 Issue.9, September- 2016, pg. 186-192 Available Online at www.ijcsmc.com A Monthly Journal of Computer Science and Information Technology ISSN 2320–088X IMPACT FACTOR: 5.258 Image Compression Using Discrete Cosine Transform Method Qusay Kanaan Kadhim Al-Yarmook University College / Computer Science Department, Iraq [email protected] ABSTRACT: The processing of digital images has gained wide importance in recent decades due to the rapid development of communication techniques and the need to find and develop methods that assist in enhancing and exploiting image information. Digital image compression has become an important field of digital image processing due to the need to exploit the available storage space as much as possible and to reduce the time required to transmit an image. The Baseline JPEG Standard technique is used to compress images with 8-bit color depth. Basically, this scheme consists of seven operations which are the sampling, the partitioning, the transform, the quantization, the entropy coding and Huffman coding. First, the sampling process is used to reduce the size of the image and the number of bits required to represent it. Next, the partitioning process is applied to the image to get (8×8) image blocks. Then, the discrete cosine transform is used to transform the image block data from the spatial domain to the frequency domain to make the data easy to process.
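The transform and quantization steps mentioned in the abstract can be sketched for a single 8×8 block. This is a minimal illustration using the standard two-dimensional DCT-II with orthonormal scaling; the uniform quantization step of 16 is an assumption made for brevity (baseline JPEG uses an 8×8 quantization table and level-shifts samples first), and the function names are hypothetical rather than taken from the paper.

```python
import math

N = 8  # block size used by the partitioning step


def dct2_8x8(block: list[list[float]]) -> list[list[float]]:
    """2-D DCT-II of an 8x8 block with orthonormal scaling (spatial -> frequency domain)."""
    def c(k: int) -> float:
        # Normalization factor: 1/sqrt(2) for the zero-frequency index, 1 otherwise.
        return 1.0 / math.sqrt(2.0) if k == 0 else 1.0

    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            total = 0.0
            for x in range(N):
                for y in range(N):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                              * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            out[u][v] = 0.25 * c(u) * c(v) * total
    return out


def quantize(coeffs: list[list[float]], step: int = 16) -> list[list[int]]:
    """Uniform quantization with a single step size (an assumption, not a JPEG table)."""
    return [[round(value / step) for value in row] for row in coeffs]


if __name__ == "__main__":
    flat = [[128.0] * N for _ in range(N)]  # a uniform gray block
    coeffs = dct2_8x8(flat)
    print(coeffs[0][0])          # all energy lands in the DC coefficient
    print(quantize(coeffs)[0])   # first row of quantized coefficients
```

A uniform block is a convenient check: every coefficient except the DC term vanishes, which is why smooth image regions compress well after quantization.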

Inviwo - a Visualization System with Usage Abstraction Levels

IEEE TRANSACTIONS ON VISUALIZATION AND COMPUTER GRAPHICS, VOL X, NO. Y, MAY 2019 Inviwo - A Visualization System with Usage Abstraction Levels Daniel Jönsson, Peter Steneteg, Erik Sundén, Rickard Englund, Sathish Kottravel, Martin Falk, Member, IEEE, Anders Ynnerman, Ingrid Hotz, and Timo Ropinski, Member, IEEE Abstract: The complexity of today’s visualization applications demands specific visualization systems tailored for the development of these applications. Frequently, such systems utilize levels of abstraction to improve the application development process, for instance by providing a data flow network editor. Unfortunately, these abstractions result in several issues, which need to be circumvented through an abstraction-centered system design. Often, a high level of abstraction hides low level details, which makes it difficult to directly access the underlying computing platform, which would be important to achieve an optimal performance. Therefore, we propose a layer structure developed for modern and sustainable visualization systems allowing developers to interact with all contained abstraction levels. We refer to these interaction capabilities as usage abstraction levels, since we target application developers with various levels of experience. We formulate the requirements for such a system, derive the desired architecture, and present how the concepts have been realized, by way of example, within the Inviwo visualization system. Furthermore, we address several specific challenges that arise during the realization of such a layered architecture, such as communication between different computing platforms, performance centered encapsulation, as well as layer-independent development by supporting cross layer documentation and debugging capabilities. Index Terms: Visualization systems, data visualization, visual analytics, data analysis, computer graphics, image processing.
1 INTRODUCTION The field of visualization is maturing, and a shift can be [...] employing different layers of abstraction.

Ground-Based Photographic Monitoring

United States Department of Agriculture, Forest Service, Pacific Northwest Research Station. General Technical Report PNW-GTR-503, May 2001. Ground-Based Photographic Monitoring. Frederick C. Hall. Author Frederick C. Hall is senior plant ecologist, U.S. Department of Agriculture, Forest Service, Pacific Northwest Region, Natural Resources, P.O. Box 3623, Portland, Oregon 97208-3623. Paper prepared in cooperation with the Pacific Northwest Region. Abstract Hall, Frederick C. 2001. Ground-based photographic monitoring. Gen. Tech. Rep. PNW-GTR-503. Portland, OR: U.S. Department of Agriculture, Forest Service, Pacific Northwest Research Station. 340 p. Land management professionals (foresters, wildlife biologists, range managers, and land managers such as ranchers and forest land owners) often have need to evaluate their management activities. Photographic monitoring is a fast, simple, and effective way to determine if changes made to an area have been successful. Ground-based photo monitoring means using photographs taken at a specific site to monitor conditions or change. It may be divided into two systems: (1) comparison photos, whereby a photograph is used to compare a known condition with field conditions to estimate some parameter of the field condition; and (2) repeat photographs, whereby several pictures are taken of the same tract of ground over time to detect change. Comparison systems deal with fuel loading, herbage utilization, and public reaction to scenery. Repeat photography is discussed in relation to landscape, remote, and site-specific systems. Critical attributes of repeat photography are (1) maps to find the sampling location and of the photo monitoring layout; (2) documentation of the monitoring system to include purpose, camera and film, weather, season, sampling technique, and equipment; and (3) precise replication of photographs.

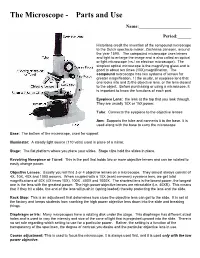

The Microscope Parts And

The Microscope Parts and Use Historians credit the invention of the compound microscope to the Dutch spectacle maker Zacharias Janssen, around the year 1590. The compound microscope uses lenses and light to enlarge the image and is also called an optical or light microscope (as opposed to an electron microscope). The simplest optical microscope is the magnifying glass, which is good to about ten times (10X) magnification. The compound microscope has two systems of lenses for greater magnification: 1) the ocular, or eyepiece lens, that one looks into and 2) the objective lens, or the lens closest to the object. Before purchasing or using a microscope, it is important to know the functions of each part. Eyepiece Lens: The lens at the top that you look through. It is usually 10X or 15X power. Tube: Connects the eyepiece to the objective lenses. Arm: Supports the tube and connects it to the base. It is used along with the base to carry the microscope. Base: The bottom of the microscope, used for support. Illuminator: A steady light source (110 volts) used in place of a mirror. Stage: The flat platform where you place your slides. Stage clips hold the slides in place. Revolving Nosepiece or Turret: This is the part that holds two or more objective lenses and can be rotated to easily change power. Objective Lenses: Usually you will find 3 or 4 objective lenses on a microscope. They almost always consist of 4X, 10X, 40X and 100X powers. When coupled with a 10X (most common) eyepiece lens, we get total magnifications of 40X (4X times 10X), 100X, 400X and 1000X.