Game Programming Gems 7

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Framework for the Development of Virtual Experiences with Haptic Interaction

FACULDADE DE ENGENHARIA DA UNIVERSIDADE DO PORTO Framework for the Development of Virtual Experiences with Haptic Interaction Telmo da Rocha Pereira Integrated Master in Informatics and Computing Engineering Supervisor: Prof. A. Augusto de Sousa Co-supervisor: Prof. Teresa Restivo Co-supervisor: Prof. António Mendes Lopes 28 June 2010 Approved in public examination by the jury: President: Professor João Correia Lopes External Examiner: Professor António Ramires Fernandes Supervisor: Professor António Augusto de Sousa 31 July 2010 Abstract This dissertation presents work carried out in the area of the development of virtual experiences with haptic interaction, specifically experiences related to the physical behaviour of objects. The work is framed by the prospect of introducing the development of virtual experiences with haptic interaction into teaching, with the stated objective of designing a set of methodologies that allow this type of experience to be created simply and quickly. The work therefore begins with a literature review of the areas involved, namely computer simulation, computer graphics, virtual reality and haptic interaction. Possible existing solutions that could be applied are then investigated and analysed. Since no solution was found that met the needs of the problem, an analysis is presented of the technologies needed to design a new solution: graphics engine, physics engine and haptic device. The first two technologies are evaluated, making it possible to choose, from a set of options, the graphics engine and physics engine that best suit the needs. -

From Doom and Gloom to BOOM and Bloom

Cloud Programming: From Doom and Gloom to BOOM and Bloom Peter Alvaro, Neil Conway Faculty Recs: Joseph M. Hellerstein, Rastislav Bodik Collaborators: Tyson Condie, Bill Marczak, Rusty Sears UC Berkeley Writing reliable, scalable distributed software remains extremely difficult Three Hardware Trends 1. Cloud Computing 2. Powerful, heterogeneous mobile clients 3. Many-Core Implications 1. Nearly every non-trivial program will be physically distributed 2. Increasingly heterogeneous clients, unpredictable cloud environments 3. Distributed programming will no longer be confined to highly-trained experts The Anatomy of a Distributed Program • In a typical distributed program, we see: – Communication, messaging, serialization – Event handling – Concurrency, coordination – Explicit fault tolerance, ad-hoc error handling • What are we looking for? – Correctness (safety, liveness, …) – Conformance to specification – High-level performance properties; behavior under network edge-cases Data-Centric Programming • Goal: Fundamentally raise the level of abstraction for distributed programming • MapReduce: data-centric batch programming – Programmers apply transformations to data sets • Can we apply a data-centric approach to distributed programming in general? Bloom and BOOM 1. Bloom: A high-level, data-centric language designed for distributed computing 2. BOOM: Berkeley Orders of Magnitude – OOM bigger systems in OOM less code – Use Bloom to build real distributed systems Agenda: Foundation • Begin with a precise formal semantics – Datalog w/ negation, state update, and -

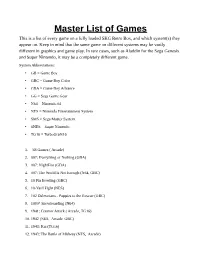

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (Arcade, TG16) 10. 1942 (NES, Arcade, GBC) 11. 1943: Kai (TG16) 12. 1943: The Battle of Midway (NES, Arcade) 13. 1944: The Loop Master (Arcade) 14. 1999: Hore, Mitakotoka! Seikimatsu (NES) 15. 19XX: The War Against Destiny (Arcade) 16. 2 on 2 Open Ice Challenge (Arcade) 17. 2010: The Graphic Action Game (Colecovision) 18. 2020 Super Baseball (Arcade, SNES) 19. 21-Emon (TG16) 20. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 21. 3 Count Bout (Arcade) 22. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 23. 3-D Tic-Tac-Toe (Atari 2600) 24. 3-D Ultra Pinball: Thrillride (GBC) 25. 3-D WorldRunner (NES) 26. 3D Asteroids (Atari 7800) 27. -

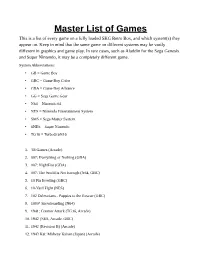

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (TG16, Arcade) 10. 1942 (NES, Arcade, GBC) 11. 1942 (Revision B) (Arcade) 12. 1943 Kai: Midway Kaisen (Japan) (Arcade) 13. 1943: Kai (TG16) 14. 1943: The Battle of Midway (NES, Arcade) 15. 1944: The Loop Master (Arcade) 16. 1999: Hore, Mitakotoka! Seikimatsu (NES) 17. 19XX: The War Against Destiny (Arcade) 18. 2 on 2 Open Ice Challenge (Arcade) 19. 2010: The Graphic Action Game (Colecovision) 20. 2020 Super Baseball (SNES, Arcade) 21. 21-Emon (TG16) 22. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 23. 3 Count Bout (Arcade) 24. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 25. 3-D Tic-Tac-Toe (Atari 2600) 26. 3-D Ultra Pinball: Thrillride (GBC) 27. -

Video Game Trader Magazine & Price Guide

Winter 2009/2010 Issue #14 4 Trading Thoughts 20 Hidden Gems Blue's Journey (Neo Geo) Video Game Flashback Dragon's Lair (NES) Hidden Gems p. 20 8 NES Archives 19 Page Turners Wrecking Crew Vintage Games 9 Retro Reviews 40 Made in Japan Coin-Op.TV Volume 2 (DVD) Twinkle Star Sprites Alf (Sega Master System) VectrexMad! AutoFire Dongle (Vectrex) 41 Video Game Programming ROM Hacking Part 2 11 Homebrew Reviews Ultimate Frogger Championship (NES) 42 Six Feet Under Phantasm (Atari 2600) Accessories Mad Bodies (Atari Jaguar) 44 Just 4 Qix Qix 46 Press Start Comic Michael Thomasson's Just 4 Qix p. 44 5 Bubsy: What Could Possibly Go Wrong? 6 Spike: Alive and Well in the land of Vectors 14 Special Book Preview: Classic Home Video Games (1985-1988) 43 Token Appreciation Altered Beast 22 Prices for popular consoles from the Atari 2600 to Sony PlayStation. Now includes 3DO & Complete Game Lists! Six Feet Under p. 42 Advertise with Video Game Trader! Multiple run discounts of up to 25% apply when you run your ad for consecutive months. Email for full details or visit our advertising page on videogametrader.com. Video Game Trader can help create your advertisement. Email us with your requirements for a price quote. NEW!! Low, Full Color, Advertising Rates! Ad Deadlines are 12 Noon Eastern: December 1, 2009 (for Issue #15 Spring 2010), February 1, 2009 (for Issue #16 Summer 2010). THIS ISSUE'S CONTRIBUTORS: Dustin Gulley, Brett Weiss, Jim Combs, Pat "Coldguy", Kevin H, Gerard Buchko, Agents J & K, Dick Ward, Michael Thomasson, John Hancock, P. Ian Nicholson, Peter G -

VMware vRealize Configuration Manager Installation Guide: vRealize Configuration Manager 5.8

VMware vRealize Configuration Manager Installation Guide vRealize Configuration Manager 5.8 This document supports the version of each product listed and supports all subsequent versions until the document is replaced by a new edition. To check for more recent editions of this document, see http://www.vmware.com/support/pubs. EN-001815-00 You can find the most up-to-date technical documentation on the VMware Web site at: http://www.vmware.com/support/ The VMware Web site also provides the latest product updates. If you have comments about this documentation, submit your feedback to: [email protected] Copyright © 2006–2015 VMware, Inc. All rights reserved. This product is protected by U.S. and international copyright and intellectual property laws. VMware products are covered by one or more patents listed at http://www.vmware.com/go/patents. VMware is a registered trademark or trademark of VMware, Inc. in the United States and/or other jurisdictions. All other marks and names mentioned herein may be trademarks of their respective companies. VMware, Inc. 3401 Hillview Ave. Palo Alto, CA 94304 www.vmware.com Contents: About This Book 5; Preparing to Install VCM 7; Typical or Advanced Installation 7; VCM Installation Configurations 8; Create VCM Domain Accounts 8; VCM Account Configuration 9; VCM Administrator Account 10; VCM User Accounts 10; Service Accounts 10; Network Authority Account 11; ECMSRSUser Account 12; SQL Server Permissions and Constructs 12; Gather Supporting Software -

By Carisa Lovell Thesis Advisor Paul Gestwicki Ball State University

Collaboration Station: A Memoir An Honors Thesis (HONR 499) By Carisa Lovell Thesis Advisor Paul Gestwicki Ball State University Muncie, Indiana May 2016 Expected Date of Graduation May 2016 Abstract What happens when an English major unintentionally stumbles upon a class about serious gaming? She learns fascinating new jargon and is exposed to an entirely new way of thinking! And, what happens when she decides to enroll in an immersive learning experience in which she joins a team whose mission it is to create a cooperative educational video game? She learns that the third floor of Robert Bell isn't such a bad place to hang out and be introduced to new things like coding, networking, and the ins and outs of serious game design. And, what happens when this English major has so much more fun than she ever thought possible? She writes about her experience, of course! Games are meant to be fun, and to convey concepts and meaning in an enjoyable way. Likewise, this memoir uses humor to capture the trials and tribulations of stepping outside of one's comfort zone and into the very real world of creating an imaginary space in which to play. Acknowledgements I would like to thank Dr. Paul Gestwicki for being adventurous enough to add me to his studio team, for being hilarious and inspirational at the same time, and for teaching me many life lessons along with course content. I will always appreciate his ability to switch seamlessly from his Professor Hat to his Mentor Hat to his Community Partner Hat to his Philosopher Hat to his Advisor Hat, as well as his ability to jump effortlessly from one soapbox to another as he endeavors to educate his students not just about computer science or game design, but about the way life is best lived. -

Talent Development: A 'Must' for a Promising Future

Talent Development: A 'MUST' FOR A PROMISING FUTURE Ms. Roberts points out that when we plan to establish P-16 systems, we must be sure that they are flexible enough to allow all students to learn what they are ready to learn when they are ready to learn it. JULIA LINK ROBERTS ARE your children breaking an "academic sweat"? This question is essential, for it addresses the need to work hard to reach a goal. Parents and educators often resort to an analogy between athletics and academics in an effort to bring children around on the matter of the dual importance of hard work and talent. No athlete becomes a champion without facing challenges. No athlete excels without expert coaching. The same holds true for all talent areas. Schools that offer varsity sports need to offer varsity-level opportunities in academic and artistic areas as well. In the movement toward an integrated P-16 system of education, we need to address a number of questions about talent development. Why is talent development important for society in general? What must be in place in a P-16 system in order to nurture the development of talent in a school and throughout a school system? And what must be in place to develop top talent? What factors erect barriers to talent development? In this article, I will meld key concepts in the develop- [...] portunities to make progress. No one is limited in what he or she can learn because of age, but rather learning experiences are planned to match the level at which the child is ready to learn. Student talents are accommodated in a variety of areas, whether in academic subjects, in the arts, or in athletics. -

Game AI in Delta3D

Proceedings of the 2007 IEEE Symposium on Computational Intelligence and Games (CIG 2007) Game AI in Delta3D Christian J. Darken (MOVES Institute/CS Dept., Naval Postgraduate School, cjdarken at nps dot edu), Bradley G. Anderegg (Alion Science and Technology Corp, banderegg at alionscience dot com), Perry L. McDowell (MOVES Institute, Naval Postgraduate School, mcdowell at nps dot edu) Abstract: Delta3D is a GNU-licensed open source game engine with an orientation towards supporting "serious games" such as those with defense and homeland security applications. AI is an important issue for serious games, since there is more pressure to "get the AI right", as opposed to providing an entertaining user experience. We describe several of our near- and longer-term AI projects oriented towards making it easier to build AI-enhanced applications in Delta3D. Keywords: Artificial Intelligence, Game Engines I. INTRODUCTION Delta3D is an open source (GNU Lesser General Public License (LGPL)) game engine created at the MOVES Institute, part of the Naval Postgraduate School in Monterey. While originally designed for military game-based simulations, Delta3D can be used as the underlying architecture for a [...] space, we came up with a four-part philosophical credo upon which we based building our game and simulation engine: 1. Maintain openness in all aspects to avoid lock-ins and increase flexibility. 2. Maintain the capability to support multiple genres, since we never know what type of application it will have to support next. 3. Build the engine in a modular fashion so that we can swap anything out as technologies mature at different rates. 4. -

Comparative Study of Game Engines

FACULDADE DE TECNOLOGIA, CIÊNCIAS E EDUCAÇÃO Undergraduate Studies BACHELOR'S DEGREE IN COMPUTER SCIENCE Comparative study of game engines Carlos Gabriel Agostinho Gonçalo Klinke Adinovam Henriques de Macedo Pimenta (Advisor) SUMMARY The evolution of engines is now moving towards more realistic and technically rich games in several fields, such as physics, sound and rendering. From the first engines to the most current engines aimed at 3D games, the goal of development remains the same: to provide the developer with a platform to create exclusive games in such a way that developers do not need to write or develop the game from scratch, but only implement the idea with the help of an engine. As a result, many developers (experienced or beginners) have found in these tools the simplest, fastest and most professional way to develop new games. However, with so many engines available, the developer needs to make a choice considering the data most relevant to the project. This work analysed data on several attributes relevant to the selection process for the 10 currently most popular engines, serving as knowledge and support for this decision. Keywords: Game engines. Game development. Digital games. ABSTRACT The evolution of the game engines is now moving to more realistic and technically rich games in various fields such as physics, sounds and rendering. From the first engines to the most current engines for 3D gaming, the goal of development remains the same: to provide the developer with a platform to create exclusive games in such a way that developers do not have to write or develop the game from scratch, but only implement the idea with the help of an engine. -

Curriculum Vitae of Tommaso Cucinotta

Curriculum Vitae: Prof. Tommaso Cucinotta Personal data Birth date and place: April 1974, Potenza (Italy) Phone: +39 (0)50 882 028 Skype Id: t.cucinotta E-mail: Home page: http://retis.santannapisa.it/~tommaso Current status Dec 2015 to date: Associate Professor at the Real-Time Systems Laboratory (ReTiS) of Scuola Superiore Sant'Anna RESEARCH TOPICS & COMPETENCIES ❑ Real-time and reliable NoSQL Database systems for cloud services ❑ Adaptive resource management and scheduling in Cloud Computing & Network Function Virtualization infrastructures ❑ Artificial Intelligence and Machine Learning to support Data Center Operations in Cloud & NFV infrastructures ❑ Platforms for real-time data streaming and analytics ❑ Quality of service control for adaptive soft real-time applications, including multimedia and IMS systems ❑ Operating Systems for real-time and embedded applications and many-core and massively distributed systems ❑ Trusted computing and confidentiality in cloud computing ❑ Smart-cards: interoperability, protocols and architectures ❑ Digital signatures, biometrics identification, multicast security Experience highlights (details below) ❑ 7 Granted and 25 Filed EU and US Patents in the areas of security, resource management and scheduling ❑ 25 International Journal Publications, including IEEE Transaction on Computers, IEEE Transaction on Industrial Informatics and ACM Transactions on Embedded and Computing Systems ❑ 65 International Conference and Workshop Peer-reviewed Publications and 13 Book Chapters ❑ 3 EU Projects scientific -

Microsoft Windows Server 2008 PKI and Deploying the nCipher Hardware Security Module

This is a joint nCipher and IdentIT authored whitepaper Microsoft Windows Server 2008 PKI and Deploying the nCipher Hardware Security Module Abstract This paper discusses the benefits that are unique to deploying the integrated solution of the Windows Server 2008 PKI and the nCipher nShield and netHSM hardware security modules (HSM). This includes the essential concepts and technologies used to deploy a PKI and the best practice security and life cycle key management features provided by nCipher HSMs. Contents: Introduction 3; PKI – A Crucial Component to Securing e-commerce 4; Microsoft Windows Server 2008 4; nCipher Hardware Security Modules 4; Best Practice Security – nCipher HSMs with Windows Server 2008 PKI 5; Overview 5