SOUNDFIELD ANALYSIS and SYNTHESIS: Recording, Reproduction and Compression

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


STd8010 Flexible MPEG Audio/Video Codec with DVD and DVB Receiver Capability

STd8010 Flexible MPEG audio/video codec with DVD and DVB receiver capability. DATA BRIEF (Rev. 1, December 2005)
DESCRIPTION (package: 35x35 mm² PBGA)
STMicroelectronics has developed an audio/video full-duplex Codec for low-cost combo devices. The STd8010, equipped with open and scalable architectures, brings audio/video encoding for DVD or HDD recording, state-of-the-art DVD player and recorder features, STB, networking and PVR. TV watch and record, time shift, DV movie transfer to disc, MPEG4 decoding and encoding, DVD audio are many functions supported by this device. The STd8010 offers manufacturers of STB, DVD recorder, PVR and convergence products a cost-effective solution with an unrivalled set of features, xxxx and flexibility.
Figure 1. STd8010 application diagram
STD8010 FEATURES
■ Host processor
– ST231 processor at 400 MHz, with TLB support for Linux and OS21 operating systems
– 3 processing cores providing up to 4.5 GOPs of processing power
■ Video encoder
– Based on the ST231 processor 400 MHz
– Real time MPEG2 MP at ML encode
– Real time DivX encode
– Pre-processing for superior image quality
– Constant and variable bit-rate encoding
– Virtualizer: ST OmniSurround™
– 4 x 2-channel PCM outputs
– IEC60958-IEC61937 digital output
■ DVD sub-picture decoder
■ High performance picture composition
– Multi plane: background, still picture, video, sub-picture, graphics, PIP, cursor
– 2D graphics accelerator
– Adaptive anti-flicker
■ Multi-format video outputs
– CVBS, Y/C, RGB and YUV outputs with 10-bit DAC at 54 MHz
– PAL/NTSC/SECAM

CS4931X/CS49330

CS4931X/CS49330 Part Matrix vs. Code Matrix for Broadcast-based Applications
1. CS4931X/CS49330: BROADCAST APPLICATION SUBFAMILY
A Broadcast Application implies that data is either an Elementary Stream (ES) or Packetized Elementary Stream (PES) format.
Data delivery is either:
• Serial Bursty (Configure INPUTA3 and send data to the CDI port while monitoring CMPREQ throttle line to make sure that Input FIFO is not over/under-flowed).
• Parallel Bursty (Configure for INPUTA7 or INPUTA8 and send data to the parallel port in Motorola or Intel format).
• “PES over I2S” (Typically, data can not be delivered via an I2S or Left-Justified input, for any standard release broadcast-based application code, with the exception of AC3N, which accepts “PES over I2S” which follows a proprietary patent-pending packing technique …
If compressed data is delivered to the DSP (via I2S or LJ) according to the IEC61937 specification, this would actually no longer be considered a “broadcast-based” application (with the exception of the AAST and AACT codes), regardless of the final product’s intended purpose.
If the data delivery is IEC61937-packed AC-3, MPEG Multichannel, MP3 or AAC, the designer should then refer to the “CS4932X/CS49330, Part Matrix vs. Code Matrix for AVR/Outboard Decoder-based Applications” as this would now be considered an AVR-based application in regards to the data delivery. This document is available from the Cirrus Logic website on the CS49300 Page.
All Broadcast-based applications follow these rules (except where noted):
Expected CLKIN = 27 MHz (Oscillator Value).
DSPCLK = Refer to AN162 (Sampling Frequency and Application Code Dependent).

German - 1 - Safety Information: the power cord/plug with wet hands, as this may cause a short circuit or electric shock

Contents: Safety information; Markings on the product; Environmental information; Features; Accessories included; Standby notifications; TV control buttons & operation; Inserting the batteries into the remote control; Connecting the power; Antenna connection; Notification; Remote control; Connections; Switching on/off; First-time installation; Media playback via USB input; Recording a programme; Timeshift recording; Instant recording; Watching recorded programmes; Recording configuration; Media browser menu; CEC and CEC RC Passthrough; E-manual

English 5 INTRODUCTION

INTRODUCTION
CONTENTS: Introduction; Installation; Standard connections; Getting started; Playing a DVD-Video disc; Playing a Video CD; Playing an audio CD; Settings; Parental control; Before requesting service
DVD-VIDEO: THE ENTERTAINMENT MEDIUM FOR THE MILLENNIUM
Video was never like this before! Perfect digital studio-quality pictures with truly 3-dimensional digital multichannel audio. Story sequences screened from your own choice of camera angle. Language barriers broken down by sound tracks in as many as eight languages, plus subtitles - if available on disc - in as many as 32. And whether you watch DVD-Video on wide- …
DIGITAL VIDEO
DVD-Video uses state-of-the-art MPEG2 data compression technology to register an entire movie on a single 5-inch disc. DVD’s variable bitrate compression, running at up to 9.8 Mbits/second, captures even the most complex pictures in their original quality. The crystal-clear digital pictures have …

28PW9615 NICAM

Preliminary information WideScreen Television 28PW9615 NICAM Product highlights • 100Hz Digital Scan with Digital Natural Motion • Digital CrystalClear with Dynamic Contrast and LTP2 • Full PAL plus (Colour Plus incl.) / WideScreen plus • Dolby Digital and MPEG Multichannel • Wireless FM surround speakers • TXT/NEXTVIEW DualScreen SOURCE • NEXTVIEW (type 3) PREPARED • 440 pages EasyText teletext (level 2.5) • Active control • Improved Graphical User Interface • Zoom (16x)

HDM500 High-Definition MPEG-2 Decoder

HDM500 High-Definition MPEG-2 Decoder. Optional SAC014 Bracket for LTC and RS422. Optional SAC015 Digital Audio Breakout Cable.
Specifications
MPEG Streams: ISO/IEC 13818 and ISO/IEC 11172 compliant. Accepts ATSC-compliant MPEG-2 Elementary, Packetized Elementary (PES), Transport and Program Streams and MPEG-1 System Streams
MPEG Video: Decodes MP@ML or MP@HL (4:2:0) up to 80 Mbps
Digital Bitstream Input: Through the PCI bus using bus mastering and burst transfer mode
Digital Video Outputs: SMPTE 292M digital video output, with embedded audio - BNC connector. Can be optionally configured as SMPTE 259M-C when 480i or 576i output formats are selected. DVI (Digital Visual Interface) output via DVI-I Digital/Analog Connector
Analog Video Outputs: Can be configured as either SMPTE 274M or 296M (RGB with sync-on-all or YPbPr with sync-on-Y), RGBHV, SMPTE 253M (RGB with sync-on-all), SMPTE 170M NTSC, ITU-R BT.470 PAL or S-Video (Y/C) via DVI-I connector
Digital Audio Output: 6 AES-3 outputs, 75 ohms, unbalanced (SMPTE 276M-1995), via DB15-HD connector; 4 may be configured as IEC 61937/SMPTE 337M-2000 digital audio outputs
Analog Audio Output: 1 pair unbalanced audio, via DB15-HD connector or MPC (CD-ROM style) header connector
LTC: 2V P-P, 1K ohm, BNC connector with optional SAC014
Machine Control: 9-Pin, RS-422A, can be configured as either "Controller" or "Device"
Genlock: Locks to the H-sync and V-sync, allows variable delay about the H-sync
On-Screen Display: Up to 24 bits per pixel or indexed color with blending
Host System Requirements: Pentium-based PC with an available PCI short card slot conforming to specification revision 2.1 running Windows NT 4.0, or greater, with a VGA display adapter supporting Microsoft® Direct Draw mode. Disk transfer rate may limit the maximum sustained MPEG data rate achievable
Power requirements: 3.3v (5 W typical), 5v (1.6 W typical), 12v (1.6 W typical), -12v (0.2 W typical)
Size: Standard PCI short card, 6.875 in.

Instruction Manual for Upgraded Unit

Instruction Manual for upgraded unit
Thank you for using our products. The upgraded unit now supports the newest decoders and sound formats below as well as conventional sound formats including Dolby Digital, DTS, and THX Surround EX. With your new AV amplifier, you can enjoy movies and music to their absolute fullest.
Contents
Features
Speaker configuration and placement/Connecting speakers: Speaker placement
Speaker Setup: 1-1. Speaker Config sub-menu; 1-4. THX Audio Setup sub-menu (new function); 1-5. LFE Level Setup sub-menu
Input Setup: 2-1. Digital Setup sub-menu; 2-4. Listening Mode Preset sub-menu; 2-5. Delay sub-menu
Listening Mode Setup: 3. Listening Mode Setup menu
Other upgraded function: Selecting audio input signal using the AUDIO button on the remote controller (new function)

The Makeover from DVD to Blu-Ray Disc

Praise for Blu-ray Disc Demystified
“BD Demystified is an essential reference for designers and developers building within Blu-ray’s unique framework and provides them with the knowledge to deliver a compelling user experience with seamlessly integrated multimedia.” — Lee Evans, Ambient Digital Media, Inc., Marina del Rey, CA
“Jim’s Demystified books are the definitive resource for anyone wishing to learn about optical media technologies.” — Bram Wessel, CTO and Co-Founder, Metabeam Corporation
“As he did with such clarity for DVD, Jim Taylor (along with his team of experts) again lights the way for both professionals and consumers, pointing out the sights, warning us of the obstacles and giving us the lay of the land on our journey to a new high-definition disc format.” — Van Ling, Blu-ray/DVD Producer, Los Angeles, CA
“Blu-ray Disc Demystified is an excellent reference for those at all levels of BD production. Everyone from novices to veterans will find useful information contained within. The authors have done a great job making difficult subjects like AACS encryption, BD-Java, and authoring for Blu-ray easy to understand.” — Jess Bowers, Director, Technical Services, 1K Studios, Burbank, CA
“Like its red-laser predecessor, Blu-ray Disc Demystified will immediately take its rightful place as the definitive reference book on producing BD. No authoring house should undertake a Blu-ray project without this book on the author’s desk. If you are new to Blu-ray, this book will save you time, money, and heartache as it guides the DVD author through the new spec and production details of producing for Blu-ray.” — Denny Breitenfeld, CTO, NetBlender, Inc., Alexandria, VA
“An all in one encyclopedia of all things BD.” — Robert Gekchyan, Lead Programmer/BD Technical Manager, Technicolor Creative Services, Burbank, CA
About the Authors
Jim Taylor is chief technologist and general manager of the Advanced Technology Group at Sonic Solutions, the leading developer of BD, DVD, and CD creation software.

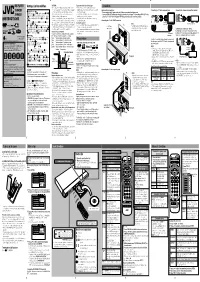

JVC XV-N422 User Guide Manual Operating Instruction

DVD PLAYER XV-N420B / XV-N422S INSTRUCTIONS
Warnings, Cautions and Others
• Do not block the ventilation openings or holes. (If the ventilation openings or holes are blocked by a newspaper or cloth, etc., the heat may not be able to get out.)
• Do not place any naked flame sources, such as lighted candles, on the apparatus.
• When discarding batteries, environmental problems must be considered and local rules or laws governing the disposal of these batteries must be followed strictly.
• Do not expose this apparatus to rain, moisture, dripping or splashing and that no objects filled with liquids, such …
CAUTION: To prevent malfunction of the player
• There are no user-serviceable parts inside. If anything goes wrong, unplug the power cord and consult your dealer.
• Do not insert any metallic objects, such as wires, hairpins, coins, etc. into the player.
• Do not block the vents. Blocking the vents may damage the player.
To clean the cabinet
• Use a soft cloth. Follow the relevant instructions on the use of chemically-coated cloths.
Connections
Before making connections:
• Do not connect the AC power cord until all other connections have been made.
• Connect VIDEO OUT of the player directly to the video input of your TV. Connecting VIDEO OUT of the player to a TV via a VCR/an integrated TV/Video system may cause a monitor problem.
[Connection diagrams: Connecting to a TV with component jacks; Connecting to a stereo audio amplifier (receiver); Connecting to a TV with SCART connector]
NOTE: The VIDEO jack, AV OUT terminal, and VIDEO …

Sydney, 10 December 1999 ( 329.6

SPARK AND CANNON, TRANSCRIPT OF PROCEEDINGS. Telephone: Adelaide (08) 8212-3699, Melbourne (03) 9670-6989, Perth (08) 9325-4577, Sydney (02) 9211-4077
PRODUCTIVITY COMMISSION DRAFT REPORT INTO THE BROADCASTING SERVICES ACT 1992
PROF R.J. SNAPE, Presiding Commissioner; MR S. SIMSON, Assistant Commissioner
TRANSCRIPT OF PROCEEDINGS AT SYDNEY ON FRIDAY, 10 DECEMBER 1999, AT 9.09 AM. Continued from 9/12/99
PROF SNAPE: Okay, welcome to this, the fifth day of the hearings in Sydney on the draft report of the Productivity Commission on broadcasting. Copies of the draft report have been available since 22 October. If anyone present hasn't received a copy and would like one, they should contact members of the commission staff who are present. The commission wishes to thank the people and organisations who have responded to the draft report, either in further submissions or in arranging to appear at the hearings. The submissions are available here today for viewing and are on the web site of the commission. These submissions and comments will help us to improve the report which will be submitted to the treasurer early in March. The timing of the release of the final report is under the control of the government. As in the case of the earlier hearings, transcripts are being made and should be available on the commission's web site within three days of the relevant hearing. Copies will be sent to the relevant participants. At the end of the scheduled hearings today I shall invite any persons present to make oral presentations should they wish to do so.

ESS Technology, Inc. ES8380 Phoenix DVD Processor Product Brief

ADVANCE: ES8380 Phoenix DVD Processor, ESS Technology, Inc., Product Brief
DESCRIPTION
The ES8380 Phoenix™ processor is a single-chip DVD processor supporting DVD video, MPEG-4 ASP and DivX® Home Theater playback. The Phoenix DVD processor is ideal for stand-alone DVD players, DVD receivers, DVD/VCR combos and DVD A/V mini-component systems. It incorporates a high-quality deinterlacer and a TV encoder that supports HD (720p/1080i) and Macrovision™ protected progressive (480p/576p) and interlaced video output. The HD output is ideal for the display of JPEG pictures, and when used with the built-in video scaler, allows up-conversion of unprotected video to HD resolution.
The Phoenix processor is built on the ESS proprietary dual CPU Programmable Multimedia Processor (PMP) core consisting of 32-bit RISC and 64-bit DSP processors that deliver the best DVD feature set. It integrates a servo controller, RF amplifier, read channel, ECC, servo DSP, …
FEATURES
• Built-in RF amplifier and servo controller.
• High-performance focusing, sledding, tracking and CLV/CAV spindle servo control.
• DVD-Video, DVD-R/RW, DVD+R/RW, SVCD, VCD, CD-ROM, CD-R/RW, CD-DA support.
• DivX Home Theater quality video at full screen (D1) (ES8380FCA/CB/CC/CD only).
• MPEG-4 Advanced Simple Profile video including GMC and QPEL support (ES8380FBA/BB/CA/CB/CC/CD only).
• Pixel-adaptive de-interlacer.
• Scaler for video up-conversion to 1080i/720p.
• NTSC/PAL encoder with six video DACs for composite, S-Video, and component video outputs.
• Macrovision protected, NTSC/PAL interlaced, and progressive scan (480p/576p) video output.
• HD component output for JPEG picture and video.

Design Your Next Generation DVD Receiver Today

FORTISSIMO PLATFORM OVERVIEW
Design Your Next Generation DVD Receiver Today
Cirrus Logic® Fortissimo Platforms Deliver Dolby® Pro Logic® IIx and 7.1-Channel Audio While Reducing BOM Costs and Speeding Your Time to Market
The new Cirrus Logic® Fortissimo platforms, the CDB98200-RX, and the soon-to-be-released CDB98300-RX, combine all the advanced audio hardware and software necessary to develop high-demand, next-generation DVD receivers. The platforms are packed with features that today’s consumers demand, which will help provide manufacturers with profitable margins while delivering full-featured products that combine DVD Player functionality with an Audio/Video Receiver’s acoustical performance.
The Fortissimo CDB98200-RX platform is comprised of Cirrus Logic’s mainstream DVD processor, the CS98200, as well as the CS5361 Stereo ADC, the CS8415 S/PDIF, and the CS4382 8-Channel, 24-bit, 192 kHz DAC.
Fortissimo Features
• Incorporates a Cirrus Logic Total-E platform including the CS98XXX DVD Processor, the CS5361 Stereo ADC, the CS8415 S/PDIF, and the CS4382 8-Channel, 24-bit, 192 kHz DAC
• Plays/Decodes DVD, VCD, VCD 2.0, SVCD, CD, and other popular standards
• Supports DivX 3.11, 4.x, and 5.x; Home Theater Certifiable (CDB98300-RX only)
• Decoder: Dolby Digital Pro Logic IIx, Dolby Digital-EX, DTS-ES Discrete 6.1, DTS-ES Matrix 6.1, AAC™ Multichannel 5.1, Windows Media® Audio, MP3 (MPEG-1/2, 2.5, Layer III), MPEG Multichannel Audio, DTS Neo:6™, SRS Circle Surround, SRS Surround
• Real-time decoding of digital video in either MPEG-2 MP@ML or MPEG-1
• Kodak® Picture CD playback
• Plays DVD-Audio including CPPM and Verance™ watermark protection
• Composite, component, and S-Video with progressive video output support