Foraging Goes Mobile: Foraging While Debugging on Mobile Devices

Recommended publications

Automating Navigation Sequences in AJAX Websites

Automating Navigation Sequences in AJAX Websites Paula Montoto, Alberto Pan, Juan Raposo, Fernando Bellas, and Javier López Department of Information and Communication Technologies, University of A Coruña Facultad de Informática, Campus de Elviña s/n 15071 A Coruña, Spain {pmontoto,apan,jrs,fbellas,jmato}@udc.es Abstract. Web automation applications are widely used for different purposes such as B2B integration, automated testing of web applications or technology and business watch. One crucial part in web automation applications is to allow easily generating and reproducing navigation sequences. Previous proposals in the literature assumed a navigation model today turned obsolete by the new breed of AJAX-based websites. Although some open-source and commercial tools have also addressed the problem, they show significant limitations either in usability or their ability to deal with complex websites. In this paper, we propose a set of new techniques to deal with this problem. Our main contributions are a new method for recording navigation sequences supporting a wider range of events, and a novel method to detect when the effects caused by a user action have finished. We have evaluated our approach with more than 100 web applications, obtaining very good results. Keywords: Web automation, web integration, web wrappers. 1 Introduction Web automation applications are widely used for different purposes such as B2B integration, web mashups, automated testing of web applications or business watch. One crucial part in web automation applications is to allow easily generating and reproducing navigation sequences. We can distinguish two stages in this process: − Generation phase. In this stage, the user specifies the navigation sequence to reproduce.

Web Usability Glossary

USABILITY GLOSSARY
TERM : DEFINITION
above the fold / below the fold : 'The fold' marks the end of the visible part of the web page. Therefore, 'above the fold' means the area of the web page that is visible without scrolling. 'below the fold' is the area of the web page that requires scrolling to see.
accessibility : Accessibility is a prerequisite to usability. If a person can not access a web page he certainly can not use it. Accessibility refers to web page information/content being obtainable and functional to the largest possible audience. It is about providing access to information for those who would otherwise lose their opportunity to use the web. In contrast inaccessible means unobtainable, nonfunctional.
accessibility aids : Assistive technology; tools to help people with disabilities to use computers more effectively.
affordance / perceived affordance : A situation where an object's sensory characteristics intuitively imply its functionality and use: a button, by being slightly raised above an otherwise flat surface, suggests the idea of pushing it. A lever, by being an appropriate size for grasping, suggests pulling it. A blinking red light and buzzer suggests a problem and demands attention. A chair, by its size, its curvature, its balance, and its position, suggests sitting on it. An affordance is a desirable property of a user interface - software which naturally leads people to take the correct steps to accomplish their goals.
banner blindness : This is the situation where a website user ignores banner-like information on a website. The banner information may or may not be an ad.
breadcrumbs : Type of web navigation where current location within the website is indicated by a list of pages above the current page in the hierarchy, up to the main page.

An Algorithm for Gaze Region Estimation on Web Pages

INTERNATIONAL JOURNAL OF SCIENTIFIC & TECHNOLOGY RESEARCH VOLUME 6, ISSUE 08, AUGUST 2017 ISSN 2277-8616 An Algorithm For Gaze Region Estimation On Web Pages John K. Njue, Simon G. Maina, Ronald Waweru Mwangi, Michael Kimwele Abstract: Accurate gaze region estimation on the web is important for the purpose of placing marketing advertisements in web pages and monitoring authenticity of user’s response in web forms. To identify gaze region on the web, we need cheap, less technical and non-laboratory setup that minimize user interruptions. This study uses the mouse and keyboard as input devices to collect web usage data. The data collected is categorized into mouse activities, keyboard activities and scroll activities. A regression model is then used to estimate gaze region from the categorized data. The results indicate that although mouse cursor data is generated throughout the user session, the use of data from keyboard presses and scroll from both keyboard and mouse improves the accuracy of identified gaze regions in web usage data. Index Terms: Gaze tracking, gaze region, linear regression model, mouse activities, keyboard activities, scroll activities, web page.
1 INTRODUCTION Eye gaze tracking records eye movements whilst a participant examines a visual stimulus [1]. Tracking user’s eye gaze and eye movements are some of the main ways of studying attention on web pages as they provide rich details on user
2 LITERATURE REVIEW A significant feature of web applications is possibility for non-sequential navigation to access items on web documents. There is no definite order of determining the sequence in which the text is read in web documents [4].

The Explorer Bar: Unifying and Improving Web Navigation

The Explorer bar: Unifying and improving web navigation Scott Berkun Program Manager One Microsoft Way Redmond, WA 98052 [email protected]
ABSTRACT The Explorer bar is a component of the Internet Explorer web browser that provides a unified model for web navigation activities. The user tasks of searching for new sites, visiting favorite sites, and accessing previously viewed sites are simplified and enhanced by using a single user interface element.
Keywords Navigation, bookmark, design, hypertext, searching, browsing
INTRODUCTION The World Wide Web provides access to an enormous amount of information and resources. The primary usability issues with using the web involve insufficient support for helping users find and return to individual web pages. The Explorer bar was designed to improve these usability problems by providing a single well designed user interface model for the most common set of web navigation tasks.
The design problem
more digestible groups that users would understand. We settled on three groupings: web pages the user had not seen before, web pages the user was interested in, and web pages the user had visited before. We gave these groupings the names Search, Favorites and History for easier reference. As we sketched out different ideas for solutions to the most common navigation problems, we recognized that there were strong similarities for how users might interact with these sets of information. For example, when searching the web using a search engine, users received a long list of search results. In formal and informal usability studies, we observed many users click on a result link which took them to a new site, click on several links on that site, and then recognize it was not the site they wanted.

Scrolling Worse Than Tabs, Menus, and Collapsible Fieldsets

Navigation in Long Forms on Smartphones: Scrolling worse than Tabs, Menus, and Collapsible Fieldsets Johannes Harms, Martina Kratky, Christoph Wimmer, Karin Kappel, Thomas Grechenig INSO Research Group, Vienna Univ. of Technology, Austria. [firstname].[lastname]@inso.tuwien.ac.at Abstract. Mobile applications provide increasingly complex functionality through form-based user interfaces, which requires effective solutions for navigation on small-screen devices. This paper contributes a comparative usability evaluation of four navigation design patterns: Scrolling, Tabs, Menus, and Collapsible Fieldsets. These patterns were evaluated in a case study on social network profile pages. Results show that memorability, usability, overview, and subjective preference were worse in Scrolling than in the other patterns. This indicates that designers of form-based user interfaces on small-screen devices should not rely on Scrolling to support navigation, but use other design patterns instead. Keywords. Navigation; Mobile; Smartphone; Form Design; Evaluation 1 Introduction Forms are widely employed as user interfaces (UIs) for data entry and subsequent editing [15, 17, 22]. Long forms are often considered a bad design practice – e.g., an empirical study [22, p. 294] and recent guidelines [3] recommend against long forms and unnecessary questions. But they cannot always be avoided due to complex application requirements [16]. This also holds for forms on small-screen, mobile devices such as smartphones. Examples of mobile apps with long form-based UIs include editing a contact in the iOS address book (43 form fields), Facebook’s mobile profile page (11 collapsible fieldsets for 88 form fields), and the Samsung Galaxy system settings (4 tabs for about 380 form fields).

Improving Search Engine Interfaces for Blind Users: a Case Study

Improving Search Engine Interfaces for Blind Users: a case study PATRIZIA ANDRONICO*, MARINA BUZZI*, CARLOS CASTILLO**, BARBARA LEPORINI* *Istituto di Informatica e Telematica del CNR **Center for Web Research, U. de Chile Abstract: This article describes a research project focused on improving search engine usability for the sightless persona, who uses assistive technology to navigate the Web. Based on a preliminary study on accessibility and usability of search tools, we formulate eight guidelines for designing search engine user interfaces. In the current phase of our study we modified the source code of Google's interface while maintaining the same “look&feel”, in order to demonstrate that with very little effort it is possible to make access easier, more efficient, and less frustrating for sightless individuals. After giving a general overview of the project, the paper focuses on interface design and discusses any implementation problems and solutions. Keywords: accessibility, usability user interface, blind users, search engine Introduction Due to the enormous amount of information on the Internet today, search engines have become indispensable for finding specific and appropriate information. If we consider the user’s task, two main search engine components are equally important to carry out a successful search: (I) the search process, which seeks the requested information and orders the results by relevance; and (II) the user interface, where the user types the query keywords and the search results are shown. Since individuals interact with the search tool to set up a search task and explore the results, it is essential that user interfaces be easy to use and accessible to all; this is particularly important for sightless users who, interacting via screen reader, perceive the page contents very differently and experience a much longer search time.

Design Issues for World Wide Web Navigation Visualisation Tools

Design Issues for World Wide Web Navigation Visualisation Tools
Andy Cockburn, Department of Computer Science, University of Canterbury, Christchurch, New Zealand, [email protected], +64 3 364 2987 ext 7768
Steve Jones, Department of Computer Science, University of Waikato, Hamilton, New Zealand, [email protected], +64 7 838 4490
Abstract The World Wide Web (WWW) is a successful hypermedia information space used by millions of people, yet it suffers from many deficiencies and problems in support for navigation around its vast information space. In this paper we identify the origins of these navigation problems, namely WWW browser design, WWW page design, and WWW page description languages. Regardless of their origins, these problems are eventually represented to the user at the browser’s user interface. To help overcome these problems, many tools are being developed which allow users to visualise WWW subspaces. We identify five key issues in the design and functionality of these visualisation systems: characteristics of the visual representation, the scope of the subspace representation, the mechanisms for generating the visualisation, the degree of browser independence, and the navigation support facilities. We provide a critical review of the diverse range of WWW visualisation tools with respect to these issues. Keywords World Wide Web; hypermedia navigation; design issues; visualisation.
1 Introduction With millions of users searching, browsing, and surfing the WWW, economies of scale are clearly relevant. Even a small inefficiency in user navigation within the WWW will result in enormous productivity losses if it is common to a fraction of WWW users. Our previous research has noted that users commonly have incorrect mental-models of even the most fundamental methods of WWW navigation, and has suggested interface mechanisms to ease the problems [CJ96].

Validation of Navigation Guidelines for Improving Usability in the Mobile Web

Accepted manuscript: Validation of navigation guidelines for improving usability in the mobile web. E. Garcia-Lopez, A. Garcia-Cabot, C. Manresa-Yee, L. de-Marcos, C. Pages-Arevalo. Computer Standards & Interfaces. 2017.
Eva Garcia-Lopez (1, *), Antonio Garcia-Cabot (1), Cristina Manresa-Yee (2), Luis de-Marcos (1), Carmen Pages-Arevalo (1)
(1) Computer Science Department, University of Alcala, Alcalá de Henares, Spain. Dpto Ciencias Computación. Edificio Politécnico. Ctra Barcelona km 33.1. 28871. Alcalá de Henares. Madrid. Spain. Phone: +34 918856836
(2) Maths and Computer Science Department, University of Balearic Islands, Palma de Mallorca, Spain. Ed. Anselm Turmeda. Ctra Valldemossa km 7.5. 07122. Palma de Mallorca. Islas Baleares. Spain.
(*) Corresponding author: [email protected]
Abstract: Traditional navigation guidelines for desktop computers may not be valid for mobile devices because they have different characteristics such as smaller screens and keyboards. This paper aims to check whether navigation guidelines for Personal Computers are valid or not for mobile devices. To do this, firstly an evaluation was carried out by a group of advisors with knowledge in web design, and then empirical studies were conducted with real mobile devices and users. Results show that most of the traditional navigation guidelines are valid for current mobile devices, but some of them are not, which suggests that a similar process is necessary to validate traditional guidelines for mobile devices. The final result is a set of fifteen navigation guidelines for mobile web sites, from which eleven are totally new because they have not been previously suggested by any standard or recommendation for mobile web sites.

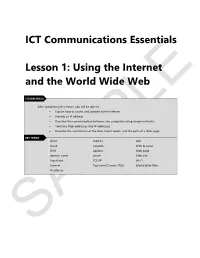

Lesson 1: Using the Internet and the World Wide Web

ICT Communications Essentials
Lesson 1: Using the Internet and the World Wide Web
LESSON SKILLS
After completing this lesson, you will be able to:
- Explain how to access and connect to the Internet
- Identify an IP address
- Describe the communication between two computers using simple networks
- Translate Web addresses into IP addresses
- Describe the architecture of the Web, how it works, and the parts of a Web page
KEY TERMS
client, cloud, DNS, domain name, hyperlinks, Internet, IP address, modem, network, packets, server, TCP/IP, Top-Level Domain (TLD), URL, Web browser, Web page, Web site, Wi-Fi, World Wide Web
Points to Ponder
These Points to Ponder are designed to help you focus on key elements in this lesson. They are also suitable for use to spark discussions or individual research.
- How would you define "Internet"?
- What are your top five reasons for accessing the Internet?
- What type of Internet connection service is available in your area?
  - What type of service might better serve your needs, and why?
- Should your local city or town provide free Wi-Fi?
  - What are some pros and cons to providing free Wi-Fi for everyone? Explain your thinking.
- How do you think the Internet will change over the next 5 to 10 years? Explain your thinking.
© 2016 Certification Partners, LLC. All Rights Reserved. Version 2.0
Overview
This lesson introduces one of the most significant innovations of the past half-century: the Internet.

Contextual Navigation Aids for Two World Wide Web Systems

INTERNATIONAL JOURNAL OF HUMAN–COMPUTER INTERACTION, 12(2), 193–217 Copyright © 2000, Lawrence Erlbaum Associates, Inc. Contextual Navigation Aids for Two World Wide Web Systems Joonah Park, Jinwoo Kim Human Computer Interaction Lab Department of Cognitive Science Yonsei University In spite of the radical enhancement of Web technologies, many users still continue to experience severe difficulties in navigating Web systems. One way to reduce the navigation difficulties is to provide context information that explains the current situation of Web users. In this study, we empirically examined the effects of 2 types of context information, structural and temporal context. In the experiment, we evaluated the effectiveness of the contextual navigation aids in 2 different types of Web systems, an electronic commerce system that has a well-defined structure and a content dissemination system that has an ill-defined structure. In our experiment, participants answered a set of postquestionnaires after performing several searching and browsing tasks. The results of the experiment reveal that the 2 types of contextual navigation aids significantly improved the performance of the given tasks regardless of different Web systems and different task types. Moreover, context information changed the users’ navigation patterns and increased their subjective convenience of navigation. This study concludes with implications for understanding the users’ searching and browsing patterns and for developing effective navigation systems. 1. INTRODUCTION The rapid growth of the Internet has created new lifestyles, such as searching for valuable information and browsing through various products by using the World Wide Web (WWW) as a universal tool.

Website Adaptive Navigation Effects on User Experiences

Brigham Young University BYU ScholarsArchive Theses and Dissertations 2012-12-07 Website Adaptive Navigation Effects on User Experiences James C. Speirs Brigham Young University - Provo Follow this and additional works at: https://scholarsarchive.byu.edu/etd Part of the Computer Sciences Commons BYU ScholarsArchive Citation Speirs, James C., "Website Adaptive Navigation Effects on User Experiences" (2012). Theses and Dissertations. 3536. https://scholarsarchive.byu.edu/etd/3536 This Thesis is brought to you for free and open access by BYU ScholarsArchive. It has been accepted for inclusion in Theses and Dissertations by an authorized administrator of BYU ScholarsArchive. For more information, please contact [email protected], [email protected]. Website Adaptive Navigation Effects on User Experiences James Carl Speirs A thesis submitted to the faculty of Brigham Young University in partial fulfillment of the requirements for the degree of Master of Science Bret R. Swan, Chair Richard G. Helps Derek L. Hansen School of Technology Brigham Young University December 2012 Copyright © 2012 James Speirs All Rights Reserved ABSTRACT Website Adaptive Navigation Effects on User Experiences James Speirs School of Technology, BYU Master of Science The information search process within a website can often be frustrating and confusing for website visitors. Navigational structures are often complex and multitiered, hiding links with several layers of navigation that users might be interested in. Poor navigation causes user frustration. Adaptive navigation can be used to improve the user’s navigational experience by flattening the navigational structure and reducing the number of accessible links to only those that the user would be interested in. This examines the effects on a user’s navigational experience, of using adaptive navigation as the main navigational structure on a website.

(12) United States Patent (10) Patent No.: US 9,547,468 B2, Yen et al.

US009547468B2
(12) United States Patent, Yen et al.
(10) Patent No.: US 9,547,468 B2
(45) Date of Patent: Jan. 17, 2017
(54) CLIENT-SIDE PERSONAL VOICE WEB NAVIGATION
(71) Applicant: MICROSOFT TECHNOLOGY LICENSING, LLC, Redmond, WA (US)
(72) Inventors: Cheng-Yi Yen, Redmond, WA (US); Derek Liddell, Kent, WA (US); Kenneth Reneris, Clyde Hill, WA (US); Charles Morris, Seattle, WA (US); Dieter Rindle, Klosterlechfeld (DE); Tanvi Surti, Seattle, WA (US); Michael Stephens, Seattle, WA (US); Eka Tjung, Redmond, WA (US)
(73) Assignee: MICROSOFT TECHNOLOGY LICENSING, LLC, Redmond, WA (US)
(*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 137 days.
(21) Appl. No.: 14/231,570
(22) Filed: Mar. 31, 2014
(65) Prior Publication Data US 2015/0277846 A1 Oct.
G06F 3/04842; G06F 17/30899; G10L 15/265 See application file for complete search history.
(56) References Cited
U.S. PATENT DOCUMENTS
5,893,063 A * 4/1999 Loats, G10L 15/26, 704/270
7,653,544 B2 * 1/2010 Bradley, G06Q 50/01, 704/260
(Continued)
OTHER PUBLICATIONS
Viticci, Federico, "Coming Soon: iPhone Voice Control for Everything", MacStories, Published on: Jan. 30, 2011, Available at: http://www.macstories.net/iphone/coming-Soon-iphone-voice control-for-everything? (4 pages total).
(Continued)
Primary Examiner: Maryam Ipakchi
(74) Attorney, Agent, or Firm: Miia Sula; Judy Yee; Micky Minhas
(57) ABSTRACT A system running on a mobile device such as a smartphone is configured to expose a user interface (UI) to enable a user to specify web pages that can be pinned to a start screen of