On Euclidean Methods for Cubic and Quartic Jacobi Symbols

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


An Euler Phi Function for the Eisenstein Integers and Some Applications

An Euler phi function for the Eisenstein integers and some applications. Emily Gullerud (University of Minnesota) and aBa Mbirika (University of Wisconsin-Eau Claire). January 8, 2020. arXiv:1902.03483v2 [math.NT] 6 Jan 2020. Abstract: The Euler phi function on a given integer n yields the number of positive integers less than n that are relatively prime to n. Equivalently, it gives the order of the group of units in the quotient ring Z/(n) for a given integer n. We generalize the Euler phi function to the Eisenstein integer ring Z[ρ], where ρ is the primitive third root of unity e^(2πi/3), by finding the order of the group of units in the ring Z[ρ]/(θ) for any given Eisenstein integer θ. As one application we investigate a sufficiency criterion for when certain unit groups (Z[ρ]/(γ^n))^× are cyclic, where γ is prime in Z[ρ] and n ∈ N, thereby generalizing well-known results of similar applications in the integers and some lesser known results in the Gaussian integers. As another application, we prove that the celebrated Euler-Fermat theorem holds for the Eisenstein integers. Contents: 1 Introduction; 2 Preliminaries and definitions; 2.1 Even and “odd” Eisenstein integers; 2.2 The three types of Eisenstein primes; 2.3 Unique factorization in Z[ρ]; 3 Classes of Z[ρ]/(γ^n) for a prime γ in Z[ρ]; 3.1 Equivalence classes of Z[ρ]/(γ^n); 3.2 Criteria for when two classes in Z[ρ]/(γ^n) are equivalent; 3.3 Units in Z[ρ]/(γ^n) -

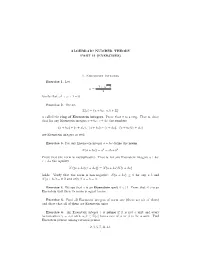

Algebraic Number Theory Part Ii (Solutions) 1

ALGEBRAIC NUMBER THEORY PART II (SOLUTIONS) 1. Eisenstein Integers. Exercise 1. Let ω = (−1 + √−3)/2. Verify that ω² + ω + 1 = 0. Solution. We have ((−1 + √−3)/2)² + (−1 + √−3)/2 + 1 = −(1 + √−3)/2 + (−1 + √−3)/2 + 1 = (−1/2 − 1/2 + 1) + (−1/2 + 1/2)√−3 = 0. Exercise 2. The set Z[ω] = {a + bω : a, b ∈ Z} is called the ring of Eisenstein integers. Prove that it is a ring. That is, show that for any Eisenstein integers a + bω, c + dω the numbers (a + bω) + (c + dω), (a + bω) − (c + dω), (a + bω)(c + dω) are Eisenstein integers as well. Solution. For the sum and difference the calculations are easy: (a + bω) + (c + dω) = (a + c) + (b + d)ω, (a + bω) − (c + dω) = (a − c) + (b − d)ω. Since a, b, c, d are integers, we see that a + c, b + d, a − c, b − d are integers as well. Therefore the above numbers are Eisenstein integers. The product is a bit more tricky. Here we need to use the fact that ω² = −1 − ω: (a + bω)(c + dω) = ac + adω + bcω + bdω² = ac + adω + bcω + bd(−1 − ω) = (ac − bd) + (ad + bc − bd)ω. Since a, b, c, d are integers, we see that ac − bd and ad + bc − bd are integers as well, so the above number is an Eisenstein integer. Exercise 3. For any Eisenstein integer a + bω define the norm N(a + bω) = a² − ab + b². Prove that the norm is multiplicative. That is, for any Eisenstein integers a + bω, c + dω the equality N((a + bω)(c + dω)) = N(a + bω)N(c + dω) holds. -
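The product and norm formulas in the excerpt above can be checked numerically. The following is a minimal sketch, not part of the original solutions: it assumes an Eisenstein integer a + bω is stored as the pair `(a, b)`, and the helper names `mul` and `norm` are chosen here purely for illustration.

```python
def mul(x, y):
    """Multiply a + b*w by c + d*w using w*w = -1 - w."""
    a, b = x
    c, d = y
    return (a * c - b * d, a * d + b * c - b * d)


def norm(x):
    """Norm N(a + b*w) = a^2 - a*b + b^2."""
    a, b = x
    return a * a - a * b + b * b


# Two sample Eisenstein integers (arbitrary illustrative values).
x = (2, 3)
y = (-1, 4)
product = mul(x, y)

# w = 0 + 1*w; its square should equal -1 - w.
w = (0, 1)
w_squared = mul(w, w)

print(product, norm(product), norm(x) * norm(y))
```

A spot check like this only confirms the identities on the chosen inputs; the general statement is what Exercise 3 asks the reader to prove.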

Of Certain Complex Quartic Fields. II

MATHEMATICS OF COMPUTATION, VOLUME 29, NUMBER 129, JANUARY 1975, PAGES 137-144. Computation of the Ideal Class Group of Certain Complex Quartic Fields. II. By Richard B. Lakein. Abstract. For quartic fields K = F_3(√π), where F_3 = Q(ρ) and π ≡ 1 mod 4 is a prime of F_3, the ideal class group is calculated by the same method used previously for quadratic extensions of F_1 = Q(i), but using Hurwitz' complex continued fraction over Q(ρ). The class number was found for 10000 such fields, and the previous computation over F_1 was extended to 10000 cases. The distribution of class numbers is the same for 10000 fields of each type: real quadratic, quadratic over F_1, quadratic over F_3. Many fields were found with noncyclic class group, including the first known real quadratics with groups 5 × 5 and 7 × 7. Further properties of the continued fractions are also discussed. 1. Introduction. The quartic fields K = F(√μ), where μ ∈ F = Q(√−m), m = 1, 2, 3, 7, or 11, have many algebraic properties of integers, ideals, and units which are closely analogous to those of real quadratic fields. Furthermore, since the integers of F form a euclidean ring, one can, by means of a suitable generalization of continued fractions, actually calculate the objects of interest in K. In addition to the detailed algebraic properties, there is a "gross" phenomenon in real quadratic fields with a counterpart in these quartic fields. The fields Q(√p), for prime p = 4k + 1, appear to have a regular distribution of class numbers. -

Introduction to Algebraic Number Theory Part II

Introduction to Algebraic Number Theory Part II. A. S. Mosunov, University of Waterloo Math Circles, November 14th, 2018. DETOUR. Detour: Mihăilescu's Theorem. In 1844 it was conjectured by Catalan that the only perfect powers that differ by one are 8 and 9. Last time I mentioned Tijdeman's Theorem: there are only finitely many perfect powers that differ by one. I was unaware that the conjecture of Catalan was solved by the Romanian mathematician Preda Mihăilescu in 2002 in his article "Primary Cyclotomic Units and a Proof of Catalan's Conjecture". See a popular video about Catalan's Conjecture: https://www.youtube.com/watch?v=Us-__MukH9I (subscribe to Numberphile!). The question about prime powers that differ by 2 is still open! Figure: Eugène Charles Catalan (left) and Preda Mihăilescu (right). RECALL. We were learning about algebraic number theory. It studies properties of numbers that are roots of polynomial equations, like i, (−1 + √−3)/2, ∛2, and so on. We learned about Gaussian integers, i.e. numbers of the form a + bi, where a, b are integers and i satisfies i² + 1 = 0. The set of Gaussian integers is denoted by Z[i]. You can add, subtract and multiply Gaussian integers, and the result will be a Gaussian integer. Therefore Z[i] is a ring. We saw that every Gaussian integer a + bi has a norm N(a + bi) = a² + b² and that the norm is multiplicative: N(ab) = N(a)N(b). Generalizing Primes. We generalized the notion of divisibility. -

A Computational Exploration of Gaussian and Eisenstein Moats

A Computational Exploration of Gaussian and Eisenstein Moats. By Philip P. West. A DISSERTATION SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTERS IN SCIENCE (MATHEMATICS) at the CALIFORNIA STATE UNIVERSITY CHANNEL ISLANDS, 2014. Copyright 2014 Philip P. West. ALL RIGHTS RESERVED. APPROVED FOR THE MATHEMATICS PROGRAM: Brian Sittinger, Advisor, Date: 21 December 2014; Ivona Grzegorzyck, Date: 12/2/14; Ronald Rieger, Date: 12/8/14. APPROVED FOR THE UNIVERSITY: Doctor Gary A. Berg, Date: 12/10/14. Non-Exclusive Distribution License. In order for California State University Channel Islands (CSUCI) to reproduce, translate and distribute your submission worldwide through the CSUCI Institutional Repository, your agreement to the following terms is necessary. The authors retain any copyright currently on the item as well as the ability to submit the item to publishers or other repositories. By signing and submitting this license, you (the authors or copyright owner) grant to CSUCI the nonexclusive right to reproduce, translate (as defined below), and/or distribute your submission (including the abstract) worldwide in print and electronic format and in any medium, including but not limited to audio or video. You agree that CSUCI may, without changing the content, translate the submission to any medium or format for the purpose of preservation. You also agree that CSUCI may keep more than one copy of this submission for purposes of security, backup and preservation. You represent that the submission is your original work, and that you have the right to grant the rights contained in this license. -

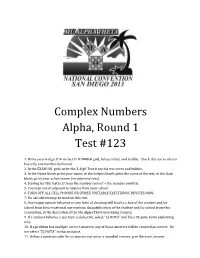

Complex Numbers Alpha, Round 1 Test #123

Complex Numbers Alpha, Round 1 Test #123 1. Write your 6-digit ID# in the I.D. NUMBER grid, left-justified, and bubble. Check that each column has only one number darkened. 2. In the EXAM NO. grid, write the 3-digit Test # on this test cover and bubble. 3. In the Name blank, print your name; in the Subject blank, print the name of the test; in the Date blank, print your school name (no abbreviations). 4. Scoring for this test is 5 times the number correct + the number omitted. 5. You may not sit adjacent to anyone from your school. 6. TURN OFF ALL CELL PHONES OR OTHER PORTABLE ELECTRONIC DEVICES NOW. 7. No calculators may be used on this test. 8. Any inappropriate behavior or any form of cheating will lead to a ban of the student and/or school from future national conventions, disqualification of the student and/or school from this convention, at the discretion of the Mu Alpha Theta Governing Council. 9. If a student believes a test item is defective, select “E) NOTA” and file a Dispute Form explaining why. 10. If a problem has multiple correct answers, any of those answers will be counted as correct. Do not select “E) NOTA” in that instance. 11. Unless a question asks for an approximation or a rounded answer, give the exact answer. Alpha Complex Numbers, 2013 ΜΑΘ National Convention. Note: For all questions, answer “(E) NOTA” means none of the above answers is correct. Furthermore, assume that i = √−1, cis θ = cos θ + i sin θ, and Re(z) and Im(z) are the real and imaginary parts of z, respectively, unless otherwise specified. -

A History of Stickelberger's Theorem

A History of Stickelberger’s Theorem. A Senior Honors Thesis Presented in Partial Fulfillment of the Requirements for graduation with research distinction in Mathematics in the undergraduate colleges of The Ohio State University by Robert Denomme. The Ohio State University, June 8, 2009. Project Advisor: Professor Warren Sinnott, Department of Mathematics. Contents: Introduction; Acknowledgements; 1. Gauss’s Cyclotomy and Quadratic Reciprocity; 1.1. Solution of the General Equation; 1.2. Proof of Quadratic Reciprocity; 2. Jacobi’s Congruence and Cubic Reciprocity; 2.1. Jacobi Sums; 2.2. Proof of Cubic Reciprocity; 3. Kummer’s Unique Factorization and Eisenstein Reciprocity; 3.1. Ideal Numbers; 3.2. Proof of Eisenstein Reciprocity; 4. Stickelberger’s Theorem on Ideal Class Annihilators; 4.1. Stickelberger’s Theorem; 5. Iwasawa’s Theory and The Brumer-Stark Conjecture; 5.1. The Stickelberger Ideal; 5.2. Catalan’s Conjecture; 5.3. Brumer-Stark Conjecture; 6. Conclusions; References. Introduction. The late Professor Arnold Ross was well known for his challenge to young students, “Think deeply of simple things.” This attitude applies to no story better than the one on which we are about to embark. This is the century long story of the generalizations of a single idea which first occurred to the 19 year old prodigy, Gauss, and which he was able to write down in no less than 4 pages. The questions that the young genius raised by offering the idea in those 4 pages, however, would torment the greatest minds in all of the 19th century. -

An Exposition of the Eisenstein Integers

Eastern Illinois University, The Keep. Masters Theses, Student Theses & Publications, 2016. An Exposition of the Eisenstein Integers. Sarada Bandara, Eastern Illinois University. This research is a product of the graduate program in Mathematics and Computer Science at Eastern Illinois University. Recommended Citation: Bandara, Sarada, "An Exposition of the Eisenstein Integers" (2016). Masters Theses. 2467. https://thekeep.eiu.edu/theses/2467 This is brought to you for free and open access by the Student Theses & Publications at The Keep. It has been accepted for inclusion in Masters Theses by an authorized administrator of The Keep. The Graduate School, Eastern Illinois University. Thesis Maintenance and Reproduction Certificate. FOR: Graduate Candidates Completing Theses in Partial Fulfillment of the Degree; Graduate Faculty Advisors Directing the Theses. RE: Preservation, Reproduction, and Distribution of Thesis Research. Preserving, reproducing, and distributing thesis research is an important part of Booth Library's responsibility to provide access to scholarship. In order to further this goal, Booth Library makes all graduate theses completed as part of a degree program at Eastern Illinois University available for personal study, research, and other not-for-profit educational purposes. Under 17 U.S.C. § 108, the library may reproduce and distribute a copy without infringing on copyright; however, professional courtesy dictates that permission be requested from the author before doing so. Your signatures affirm the following: • The graduate candidate is the author of this thesis. • The graduate candidate retains the copyright and intellectual property rights associated with the original research, creative activity, and intellectual or artistic content of the thesis. -

Finding Factors of Factor Rings Over Eisenstein Integers

International Mathematical Forum, Vol. 9, 2014, no. 31, 1521 - 1537. HIKARI Ltd, www.m-hikari.com http://dx.doi.org/10.12988/imf.2014.111121 Finding Factors of Factor Rings over Eisenstein Integers. Valmir Buçaj, Department of Mathematics, Texas Lutheran University, 1000 West Court Street, Seguin, TX 78155. Dedicated to my parents, Afrim and Drita Buçaj. Copyright © 2014 Valmir Buçaj. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. Abstract. In this paper we prove a few results related to the factor rings over the Eisenstein integers. In particular we show that the ring Z[ω] factored by an ideal generated by any element m + nω of this ring, where gcd(m, n) = 1, is isomorphic to the ring Z_(m²+n²−mn). Next, we find the representatives for the equivalence classes of the ring Z[ω] when factoring out by a power of a prime ideal, and also identify all the units of these quotient rings respectively. Then, we give a representation for the factor ring Z[ω]/⟨m + nω⟩ in terms of the product of other rings. Finally, we end this paper with a few applications to elementary number theory. Mathematics Subject Classification: Primary 13F15, 13F07, 13B02; Secondary 11D09, 11A51. Keywords: Eisenstein Integers; Eisenstein Primes; Factor Rings; Prime Ideals; Norm; Equivalence Classes; Units. 1 Introduction. Eisenstein integers are defined to be the set Z[ω] = {a + bω : a, b ∈ Z} where ω = (−1 + i√3)/2. This set lies inside the set of complex numbers C and they form a commutative ring in the algebraic number field Q(ω). We can define a ring norm N : Z[ω] → Z⁺ ∪ {0} by N(m + nω) = (m + nω)(m + nω̄) = m² + n² − mn, and it is easy to check that N is multiplicative; that is, N(α · β) = N(α) · N(β), for all α, β ∈ Z[ω]. Proposition 1.1. -
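The isomorphism stated in the abstract above can be made concrete with a small sketch. This is an illustration under stated assumptions, not the paper's construction: it assumes gcd(m, n) = 1 and N = m² + n² − mn > 1, sends ω to the residue t = −m·n⁻¹ mod N, and uses the hypothetical helper name `omega_image`. Since gcd(n, N) = gcd(n, m²) = 1, the inverse of n modulo N exists.

```python
def omega_image(m, n):
    """Return (N, t): the modulus N = m^2 + n^2 - m*n and a residue t
    with m + n*t = 0 (mod N), so that a + b*w -> (a + b*t) mod N
    sends m + n*w to zero. Assumes gcd(m, n) == 1 and N > 1."""
    N = m * m + n * n - m * n
    t = (-m * pow(n, -1, N)) % N  # modular inverse needs Python 3.8+
    return N, t


def reduce_mod(x, t, N):
    """Image of the Eisenstein integer x = (a, b) = a + b*w in Z_N."""
    a, b = x
    return (a + b * t) % N


# Illustrative values: m + n*w = 2 + 3w, so N = 4 + 9 - 6 = 7.
N, t = omega_image(2, 3)

# Product of (1 + 2w) and (3 - w) via w^2 = -1 - w.
x, y = (1, 2), (3, -1)
xy = (x[0] * y[0] - x[1] * y[1], x[0] * y[1] + x[1] * y[0] - x[1] * y[1])

print(N, t, reduce_mod(xy, t, N))
```

The residue t also satisfies t² + t + 1 ≡ 0 (mod N), which is why the map respects multiplication; the sketch only exhibits the homomorphism, whereas the paper proves that its kernel is exactly the ideal generated by m + nω.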

1. Eisenstein Integers Exercise 1. Let Ω

ALGEBRAIC NUMBER THEORY PART II (EXERCISES) 1. Eisenstein Integers. Exercise 1. Let ω = (−1 + √−3)/2. Verify that ω² + ω + 1 = 0. Exercise 2. The set Z[ω] = {a + bω : a, b ∈ Z} is called the ring of Eisenstein integers. Prove that it is a ring. That is, show that for any Eisenstein integers a + bω, c + dω the numbers (a + bω) + (c + dω), (a + bω) − (c + dω), (a + bω)(c + dω) are Eisenstein integers as well. Exercise 3. For any Eisenstein integer a + bω define the norm N(a + bω) = a² − ab + b². Prove that the norm is multiplicative. That is, for any Eisenstein integers a + bω, c + dω the equality N((a + bω)(c + dω)) = N(a + bω)N(c + dω) holds. Verify that the norm is non-negative: N(a + bω) ≥ 0 for any a, b and N(a + bω) = 0 if and only if a = b = 0. Exercise 4. We say that γ is an Eisenstein unit if γ | 1. Prove that if γ is an Eisenstein unit then its norm is equal to one. Exercise 5. Find all Eisenstein integers of norm one (there are six of them) and show that all of them are Eisenstein units. Exercise 6. An Eisenstein integer γ is prime if it is not a unit and every factorization γ = αβ with α, β ∈ Z[ω] forces one of α or β to be a unit. Find Eisenstein primes among rational primes 2, 3, 5, 7, 11, 13. 2. Failure of Unique Factorization. Exercise 1. -
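Exercises 5 and 6 above lend themselves to a brute-force check. The sketch below is an illustration only and does not replace the proofs the exercises ask for; the helper name `elements_of_norm` is hypothetical. It relies on two facts: a² − ab + b² ≥ 3a²/4 and ≥ 3b²/4, which bounds the search box, and a rational prime p has norm p², so it factors into non-units exactly when some Eisenstein integer has norm p.

```python
import math


def norm(a, b):
    """Norm N(a + b*w) = a^2 - a*b + b^2."""
    return a * a - a * b + b * b


def elements_of_norm(n):
    """All pairs (a, b) with N(a + b*w) == n, found by exhaustive search.
    The bound follows from N(a + b*w) >= 3*a*a/4 and >= 3*b*b/4."""
    bound = math.isqrt(4 * n // 3) + 1
    box = range(-bound, bound + 1)
    return [(a, b) for a in box for b in box if norm(a, b) == n]


# Exercise 5: the Eisenstein integers of norm one.
units = elements_of_norm(1)

# Exercise 6: a rational prime p stays prime in Z[w] exactly when
# no Eisenstein integer has norm p.
still_prime = [p for p in (2, 3, 5, 7, 11, 13) if not elements_of_norm(p)]

print(sorted(units))
print(still_prime)
```

The primes that survive are those congruent to 2 modulo 3, which matches the standard classification; 3, 7 and 13 each arise as norms (for example 7 = N(3 + ω)).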

Quadratic and Cubic Reciprocity Suzanne Rousseau Eastern Washington University

Eastern Washington University, EWU Digital Commons. EWU Masters Thesis Collection, Student Research and Creative Works, 2012. Quadratic and cubic reciprocity. Suzanne Rousseau, Eastern Washington University. Follow this and additional works at: http://dc.ewu.edu/theses Part of the Physical Sciences and Mathematics Commons. Recommended Citation: Rousseau, Suzanne, "Quadratic and cubic reciprocity" (2012). EWU Masters Thesis Collection. 27. http://dc.ewu.edu/theses/27 This Thesis is brought to you for free and open access by the Student Research and Creative Works at EWU Digital Commons. It has been accepted for inclusion in EWU Masters Thesis Collection by an authorized administrator of EWU Digital Commons. QUADRATIC AND CUBIC RECIPROCITY. A Thesis Presented To Eastern Washington University, Cheney, Washington, In Partial Fulfillment of the Requirements for the Degree Master of Science. By Suzanne Rousseau, Spring 2012. THESIS OF SUZANNE ROUSSEAU APPROVED BY: DR. DALE GARRAWAY, GRADUATE STUDY COMMITTEE; DR. RON GENTLE, GRADUATE STUDY COMMITTEE; LIZ PETERSON, GRADUATE STUDY COMMITTEE. MASTERS THESIS. In presenting this thesis in partial fulfillment of the requirements for a master's degree at Eastern Washington University, I agree that the JFK Library shall make copies freely available for inspection. I further agree that copying of this project in whole or in part is allowable only for scholarly purposes. It is understood, however, that any copying or publication of this thesis for commercial purposes, or for financial gain, shall not be allowed without my written permission. Abstract. In this thesis, we seek to prove results about quadratic and cubic reciprocity in great detail. -

KTH Royal Institute of Technology on Integers, Primes and Unique

KTH Royal Institute of Technology, Department of Mathematics. Bachelor Thesis, SA104X. On Integers, Primes and Unique Factorization in Quadratic Fields. Author: Alice Hedenlund. Supervisor: Dan Laksov. May 18, 2013. Abstract. This thesis will deal with quadratic fields. The problem is to study such fields and their properties including, but not limited to, determining integers, finding primes and deciding which quadratic fields have unique factorization. The goal is to get familiar with these concepts and to provide a starting point for students with an interest in algebra to explore field extensions and integral closures in relation to elementary number theory. The reader will be assumed to have a basic knowledge of algebra and to be familiar with concepts such as groups, rings and fields. The necessary background material is covered in, for example, A First Course In Abstract Algebra by John B. Fraleigh. Some familiarity with basic number theory may be helpful, but is not necessary for the scope of this thesis. The questions posed in this thesis were answered by means of literature and discussions with fellow students and my supervisor. The first four sections will deal with basic concepts in algebra such as algebraic numbers, algebraic integers and prime numbers. This knowledge will then be applied to the subject of quadratic fields. The thesis is concluded with two sections about important cases of quadratic fields, Gaussian and Eisenstein. Contents: 1. Algebraic Numbers; 1.1. Algebraic Elements; 1.2. Algebraic Extensions; 2. Algebraic Integers; 3. Prime Numbers; 4. Factorization; 4.1.