Validation of Stereovision Camera System for 3D Strain Tracking

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


FINEPIX XP60 Series Owner’s Manual

BL03301-100 EN. DIGITAL CAMERA FINEPIX XP60 Series Owner’s Manual. Sections: Before You Begin; First Steps; Basic Photography and Playback; More on Photography; More on Playback; Movies; Connections; Menus; Technical Notes; Troubleshooting; Appendix. Thank you for your purchase of this product. This manual describes how to use your FUJIFILM digital camera and the supplied software. Be sure that you have read and understood its contents and the warnings in “For Your Safety” (P ii) before using the camera. For information on related products, visit our website at http://www.fujifilm.com/products/digital_cameras/index.html For Your Safety: IMPORTANT SAFETY INSTRUCTIONS • Read Instructions: All the safety and operating instructions should be read before the appliance is operated. • Retain Instructions: The safety and operating instructions should be retained for future reference. • Heed Warnings: All warnings on the appliance and in the operating instructions should be adhered to. Alternate Warnings: This video product is equipped with a three-wire grounding-type plug, a plug having a third (grounding) pin. This plug will only fit into a grounding-type power outlet. This is a safety feature. If you are unable to insert the plug into the outlet, contact your electrician to replace your obsolete outlet. Do not defeat the safety purpose … Power-Cord Protection: Power-supply cords should be routed so that they are not likely to be walked on or pinched by items placed upon or against them, paying particular attention to cords at plugs, convenience receptacles, and the point where they exit from the appliance. Accessories: Do not place this video product …

Finepix Z20fd Owner's Manual

BL00708-700(1) E. Owner’s Manual. Sections: Before You Begin; First Steps; Basic Photography and Playback; More on Photography; More on Playback; Movies; Connections; Menus; Technical Notes; Troubleshooting; Appendix. Thank you for your purchase of this product. This manual describes how to use your FUJIFILM FinePix Z20fd digital camera and the supplied software. Be sure that you have read and understood its contents before using the camera. For information on related products, visit our website at http://www.fujifilm.com/products/index.htm For Your Safety: IMPORTANT SAFETY INSTRUCTIONS • Read Instructions: All the safety and operating instructions should be read before the appliance is operated. • Retain Instructions: The safety and operating instructions should be retained for future reference. • Heed Warnings: All warnings on the appliance and in the operating in… Alternate Warnings: This video product is equipped with a three-wire grounding-type plug, a plug having a third (grounding) pin. This plug will only fit into a grounding-type power outlet. This is a safety feature. If you are unable to insert the plug into the outlet, contact your electrician to replace your obsolete … Water and Moisture: Do not use this video product near water, for example, near a bath tub, wash bowl, kitchen sink, or laundry tub, in a wet basement, or near a swimming pool, and the like. Power-Cord Protection: Power-supply cords should be routed so that … Antennas. Outdoor Antenna Grounding: If an outside antenna or cable system is connected to the video product, be sure the antenna or cable system is grounded so as to provide some protection against voltage surges and built-up static charges.

Fujifilm Finepix AX550 Digital Camera User Guide Manuals

BL01671-201 EN. DIGITAL CAMERA FINEPIX AX500 Series Owner’s Manual. Sections: Before You Begin; First Steps; Basic Photography and Playback; More on Photography; More on Playback; Movies; Connections; Menus; Technical Notes; Troubleshooting; Appendix. Thank you for your purchase of this product. This manual describes how to use your FUJIFILM digital camera and the supplied software. Be sure that you have read and understood its contents and the warnings in “For Your Safety” (P ii) before using the camera. For information on related products, visit our website at http://www.fujifilm.com/products/digital_cameras/index.html For Your Safety: IMPORTANT SAFETY INSTRUCTIONS • Read Instructions: All the safety and operating instructions should be read before the appliance is operated. • Retain Instructions: The safety and operating instructions should be retained for future reference. • Heed Warnings: All warnings on the appliance and in the operating instructions … Alternate Warnings: This video product is equipped with a three-wire grounding-type plug, a plug having a third (grounding) pin. This plug will only fit into a grounding-type power outlet. This is a safety feature. If you are unable to insert the plug into the outlet, contact your electrician to replace your obsolete outlet. Do not defeat the safety purpose of the grounding type plug. Power-Cord Protection: Power-supply cords should be routed so that they are not likely to be walked on or pinched by items placed upon or against them, paying particular attention to cords at plugs, convenience receptacles, and the point where they exit from the appliance. Accessories: Do not place this video product …

FINEPIX S8600 Series Owner's Manual

BL04301-101 EN. DIGITAL CAMERA FINEPIX S8600 Series Owner’s Manual. Sections: Before You Begin; First Steps; Basic Photography and Playback; More on Photography; More on Playback; Movies; Connections; Menus; Technical Notes; Troubleshooting; Appendix. For information on related products, visit our website at http://www.fujifilm.com/products/digital_cameras/index.html For Your Safety: Be sure to read these notes before using. Safety Notes • Make sure that you use your camera correctly. Read these Safety Notes and your Owner’s Manual carefully before use. • After reading these Safety Notes, store them in a safe place. About the Icons: The icons shown below are used in this document to indicate the severity of the injury or damage that can result if the information indicated by the icon is ignored and the product is used incorrectly as a result. WARNING: This icon indicates that death or serious injury can result if the information is ignored. This icon indicates that personal injury or material damage can result … WARNING: Do not allow water or foreign objects to enter the camera. If water or foreign objects get inside the camera, turn the camera off, remove the battery and disconnect and unplug the AC power adapter. Continued use of the camera can cause a fire or electric shock. • Contact your FUJIFILM dealer. Do not use in the bathroom or shower: Do not use the camera in the bathroom or shower. This can cause a fire or electric shock. Do not disassemble: Never attempt to disassemble or modify (never open the case). Failure to observe this precaution can cause fire or electric shock. Should the case break open as the result of a fall or other accident, do not touch the exposed parts.

3rd Annual Lucie Technical Awards 2017 Finalists & Winners

3RD ANNUAL LUCIE TECHNICAL AWARDS 2017 FINALISTS & WINNERS
CAMERA
BEST INSTANT CAMERA: *WINNER: Fujifilm instax SQUARE SQ10; Leica Sofort; Lomo Instant Automat Glass Magellan; MiNT SLR670-S Noir; Polaroid SNAP Touch Instant Digital Camera
BEST FIXED-LENS COMPACT CAMERA: *WINNER: Fujifilm X100F; Canon PowerShot G9 X Mark II; LUMIX DC-ZS70; Sony RX 100V
BEST ACTION CAMERA: *WINNER: Olympus TOUGH TG-5; FUJIFILM FinePix XP120; GoPro Hero5 Black; KODAK PIXPRO ORBIT360 4K; Nikon KeyMission 170; Ricoh …
BEST CAMERA BAG: *WINNER: Think Tank Photo Airport Advantage; Gitzo Century Traveler Messenger Bag; Lowepro Flipside 300 AW II; Manfrotto Pro Light 3N1-36; Manfrotto Pro Light Bumblebee-230; MindShift Gear PhotoCross™ 13; ThinkTank Photo Spectral™ 10; Think Tank Photo StreetWalker Rolling Backpack V2.0; Vanguard Alta Sky 51D
BEST TRIPOD: *WINNER: 3 Legged Thing Equinox Leo Carbon Fibre Tripod System & AirHed Switch; BENRO Slim Carbon Fiber TSL08CN00 Tripod; Gitzo GT3543XLS Series 3 Systematic Tripod XL; Vanguard Alta Pro 2+ 263AB100; Vanguard VEO 2 265CB Carbon Fiber
LIGHTING
BEST CONTINUOUS LIGHT SOURCE: *WINNER: ARRI SkyPanel S120-C; Fluotec AURALUX 100 LED Fresnel; Fotodiox PopSpot J-500; Hive Lighting Wasp 100-C™; Polaroid Flexible LED Lighting Panel with 4-Channel Remote Control; Rotolight AEOS
BEST SPEEDLIGHT: *WINNER: Metz Mecablitz M400; Canon MT-26EX-RT Macro Twin Lite; Godox Witstro AD200 Pocket Flash; Hähnel MODUS 600RT; Yongnuo YN686EX-RT TTL
SOFTWARE
BEST PHOTO EDITING SOFTWARE: *WINNER: Capture One Pro 10.1 …

Owner's Manual

BL03201-101 EN. DIGITAL CAMERA FINEPIX S4800 Series, FINEPIX S4700 Series, FINEPIX S4600 Series Owner’s Manual. Sections: Before You Begin; First Steps; Basic Photography and Playback; More on Photography; More on Playback; Movies; Connections; Menus; Technical Notes; Troubleshooting; Appendix. Thank you for your purchase of this product. This manual describes how to use your FUJIFILM digital camera and the supplied software. Be sure that you have read and understood its contents and the warnings in “For Your Safety” (P ii) before using the camera. For information on related products, visit our website at http://www.fujifilm.com/products/digital_cameras/index.html For Your Safety: IMPORTANT SAFETY INSTRUCTIONS • Read Instructions: All the safety and operating instructions should be read before the appliance is operated. • Retain Instructions: The safety and operating instructions should be retained for future reference. • Heed Warnings: All warnings on the appliance and in the operating in… … not defeat the safety purpose of the polarized plug. Alternate Warnings: This video product is equipped with a 3-wire grounding-type plug, a plug having a third (grounding) pin. This plug will only fit into a grounding-type power outlet. This is a safety feature. If you … This video product should never be placed near or over a radiator or heat register. Attachments: Do not use attachments not recommended by the video product manufacturer as they may cause hazards. Water and Moisture: Do not use this … An appliance and cart combination should be moved with care. Quick stops, excessive force, and uneven surfaces may cause the appliance and cart combination to overturn.

Annual Report 2010 Download Entire Report

FUJIFILM Holdings Corporation Annual Report 2010. For further information, please contact: IR Office, Corporate Planning Div., FUJIFILM Holdings Corporation, 7-3, Akasaka 9-chome, Minato-ku, Tokyo 107-0052, Japan. Tel: 81-3-6271-1111 URL: http://www.fujifilmholdings.com/ The body of this report is printed on FSC-certified paper. Printed in Japan. Fujifilm Group Corporate Philosophy: We will use leading-edge, proprietary technologies to provide top-quality products and services that contribute to the advancement of culture, science, technology and industry, as well as improved health and environmental protection in society. Our overarching aim is to help enhance the quality of life of people worldwide. Corporate Information: Date of Establishment: January 20, 1934. Stock Exchange Listings: Tokyo, Osaka, Nagoya. Capital: ¥40,363 million (as of March 31, 2010). Fiscal Year-end: March 31. Number of employees (Persons): 74,216 (as of March 31, 2010). Share Registrar: The Chuo Mitsui Trust and Banking Company, Limited, 33-1, Shiba 3-chome, Minato-ku, Tokyo 105-8574, Japan. (Chart fragments: Treasury Stock 25,978, 5.1%; Financial Institutions 206,906.) Fuji Photo Film Co., Ltd. (“Fuji Photo”), the predecessor of FUJIFILM Holdings Corporation (“Fujifilm” or “the Company”), was established in 1934 with the aim of realizing the domestic production of photographic films. Having carried out sustained efforts …

Fujifilm Finepix S1 Pro User's Manual

OWNER’S MANUAL. This manual will show you how to use your FUJIFILM DIGITAL CAMERA FinePix S1 Pro correctly. Please follow the instructions carefully. Sections: Basic Photography; Setup; Advanced Photography; Advanced Photography (Playback); Preference. BB11378-200(1) Warning: To prevent fire or shock hazard, do not expose the unit to rain or moisture. CAUTION: TO REDUCE THE RISK OF ELECTRIC SHOCK, DO NOT REMOVE COVER (OR BACK). NO USER-SERVICEABLE PARTS INSIDE. REFER SERVICING TO QUALIFIED SERVICE PERSONNEL. The graphical symbols are located on the bottom of the case. This symbol is intended to alert the user to the presence of uninsulated “dangerous voltage” within the product’s enclosure that may be of sufficient magnitude to constitute a risk of electric shock to persons. This symbol is intended to alert the user to the presence of important operating and maintenance (servicing) instructions in the literature accompanying the appliance. For customers in the U.S.A. FCC Statement: This device complies with part 15 of the FCC Rules. Operation is subject to the following two conditions: (1) This device may not cause harmful interference, and (2) this device must accept any interference received, including interference that may cause undesired operation. CAUTION: This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications.

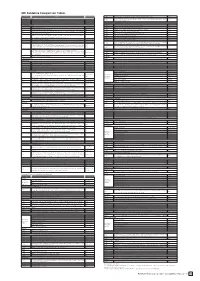

GRI Guideline Comparison Tables

GRI Guideline Comparison Tables (Data and Information). Columns: Item; Indicators; Pages in this Report.
1. Strategy and Profile
1.1 Statement from the most senior decision-maker of the organization (e.g., CEO, chair, or equivalent senior position) about the relevance of sustainability to the organization and its strategy. Pages: 2-3
1.2 Description of key impacts, risks, and opportunities. Pages: 28-29
2. Organizational Profile
2.1 Name of the organization. Pages: Editorial Policy, 94
2.2 Primary brands, products, and/or services. Pages: 94
2.3 Operational structure of the organization, including main divisions, operating companies, subsidiaries, and joint ventures. Pages: 95
2.4 Location of organization’s headquarters. Pages: 95
2.5 Number of countries where the organization operates, and names of countries with either major operations or that are specifically relevant to the sustainability issues covered in the report. Pages: 95
Environmental Aspects
○EN14 Strategies, current actions, and future plans for managing impacts on biodiversity. Pages: 28, 67-69
○EN15 Number of IUCN Red List species and national conservation list species with habitats in areas affected by operations, by level of extinction risk. Pages: None
Emissions, Effluents, and Waste
◎EN16 Total direct and indirect greenhouse gas emissions by weight. Pages: 86-90 >>> Environmental monitoring
◎EN17 Other relevant indirect greenhouse gas emissions by weight. Pages: 86-90
◎EN18 Initiatives to reduce greenhouse gas emissions and reductions achieved. Pages: 16-19 >>> Installing plumbing and tanks above ground
◎EN19 Emissions of ozone-depleting substances by weight. Pages: 86-90
◎EN20 NOx, SOx, and other significant air emissions by type and weight. Pages: 86-90
◎EN21 Total water discharge by quality and destination. Pages: 86-90 >>> Legal compliance and reports on complaints

FINEPIX F200EXR Owner's Manual

BL00829-201 E. Owner’s Manual. Sections: Before You Begin; First Steps; Basic Photography and Playback; More on Photography; More on Playback; Movies; Connections; Menus; Technical Notes; Troubleshooting; Appendix. Thank you for your purchase of this product. This manual describes how to use your FUJIFILM FinePix F200EXR digital camera and the supplied software. Be sure that you have read and understood its contents before using the camera. For information on related products, visit our website at http://www.fujifilm.com/products/index.html For Your Safety: IMPORTANT SAFETY INSTRUCTIONS • Read Instructions: All the safety and operating instructions should be read before the appliance is operated. • Retain Instructions: The safety and operating instructions should be retained for future reference. • Heed Warnings: All warnings on the appliance and in the operating in… Alternate Warnings: This video product is equipped with a three-wire grounding-type plug, a plug having a third (grounding) pin. This plug will only fit into a grounding-type power outlet. This is a safety feature. If you are unable to insert the plug into the outlet, contact your electri… Water and Moisture: Do not use this video product near water, for example, near a bath tub, wash bowl, kitchen sink, or laundry tub, in a wet basement, or near a swimming pool, and the like. Power-Cord Protection: Power-sup… Antennas. Outdoor Antenna Grounding: If an outside antenna or cable system is connected to the video product, be sure the antenna or cable system is grounded so as to provide some protection against voltage surges and built-up static charges.

Remote Sensing of Clouds and Calibration Using Fujifilm Finepix S1 Digital Camera

Remote sensing of clouds and calibration using Fujifilm Finepix S1 digital camera. Clement Li (1), Stephen E. Schwartz (2), Viviana Vladutescu (3), Antonio Aguirre (3). (1) City College of the City University of New York, NY, NY 10031; (2) Brookhaven National Laboratory, Upton, NY 11973; (3) New York City College of Technology, Brooklyn NY 11201.
• Clouds exert important radiative effects on climate. • Clouds are commonly characterized by cloud fraction in measurements and models. • We undertook high resolution photography from the surface to examine cloud structure at high resolution.
Fujifilm Finepix S1 digital camera specifications: Focal length = 215 mm, f# = 5.6, Effective aperture diameter = 38.4 mm, 35 mm equivalent focal length = 1200 mm. Output: 3456 x 4608 pixels; 16 bit of red, green and blue. Rayleigh criterion, µrad (mm @ 1 km): 29 at λ = 450 nm, 34 at λ = 532 nm, 41 at λ = 650 nm. Pixel FOV: 6.3 µrad (mm @ 1 km).
Resolution Tests: Set up of camera for the resolution test, target at 1 km. Line profile of red channel intensity over the 4 cm bars of target at 1 km.
Cloud fraction (images used: cropped original, 3456 x 3456 pixels; Red/(Red+Blue), RRB, image): • Cloud fractions of the same image are highly arbitrary and depend on the Red/(Red+Blue), RRB, threshold. • Cloud fraction of Δ54% with Δ0.015 threshold. • As resolution decreases, cloud fraction tends to increase if the threshold is below the mean, and vice versa.
Performance Tests (Cloud Pixels, Cloud Fraction). Goal: • Measure fractal dimensions to associate with clouds and its cloud … • Field of view of each image is 22 x 29 mrad.
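The optical figures quoted in this snippet can be cross-checked with a few lines of arithmetic. The sketch below is a consistency check only, not the authors' calculation: it assumes the listed "Rayleigh criterion" values correspond to 2.44 λ/D (the full angular diameter of the Airy disk, twice the textbook 1.22 λ/D), because that factor reproduces the quoted 29, 34 and 41 µrad. The function and variable names are hypothetical.

```python
# Consistency check of the camera figures quoted in the snippet above.
# Assumption: the quoted diffraction values follow 2.44 * lambda / D,
# inferred from the numbers rather than stated in the source.

FOCAL_LENGTH_MM = 215.0
F_NUMBER = 5.6
APERTURE_MM = 38.4        # effective aperture diameter quoted in the snippet
PIXEL_FOV_URAD = 6.3      # per-pixel field of view quoted in the snippet


def airy_diameter_urad(wavelength_nm, aperture_mm):
    """Angular diameter of the Airy disk, 2.44 * lambda / D, in microradians."""
    return 2.44 * (wavelength_nm * 1e-9) / (aperture_mm * 1e-3) * 1e6


def field_of_view_mrad(pixels, pixel_fov_urad=PIXEL_FOV_URAD):
    """Field of view spanned by a row or column of pixels, in milliradians."""
    return pixels * pixel_fov_urad * 1e-3


# Aperture diameter implied by focal length and f-number.
aperture_check = round(FOCAL_LENGTH_MM / F_NUMBER, 1)

# Diffraction values at the three quoted wavelengths, rounded to whole microradians.
limits = {wl: round(airy_diameter_urad(wl, APERTURE_MM)) for wl in (450, 532, 650)}

# Image field of view for the 3456 x 4608 pixel output.
fov = (round(field_of_view_mrad(3456)), round(field_of_view_mrad(4608)))

print(aperture_check, limits, fov)
```

Under these assumptions the diffraction scale is roughly five to seven times the 6.3 µrad pixel field of view, which suggests the optics rather than the pixel pitch limit the resolvable detail.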

Preliminary Study on Medium Format Cameras Comparison

PRELIMINARY STUDY ON MEDIUM FORMAT CAMERAS COMPARISON VIA PHOTO RESPONSE NON-UNIFORMITY by EDGAR FRITZ, B.A., University of California, San Diego, 2001. A thesis submitted to the Faculty of the Graduate School of the University of Colorado in partial fulfillment of the requirements for the degree of Master of Science, Recording Arts Program, 2019. This thesis for the Master of Science degree by Edgar Fritz has been approved for the Recording Arts Program by Catalin Grigoras, Chair; Jeff M. Smith; Carol Golemboski. Date: December 14, 2019. Fritz, Edgar (M.S., Recording Arts Program). Preliminary Study on Medium Format Cameras Comparison Via Photo Response Non-Uniformity. Thesis directed by Associate Professor Catalin Grigoras. ABSTRACT: This is a preliminary study on Medium Format cameras. This study utilizes Photo Response Non-Uniformity (PRNU), which is part of the background noise inherent in all digital camera sensors, to compare Medium Format cameras of the same make and model. PRNU is comprised of tiny imperfections at the pixel level and is sometimes referred to as the camera’s “fingerprint.” This background noise can be extracted and utilized to compare a reference image with a source camera. Two different methods of PRNU extraction were utilized in this study, a Gaussian method and a Wiener method. The inter-variability and intra-variability between a reference image and sets of natural scene images was used to compare cameras. The form and content of this abstract are approved. I recommend its publication. Approved: Catalin Grigoras. To my wife and kids, for always encouraging me to reach my goals.