Notes on Von Neumann Algebras

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Quasi-Expectations and Amenable Von Neumann

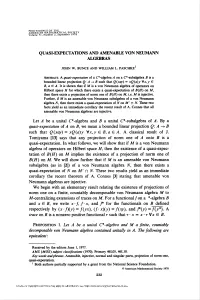

Proceedings of the American Mathematical Society, Volume 71, Number 2, September 1978. QUASI-EXPECTATIONS AND AMENABLE VON NEUMANN ALGEBRAS. JOHN W. BUNCE AND WILLIAM L. PASCHKE.

Abstract. A quasi-expectation of a C*-algebra $A$ on a C*-subalgebra $B$ is a bounded linear projection $Q: A \to B$ such that $Q(xay) = xQ(a)y$ for all $x, y \in B$, $a \in A$. It is shown that if $M$ is a von Neumann algebra of operators on a Hilbert space $H$ for which there exists a quasi-expectation of $B(H)$ on $M$, then there exists a projection of norm one of $B(H)$ on $M$, i.e. $M$ is injective. Further, if $M$ is an amenable von Neumann subalgebra of a von Neumann algebra $N$, then there exists a quasi-expectation of $N$ on $M' \cap N$. These two facts yield as an immediate corollary the recent result of A. Connes that all amenable von Neumann algebras are injective.

Let $A$ be a unital C*-algebra and $B$ a unital C*-subalgebra of $A$. By a quasi-expectation of $A$ on $B$, we mean a bounded linear projection $Q: A \to B$ such that $Q(xay) = xQ(a)y$ for all $x, y \in B$, $a \in A$. A classical result of J. Tomiyama [13] says that any projection of norm one of $A$ onto $B$ is a quasi-expectation. In what follows, we will show that if $M$ is a von Neumann algebra of operators on a Hilbert space $H$, then the existence of a quasi-expectation of $B(H)$ on $M$ implies the existence of a projection of norm one of $B(H)$ on $M$.
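A finite-dimensional illustration, not taken from the paper, may make the defining identity concrete. Take $A = M_n(\mathbb{C})$ and let $B$ be the subalgebra of diagonal matrices; the map that keeps the diagonal of a matrix and zeroes everything else is a projection of $A$ onto $B$. The sketch below checks numerically that it satisfies $Q(xay) = xQ(a)y$ for diagonal $x, y$, that it is idempotent, and that it does not increase the operator norm on a sample matrix. The helper name `diag_expectation`, the size `n = 4`, and the random test data are assumptions made for this example.

```python
import numpy as np

def diag_expectation(a):
    """Map a square matrix to its diagonal part (off-diagonal entries set to zero)."""
    return np.diag(np.diag(a))

rng = np.random.default_rng(0)
n = 4  # illustrative size, an assumption of this sketch

# a is an arbitrary element of A = M_n(C); x and y lie in the diagonal subalgebra B.
a = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
x = np.diag(rng.standard_normal(n) + 1j * rng.standard_normal(n))
y = np.diag(rng.standard_normal(n) + 1j * rng.standard_normal(n))

# Bimodule identity Q(x a y) = x Q(a) y.
bimodule_ok = np.allclose(diag_expectation(x @ a @ y), x @ diag_expectation(a) @ y)

# Q is a projection: applying it twice changes nothing.
idempotent_ok = np.allclose(diag_expectation(diag_expectation(a)), diag_expectation(a))

# On this sample, Q does not increase the operator (spectral) norm.
contractive_ok = np.linalg.norm(diag_expectation(a), 2) <= np.linalg.norm(a, 2) + 1e-12

print(bimodule_ok, idempotent_ok, contractive_ok)
```

A numerical check on one random matrix is only a sanity test, not a proof; it is meant to show what the identity says in the simplest setting.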

Math 261Y: Von Neumann Algebras (Lecture 5)

Math 261y: von Neumann Algebras (Lecture 5), September 9, 2011.

In this lecture, we will state and prove von Neumann's double commutant theorem. First, let's establish a bit of notation. Let $V$ be a Hilbert space, and let $B(V)$ denote the algebra of bounded operators on $V$. We will consider several topologies on $B(V)$:

(1) The norm topology, which has a subbasis (in fact, a basis) of open sets given by $\{x \in B(V) : \|x - x_0\| < \epsilon\}$, where $x_0 \in B(V)$ and $\epsilon$ is a positive real number.

(2) The strong topology, which has a subbasis of open sets given by $\{x \in B(V) : \|x(v) - x_0(v)\| < \epsilon\}$, where $x_0 \in B(V)$, $v \in V$, and $\epsilon$ is a positive real number.

(3) The weak topology, which has a subbasis of open sets given by $\{x \in B(V) : |(x(v) - x_0(v), w)| < \epsilon\}$, where $x_0 \in B(V)$, $v, w \in V$, and $\epsilon$ is a positive real number.

Each of these topologies is coarser than the previous one. That is, we have norm convergence $\Rightarrow$ strong convergence $\Rightarrow$ weak convergence.

Warning 1. With respect to the norm topology, $B(V)$ is a metric space, so its topology is determined by the collection of convergent sequences. The strong and weak topologies do not have this property in general. However, the unit ball of $B(V)$ is metrizable in either topology if $V$ is a separable Hilbert space.

Warning 2. The multiplication map $B(V) \times B(V) \to B(V)$, $(x, y) \mapsto xy$, is not continuous with respect to either the strong or weak topology.
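The gap between norm and strong convergence can be seen in a standard example that is not part of the lecture excerpt: on $\ell^2$, let $P_n$ be the projection onto the first $n$ coordinates. Then $P_n v \to v$ for every fixed $v$, so $P_n \to I$ strongly, while $\|I - P_n\| = 1$ for every $n$. The sketch below imitates this inside a finite window of `N` coordinates (an assumption, since a computer cannot hold all of $\ell^2$); the vector `v` with entries $1/k$ and the helper names are also illustrative choices.

```python
import numpy as np

N = 2000  # size of the finite window standing in for l^2 (assumption)
v = 1.0 / np.arange(1, N + 1)  # a square-summable test vector, v_k = 1/k

def tail_diagonal(n):
    """Diagonal entries of I - P_n: zero on the first n coordinates, one afterwards."""
    d = np.ones(N)
    d[:n] = 0.0
    return d

def strong_error(n):
    """||(I - P_n) v||, the quantity that controls strong convergence at v."""
    return np.linalg.norm(tail_diagonal(n) * v)

def norm_error(n):
    """||I - P_n||; for a diagonal operator this is the largest |diagonal entry|."""
    return np.max(np.abs(tail_diagonal(n)))

for n in (10, 100, 1000):
    print(n, strong_error(n), norm_error(n))
```

The second column shrinks as `n` grows, while the third stays at one for every `n < N`, which is the finite-window shadow of strong convergence without norm convergence.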

Von Neumann Algebras Form a Model for the Quantum Lambda Calculus∗

Von Neumann Algebras form a Model for the Quantum Lambda Calculus. Kenta Cho and Abraham Westerbaan, Institute for Computing and Information Sciences, Radboud University, Nijmegen, the Netherlands. {K.Cho,awesterb}@cs.ru.nl

Abstract. We present a model of Selinger and Valiron's quantum lambda calculus based on von Neumann algebras, and show that the model is adequate with respect to the operational semantics.

1998 ACM Subject Classification: F.3.2 Semantics of Programming Languages. Keywords and phrases: quantum lambda calculus, von Neumann algebras.

1 Introduction. In 1925, Heisenberg realised, pondering upon the problem of the spectral lines of the hydrogen atom, that a physical quantity such as the x-position of an electron orbiting a proton is best described not by a real number but by an infinite array of complex numbers [12]. Soon afterwards, Born and Jordan noted that these arrays should be multiplied as matrices are [3]. Of course, multiplying such infinite matrices may lead to mathematically dubious situations, which spurred von Neumann to replace the infinite matrices by operators on a Hilbert space [44]. He organised these into rings of operators [25], now called von Neumann algebras, and thereby set off an explosion of research (also into related structures such as Jordan algebras [13], orthomodular lattices [2], C*-algebras [34], AW*-algebras [17], order unit spaces [14], Hilbert C*-modules [28], operator spaces [31], effect algebras [8], ...), which continues even to this day. One current line of research (with old roots [6, 7, 9, 19]) is the study of von Neumann algebras from a categorical perspective (see e.g.

Functional Analysis Lecture Notes Chapter 2. Operators on Hilbert Spaces

FUNCTIONAL ANALYSIS LECTURE NOTES. CHAPTER 2. OPERATORS ON HILBERT SPACES. CHRISTOPHER HEIL.

1. Elementary Properties and Examples. First recall the basic definitions regarding operators.

Definition 1.1 (Continuous and Bounded Operators). Let $X$, $Y$ be normed linear spaces, and let $L: X \to Y$ be a linear operator.

(a) $L$ is continuous at a point $f \in X$ if $f_n \to f$ in $X$ implies $Lf_n \to Lf$ in $Y$.

(b) $L$ is continuous if it is continuous at every point, i.e., if $f_n \to f$ in $X$ implies $Lf_n \to Lf$ in $Y$ for every $f$.

(c) $L$ is bounded if there exists a finite $K \ge 0$ such that $\|Lf\| \le K \|f\|$ for all $f \in X$. Note that $\|Lf\|$ is the norm of $Lf$ in $Y$, while $\|f\|$ is the norm of $f$ in $X$.

(d) The operator norm of $L$ is $\|L\| = \sup_{\|f\| = 1} \|Lf\|$.

(e) We let $\mathcal{B}(X, Y)$ denote the set of all bounded linear operators mapping $X$ into $Y$, i.e., $\mathcal{B}(X, Y) = \{L: X \to Y : L \text{ is bounded and linear}\}$. If $X = Y$ then we write $\mathcal{B}(X) = \mathcal{B}(X, X)$.

(f) If $Y = \mathbb{F}$ then we say that $L$ is a functional. The set of all bounded linear functionals on $X$ is the dual space of $X$, and is denoted $X' = \mathcal{B}(X, \mathbb{F}) = \{L: X \to \mathbb{F} : L \text{ is bounded and linear}\}$.

We saw in Chapter 1 that, for a linear operator, boundedness and continuity are equivalent.
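For a matrix acting between Euclidean spaces, the operator norm in (d) can be explored numerically. The sketch below, which is an illustration and not part of Heil's notes, estimates $\sup_{\|f\| = 1} \|Lf\|$ by sampling random unit vectors and compares the estimate with the largest singular value, which is the exact value for the Euclidean norm. The function name `operator_norm_by_sampling`, the sample count, and the example matrix are assumptions.

```python
import numpy as np

def operator_norm_by_sampling(L, samples=20000, seed=0):
    """Lower estimate of sup ||L f|| over unit vectors f, from random unit vectors."""
    rng = np.random.default_rng(seed)
    f = rng.standard_normal((L.shape[1], samples))
    f /= np.linalg.norm(f, axis=0)  # normalise each column so that ||f|| = 1
    return np.max(np.linalg.norm(L @ f, axis=0))

L = np.array([[3.0, 0.0],
              [4.0, 5.0]])

exact = np.linalg.norm(L, 2)  # largest singular value of L
estimate = operator_norm_by_sampling(L)

print(exact, estimate)
```

Sampling can only approach the supremum from below, so `estimate` never exceeds `exact`. Every sampled ratio $\|Lf\| / \|f\|$ is also bounded by `exact`, which is the smallest constant $K$ that works in (c).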

Quantum Mechanics, Schrodinger Operators and Spectral Theory

Quantum mechanics, Schrödinger operators and spectral theory. Spectral theory of Schrödinger operators has been my original field of expertise. It is a wonderful mix of functional analysis, PDE and Fourier analysis. In quantum mechanics, every physical observable is described by a self-adjoint operator, an infinite dimensional symmetric matrix. For example, $-\Delta$ is a self-adjoint operator in $L^2(\mathbb{R}^d)$ when defined on an appropriate domain (the Sobolev space $H^2$). In quantum mechanics it corresponds to a free particle, just traveling in space. What does it mean, exactly? Well, in quantum mechanics, the position of a particle is described by a wave function, $\phi(x, t) \in L^2(\mathbb{R}^d)$. The physical meaning of the wave function is that the probability to find the particle in a region $\Omega$ at time $t$ is equal to $\int_\Omega |\phi(x, t)|^2 \, dx$. You can't know for sure where the particle is. If initially the wave function is given by $\phi(x, 0) = \phi_0(x)$, the Schrödinger equation $i \partial_t \phi = -\Delta \phi$ says that its evolution is given by $\phi(x, t) = e^{i \Delta t} \phi_0$. But how to compute what $e^{i \Delta t}$ is? This is where the Fourier transform comes in handy. Differentiation becomes multiplication on the Fourier transform side, and so

$$e^{i \Delta t} \phi_0(x) = \int_{\mathbb{R}^d} e^{i k x - i |k|^2 t} \, \hat{\phi}_0(k) \, dk,$$

where $\hat{\phi}_0(k) = \int_{\mathbb{R}^d} e^{-i k x} \phi_0(x) \, dx$ is the Fourier transform of $\phi_0$. I omitted some $\pi$'s since they do not change the picture. Stationary phase methods lead to very precise estimates on free Schrödinger evolution.
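The Fourier recipe above translates directly into a few lines of numerical code. The following is a minimal one-dimensional sketch, assuming a periodic grid wide enough that the wave function stays negligible at the boundary; it multiplies the discrete Fourier transform of $\phi_0$ by $e^{-i |k|^2 t}$ and transforms back. The function name `free_evolution`, the grid, and the Gaussian initial state are assumptions chosen for illustration.

```python
import numpy as np

def free_evolution(phi0, dx, t):
    """Evolve phi0 under the free Schrodinger equation i d/dt phi = -phi'' for time t.

    Uses the discrete Fourier transform on a periodic grid with spacing dx.
    """
    k = 2.0 * np.pi * np.fft.fftfreq(phi0.size, d=dx)  # angular wavenumbers of the grid
    phi0_hat = np.fft.fft(phi0)
    # On the Fourier side, the evolution is multiplication by exp(-i k^2 t).
    return np.fft.ifft(np.exp(-1j * k**2 * t) * phi0_hat)

# Illustrative grid and initial state (assumptions of this sketch).
x = np.linspace(-40.0, 40.0, 2048, endpoint=False)
dx = x[1] - x[0]
phi0 = np.exp(-x**2).astype(complex)

phi_t = free_evolution(phi0, dx, t=1.0)

# Total probability mass (up to normalisation) before and after the evolution.
mass_before = np.sum(np.abs(phi0) ** 2) * dx
mass_after = np.sum(np.abs(phi_t) ** 2) * dx

print(mass_before, mass_after, np.max(np.abs(phi_t)))
```

Because the multiplier has modulus one, the total mass is conserved, while the peak of $|\phi|$ drops as the wave packet spreads.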

Algebra of Operators Affiliated with a Finite Type I Von Neumann Algebra

UNIVERSITATIS IAGELLONICAE ACTA MATHEMATICA, doi: 10.4467/20843828AM.16.005.5377, 53 (2016), 39–57. ALGEBRA OF OPERATORS AFFILIATED WITH A FINITE TYPE I VON NEUMANN ALGEBRA, by Piotr Niemiec and Adam Wegert.

Abstract. The aim of the paper is to prove that the ∗-algebra of all (closed densely defined linear) operators affiliated with a finite type I von Neumann algebra admits a unique center-valued trace, which turns out to be, in a sense, normal. It is also demonstrated that for no other von Neumann algebras similar constructions can be performed.

1. Introduction. With every von Neumann algebra A one can associate the set Aff(A) of operators (unbounded, in general) which are affiliated with A. In [11] Murray and von Neumann discovered that, surprisingly, Aff(A) turns out to be a unital ∗-algebra when A is finite. This was in fact the first example of a rich set of unbounded operators in which one can define algebraic binary operations in a natural manner. This concept was later adapted by Segal [17, 18], who distinguished a certain class of unbounded operators (namely, measurable with respect to a fixed normal faithful semi-finite trace) affiliated with an arbitrary semi-finite von Neumann algebra and equipped it with a structure of a ∗-algebra (for an alternative proof see e.g. [12] or §2 in Chapter IX of [21]). More detailed investigations of algebras of the form Aff(A) were initiated by a work of Stone [19], who described their models for commutative A in terms of unbounded continuous functions densely defined on the Gelfand spectrum X of A.

Operator Algebras: an Informal Overview

OPERATOR ALGEBRAS: AN INFORMAL OVERVIEW. FERNANDO LLEDÓ. arXiv:0901.0232v1 [math.OA] 2 Jan 2009.

Contents

1. Introduction
2. Operator algebras
2.1. What are operator algebras?
2.2. Differences and analogies between C*- and von Neumann algebras
2.3. Relevance of operator algebras
3. Different ways to think about operator algebras
3.1. Operator algebras as non-commutative spaces
3.2. Operator algebras as a natural universe for spectral theory
3.3. Von Neumann algebras as symmetry algebras
4. Some classical results
4.1. Operator algebras in functional analysis
4.2. Operator algebras in harmonic analysis
4.3. Operator algebras in quantum physics
References

Abstract. In this article we give a short and informal overview of some aspects of the theory of C*- and von Neumann algebras. We also mention some classical results and applications of these families of operator algebras.

1. Introduction. Any introduction to the theory of operator algebras, a subject that has deep interrelations with many mathematical and physical disciplines, will miss out important elements of the theory, and this introduction is no exception. The purpose of this article is to give a brief and informal overview on C*- and von Neumann algebras, which are the main actors of this summer school. We will also mention some of the classical results in the theory of operator algebras that have been crucial for the development of several areas in mathematics and mathematical physics. Being an overview, we cannot provide details. Precise definitions, statements and examples can be found in [1] and references cited therein.

Set Theory and Von Neumann Algebras

SET THEORY AND VON NEUMANN ALGEBRAS. ASGER TÖRNQUIST AND MARTINO LUPINI.

Introduction. The aim of the lectures is to give a brief introduction to the area of von Neumann algebras to a typical set theorist. The ideal intended reader is a person in the field of (descriptive) set theory, who works with group actions and equivalence relations, and who is familiar with the rudiments of ergodic theory, and perhaps also orbit equivalence. This should not intimidate readers with a different background: most notions we use in these notes will be defined. The reader is assumed to know a small amount of functional analysis. For those who feel a need to brush up on this, we recommend consulting [Ped89].

What is the motivation for giving these lectures, you ask. The answer is two-fold: on the one hand, there is a strong connection between (non-singular) group actions, countable Borel equivalence relations and von Neumann algebras, as we will see in Lecture 3 below. In the past decade, the knowledge about this connection has exploded, in large part due to the work of Sorin Popa and his many collaborators. Von Neumann algebraic techniques have led to many discoveries that are also of significance for the actions and equivalence relations themselves, for instance, of new cocycle superrigidity theorems. On the other hand, the increased understanding of the connection between objects of ergodic theory, and their related von Neumann algebras, has also made it possible to construct large families of non-isomorphic von Neumann algebras, which in turn has made it possible to prove non-classification type results for the isomorphism relation for various types of von Neumann algebras (and in particular, factors).

Approximation Properties for Group C*-Algebras and Group Von Neumann Algebras

Transactions of the American Mathematical Society, Volume 344, Number 2, August 1994. APPROXIMATION PROPERTIES FOR GROUP C*-ALGEBRAS AND GROUP VON NEUMANN ALGEBRAS. UFFE HAAGERUP AND JON KRAUS.

Abstract. Let $G$ be a locally compact group, let $C^*(G)$ (resp. $VN(G)$) be the C*-algebra (resp. the von Neumann algebra) associated with the left regular representation $\lambda$ of $G$, let $A(G)$ be the Fourier algebra of $G$, and let $M_0A(G)$ be the set of completely bounded multipliers of $A(G)$. With the completely bounded norm, $M_0A(G)$ is a dual space, and we say that $G$ has the approximation property (AP) if there is a net $\{u_\alpha\}$ of functions in $A(G)$ (with compact support) such that $u_\alpha \to 1$ in the associated weak*-topology. In particular, $G$ has the AP if $G$ is weakly amenable ($\Leftrightarrow$ $A(G)$ has an approximate identity that is bounded in the completely bounded norm). For a discrete group $\Gamma$, we show that $\Gamma$ has the AP $\Leftrightarrow$ $C^*(\Gamma)$ has the slice map property for subspaces of any C*-algebra $\Leftrightarrow$ $VN(\Gamma)$ has the slice map property for $\sigma$-weakly closed subspaces of any von Neumann algebra (Property $S_\sigma$). The semidirect product of weakly amenable groups need not be weakly amenable. We show that the larger class of groups with the AP is stable with respect to semidirect products, and more generally, this class is stable with respect to group extensions. We also obtain some results concerning crossed products. For example, we show that the crossed product $M \otimes_\alpha G$ of a von Neumann algebra $M$ with Property $S_\sigma$ by a group $G$ with the AP also has Property $S_\sigma$.

Functional Analysis (WS 19/20), Problem Set 8 (Spectrum And

Functional Analysis (WS 19/20), Problem Set 8 (spectrum and adjoints on Hilbert spaces).

In what follows, let $H$ be a complex Hilbert space. Let $T: H \to H$ be a bounded linear operator. We write $T^*: H \to H$ for the adjoint of $T$, defined by $\langle Tx, y \rangle = \langle x, T^* y \rangle$. This operator exists and is uniquely determined by the Riesz Representation Theorem. The spectrum of $T$ is the set $\sigma(T) = \{\lambda \in \mathbb{C} : T - \lambda I \text{ does not have a bounded inverse}\}$. The resolvent set of $T$ is $\rho(T) = \mathbb{C} \setminus \sigma(T)$.

Basic facts on adjoint operators

R1. The adjoint $T^*$ exists and is uniquely determined.

R2. The adjoint $T^*$ is a bounded linear operator and $\|T^*\| = \|T\|$. Moreover, $\|T^* T\| = \|T\|^2$.

R3. Taking adjoints is an involution: $(T^*)^* = T$.

R4. Adjoints commute with the sum: $(T_1 + T_2)^* = T_1^* + T_2^*$.

R5. For $\lambda \in \mathbb{C}$ we have $(\lambda T)^* = \bar{\lambda} T^*$.

R6. Let $T$ be a bounded invertible operator. Then $(T^*)^{-1} = (T^{-1})^*$.

R7. Let $T_1, T_2$ be bounded operators. Then $(T_1 T_2)^* = T_2^* T_1^*$.

R8. We have the following relationship between the kernel and image of $T$ and $T^*$: $\ker T^* = (\operatorname{im} T)^\perp$ and $(\ker T^*)^\perp = \overline{\operatorname{im} T}$. It will be helpful to prove that if $M \subset H$ is a linear subspace, then $\overline{M} = M^{\perp\perp}$. This covers all previous results, such as: if $N$ is a finite dimensional linear subspace then $N = N^{\perp\perp}$ (because $N$ is closed).

Computation of adjoints

M1. Let $A: \mathbb{C}^n \to \mathbb{C}^n$ be a complex matrix. Find $A^*$.

M2. Let $H = \ell^2(\mathbb{Z})$. For $x = (\ldots, x_{-2}, x_{-1}, x_0, x_1, x_2, \ldots) \in H$ we define the right shift operator by $(Rx)_k = x_{k-1}$. Find $\|R\|$, $R^{-1}$ and $R^*$.
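Several of the facts R1 to R8 can be sanity-checked numerically in finite dimensions. The sketch below, which is an illustration and not part of the problem set, relies on the standard fact that on $\mathbb{C}^n$ with the usual inner product the adjoint of a matrix is its conjugate transpose; it then tests the defining identity together with R2, R5 and R7 on random matrices. The helper names `adjoint`, `inner` and `random_complex`, the dimension, and the scalar `lam` are assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 5  # illustrative dimension (assumption)

def random_complex(*shape):
    """Random complex array with independent Gaussian real and imaginary parts."""
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

def adjoint(T):
    """Adjoint on C^n with the standard inner product: the conjugate transpose."""
    return T.conj().T

def inner(x, y):
    """Inner product <x, y>, linear in x and conjugate-linear in y."""
    return np.vdot(y, x)

T1, T2 = random_complex(n, n), random_complex(n, n)
x, y = random_complex(n), random_complex(n)
lam = 2.0 - 3.0j

# Defining identity <T x, y> = <x, T* y>.
defining_ok = np.isclose(inner(T1 @ x, y), inner(x, adjoint(T1) @ y))

# R2: ||T*|| = ||T|| and ||T* T|| = ||T||^2 in the operator (spectral) norm.
r2_ok = (np.isclose(np.linalg.norm(adjoint(T1), 2), np.linalg.norm(T1, 2))
         and np.isclose(np.linalg.norm(adjoint(T1) @ T1, 2), np.linalg.norm(T1, 2) ** 2))

# R5: (lam T)* = conj(lam) T*.
r5_ok = np.allclose(adjoint(lam * T1), np.conj(lam) * adjoint(T1))

# R7: (T1 T2)* = T2* T1*.
r7_ok = np.allclose(adjoint(T1 @ T2), adjoint(T2) @ adjoint(T1))

print(defining_ok, r2_ok, r5_ok, r7_ok)
```

Passing checks on random matrices does not replace the proofs asked for in the problem set, but a failing check would point to a slip in a conjectured formula, for instance forgetting the conjugate in R5.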

Categorical Characterizations of Operator-Valued Measures

Categorical characterizations of operator-valued measures. Frank Roumen, Inst. for Mathematics, Astrophysics and Particle Physics (IMAPP), Radboud University Nijmegen.

The most general type of measurement in quantum physics is modeled by a positive operator-valued measure (POVM). Mathematically, a POVM is a generalization of a measure, whose values are not real numbers, but positive operators on a Hilbert space. POVMs can equivalently be viewed as maps between effect algebras or as maps between algebras for the Giry monad. We will show that this equivalence is an instance of a duality between two categories. In the special case of continuous POVMs, we obtain two equivalent representations in terms of morphisms between von Neumann algebras.

1 Introduction. The logic governing quantum measurements differs from classical logic, and it is still unknown which mathematical structure is the best description of quantum logic. The first attempt for such a logic was discussed in the famous paper [2], in which Birkhoff and von Neumann propose to use the orthomodular lattice of projections on a Hilbert space. However, this approach has been criticized for its lack of generality, see for instance [22] for an overview of experiments that do not fit in the Birkhoff-von Neumann scheme. The operational approach to quantum physics generalizes the approach based on projective measurements. In this approach, all measurements should be formulated in terms of the outcome statistics of experiments. Thus the logical and probabilistic aspects of quantum mechanics are combined into a unified description. The basic concept of operational quantum mechanics is an effect on a Hilbert space, which is a positive operator lying below the identity.

Von Neumann Algebras

VON NEUMANN ALGEBRAS. ADRIAN IOANA. These are lecture notes from a topics graduate class taught at UCSD in Winter 2019.

1. Review of functional analysis. In this section we state the results that we will need from functional analysis. All of these are stated and proved in [Fo99, Chapters 4-7]. Convention. All vector spaces considered below are over $\mathbb{C}$.

1.1. Normed vector spaces.

Definition 1.1. A normed vector space is a vector space $X$ over $\mathbb{C}$ together with a map $\|\cdot\| : X \to [0, \infty)$ which is a norm, i.e., it satisfies that

• $\|x + y\| \le \|x\| + \|y\|$, for all $x, y \in X$,

• $\|\alpha x\| = |\alpha| \, \|x\|$, for all $x \in X$ and $\alpha \in \mathbb{C}$, and

• $\|x\| = 0 \Leftrightarrow x = 0$, for all $x \in X$.

Definition 1.2. Let $X$ be a normed vector space.

(1) A map $\varphi : X \to \mathbb{C}$ is called a linear functional if it satisfies $\varphi(\alpha x + \beta y) = \alpha \varphi(x) + \beta \varphi(y)$, for all $\alpha, \beta \in \mathbb{C}$ and $x, y \in X$. A linear functional $\varphi : X \to \mathbb{C}$ is called bounded if $\|\varphi\| := \sup_{\|x\| \le 1} |\varphi(x)| < \infty$. The dual of $X$, denoted $X^*$, is the normed vector space of all bounded linear functionals $\varphi : X \to \mathbb{C}$.

(2) A map $T : X \to X$ is called linear if it satisfies $T(\alpha x + \beta y) = \alpha T(x) + \beta T(y)$, for all $\alpha, \beta \in \mathbb{C}$ and $x, y \in X$. A linear map $T : X \to X$ is called bounded if $\|T\| := \sup_{\|x\| \le 1} \|T(x)\| < \infty$. A linear bounded map $T$ is usually called a linear bounded operator, or simply a bounded operator.