Trimming and Quality Control (2015-06-03)

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Beginning Portable Shell Scripting from Novice to Professional

Beginning Portable Shell Scripting From Novice to Professional Peter Seebach 10436fmfinal 1 10/23/08 10:40:24 PM Beginning Portable Shell Scripting: From Novice to Professional Copyright © 2008 by Peter Seebach All rights reserved. No part of this work may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage or retrieval system, without the prior written permission of the copyright owner and the publisher. ISBN-13 (pbk): 978-1-4302-1043-6 ISBN-10 (pbk): 1-4302-1043-5 ISBN-13 (electronic): 978-1-4302-1044-3 ISBN-10 (electronic): 1-4302-1044-3 Printed and bound in the United States of America 9 8 7 6 5 4 3 2 1 Trademarked names may appear in this book. Rather than use a trademark symbol with every occurrence of a trademarked name, we use the names only in an editorial fashion and to the benefit of the trademark owner, with no intention of infringement of the trademark. Lead Editor: Frank Pohlmann Technical Reviewer: Gary V. Vaughan Editorial Board: Clay Andres, Steve Anglin, Ewan Buckingham, Tony Campbell, Gary Cornell, Jonathan Gennick, Michelle Lowman, Matthew Moodie, Jeffrey Pepper, Frank Pohlmann, Ben Renow-Clarke, Dominic Shakeshaft, Matt Wade, Tom Welsh Project Manager: Richard Dal Porto Copy Editor: Kim Benbow Associate Production Director: Kari Brooks-Copony Production Editor: Katie Stence Compositor: Linda Weidemann, Wolf Creek Press Proofreader: Dan Shaw Indexer: Broccoli Information Management Cover Designer: Kurt Krames Manufacturing Director: Tom Debolski Distributed to the book trade worldwide by Springer-Verlag New York, Inc., 233 Spring Street, 6th Floor, New York, NY 10013. -

Linux Hardening Techniques Vasudev Baldwa Ubnetdef, Spring 2021 Agenda

Linux Hardening Techniques Vasudev Baldwa UBNetDef, Spring 2021 Agenda 1. What is Systems Hardening? 2. Basic Principles 3. Updates & Encryption 4. Monitoring 5. Services 6. Firewalls 7. Logging What is System Hardening? ⬡ A collection of tools, techniques, and best practices to reduce vulnerability in technology applications, systems, infrastructure, firmware, and other areas ⬡ 3 major areas: OS vs Software vs Network ⬠ When have we done hardening in this class before? ⬠ This lecture is focusing mostly on OS and software level Why Harden? ⬡ Firewalls can only get us so far, what happens when at attack is inside the network? ⬠ If you have nothing protecting your systems you are in trouble ⬡ We want some kind of secondary protection A Few Cybersecurity Principles ⬡ Zero Trust Security ⬠ Instead of assuming everything behind the firewall is safe, Zero Trust verifies each request as though it originates from an unsecure network ⬡ Principle of Least Privilege ⬠ Only privileges needed to complete a task should be allowed ⬠ Users should not have domain administrator/root privileges ⬡ Principle of Least Common Mechanism ⬠ Mechanisms used to access resources should not be shared in order to avoid the transmission of data. ⬠ Shared resources should not be used to access resources The Threat Model ⬡ A process by which potential threats can be identified and prioritized. ⬠ If you have a web server that feeds input to a mysql database, then protecting against mysql injections would be prioritized in your model. 2 considerations ⬡ *nix like is a very -

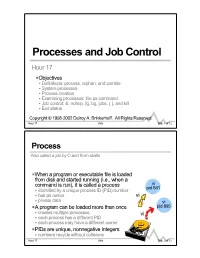

Processes and Job Control

Processes and Job Control Hour 17 PObjectives < Definitions: process, orphan, and zombie < System processes < Process creation < Examining processes: the ps command < Job control: &, nohup, fg, bg, jobs, ( ), and kill < Exit status Copyright © 1998-2002 Delroy A. Brinkerhoff. All Rights Reserved. Hour 17 Unix Slide 1 of 12 Process Also called a job by C and Korn shells PWhen a program or executable file is loaded from disk and started running (i.e., when a command is run), it is called a process vi pid 641 < identified by a unique process ID (PID) number < has an owner vi < private data vi PA program can be loaded more than once pid 895 < creates multiple processes vi < each process has a different PID < each process may have a different owner PPIDs are unique, nonnegative integers < numbers recycle without collisions Hour 17 Unix Slide 2 of 12 System Processes Processes created during system boot P0System kernel < “hand crafted” at boot < called swap in older versions (swaps the CPU between processes) < called sched in newer versions (schedules processes) < creates process 1 P1 init (the parent of all processes except process 0) < general process spawner < begins building locale-related environment < sets or changes the system run-level P2 page daemon (pageout on most systems) P3 file system flusher (fsflush) Hour 17 Unix Slide 3 of 12 Process Life Cycle Overview of creating new processes fork init init pid 467 Pfork creates two identical pid 1 exec processes (parent and child) getty pid 467 Pexec < replaces the process’s instructions -

Multiresolution Recurrent Neural Networks: an Application to Dialogue Response Generation

Multiresolution Recurrent Neural Networks: An Application to Dialogue Response Generation Iulian Vlad Serban∗◦ Tim Klinger University of Montreal IBM Research 2920 chemin de la Tour, T. J. Watson Research Center, Montréal, QC, Canada Yorktown Heights, NY, USA Gerald Tesauro Kartik Talamadupula Bowen Zhou IBM Research IBM Research IBM Research T. J. Watson Research Center, T. J. Watson Research Center, T. J. Watson Research Center, Yorktown Heights, Yorktown Heights, Yorktown Heights, NY, USA NY, USA NY, USA Yoshua Bengioy◦ Aaron Courville◦ University of Montreal University of Montreal 2920 chemin de la Tour, 2920 chemin de la Tour, Montréal, QC, Canada Montréal, QC, Canada Abstract We introduce the multiresolution recurrent neural network, which extends the sequence-to-sequence framework to model natural language generation as two parallel discrete stochastic processes: a sequence of high-level coarse tokens, and a sequence of natural language tokens. There are many ways to estimate or learn the high-level coarse tokens, but we argue that a simple extraction procedure is sufficient to capture a wealth of high-level discourse semantics. Such procedure allows training the multiresolution recurrent neural network by maximizing the exact joint log-likelihood over both sequences. In contrast to the standard log- likelihood objective w.r.t. natural language tokens (word perplexity), optimizing the joint log-likelihood biases the model towards modeling high-level abstractions. We apply the proposed model to the task of dialogue response generation in arXiv:1606.00776v2 [cs.CL] 14 Jun 2016 two challenging domains: the Ubuntu technical support domain, and Twitter conversations. On Ubuntu, the model outperforms competing approaches by a substantial margin, achieving state-of-the-art results according to both automatic evaluation metrics and a human evaluation study. -

07 07 Unixintropart2 Lucio Week 3

Unix Basics Command line tools Daniel Lucio Overview • Where to use it? • Command syntax • What are commands? • Where to get help? • Standard streams(stdin, stdout, stderr) • Pipelines (Power of combining commands) • Redirection • More Information Introduction to Unix Where to use it? • Login to a Unix system like ’kraken’ or any other NICS/ UT/XSEDE resource. • Download and boot from a Linux LiveCD either from a CD/DVD or USB drive. • http://www.puppylinux.com/ • http://www.knopper.net/knoppix/index-en.html • http://www.ubuntu.com/ Introduction to Unix Where to use it? • Install Cygwin: a collection of tools which provide a Linux look and feel environment for Windows. • http://cygwin.com/index.html • https://newton.utk.edu/bin/view/Main/Workshop0InstallingCygwin • Online terminal emulator • http://bellard.org/jslinux/ • http://cb.vu/ • http://simpleshell.com/ Introduction to Unix Command syntax $ command [<options>] [<file> | <argument> ...] Example: cp [-R [-H | -L | -P]] [-fi | -n] [-apvX] source_file target_file Introduction to Unix What are commands? • An executable program (date) • A command built into the shell itself (cd) • A shell program/function • An alias Introduction to Unix Bash commands (Linux) alias! crontab! false! if! mknod! ram! strace! unshar! apropos! csplit! fdformat! ifconfig! more! rcp! su! until! apt-get! cut! fdisk! ifdown! mount! read! sudo! uptime! aptitude! date! fg! ifup! mtools! readarray! sum! useradd! aspell! dc! fgrep! import! mtr! readonly! suspend! userdel! awk! dd! file! install! mv! reboot! symlink! -

HP-UX Fundamentals

Contact Us: (616) 875-4060 HP-UX Essentials Course Summary Length: 3 Days Prerequisite: Basic computer skills Recommendation Statement: Student should be able to use a computer monitor, keyboard, mouse. Student needs to be able to input commands and control characters from a keyboard. Course Description: For those new to HP-UX, this is the first course you will take. The course starts at the beginner level and works into intermediate and advanced HP-UX commands and utilities. The student is introduced to the HP-UX Korn shell, the command line and command syntax. Although you will be exposed to the various windowing platforms available in HP-UX, this course teaches UNIX at “the command line level” to develop a thorough understanding of the HP-UX operating environment. The student learns how to navigate the HP-UX file systems and how to work with files, directories and permissions. The student will learn how to use the HP-UX editors “ex” and “vi. “ Once the student is comfortable with basic HP-UX commands, they’ll move onto advanced power tools as well as how to customize the POSIX Korn shell. The classroom labs are run in a real-world UNIX environment, are structured to allow you to learn by doing, and are developed to simulate “real world” situations. The hands-on labs progressively build your HP-UX knowledge and command skills in a clear and concise manner. Working in a controlled UNIX classroom environment with an expert instructor, you will learn HP-UX concepts and commands. You will receive professional tips and techniques that will help you build your HP-UX confidence and skills. -

Download the Sample Pages

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. The authors and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. Sun Microsystems, Inc., has intellectual property rights relating to implementations of the technology described in this publication. In particular, and without limitation, these intellectual property rights may include one or more U.S. patents, foreign patents, or pending applications. Sun, Sun Microsystems, the Sun logo, J2ME, J2EE, Solaris, Java, Javadoc, Java Card, NetBeans, and all Sun and Java based trademarks and logos are trademarks or registered trademarks of Sun Microsystems, Inc., in the United States and other countries. UNIX is a registered trademark in the United States and other countries, exclusively licensed through X/Open Company, Ltd. THIS PUBLICATION IS PROVIDED “AS IS” WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, OR NON-INFRINGEMENT. THIS PUBLICATION COULD INCLUDE TECHNICAL INACCURACIES OR TYPOGRAPHICAL ERRORS. CHANGES ARE PERIODICALLY ADDED TO THE INFORMATION HEREIN; THESE CHANGES WILL BE INCORPORATED IN NEW EDI- TIONS OF THE PUBLICATION. -

Commands with the Answers

Catonsville Ubuntu-MD Loco Meeting October 22, 2016 1. View the file /var/log/syslog one page at a time a. cat /var/log/syslog |more 2. Declare variables for /var/log/syslog and /etc/logrotate.conf a. SYSLOG=”/var/log/syslog” b. LOGROTATE=”/etc/logrotate.conf” 3. View the first 5 lines of /var/log/syslog using the declared variable a. head -n5 $SYSLOG 4. View a live view of the last 5 lines of /var/log/syslog using the declared variable a. tail -n5 -f $SYSLOG 5. Search for all instances of the words log and rotate in /etc/logrotate.conf using the declared variable a. grep –Ew ‘log|rotate’ $LOGROTATE 6. Count all instances of the word log or rotate in /etc/logrotate.conf using the declared variable a. grep –Ewc ‘log|rotate’ $LOGROTATE 7. Count all instances of blank lines in /etc/logrotate.conf using the declared variable a. grep –c ‘^$’ $LOGROTATE 8. Count all the words in /etc/logrotate.conf using the declared variable a. cat $LOGROTATE | wc -w 9. Create a new group account… student a. groupadd student 10. Create a new standard user account… student1, primary group is student a. useradd –gstudent student1 11. Create a new privileged user account… instructor a. useradd –Gsudo instructor 12. Create a new directories called projects, assignments, quizzes, tests in /data a. mkdir –p /data/{projects,assignments,quizzes,tests} 13. Assign all files created in quizzes and tests directories with the group instructor a. chmod g+s /data/{quizzes,tests} 14. Create new files called assignment_1.txt thru assignment_9.txt in the home directory a. -

Linux Shell Scripting Cookbook Second Edition

Linux Shell Scripting Cookbook Second Edition Over 110 practical recipes to solve real-world shell problems, guaranteed to make you wonder how you ever lived without them Shantanu Tushar Sarath Lakshman BIRMINGHAM - MUMBAI Linux Shell Scripting Cookbook Second Edition Copyright © 2013 Packt Publishing All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the authors, nor Packt Publishing, and its dealers and distributors will be held liable for any damages caused or alleged to be caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. First published: January 2011 Second edition: May 2013 Production Reference: 1140513 Published by Packt Publishing Ltd. Livery Place 35 Livery Street Birmingham B3 2PB, UK. ISBN 978-1-78216-274-2 www.packtpub.com Cover Image by Parag Kadam ([email protected]) Credits Authors Project Coordinator Shantanu Tushar Shiksha Chaturvedi Sarath Lakshman Proofreader Reviewers Linda Morris Rajeshwari K. John C. Kennedy Indexer Hemangini Bari Anil Kumar Sudhendu Kumar Production Coordinator Aravind SV Shantanu Zagade Acquisition Editor Cover Work Kartikey Pandey Shantanu Zagade Lead Technical Editor Ankita Shashi Technical Editors Jalasha D'costa Amit Ramadas Lubna Shaikh About the Authors Shantanu Tushar is an advanced GNU/Linux user since his college days. -

Bash Guide for Beginners

Bash Guide for Beginners Machtelt Garrels Xalasys.com <tille wants no spam _at_ xalasys dot com> Version 1.8 Last updated 20060315 Edition Bash Guide for Beginners Table of Contents Introduction.........................................................................................................................................................1 1. Why this guide?...................................................................................................................................1 2. Who should read this book?.................................................................................................................1 3. New versions, translations and availability.........................................................................................2 4. Revision History..................................................................................................................................2 5. Contributions.......................................................................................................................................3 6. Feedback..............................................................................................................................................3 7. Copyright information.........................................................................................................................3 8. What do you need?...............................................................................................................................4 9. Conventions used in this -

Linux Command Cheat Sheet

Linux commands cheat sheet Basic navigation Command Purpose Examples cd change working directory cd .. Change to parent directory cd /course/cs017 Change to /course/cs017 cd - Change to previous directory cd ~/course Change to /home/YOUR_USERNAME/course cd ~dap/pub Change to /home/dap/pub ls list files in a directory ls list files in current directory ls dir1 list files in dir1 ls -l dir1 show details for each file in dir1 ls -a dir1 show all files (even hidden files) pwd print the current working directory©s name People Command Purpose Examples floor see who©s logged in where floor ta print a map of who©s logged into TA areas finger find out about a person finger dap snoop find out about a person snoop dap anyone find your friends logged in anyone Add logins, 1 per line to your ~/.anyone zwrite send a message to a person zwrite dap su switch to user Don©t use this. Use sux. sux switch to user (can use graphical apps) sux ± dap Switch to user ©dap© Permissions Command Purpose Examples chgrp change the group which owns a file chgrp GROUP file Change group of ©file© to GROUP chmod change the user who owns a file chmod MODE file Change mode of ©file© to MODE umask change your umask umask MODE Change default umask to MODE Use "man <command>" for more details [email protected] Page 1/2 Linux commands cheat sheet Specific file types Command Purpose Command Purpose acroread Views pdf files gzip/gunzip Gzip compression xpdf Views pdf files zip/unzip Windows-compatible compression xdvi Views dvi files gv Views postscript files (.ps) Miscellaneous -

Linux Shell Scripting Tutorial V2.0

Linux Shell Scripting Tutorial v2.0 Written by Vivek Gite <[email protected]> and Edited By Various Contributors PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Mon, 31 May 2010 07:27:26 CET Contents Articles Linux Shell Scripting Tutorial - A Beginner's handbook:About 1 Chapter 1: Quick Introduction to Linux 4 What Is Linux 4 Who created Linux 5 Where can I download Linux 6 How do I Install Linux 6 Linux usage in everyday life 7 What is Linux Kernel 7 What is Linux Shell 8 Unix philosophy 11 But how do you use the shell 12 What is a Shell Script or shell scripting 13 Why shell scripting 14 Chapter 1 Challenges 16 Chapter 2: Getting Started With Shell Programming 17 The bash shell 17 Shell commands 19 The role of shells in the Linux environment 21 Other standard shells 23 Hello, World! Tutorial 25 Shebang 27 Shell Comments 29 Setting up permissions on a script 30 Execute a script 31 Debug a script 32 Chapter 2 Challenges 33 Chapter 3:The Shell Variables and Environment 34 Variables in shell 34 Assign values to shell variables 38 Default shell variables value 40 Rules for Naming variable name 41 Display the value of shell variables 42 Quoting 46 The export statement 49 Unset shell and environment variables 50 Getting User Input Via Keyboard 50 Perform arithmetic operations 54 Create an integer variable 56 Create the constants variable 57 Bash variable existence check 58 Customize the bash shell environments 59 Recalling command history 63 Path name expansion 65 Create and use aliases 67 The tilde expansion 69 Startup scripts 70 Using aliases 72 Changing bash prompt 73 Setting shell options 77 Setting system wide shell options 82 Chapter 3 Challenges 83 Chapter 4: Conditionals Execution (Decision Making) 84 Bash structured language constructs 84 Test command 86 If structures to execute code based on a condition 87 If.