Multiresolution Recurrent Neural Networks: an Application to Dialogue Response Generation

Total Pages: 16

File Type: pdf, Size: 1020 KB

Recommended publications

Process Text Streams Using Filters

Process Text Streams Using Filters. OBJECTIVE: Candidates should be able to apply filters to text streams. KEY KNOWLEDGE AREAS: Send text files and output streams through text utility filters to modify the output using standard UNIX commands found in the GNU textutils package. KEY FILES, TERMS, UTILITIES: cat, nl, tail, cut, paste, tr, expand, pr, unexpand, fmt, sed, uniq, head, sort, wc, hexdump, split, join, tac. cat, the editor: used as a rudimentary text editor. Example: run cat > short-message, type the lines "we are curious", "to meet", and "penguins in Prague", then press Ctrl+D (Ctrl+D ends interactive input). cat, the reader: more commonly used to flush text to stdout. Options: -n number each line of output; -b number only non-blank output lines; -A show non-printing characters, tabs, and line ends. Example: cat /etc/resolv.conf ▶ search mydomain.org nameserver 127.0.0.1. tac reads back-to-front: this command is the same as cat except that the text is read from the last line to the first. Example: tac short-message ▶ penguins in Prague / to meet / we are curious. head or tail: often used to analyze logfiles; by default, each outputs 10 lines of text. List the first 20 lines of /var/log/messages: head -n 20 /var/log/messages (or head -20 /var/log/messages). List the last 20 lines of /etc/aliases: tail -20 /etc/aliases. The tail utility has an added option that allows one to list the end of a text starting at a given line.

Beginning Portable Shell Scripting from Novice to Professional

Beginning Portable Shell Scripting: From Novice to Professional. Peter Seebach. Copyright © 2008 by Peter Seebach. All rights reserved. No part of this work may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage or retrieval system, without the prior written permission of the copyright owner and the publisher. ISBN-13 (pbk): 978-1-4302-1043-6. ISBN-10 (pbk): 1-4302-1043-5. ISBN-13 (electronic): 978-1-4302-1044-3. ISBN-10 (electronic): 1-4302-1044-3. Printed and bound in the United States of America 9 8 7 6 5 4 3 2 1. Trademarked names may appear in this book. Rather than use a trademark symbol with every occurrence of a trademarked name, we use the names only in an editorial fashion and to the benefit of the trademark owner, with no intention of infringement of the trademark. Lead Editor: Frank Pohlmann. Technical Reviewer: Gary V. Vaughan. Editorial Board: Clay Andres, Steve Anglin, Ewan Buckingham, Tony Campbell, Gary Cornell, Jonathan Gennick, Michelle Lowman, Matthew Moodie, Jeffrey Pepper, Frank Pohlmann, Ben Renow-Clarke, Dominic Shakeshaft, Matt Wade, Tom Welsh. Project Manager: Richard Dal Porto. Copy Editor: Kim Benbow. Associate Production Director: Kari Brooks-Copony. Production Editor: Katie Stence. Compositor: Linda Weidemann, Wolf Creek Press. Proofreader: Dan Shaw. Indexer: Broccoli Information Management. Cover Designer: Kurt Krames. Manufacturing Director: Tom Debolski. Distributed to the book trade worldwide by Springer-Verlag New York, Inc., 233 Spring Street, 6th Floor, New York, NY 10013.

Comodo System Cleaner Version 3.0

Comodo System Cleaner Version 3.0 User Guide. Version 3.0.122010. Comodo Security Solutions, 525 Washington Blvd., Jersey City, NJ 07310. Table of Contents: 1. Comodo System-Cleaner - Introduction; 1.1. System Requirements; 1.2. Installing Comodo System-Cleaner; 1.3. Starting Comodo System-Cleaner; 1.4. The Main Interface; 1.5. The Summary Area; 1.6. Understanding Profiles; 2. Registry Cleaner; 2.1. Clean

The Linux Command Line

The Linux Command Line, Fifth Internet Edition. William Shotts. A LinuxCommand.org Book. Copyright ©2008-2019, William E. Shotts, Jr. This work is licensed under the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 United States License. To view a copy of this license, visit the link above or send a letter to Creative Commons, PO Box 1866, Mountain View, CA 94042. A version of this book is also available in printed form, published by No Starch Press. Copies may be purchased wherever fine books are sold. No Starch Press also offers electronic formats for popular e-readers. They can be reached at: https://www.nostarch.com. Linux® is the registered trademark of Linus Torvalds. All other trademarks belong to their respective owners. This book is part of the LinuxCommand.org project, a site for Linux education and advocacy devoted to helping users of legacy operating systems migrate into the future. You may contact the LinuxCommand.org project at http://linuxcommand.org. Release History (version, date, description): 19.01A, January 28, 2019, Fifth Internet Edition (Corrected TOC); 19.01, January 17, 2019, Fifth Internet Edition; 17.10, October 19, 2017, Fourth Internet Edition; 16.07, July 28, 2016, Third Internet Edition; 13.07, July 6, 2013, Second Internet Edition; 09.12, December 14, 2009, First Internet Edition. Table of Contents: Introduction; Why Use the Command Line?

Université De Montréal Context-Aware

UNIVERSITÉ DE MONTRÉAL. CONTEXT-AWARE SOURCE CODE IDENTIFIER SPLITTING AND EXPANSION FOR SOFTWARE MAINTENANCE. LATIFA GUERROUJ, Département de génie informatique et génie logiciel, École Polytechnique de Montréal. Thesis presented with a view to obtaining the degree of Philosophiæ Doctor (Computer Engineering), July 2013. © Latifa Guerrouj, 2013. This thesis, entitled "Context-Aware Source Code Identifier Splitting and Expansion for Software Maintenance" and presented by Latifa GUERROUJ with a view to obtaining the degree of Philosophiæ Doctor, was duly accepted by the examination committee composed of: Mme Hanifa BOUCHENEB, Doctorat, president; M. Giuliano ANTONIOL, Ph.D., member and research supervisor; M. Yann-Gaël GUÉHÉNEUC, Ph.D., member and research co-supervisor; M. Michel DESMARAIS, Ph.D., member; Mme Dawn LAWRIE, Ph.D., member. This dissertation is dedicated to my parents. For their endless love, support and encouragement. ACKNOWLEDGMENTS: I am very grateful to both Giulio and Yann for their support, encouragement, and intellectual input. I worked with you for four years or even less, but what I learned from you will last forever. Giulio, your passion about research was a source of inspiration and motivation for me. Also, your mentoring and support have been instrumental in achieving my goals. Yann, your enthusiasm and guidance have always been a strength for me to keep moving forward. Research would not be as much fun without students and researchers to collaborate with. It has been a real pleasure and great privilege working with Massimiliano Di Penta (University of Sannio), Denys Poshyvanyk (College of William and Mary), and their teams.

Linux Hardening Techniques Vasudev Baldwa Ubnetdef, Spring 2021 Agenda

Linux Hardening Techniques. Vasudev Baldwa, UBNetDef, Spring 2021. Agenda: 1. What is Systems Hardening? 2. Basic Principles 3. Updates & Encryption 4. Monitoring 5. Services 6. Firewalls 7. Logging. What is System Hardening? ⬡ A collection of tools, techniques, and best practices to reduce vulnerability in technology applications, systems, infrastructure, firmware, and other areas ⬡ 3 major areas: OS vs Software vs Network ⬠ When have we done hardening in this class before? ⬠ This lecture focuses mostly on the OS and software level. Why Harden? ⬡ Firewalls can only get us so far; what happens when an attack is inside the network? ⬠ If you have nothing protecting your systems, you are in trouble ⬡ We want some kind of secondary protection. A Few Cybersecurity Principles ⬡ Zero Trust Security ⬠ Instead of assuming everything behind the firewall is safe, Zero Trust verifies each request as though it originates from an unsecure network ⬡ Principle of Least Privilege ⬠ Only privileges needed to complete a task should be allowed ⬠ Users should not have domain administrator/root privileges ⬡ Principle of Least Common Mechanism ⬠ Mechanisms used to access resources should not be shared in order to avoid the transmission of data. ⬠ Shared resources should not be used to access resources. The Threat Model ⬡ A process by which potential threats can be identified and prioritized. ⬠ If you have a web server that feeds input to a MySQL database, then protecting against SQL injection would be prioritized in your model. 2 considerations ⬡ *nix like is a very

Cuteftp Pro V8 Utorrent

CuteFTP Pro V8 Utorrent 2.1 Server ... FileZilla FTP Client, WS FTP, Bullet Proof FTP, CuteFTP ... Vuze (formerly Azureus), utorrent, Transmission, Deluge, qBittorrent, ... Nero 8 Ultra Edition 8 3 13 0 crack. Utorrent 1.8.3 serial keygen. Globalscape Cuteftp Pro 8 3 3 054 key code generator. Betterzip 1.8.3 crack. CuteFTP Pro V8 Utorrent cuteftp, cuteftp free, cuteftp mac, cuteftp server, cuteftp vs filezilla, cuteftp sftp, cuteftp portable, cuteftp crack, cuteftp 8 professional, ... Download CCProxy 8 is easy-to-use and powerful. ... allows use of ICQ, MSN Messenger, Yahoo Messenger, CuteFTP, CuteFTP Pro and WS-FTP. ... Previous uTorrent Pro Crack 3.5.4 Build 44590 & Key Free Download. Find SophosLabs data about viruses, spyware, suspicious behavior and files, adware, PUAs, and controlled applications and devices. · ABC · ABC Client · ANts P2P ... FCleaner 1.3.1.621 Jul 12th; Registry Gear 2.1.1.609 Jun 9th; CuteFTP Pro 8.3.4 Jun 2nd; CuteFTP Lite 8.3.4 Jun 2nd ... [Show All] 8 softwares in this category. 8 September 2012 at 16:11 Reply ... [SOFTWARE] uTorrent Turbo Booster · [GAMES] JUST CAUSE 2 SKIDROW + DLC LIMITED CONTENT · [OS] ... [CUSTOM] AlienWare Full Pack · [SOFTWARE] CuteFTP / Cute FTP Professional v8.3.3. Cakewalk Dimension Pro DXi and VSTi sampler synthesizer works great with ... FXsound DFX Audio Enhancement v8.0 for Windows Media Player & Winamp ... GlobalSCAPE CuteFTP 4.2; GlobalSCAPE CuteFTP 8, 8.0.4 Pro (Error ... Beta (Chinese Simplified/Traditional); uTorrent 1.6; WinMX 3.54 (Beta 4). Able2Extract Professional v8.0.28.0 Incl Crack [TorDigger] utorrent · SpyKey.rar Serial Key keygen · PATCHED Win 10 Pro RS3 En-us (x86 x64) ...

IBM Education Assistance for Z/OS V2R1

IBM Education Assistance for z/OS V2R1. Item: ASCII Unicode Option. Element/Component: UNIX Shells and Utilities (S&U). Material is current as of June 2013. © 2013 IBM Corporation. Filename: zOS V2R1 USS S&U ASCII Unicode Option. Agenda: ■ Trademarks ■ Presentation Objectives ■ Overview ■ Usage & Invocation ■ Migration & Coexistence Considerations ■ Presentation Summary ■ Appendix. Trademarks: ■ See url http://www.ibm.com/legal/copytrade.shtml for a list of trademarks. Presentation Objectives: ■ Introduce the features and benefits of the new z/OS UNIX Shells and Utilities (S&U) support for working with ASCII/Unicode files. Overview: ■ Problem Statement: (1) As a z/OS UNIX Shells & Utilities user, I want the ability to control the text conversion of input files used by the S&U commands. (2) As a z/OS UNIX Shells & Utilities user, I want the ability to run tagged shell scripts (tcsh scripts and SBCS sh scripts) under different SBCS locales. ■ Solution: (1) Add the -W filecodeset=codeset,pgmcodeset=codeset option on several S&U commands to enable text conversion, consistent with support added to vi and ex in V1R13. (2) Add the -B option on several S&U commands to disable automatic text conversion, consistent with other commands that already have this override support. (3) Add a new _TEXT_CONV environment variable to enable or disable text conversion.

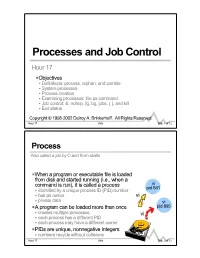

Processes and Job Control

Processes and Job Control. Hour 17. Objectives: definitions (process, orphan, and zombie); system processes; process creation; examining processes: the ps command; job control: &, nohup, fg, bg, jobs, ( ), and kill; exit status. Copyright © 1998-2002 Delroy A. Brinkerhoff. All Rights Reserved. Hour 17 Unix Slide 1 of 12. Process (also called a job by C and Korn shells): When a program or executable file is loaded from disk and started running (i.e., when a command is run), it is called a process. A process is identified by a unique process ID (PID) number, has an owner, and has private data. A program can be loaded more than once, which creates multiple processes; each process has a different PID, and each process may have a different owner. [Figure: several vi processes with distinct PIDs, e.g. pid 641 and pid 895.] PIDs are unique, nonnegative integers; numbers recycle without collisions. System Processes (processes created during system boot): 0: System kernel: "hand crafted" at boot; called swap in older versions (swaps the CPU between processes); called sched in newer versions (schedules processes); creates process 1. 1: init (the parent of all processes except process 0): general process spawner; begins building locale-related environment; sets or changes the system run-level. 2: page daemon (pageout on most systems). 3: file system flusher (fsflush). Process Life Cycle (overview of creating new processes): [Figure: init (pid 1) forks a copy of init (pid 467), which execs getty (pid 467).] fork creates two identical processes (parent and child). exec replaces the process's instructions

Formal Complaint of Free Press and Public Knowledge Against Comcast Corporation for Secretly Degrading Peer-to-Peer Applications

Before the FEDERAL COMMUNICATIONS COMMISSION, Washington, D.C. 20554. Formal Complaint of Free Press and Public Knowledge Against Comcast Corporation for Secretly Degrading Peer-to-Peer Applications. To: Ms. Marlene H. Dortch, Secretary, FCC. Formal Complaint. Marvin Ammori, General Counsel, and Ben Scott, Policy Director, Free Press, 501 Third Street NW, Suite 875, Washington, DC 20001, 202-265-1490. Andy Schwartzman, Harold Feld, and Parul Desdai, Media Access Project, 1625 K Street, NW, Suite 1000, Washington, DC 20006, (202) 232-4300. November 1, 2007. Table of Contents: Summary. I. Facts: A. Parties; B. Network Neutrality Background; C. Comcast Blocks Innovative Applications; D. Comcast’s Methods are Deliberately Secretive. II. Legal Argument: A. Degrading Applications Violates the Commission’s Internet Policy Statement, Which the FCC Has Vowed to Enforce

Unix and Linux System Administration and Shell Programming

Unix and Linux System Administration and Shell Programming, version 56 of August 12, 2014. Copyright © 1998, 1999, 2000, 2001, 2002, 2003, 2004, 2005, 2006, 2007, 2009, 2010, 2011, 2012, 2013, 2014 Milo. This book includes material from the http://www.osdata.com/ website and the text book on computer programming. Distributed on the honor system. Print and read free for personal, non-profit, and/or educational purposes. If you like the book, you are encouraged to send a donation (U.S. dollars) to Milo, PO Box 5237, Balboa Island, California, USA 92662. This is a work in progress. For the most up to date version, visit the website http://www.osdata.com/ and http://www.osdata.com/programming/shell/unixbook.pdf. Please add links from your website or Facebook page. Professors and Teachers: Feel free to take a copy of this PDF and make it available to your class (possibly through your academic website). This way everyone in your class will have the same copy (with the same page numbers) despite my continual updates. Please try to avoid posting it to the public internet (to avoid old copies confusing things) and take it down when the class ends. You can post the same or a newer version for each succeeding class. Please remove old copies after the class ends to prevent confusing the search engines. You can contact me with a specific version number and class end date and I will put it on my website. Chapter 0: This book looks at Unix (and Linux) shell programming and system administration.

GNU Coreutils Cheat Sheet (v1.00), Created by Peteris Krumins (www.catonmat.net -- Good Coders Code, Great Coders Reuse)

GNU Coreutils Cheat Sheet (v1.00). Created by Peteris Krumins (www.catonmat.net -- good coders code, great coders reuse)

| Utility | Description | Utility | Description |
|---|---|---|---|
| arch | Print machine hardware name | nproc | Print the number of processors |
| base64 | Base64 encode/decode strings or files | od | Dump files in octal and other formats |
| basename | Strip directory and suffix from file names | paste | Merge lines of files |
| cat | Concatenate files and print on the standard output | pathchk | Check whether file names are valid or portable |
| chcon | Change SELinux context of file | pinky | Lightweight finger |
| chgrp | Change group ownership of files | pr | Convert text files for printing |
| chmod | Change permission modes of files | printenv | Print all or part of environment |
| chown | Change user and group ownership of files | printf | Format and print data |
| chroot | Run command or shell with special root directory | ptx | Permuted index for GNU, with keywords in their context |
| cksum | Print CRC checksum and byte counts | pwd | Print current directory |
| comm | Compare two sorted files line by line | readlink | Display value of a symbolic link |
| cp | Copy files | realpath | Print the resolved file name |
| csplit | Split a file into context-determined pieces | rm | Delete files |
| cut | Remove parts of lines of files | rmdir | Remove directories |
| date | Print or set the system date and time | runcon | Run command with specified security context |
| dd | Convert a file while copying it | seq | Print sequence of numbers to standard output |
| df | Summarize free disk space | setuidgid | Run a command with the UID and GID of a specified user |
| dir | Briefly list directory | | |