An Index Notation for Tensor Products

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

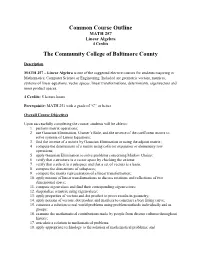

Common Course Outline MATH 257 Linear Algebra 4 Credits

The Community College of Baltimore County

Description

MATH 257 – Linear Algebra is one of the suggested elective courses for students majoring in Mathematics, Computer Science or Engineering. Included are geometric vectors, matrices, systems of linear equations, vector spaces, linear transformations, determinants, eigenvectors and inner product spaces.

4 Credits: 5 lecture hours

Prerequisite: MATH 251 with a grade of “C” or better

Overall Course Objectives

Upon successfully completing the course, students will be able to:

1. perform matrix operations;
2. use Gaussian Elimination, Cramer’s Rule, and the inverse of the coefficient matrix to solve systems of Linear Equations;
3. find the inverse of a matrix by Gaussian Elimination or using the adjoint matrix;
4. compute the determinant of a matrix using cofactor expansion or elementary row operations;
5. apply Gaussian Elimination to solve problems concerning Markov Chains;
6. verify that a structure is a vector space by checking the axioms;
7. verify that a subset is a subspace and that a set of vectors is a basis;
8. compute the dimensions of subspaces;
9. compute the matrix representation of a linear transformation;
10. apply notions of linear transformations to discuss rotations and reflections of two dimensional space;
11. compute eigenvalues and find their corresponding eigenvectors;
12. diagonalize a matrix using eigenvalues;
13. apply properties of vectors and dot product to prove results in geometry;
14. apply notions of vectors, dot product and matrices to construct a best fitting curve;
15. construct a solution to real world problems using problem methods individually and in groups;
16. -

21. Orthonormal Bases

The canonical/standard basis

$$e_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}, \quad e_2 = \begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix}, \quad \ldots, \quad e_n = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}$$

has many useful properties.

• Each of the standard basis vectors has unit length:

$$\|e_i\| = \sqrt{e_i \cdot e_i} = \sqrt{e_i^T e_i} = 1.$$

• The standard basis vectors are orthogonal (in other words, at right angles or perpendicular):

$$e_i \cdot e_j = e_i^T e_j = 0 \quad \text{when } i \neq j.$$

This is summarized by

$$e_i^T e_j = \delta_{ij} = \begin{cases} 1 & i = j \\ 0 & i \neq j, \end{cases}$$

where $\delta_{ij}$ is the Kronecker delta. Notice that the Kronecker delta gives the entries of the identity matrix.

Given column vectors $v$ and $w$, we have seen that the dot product $v \cdot w$ is the same as the matrix multiplication $v^T w$. This is the inner product on $\mathbb{R}^n$. We can also form the outer product $v w^T$, which gives a square matrix.

The outer product on the standard basis vectors is interesting. Set

$$\Pi_1 = e_1 e_1^T = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix} \begin{pmatrix} 1 & 0 & \cdots & 0 \end{pmatrix} = \begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 0 \end{pmatrix}$$

$$\vdots$$

$$\Pi_n = e_n e_n^T = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix} \begin{pmatrix} 0 & 0 & \cdots & 1 \end{pmatrix} = \begin{pmatrix} 0 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 1 \end{pmatrix}$$

In short, $\Pi_i$ is the diagonal square matrix with a 1 in the $i$th diagonal position and zeros everywhere else.
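The properties of the standard basis described in this excerpt can be checked on a small case. The following is a minimal sketch in plain Python for n = 3; the helper names (`basis_vector`, `inner`, `outer`) are illustrative assumptions, not taken from the source. It also checks one standard fact the excerpt does not state: the matrices Π_i add up to the identity.

```python
def basis_vector(i, n):
    """Return the i-th standard basis vector of R^n (1-indexed) as a list."""
    return [1 if k == i else 0 for k in range(1, n + 1)]


def inner(v, w):
    """Inner product v^T w."""
    return sum(a * b for a, b in zip(v, w))


def outer(v, w):
    """Outer product v w^T, stored as a list of rows."""
    return [[a * b for b in w] for a in v]


n = 3  # small illustrative dimension (assumption)
basis = [basis_vector(i, n) for i in range(1, n + 1)]
identity = [[1 if r == c else 0 for c in range(n)] for r in range(n)]

# Matrix of inner products e_i^T e_j: its entries are the Kronecker delta.
gram = [[inner(ei, ej) for ej in basis] for ei in basis]

# Pi_i = e_i e_i^T has a single 1 in the i-th diagonal position.
projections = [outer(e, e) for e in basis]

# Adding the Pi_i entry by entry gives the identity matrix.
total = [[sum(P[r][c] for P in projections) for c in range(n)] for r in range(n)]

print(gram == identity, total == identity)
```

Plain lists are used instead of a numerical library so that the sketch has no dependencies; for larger matrices a dedicated array type would be the more natural choice.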

Multivector Differentiation and Linear Algebra 0.5Cm 17Th Santaló

Multivector differentiation and Linear Algebra

17th Santaló Summer School 2016, Santander

Joan Lasenby, Signal Processing Group, Engineering Department, Cambridge, UK and Trinity College Cambridge, [email protected], www-sigproc.eng.cam.ac.uk/~jl

23 August 2016

Overview

• The Multivector Derivative.
• Examples of differentiation wrt multivectors.
• Linear Algebra: matrices and tensors as linear functions mapping between elements of the algebra.
• Functional Differentiation: very briefly...
• Summary

The Multivector Derivative

Recall our definition of the directional derivative in the $a$ direction:

$$a \cdot \nabla F(x) = \lim_{\epsilon \to 0} \frac{F(x + \epsilon a) - F(x)}{\epsilon}$$

We now want to generalise this idea to enable us to find the derivative of $F(X)$ in the $A$ ‘direction’, where $X$ is a general mixed grade multivector (so $F(X)$ is a general multivector valued function of $X$). Let us use $*$ to denote taking the scalar part, i.e. $P * Q \equiv \langle PQ \rangle$. Then, provided $A$ has the same grades as $X$, it makes sense to define:

$$A * \partial_X F(X) = \lim_{t \to 0} \frac{F(X + tA) - F(X)}{t}$$
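The directional-derivative limit recalled on this slide can be approximated numerically in the ordinary vector case. The sketch below covers only that vector case, not the multivector generalisation; the test function F(x) = x · x and the step size are assumptions chosen for illustration.

```python
def directional_derivative(F, x, a, eps=1e-6):
    """Forward-difference estimate of (F(x + eps*a) - F(x)) / eps."""
    shifted = [xi + eps * ai for xi, ai in zip(x, a)]
    return (F(shifted) - F(x)) / eps


def squared_norm(x):
    """Hypothetical test function F(x) = x . x."""
    return sum(xi * xi for xi in x)


x = [1.0, 2.0, 3.0]
a = [0.5, -1.0, 2.0]

estimate = directional_derivative(squared_norm, x, a)

# For F(x) = x . x the exact directional derivative is 2 (a . x).
exact = 2 * sum(xi * ai for xi, ai in zip(x, a))

print(estimate, exact)
```

The estimate differs from the exact value by a term proportional to `eps`, which is why the comparison should use a tolerance rather than equality.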

Exercise 1 Advanced Sdps Matrices and Vectors

Exercise 1 Advanced SDPs

Matrices and vectors: All these are very important facts that we will use repeatedly, and should be internalized.

• Inner product and outer products. Let $u = (u_1, \ldots, u_n)$ and $v = (v_1, \ldots, v_n)$ be vectors in $\mathbb{R}^n$. Then $u^T v = \langle u, v \rangle = \sum_{i=1}^n u_i v_i$ is the inner product of $u$ and $v$. The outer product $uv^T$ is an $n \times n$ rank 1 matrix $B$ with entries $B_{ij} = u_i v_j$. The matrix $B$ is a very useful operator. Suppose $v$ is a unit vector. Then $B$ sends $v$ to $u$, i.e. $Bv = uv^T v = u$, but $Bw = 0$ for all $w \in v^\perp$.

• Matrix Product. For any two matrices $A \in \mathbb{R}^{m \times n}$ and $B \in \mathbb{R}^{n \times p}$, the standard matrix product $C = AB$ is the $m \times p$ matrix with entries $c_{ij} = \sum_{k=1}^n a_{ik} b_{kj}$. Here are two very useful ways to view this.

Inner products: Let $r_i$ be the $i$-th row of $A$, or equivalently the $i$-th column of $A^T$, the transpose of $A$. Let $b_j$ denote the $j$-th column of $B$. Then $c_{ij} = r_i^T b_j$ is the dot product of the $i$-th column of $A^T$ and the $j$-th column of $B$.

Sums of outer products: $C$ can also be expressed as a sum of outer products of columns of $A$ and rows of $B$.

Exercise: Show that $C = \sum_{k=1}^n a_k b_k^T$ where $a_k$ is the $k$-th column of $A$ and $b_k$ is the $k$-th row of $B$ (or equivalently the $k$-th column of $B^T$).
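The two views of the matrix product can be compared on a concrete case. The following is a minimal sketch in plain Python; the function names and the example matrices are assumptions for illustration. It is a numerical check on one example and does not replace the proof the exercise asks for.

```python
def matmul(A, B):
    """Entrywise definition: c_ij = sum over k of a_ik * b_kj."""
    inner_dim = len(B)
    return [[sum(A[i][k] * B[k][j] for k in range(inner_dim))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def outer(u, v):
    """Outer product u v^T as a list of rows."""
    return [[ui * vj for vj in v] for ui in u]


def matmul_outer(A, B):
    """Sum over k of (k-th column of A) times (k-th row of B)."""
    m, p = len(A), len(B[0])
    C = [[0] * p for _ in range(m)]
    for k in range(len(B)):
        column_k = [row[k] for row in A]   # k-th column of A
        row_k = B[k]                       # k-th row of B
        term = outer(column_k, row_k)      # rank 1 contribution
        for i in range(m):
            for j in range(p):
                C[i][j] += term[i][j]
    return C


A = [[1, 2, 3], [4, 5, 6]]        # 2 x 3
B = [[7, 8], [9, 10], [11, 12]]   # 3 x 2

print(matmul(A, B))
print(matmul_outer(A, B))
```

Both functions perform the same multiplications; they differ only in the order in which the products are grouped and summed.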

Tensor Products

Tensor products

Joel Kamnitzer

April 5, 2011

1 The definition

Let $V, W, X$ be three vector spaces. A bilinear map from $V \times W$ to $X$ is a function $H : V \times W \to X$ such that

$$H(av_1 + v_2, w) = aH(v_1, w) + H(v_2, w) \quad \text{for } v_1, v_2 \in V,\ w \in W,\ a \in \mathbb{F}$$

$$H(v, aw_1 + w_2) = aH(v, w_1) + H(v, w_2) \quad \text{for } v \in V,\ w_1, w_2 \in W,\ a \in \mathbb{F}$$

Let $V$ and $W$ be vector spaces. A tensor product of $V$ and $W$ is a vector space $V \otimes W$ along with a bilinear map $\phi : V \times W \to V \otimes W$, such that for every vector space $X$ and every bilinear map $H : V \times W \to X$, there exists a unique linear map $T : V \otimes W \to X$ such that $H = T \circ \phi$. In other words, giving a linear map from $V \otimes W$ to $X$ is the same thing as giving a bilinear map from $V \times W$ to $X$. If $V \otimes W$ is a tensor product, then we write $v \otimes w := \phi(v, w)$. Note that there are two pieces of data in a tensor product: a vector space $V \otimes W$ and a bilinear map $\phi : V \times W \to V \otimes W$.

Here are the main results about tensor products summarized in one theorem.

Theorem 1.1.
(i) Any two tensor products of $V, W$ are isomorphic.
(ii) $V, W$ has a tensor product.
(iii) If $v_1, \ldots, v_n$ is a basis for $V$ and $w_1, \ldots, w_m$ is a basis for $W$, then $\{v_i \otimes w_j\}_{1 \le i \le n,\, 1 \le j \le m}$ is a basis for $V \otimes W$. -
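Part (iii) of the theorem suggests a concrete coordinate model. Once bases are fixed, v ⊗ w can be represented by the list of all products of a coordinate of v with a coordinate of w. The sketch below assumes that model (vectors as coordinate lists, basis elements ordered by i, then j) and checks bilinearity in the first argument on one example. The function names are illustrative and do not come from the source.

```python
def tensor(v, w):
    """Coordinates of v (x) w in the basis {v_i (x) w_j}, ordered by i, then j."""
    return [vi * wj for vi in v for wj in w]


def add(x, y):
    """Componentwise sum of two coordinate lists."""
    return [a + b for a, b in zip(x, y)]


def scale(c, x):
    """Scalar multiple of a coordinate list."""
    return [c * a for a in x]


v1, v2 = [1, 2], [0, -1]   # coordinates in a basis of V, dim V = 2 (assumed)
w = [3, 4, 5]              # coordinates in a basis of W, dim W = 3 (assumed)
a = 7

# Bilinearity in the first slot: (a v1 + v2) (x) w = a (v1 (x) w) + (v2 (x) w)
lhs = tensor(add(scale(a, v1), v2), w)
rhs = add(scale(a, tensor(v1, w)), tensor(v2, w))

print(lhs, rhs, len(lhs))
```

The result has 2 × 3 = 6 coordinates, matching the size of the basis in part (iii). Linearity in the second argument can be checked the same way.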

Appendix A: Matrices and Tensors

A.1 Introduction and Rationale

The purpose of this appendix is to present the notation and most of the mathematical techniques that will be used in the body of the text. The audience is assumed to have been through several years of college level mathematics that included the differential and integral calculus, differential equations, functions of several variables, partial derivatives, and an introduction to linear algebra. Matrices are reviewed briefly and determinants, vectors, and tensors of order two are described. The application of this linear algebra to material that appears in undergraduate engineering courses on mechanics is illustrated by discussions of concepts like the area and mass moments of inertia, Mohr’s circles and the vector cross and triple scalar products. The solutions to ordinary differential equations are reviewed in the last two sections.

The notation, as far as possible, will be a matrix notation that is easily entered into existing symbolic computational programs like Maple, Mathematica, Matlab, and Mathcad. The desire to represent the components of three-dimensional fourth order tensors that appear in anisotropic elasticity as the components of six-dimensional second order tensors and thus represent these components in matrices of tensor components in six dimensions leads to the nontraditional part of this appendix. This is also one of the nontraditional aspects in the text of the book, but a minor one. This is described in Sect. A.11, along with the rationale for this approach.

A.2 Definition of Square, Column, and Row Matrices

An $r$ by $c$ matrix $M$ is a rectangular array of numbers consisting of $r$ rows and $c$ columns, -

On Manifolds of Tensors of Fixed Tt-Rank

ON MANIFOLDS OF TENSORS OF FIXED TT-RANK

SEBASTIAN HOLTZ, THORSTEN ROHWEDDER, AND REINHOLD SCHNEIDER

Abstract. Recently, the format of TT tensors [19, 38, 34, 39] has turned out to be a promising new format for the approximation of solutions of high dimensional problems. In this paper, we prove some new results for the TT representation of a tensor $U \in \mathbb{R}^{n_1 \times \ldots \times n_d}$ and for the manifold of tensors of TT-rank $r$. As a first result, we prove that the TT (or compression) ranks $r_i$ of a tensor $U$ are unique and equal to the respective separation ranks of $U$ if the components of the TT decomposition are required to fulfil a certain maximal rank condition. We then show that the set $\mathbb{T}$ of TT tensors of fixed rank $r$ forms an embedded manifold in $\mathbb{R}^{n^d}$, therefore preserving the essential theoretical properties of the Tucker format, but often showing an improved scaling behaviour. Extending a similar approach for matrices [7], we introduce certain gauge conditions to obtain a unique representation of the tangent space $T_U\mathbb{T}$ of $\mathbb{T}$ and deduce a local parametrization of the TT manifold. The parametrisation of $T_U\mathbb{T}$ is often crucial for an algorithmic treatment of high-dimensional time-dependent PDEs and minimisation problems [33]. We conclude with remarks on those applications and present some numerical examples.

1. Introduction

The treatment of high-dimensional problems, typically of problems involving quantities from $\mathbb{R}^d$ for larger dimensions $d$, is still a challenging task for numerical approximation. This is owed to the principal problem that classical approaches for their treatment normally scale exponentially in the dimension $d$ in both needed storage and computational time and thus quickly become computationally infeasible for sensible discretizations of problems of interest. -

Determinants Math 122 Calculus III D Joyce, Fall 2012

Determinants

Math 122 Calculus III

D Joyce, Fall 2012

What they are. A determinant is a value associated to a square array of numbers, that square array being called a square matrix. For example, here are determinants of a general $2 \times 2$ matrix and a general $3 \times 3$ matrix.

$$\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc.$$

$$\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix} = aei + bfg + cdh - ceg - afh - bdi.$$

The determinant of a matrix $A$ is usually denoted $|A|$ or $\det(A)$. You can think of the rows of the determinant as being vectors. For the $3 \times 3$ matrix above, the vectors are $u = (a, b, c)$, $v = (d, e, f)$, and $w = (g, h, i)$. Then the determinant is a value associated to $n$ vectors in $\mathbb{R}^n$.

There's a general definition for $n \times n$ determinants. It's a particular signed sum of products of $n$ entries in the matrix where each product is of one entry in each row and column. The two ways you can choose one entry in each row and column of the $2 \times 2$ matrix give you the two products $ad$ and $bc$. There are six ways of choosing one entry in each row and column in a $3 \times 3$ matrix, and generally, there are $n!$ ways in an $n \times n$ matrix. Thus, the determinant of a $4 \times 4$ matrix is the signed sum of 24, which is $4!$, terms. In this general definition, half the terms are taken positively and half negatively. In class, we briefly saw how the signs are determined by permutations. -
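The general definition described here, a signed sum over all ways of choosing one entry from each row and column, can be written out directly. The sketch below is a minimal plain-Python version. The excerpt does not say how the signs are computed, so the rule used here (the parity of the number of inversions of the permutation) is the standard one, supplied as an assumption. The function names are illustrative.

```python
from itertools import permutations


def sign(perm):
    """Sign of a permutation: +1 for an even number of inversions, -1 for odd."""
    inversions = sum(1
                     for i in range(len(perm))
                     for j in range(i + 1, len(perm))
                     if perm[i] > perm[j])
    return -1 if inversions % 2 else 1


def det(M):
    """Signed sum over permutations: one entry from each row and each column."""
    n = len(M)
    total = 0
    for perm in permutations(range(n)):
        product = 1
        for row in range(n):
            product *= M[row][perm[row]]   # entry in this row, column perm[row]
        total += sign(perm) * product
    return total


print(det([[1, 2], [3, 4]]))                     # ad - bc
print(det([[2, 0, 1], [1, 3, 2], [1, 1, 4]]))    # six signed terms
print(len(list(permutations(range(4)))))         # number of terms for 4 x 4
```

This approach needs n! products, so it is only practical for small matrices; it is shown to mirror the definition, not as an efficient method.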

28. Exterior Powers

28.1 Desiderata
28.2 Definitions, uniqueness, existence
28.3 Some elementary facts
28.4 Exterior powers $\bigwedge^i f$ of maps
28.5 Exterior powers of free modules
28.6 Determinants revisited
28.7 Minors of matrices
28.8 Uniqueness in the structure theorem
28.9 Cartan's lemma
28.10 Cayley-Hamilton Theorem
28.11 Worked examples

While many of the arguments here have analogues for tensor products, it is worthwhile to repeat these arguments with the relevant variations, both for practice, and to be sensitive to the differences.

1. Desiderata

Again, we review missing items in our development of linear algebra. We are missing a development of determinants of matrices whose entries may be in commutative rings, rather than fields. We would like an intrinsic definition of determinants of endomorphisms, rather than one that depends upon a choice of coordinates, even if we eventually prove that the determinant is independent of the coordinates. We anticipate that Artin's axiomatization of determinants of matrices should be mirrored in much of what we do here.

We want a direct and natural proof of the Cayley-Hamilton theorem. Linear algebra over fields is insufficient, since the introduction of the indeterminate $x$ in the definition of the characteristic polynomial takes us outside the class of vector spaces over fields.

We want to give a conceptual proof for the uniqueness part of the structure theorem for finitely-generated modules over principal ideal domains. Multi-linear algebra over fields is surely insufficient for this.

2. Definitions, uniqueness, existence

Let $R$ be a commutative ring with 1. -

A Some Basic Rules of Tensor Calculus

The tensor calculus is a powerful tool for the description of the fundamentals in continuum mechanics and the derivation of the governing equations for applied problems. In general, there are two possibilities for the representation of the tensors and the tensorial equations:

– the direct (symbolic) notation and
– the index (component) notation

The direct notation operates with scalars, vectors and tensors as physical objects defined in the three dimensional space. A vector (first rank tensor) $a$ is considered as a directed line segment rather than a triple of numbers (coordinates). A second rank tensor $A$ is any finite sum of ordered vector pairs $A = a \otimes b + \ldots + c \otimes d$. The scalars, vectors and tensors are handled as invariant (independent from the choice of the coordinate system) objects. This is the reason for the use of the direct notation in the modern literature of mechanics and rheology, e.g. [29, 32, 49, 123, 131, 199, 246, 313, 334] among others.

The index notation deals with components or coordinates of vectors and tensors. For a selected basis, e.g. $g_i$, $i = 1, 2, 3$ one can write

$$a = a^i g_i, \quad A = \left(a^i b^j + \ldots + c^i d^j\right) g_i \otimes g_j$$

Here the Einstein’s summation convention is used: in one expression the twice repeated indices are summed up from 1 to 3, e.g.

$$a^k g_k \equiv \sum_{k=1}^{3} a^k g_k, \quad A^{ik} b_k \equiv \sum_{k=1}^{3} A^{ik} b_k$$

In the above examples $k$ is a so-called dummy index. Within the index notation the basic operations with tensors are defined with respect to their coordinates, e. -
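The summation convention amounts to an explicit loop over the repeated (dummy) index. The sketch below spells out the two example expressions from this excerpt in plain Python. The numerical basis vectors and components are hypothetical values chosen for illustration, and indices run over 0, 1, 2 in code where the text uses 1, 2, 3.

```python
def expand(components, basis):
    """a^k g_k: the vector with components a^k in the basis g_1, g_2, g_3."""
    return [sum(components[k] * basis[k][c] for k in range(3))
            for c in range(3)]


def contract(A, b):
    """A^{ik} b_k: the repeated index k is summed, the free index i remains."""
    return [sum(A[i][k] * b[k] for k in range(3)) for i in range(3)]


# Hypothetical non-orthogonal basis g_1, g_2, g_3 given in Cartesian coordinates.
g = [[1, 0, 0], [1, 1, 0], [1, 1, 1]]
a_components = [2, -1, 3]

# Hypothetical coordinates A^{ik} and b_k.
A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
b = [1, 0, -1]

print(expand(a_components, g))   # Cartesian coordinates of a = a^k g_k
print(contract(A, b))            # the three numbers A^{ik} b_k, i = 1, 2, 3
```

Renaming the dummy index inside either function leaves the result unchanged, which is the sense in which k is a dummy index.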

The Tensor Product and Induced Modules

The Tensor Product and Induced Modules

Nayab Khalid

PPS Talk, University of St. Andrews, October 16, 2015

Overview of the Presentation

1 The Tensor Product
2 A Construction
3 Properties
4 Examples
5 References

The Tensor Product

The tensor product of modules is a construction that allows multilinear maps to be carried out in terms of linear maps. The tensor product can be constructed in many ways, such as using the basis of free modules. However, the standard, more comprehensive, definition of the tensor product stems from category theory and the universal property.

The Tensor Product (via the Universal Property)

Definition. Let $R$ be a commutative ring. Let $E_1, E_2$ and $F$ be modules over $R$. Consider bilinear maps of the form $f : E_1 \times E_2 \to F$.

Definition. The tensor product $E_1 \otimes E_2$ is the module with a bilinear map $\lambda : E_1 \times E_2 \to E_1 \otimes E_2$ such that there exists a unique homomorphism which makes the following diagram commute.

A Construction of the Tensor Product

Here is a more constructive definition of the tensor product. -

Università Degli Studi Di Trieste a Gentle Introduction to Clifford Algebra

Università degli Studi di Trieste
Department of Physics
Degree Course in Physics
Bachelor's Thesis

A Gentle Introduction to Clifford Algebra

Candidate: Daniele Ceravolo
Supervisor: prof. Marco Budinich
Academic Year 2015–2016

Contents

1 Introduction
1.1 Brief Historical Sketch
2 Heuristic Development of Clifford Algebra
2.1 Geometric Product
2.2 Bivectors
2.3 Grading and Blade
2.4 Multivector Algebra
2.4.1 Pseudoscalar and Hodge Duality
2.4.2 Basis and Reciprocal Frames
2.5 Clifford Algebra of the Plane
2.5.1 Relation with Complex Numbers
2.6 Clifford Algebra of Space
2.6.1 Pauli algebra
2.6.2 Relation with Quaternions
2.7 Reflections
2.7.1 Cross Product
2.8 Rotations
2.9 Differentiation
2.9.1 Multivectorial Derivative
2.9.2 Spacetime Derivative
3 Spacetime Algebra
3.1 Spacetime Bivectors and Pseudoscalar
3.2 Spacetime Frames
3.3 Relative Vectors
3.4 Even Subalgebra
3.5 Relative Velocity
3.6 Momentum and Wave Vectors
3.7 Lorentz Transformations
3.7.1 Addition of Velocities
3.7.2 The Lorentz Group
3.8 Relativistic Visualization
4 Electromagnetism in Clifford Algebra
4.1 The Vector Potential
4.2 Electromagnetic Field Strength
4.3 Free Fields
5 Conclusions
5.1 Acknowledgements

Chapter 1 Introduction

The aim of this thesis is to show how an approach to classical and relativistic physics based on Clifford algebras can shed light on some hidden geometric meanings in our models.