The Story of Bridging

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Lecture 10: Switching & Internetworking

Lecture 10: Switching & Internetworking
CSE 123: Computer Networks
Alex C. Snoeren
HW 2 due WEDNESDAY

Lecture 10 Overview
● Bridging & switching
  ◆ Spanning Tree
● Internet Protocol
  ◆ Service model
  ◆ Packet format
CSE 123 – Lecture 10: Internetworking

Selective Forwarding
● Only rebroadcast a frame to the LAN where its destination resides
  ◆ If A sends packet to X, then bridge must forward frame
  ◆ If A sends packet to B, then bridge shouldn’t
[Figure: a bridge connecting LAN 1 and LAN 2, with hosts A, B, C, D and W, X, Y, Z]
CSE 123 – Lecture 9: Bridging & Switching

Forwarding Tables
● Need to know “destination” of frame
  ◆ Destination address in frame header (48-bit in Ethernet)
● Need to know which destinations are on which LANs
  ◆ One approach: statically configured by hand
    » Table, mapping address to output port (i.e. LAN)
  ◆ But we’d prefer something automatic and dynamic…
● Simple algorithm:
    Receive frame f on port q
    Lookup f.dest for output port      /* know where to send it? */
    If f.dest found
      then if output port is q then drop      /* already delivered */
           else forward f on output port;
      else flood f;      /* forward on all ports but the one where frame arrived */

Learning Bridges
● Eliminate manual configuration by learning which addresses are on which LANs

    Host  Port
    A     1
    B     1
    C     1
    D     1
    W     2
    X     2
    Y     3
    Z     2

● Basic approach
  ◆ If a frame arrives on a port, then associate its source address with that port
  ◆ As each host transmits, the table becomes accurate
● What if a node moves? Table aging
  ◆ Associate a timestamp with each table entry
  ◆ Refresh timestamp for each -
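The forwarding, learning, and aging rules in the lecture excerpt above can be sketched in a few lines of Python. This is a minimal illustration, not code from the lecture: the class name `LearningBridge`, the `max_age` default of 300 seconds, and the injectable `clock` parameter are all assumptions made for the example.

```python
import time


class LearningBridge:
    """Toy learning bridge (hypothetical names, not from the lecture)."""

    def __init__(self, ports, max_age=300.0, clock=time.monotonic):
        self.ports = set(ports)
        self.max_age = max_age   # seconds before an entry expires (assumed value)
        self.clock = clock       # injectable so aging can be exercised in tests
        self.table = {}          # address -> (port, time last seen)

    def _lookup(self, addr):
        """Return the port for addr, or None if unknown or aged out."""
        entry = self.table.get(addr)
        if entry is None:
            return None
        port, last_seen = entry
        if self.clock() - last_seen > self.max_age:
            del self.table[addr]  # stale entry: the host may have moved
            return None
        return port

    def receive(self, src, dst, in_port):
        """Process one frame and return the set of ports it is sent out on."""
        # Learn: associate the source address with the arrival port
        # and refresh its timestamp.
        self.table[src] = (in_port, self.clock())
        out_port = self._lookup(dst)
        if out_port is None:
            return self.ports - {in_port}  # flood on all ports but the arrival port
        if out_port == in_port:
            return set()                   # destination is on the arrival LAN: drop
        return {out_port}                  # forward on the learned port


bridge = LearningBridge(ports=[1, 2, 3])
print(bridge.receive("A", "X", in_port=1))  # X unknown: flooded to ports 2 and 3
print(bridge.receive("X", "A", in_port=2))  # A was learned on port 1: forwarded there
print(bridge.receive("B", "A", in_port=1))  # A is on the arrival port: dropped
```

Expiring entries lazily at lookup time keeps the sketch short; a periodic sweep of the table would be an equally reasonable design.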

802.11 BSS Bridging

802.11 BSS Bridging
Contributed by Philippe Klein, PhD, Broadcom
IEEE 802.1/802.11 Study Group, Aug 2012
new-phkl-11-bbs-bridging-0812-v2

The issue
• 802.11 STA devices are end devices that do not bridge to external networks. This:
  – limits the topology of an 802.11 BSS to “stub networks”
  – does not allow a (STA-)AP-STA wireless link to be used as a connecting path (backbone) between other networks
• Partial solutions exist to overcome this lack of bridging functionality, but these solutions are:
  – proprietary only
  – limited to certain types of traffic
  – and/or based on Layer 3 (such as IP Multicast to MAC Multicast translation, or NAT, Network Address Translation)

Coordinated Shared Network (CSN)
[Figure: a CSN network of four CSN nodes (Node 1 to Node 4) on a shared medium, joined by logical unicast links]
• Contention-free, time-division multiplexed-access network of devices sharing a common medium and supporting reserved bandwidth based on priority or flow (QoS).
  – One of the nodes of the CSN acts as the network coordinator, granting transmission opportunities to the other nodes of the network.
• Physically a shared medium, in that a CSN node has a single physical port connected to the half-duplex medium, but logically a fully-connected one-hop mesh network, in that every node can transmit frames to every other node over the shared medium.
• Supports two types of transmission:
  – unicast transmission for point-to-point (node-to-node) transmission
  – multicast/broadcast transmission for point-to-multipoint (node-to-other/all-nodes) transmission. -

ISDN LAN Bridging

NISTIR 5532
ISDN LAN Bridging
Tim Boland
U.S. DEPARTMENT OF COMMERCE, Technology Administration, National Institute of Standards and Technology, Gaithersburg, MD 20899
November 1994
U.S. DEPARTMENT OF COMMERCE, Ronald H. Brown, Secretary
TECHNOLOGY ADMINISTRATION, Mary L. Good, Under Secretary for Technology
NATIONAL INSTITUTE OF STANDARDS AND TECHNOLOGY, Arati Prabhakar, Director

ISDN LAN BRIDGING

1.0 Introduction
This paper will provide guidance which will enable users to properly assimilate Integrated Services Digital Network (ISDN) local area network (LAN) bridging products into the workplace. This technology is expected to yield economic, functional and performance benefits to users. Section 1 (this section) provides some introductory information. Section 2 describes the environment to which this paper applies. Section 3 provides history and status information. Section 4 describes service features of some typical product offerings. Section 5 explains the decisions that users have to make and the factors that should influence their decisions. Section 6 deals with current ISDN LAN bridge interoperability activities. Section 7 gives a high-level summary and future direction.

2.0 ISDN LAN Bridging Environment

2.1 User Environment
ISDN LAN bridge usage should be considered by users who have a need to access a LAN or specific device across a distance of greater than a few kilometers, or by users who are on a LAN and need to access a specific device or another network remotely, and, for both situations, have or are considering ISDN use to accomplish this access. -

Bridging Strategies for LAN Internets

51-20-36 Bridging Strategies for LAN Internets
Nathan J. Muller

Payoff
As corporations continue to move away from centralized computing to distributed, peer-to-peer arrangements, the need to share files and access resources across heterogeneous networks becomes all the more necessary. The need to interconnect dissimilar host systems and LANs may arise from normal business operations or as the result of a corporate merger or acquisition. Whatever the justification, internetworking is becoming ever more important, and the interconnection device industry will grow for the rest of the decade.

Introduction
The devices that facilitate the interconnection of host systems and LANs fall into the categories of repeaters, bridges, routers, and gateways. Repeaters are the simplest devices and are used to extend the range of LANs and other network facilities by boosting signal strength and reshaping distorted signals. Gateways are the most complex devices; they provide interoperability between applications by performing processing-intensive protocol conversions. In the middle of this “complexity spectrum” are bridges and routers. At the risk of oversimplification, traditional bridges implement basic data-level links between LANs that use identical protocols; traditional routers can be programmed for multiple network protocols, thereby supporting diverse types of LANs and host systems over the same WAN facility. However, in many situations the use of routers is overkill and needlessly expensive; routers cost as much as $75,000 for a full-featured, multiport unit, compared with $6,000 to $30,000 for most bridges. The price difference is attributable to the number of protocols supported, the speed of the Central Processing Unit, port configurations, WAN interfaces, and network management features. -

Bridging Internetwork Operating System Release 10.2

Internetwork Operating System Release 10.2
Bridging
Software Release 10.2, September 1994
Corporate Headquarters: 170 West Tasman Drive, San Jose, CA 95134-1706, USA. Phone 408 526-4000, Fax 408 526-4100
Customer Order Number TRN-IRSC-10.2, Text Part Number 209101

The products and specifications, configurations, and other technical information regarding the products contained in this manual are subject to change without notice. All statements, technical information, and recommendations contained in this manual are believed to be accurate and reliable but are presented without warranty of any kind, express or implied, and users must take full responsibility for their application of any products specified in this manual.

This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instruction manual for this device, may cause interference to radio communications. This equipment has been tested and found to comply with the limits for a Class A computing device pursuant to Subpart J of Part 15 of FCC Rules, which are designed to provide reasonable protection against such interference when operated in a commercial environment. Operation of this equipment in a residential area is likely to cause interference, in which case users at their own expense will be required to take whatever measures may be required to correct the interference.

The following third-party software may be included with your product and will be subject to the software license agreement: The Cisco implementation of TCP header compression -

Understanding Linux Internetworking

White Paper by David Davis, ActualTech Media
Understanding Linux Internetworking

In this Paper
• Layer 2 vs. Layer 3 Internetworking
• Layer 2 Internetworking on Linux Systems
  – Bridging
  – Spanning Tree
• Layer 3 Internetworking View on Linux Systems
  – Neighbor Table
  – IP Routing
• Virtual LANs (VLANs)
• Overlay Networks with VXLAN
• In Summary
• Appendix A: The Basics of TCP/IP Addresses
• Appendix B: The OSI Model

Introduction
The Internet: the largest internetwork ever created. In fact, the term Internet (with a capital I) is just a shortened version of the term internetwork, which means multiple networks connected together. Most companies create some form of internetwork when they connect their local-area network (LAN) to a wide area network (WAN). For IP packets to be delivered from one network to another network, IP routing is used, typically in conjunction with dynamic routing protocols such as OSPF or BGP. You can easily use Linux as an internetworking device and connect hosts together on local networks and connect local networks together and to the Internet.

Here’s what you’ll learn in this paper:
• The differences between layer 2 and layer 3 internetworking
• How to configure IP routing and bridging in Linux
• How to configure advanced Linux internetworking, such as VLANs, VXLAN, and network packet filtering

To create an internetwork, you need to understand layer 2 and layer 3 internetworking, MAC addresses, bridging, routing, ACLs, VLANs, and VXLAN.
We’ve got a lot to cover, so let’s get started!

Layer 2 vs. -

Bridging Principles

Bridging Principles

By the end of this session you will be able to...
• Define bridging modes
  – Source Routing
  – Transparent
  – Source Route Transparent (SRT)
• Describe how Spanning Tree functions
Token Ring Bridging

Flexible Frame Forwarding: Choice of Techniques
[Figure: Source Route Bridging, Source Route Transparent Bridging, Transparent Bridging]
• Transparent
  – Ethernet and Token Ring
  – simple to implement
  – not easy to manage in a complex network
• Source Routing
  – Token Ring
  – requires management effort to implement
  – troubleshooting is simplified
• SRT
  – short term combination solution

Bridging Techniques
Transparent: Can be used on both Token Ring and Ethernet networks. Nothing is identified, so implementation is simple. Nothing is identified, so locating problems can be difficult on complex networks.
Source Route Bridging: Designed for Token Ring networks. Requires each ring and bridge to be identified. Locating potential and actual trouble spots is simplified.
SRT: Useful when combining transparent and source routing networks, e.g. when adding a department using ‘the other method’ to a company network. Allows bridges/switches to forward both source routed and transparent frames appropriately. Also allows the bridges/switches to communicate with each other. A short term solution; ultimately MAKE UP YOUR MIND: use source routing OR transparent for the whole network.

What is the purpose of a Bridge?
[Figure: a bridge connecting Ring A and Ring B, with stations 1 to 6]
• Connects two physical rings
• Forwards or Filters Frames
• Single logical network
• Keeps local traffic local
Bridges are used to physically connect two rings. -
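The excerpt above notes that source route bridging requires each ring and bridge to be identified. The reason is that the sending station, not the bridge, chooses the path: the frame carries a list of ring and bridge identifiers, and a bridge forwards only when its own hop appears in that list. The Python sketch below illustrates the idea under simplifying assumptions. The function name `should_forward` and the route encoding (a list of `(ring, bridge)` pairs whose last entry has no bridge) are hypothetical, and the sketch ignores details of real Token Ring frames such as the direction bit and route discovery.

```python
def should_forward(route, bridge_id, in_ring, out_ring):
    """Decide whether a source-route bridge copies a frame between its rings.

    route is a list of (ring, bridge) hops chosen by the sender; the final
    entry names the destination ring and carries no bridge (None here).
    The bridge forwards only if the route contains the exact sequence
    in_ring -> bridge_id -> out_ring.
    """
    hops = zip(route, route[1:])
    return any(
        (ring, bridge, next_ring) == (in_ring, bridge_id, out_ring)
        for (ring, bridge), (next_ring, _next_bridge) in hops
    )


# Route chosen by the sender: ring 1 -> bridge 3 -> ring 2 -> bridge 5 -> ring 4.
route = [(1, 3), (2, 5), (4, None)]

print(should_forward(route, bridge_id=3, in_ring=1, out_ring=2))  # named in the route
print(should_forward(route, bridge_id=7, in_ring=1, out_ring=2))  # a parallel bridge not in the route
```

Because the path is written into the frame, a trace of a misbehaving frame shows exactly which rings and bridges it crossed, which is one way to read the excerpt's remark that locating trouble spots is simplified.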

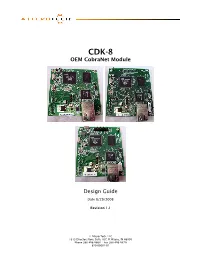

OEM CobraNet Module Design Guide

CDK-8 OEM CobraNet Module Design Guide
Date 8/29/2008, Revision 1.1
© Attero Tech, LLC, 1315 Directors Row, Suite 107, Ft Wayne, IN 46808
Phone 260-496-9668 • Fax 260-496-9879
620-00001-01 CDK-8 Design Guide

Contents
1 – Overview
  1.1 – Notes on Modules
2 – Digital Audio Interface Connectivity
  2.1 – Pin Descriptions
    2.1.1 – Audio clocks
    2.1.2 – Digital audio
    2.1.3 – Serial bridge
    2.1.4 – Control -

Bridging Basics

CHAPTER 3
Bridging Basics

Background
Bridges became commercially available in the early 1980s. At the time of their introduction, bridges connected and enabled packet forwarding between homogeneous networks. More recently, bridging between different networks has also been defined and standardized.

Several kinds of bridging have emerged as important. Transparent bridging is found primarily in Ethernet environments. Source-route bridging is found primarily in Token Ring environments. Translational bridging provides translation between the formats and transit principles of different media types (usually Ethernet and Token Ring). Source-route transparent bridging combines the algorithms of transparent bridging and source-route bridging to allow communication in mixed Ethernet/Token Ring environments.

The diminishing price and the recent inclusion of bridging capability in many routers have taken substantial market share away from pure bridges. Those bridges that have survived include features such as sophisticated filtering, pseudo-intelligent path selection, and high throughput rates. Although an intense debate about the benefits of bridging versus routing raged in the late 1980s, most people now agree that each has its place and that both are often necessary in any comprehensive internetworking scheme.

Internetworking Device Comparison
Internetworking devices offer communication between local area network (LAN) segments. There are four primary types of internetworking devices: repeaters, bridges, routers, and gateways. These devices can be differentiated very generally by the Open System Interconnection (OSI) layer at which they establish the LAN-to-LAN connection. Repeaters connect LANs at OSI Layer 1; bridges connect LANs at Layer 2; routers connect LANs at Layer 3; and gateways connect LANs at Layers 4 through 7. -

Bridging (Networking)

Bridging (networking)

A network bridge is a computer networking device that creates a single, aggregate network from multiple communication networks or network segments. This function is called network bridging.[1] Bridging is distinct from routing. Routing allows multiple networks to communicate independently and yet remain separate, whereas bridging connects two separate networks as if they were a single network.[2] In the OSI model, bridging is performed in the data link layer (layer 2).[3] If one or more segments of the bridged network are wireless, the device is known as a wireless bridge.

[Figure: A high-level overview of network bridging, using the ISO/OSI layers and terminology]

The main types of network bridging technologies are simple bridging, multiport bridging, and learning or transparent bridging.[4][5]

Transparent bridging
Transparent bridging uses a table called the forwarding information base to control the forwarding of frames between network segments. The table starts empty and entries are added as the bridge receives frames. If a destination address entry is not found in the table, the frame is flooded to all other ports of the bridge, flooding the frame to all segments except the one from which it was received. By means of these flooded frames, a host on the destination network will respond and a forwarding database entry will be created. Both source and destination addresses are used in this process: source addresses are recorded in entries in the table, while destination addresses are looked up in the table and matched to the proper segment to send the frame to. -

Cisco Extended Wireless Connectivity for Students and Communities: Bridging the Digital Divide Solution Overview

Solution overview
Cisco public

Cisco Extended Wireless Connectivity for Students and Communities
Bridge the digital divide

Millions of low-income students and households do not have access to fast and reliable internet in their home. What if you could bridge that gap and enable consistent remote learning experiences for all children during the pandemic and beyond? Internet access is more important than ever, not just for students but also for those looking for jobs and trying to efficiently manage life. Cisco® Extended Wireless Connectivity is a solution that enables secure and fast Wi-Fi to any household, helping schools and communities bridge the digital divide.

© 2021 Cisco and/or its affiliates. All rights reserved.

Key benefits
Cisco is uniquely positioned to help build the bridge to securely connect all students and homes. The solution offers:
• Cost-effective operations: Uses existing network infrastructure and internet services to extend connectivity to students and families in their home. This eliminates additional operating fees, as no broadband LTE-based services are required.

Overview
The global pandemic has impacted schools and communities significantly. Overnight, students were learning from home, people were working from home, and many others were put out of work. While difficult for most, lower-income families and households were the most affected. Not everyone has access to reliable and fast internet in their home, impeding the ability to learn, work, and find jobs.

In a recent study, 43% of lower-income parents reported that their children use a cellphone to complete their homework*. Compounding the issue, 40% reported that they must use public Wi-Fi to -

WiMAX Solutions: Airspan’s WiMAX Deployments Around the World

Industry’s most complete WiMAX product family in the widest selection of frequency bands

WiMAX Solutions
Airspan’s WiMAX Deployments Around the World

Airspan - The Recognized Leader in WiMAX
• The widest range of WiMAX products
• The widest range of frequency bands
• The most advanced technologies and features

Airspan is a worldwide leader in broadband wireless with over 400 customers in more than 100 countries.

As a founding member of the WiMAX Forum™, Airspan has led the way in WiMAX, being among the first wave of companies to achieve certification for its base station and end user premises equipment.

Airspan is also leading the race to Mobile WiMAX. Through a careful choice of advanced technologies, the flagship base station HiperMAX is software upgradeable to Mobile WiMAX. The recently announced MicroMAXe also uses the same baseband technology first developed for HiperMAX.

... capability enables them to simultaneously offer both WiMAX and Wi-Fi, thus enabling the service provider to address a variety of markets including fixed, nomadic, portable and Mobile WiMAX together with any Wi-Fi enabled device.

The challenges associated with delivering time critical services such as voice and video over a shared medium such as wireless access are well known. Airspan’s VoiceMAX gives operators the ability to deliver carrier-grade VoIP through a software suite that provides admission control and manages network congestion to deliver the best user experience for VoIP calls across a network operating fixed, nomadic and mobile profiles simultaneously.

Introducing WiMAX
Over 400 customers in more than 100 countries
• Maximize your CAPEX and OPEX returns