Retrofitting Privacy Controls to Stock Android

Recommended publications

Network/Socket Programming in Java

Network/Socket Programming in Java. Rajkumar Buyya.

Elements of C-S Computing: a client, a server, and a network. The client machine sends a request over the network to the server machine, which returns a result.

java.net is used to manage URL streams, client/server sockets, and datagrams.

Part III - Networking: the server listens on ServerSocket(1234) and the client connects with Socket("128.250.25.158", 1234) (Server_name: "manjira.cs.mu.oz.au"); each side has an output/write stream and an input/read stream.

Server side Socket Operations
1. Open Server Socket: ServerSocket server; DataOutputStream os; DataInputStream is; server = new ServerSocket( PORT );
2. Wait for Client Request: Socket client = server.accept();
3. Create I/O streams for communicating with the client: is = new DataInputStream( client.getInputStream() ); os = new DataOutputStream( client.getOutputStream() );
4. Perform communication with the client. Receive from client: String line = is.readLine(); Send to client: os.writeBytes("Hello\n");
5. Close sockets: client.close();

For a multithreaded server: while(true) { i. wait for client requests (step 2 above); ii. create a thread with the "client" socket as parameter (the thread creates streams as in step 3 and does communication as stated in step 4). Remove the thread once service is provided. }

Client side Socket Operations
1. Get connection to server: client = new Socket( server, port_id );
2. Create I/O streams for communicating with the server: is = new DataInputStream( client.getInputStream() ); os = new DataOutputStream( client.getOutputStream() );
3. Perform communication with the server. Receive from server: String line = is.readLine(); Send to server: os.writeBytes("Hello\n"); -

How to XDP (With Snabb)

How to XDP (with Snabb). Max Rottenkolber. Tuesday, 21 January 2020.

Table of Contents: Intro; Creating an XDP socket; Mapping the descriptor rings; Binding an XDP socket to an interface; Forwarding packets from a queue to an XDP socket; Creating a BPF map; Assembling a BPF program; Attaching a BPF program to an interface; Packet I/O; Deallocating the XDP socket.

Intro

Networking in userspace is becoming mainstream. Recent Linux kernels ship with a feature called eXpress Data Path (XDP) that intends to consolidate kernel-bypass packet processing applications with the kernel’s networking stack. XDP provides a new kind of network socket that allows userspace applications to do almost direct I/O with network device drivers while maintaining integration with the kernel’s native network stack. To Snabb, XDP looks like just another network I/O device. In fact, the XDP ABI/API is so similar to a typical hardware interface that it feels a bit like driving a NIC, and not by accident. I think it is fair to describe XDP as a generic interface to Linux network drivers that also has a virtual backend it integrates with: the Linux network stack. In that sense, XDP can be seen as a more hardware-centric sibling of AF_PACKET. I can think of two reasons why XDP might be interesting for Snabb users: • While the Snabb community prefers NICs with open hardware interface specifications, there are a lot of networking devices out there that lack open specifications. -

Improving Networking

IMPERIAL COLLEGE LONDON, FINAL YEAR PROJECT, JUNE 14, 2010. Improving Networking by moving the network stack to userspace. Author: Matthew WHITWORTH. Supervisor: Dr. Naranker DULAY.

Abstract

In our modern, networked world the software, protocols and algorithms involved in communication are among some of the most critical parts of an operating system. The core communication software in most modern systems is the network stack, but its basic monolithic design and functioning has remained unchanged for decades. Here we present an adaptable user-space network stack, as an addition to my operating system Whitix. The ideas and concepts presented in this report, however, are applicable to any mainstream operating system. We show how re-imagining the whole architecture of networking in a modern operating system offers numerous benefits for stack-application interactivity, protocol extensibility, and improvements in network throughput and latency.

Acknowledgements

I would like to thank Naranker Dulay for supervising me during the course of this project. His time spent offering constructive feedback about the progress of the project is very much appreciated. I would also like to thank my family and friends for their support, and also anybody who has contributed to Whitix in the past or offered encouragement with the project.

Contents: 1 Introduction; 1.1 Motivation; 1.1.1 Adaptability and interactivity; 1.1.2 Multiprocessor systems and locking; 1.1.3 Cache performance; 1.2 Whitix; 1.3 Outline; 2 Hardware and the LDL; 2.1 Architectural overview; 2.2 Network drivers; 2.2.1 Driver and device setup -

This Is the Architecture of Android. Android Runs on Top of a Vanilla Kernel with Little Modifications, Which Are Called Androidisms

This is the architecture of Android. Android runs on top of a vanilla kernel with a few modifications, which are called Androidisms. The native userspace includes the init process, a couple of native daemons, hundreds of native libraries and the Hardware Abstraction Layer (HAL). The native libraries are implemented in C/C++ and their functionality is exposed to applications through Java framework APIs. A large part of Android is implemented in Java, and until Android version 5.0 the default runtime was Dalvik. A newer, more performant runtime called ART has since been integrated into Android (first made available as an option in version 4.4, default runtime from version 5.0). Java runtime libraries are defined in the java.* and javax.* packages and are derived from the Apache Harmony project (not Oracle/Sun, in order to avoid copyright and distribution issues), but several components have been replaced and others extended and improved. Native code can be called from Java through the Java Native Interface (JNI). A large part of the fundamental features of Android is implemented in the system services: display, touch screen, telephony, network connectivity, and so on. Some of these services are implemented in native code and the rest in Java. Each service provides an interface which can be called from other components. Android Framework libraries (also called “the framework API”) include classes for building Android applications. On top, we have the stock applications that come with the phone, and other applications that are installed by the user.

Most Linux distributions take the vanilla kernel (from the Linux Kernel Archives) and apply their own patches in order to fix bugs, add specific features and improve performance. -

Post Sockets - a Modern Systems Network API Mihail Yanev (2065983) April 23, 2018

Post Sockets - A Modern Systems Network API. Mihail Yanev (2065983). April 23, 2018.

ABSTRACT

The Berkeley Sockets API has been the de-facto standard systems API for networking. However, designed more than three decades ago, it is not a good match for the networking applications today. We have identified three different sets of problems in the Sockets API. In this paper, we present an implementation of Post Sockets - a modern API, proposed by Trammell et al. that solves the identified limitations. This paper can be used for basis of evaluation of the maturity of Post Sockets and if successful to promote its introduction as a replacement API to Berkeley Sockets.

1. INTRODUCTION

Over the years, writing networked applications has become increasingly difficult. […]

In addition to providing native solutions for problems, establishing optimal connection with a Remote point or structured data communication, we have identified that a common issue with many of the previously provided networking solutions has been leaving the products vulnerable to code injections and/or buffer overflow attacks. The root cause for such problems has mostly proved to be poor memory or resource management. For this reason, we have decided to use the Rust programming language to implement the new API. Rust is a programming language, that provides data integrity and memory safety guarantees. It uses region based memory, freeing the programmer from the responsibility of manually managing the heap resources. This in turn eliminates the possibility of leaving the code vulnerable to resource management errors, as this is handled by the lan- -

Sarath Singapati Inter Process Communication in Android Master of Science Thesis

SARATH SINGAPATI. INTER PROCESS COMMUNICATION IN ANDROID. MASTER OF SCIENCE THESIS. Examiner: Professor Tommi Mikkonen. Examiner and thesis subject approved by The Faculty of Computing and Electrical Engineering on 7th March 2012.

ABSTRACT

TAMPERE UNIVERSITY OF TECHNOLOGY, Master’s Degree Programme in Information Technology. SARATH SINGAPATI: INTER PROCESS COMMUNICATION IN ANDROID. Master of Science Thesis, 45 pages, 4 Appendix pages. June 2012. Major: Software Systems. Examiner: Professor Tommi Mikkonen. Keywords: Android, Google, mobile applications, Process, IPC.

Google's Android mobile phone software platform is currently the big opportunity for application software developers. Android has the potential for removing the barriers to success in the development and sale of a new generation of mobile phone application software. Just as the standardized PC and Macintosh platforms created markets for desktop and server software, Android, by providing a standard mobile phone application environment, creates a market for mobile applications and the opportunity for applications developers to profit from those applications.

One of the main intentions of Android platform is to eliminate the duplication of functionality in different applications to allow functionality to be discovered and invoked on the fly, and to let users replace applications with others that offer similar functionality. The main problem here is how to develop applications that must have as few dependencies as possible, and must be able to provide services to other applications.

This thesis studies the Android mobile operating system, its capabilities in developing applications that communicate with each other and provide services to other applications. As part of the study, a sample application called “Event Planner”, has been developed to experiment how Inter Process Communication works in Android platform, explains how to implement, and use Inter Process Communication (IPC). -

Lesson-3 Case Study of Coding for Sending Application Layer Byte Streams on a TCP/IP Network Router Using RTOS Vxworks

Design Examples and Case Studies of Program Modeling and Programming with RTOS: Lesson-3 Case Study Of Coding For Sending Application Layer Byte Streams on a TCP/IP Network Router Using RTOS VxWorks. Chapter-13 L03: "Embedded Systems - Architecture, Programming and Design", 2015, Raj Kamal, Publs.: McGraw-Hill Education.

1. Specifications

TCP/IP Stack: In the TCP/IP suite of protocols, the application layer transfers the data with appropriate header words to the transport layer. At the transport layer, either the UDP or the TCP protocol is used and additional header words are placed.

[Figure: TCP/IP Stack transmitting subsystem]

TCP/IP Stack: UDP is a connectionless protocol for datagram transfer with a total size, including headers, of less than 2^16 bytes. TCP is a connection-oriented protocol in which data of unlimited size can be transferred in multiple sequences to the internet layer. At the internet layer, the IP protocol is used and IP packets are formed; each packet, with an IP header, transfers to the network through the network driver subsystem.

TCP/IP stack: A TCP/IP stack network driver is available in the RTOSes. It has a library, sockLib, for socket programming. The reader may refer to the VxWorks Programmer’s Guide when using these APIs and sockLib. -

Learning Python Network Programming

Learning Python Network Programming Utilize Python 3 to get network applications up and running quickly and easily Dr. M. O. Faruque Sarker Sam Washington BIRMINGHAM - MUMBAI Learning Python Network Programming Copyright © 2015 Packt Publishing All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the authors, nor Packt Publishing, and its dealers and distributors will be held liable for any damages caused or alleged to be caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. First published: June 2015 Production reference: 1100615 Published by Packt Publishing Ltd. Livery Place 35 Livery Street Birmingham B3 2PB, UK. ISBN 978-1-78439-600-8 www.packtpub.com Credits Authors Project Coordinator Dr. M. O. Faruque Sarker Izzat Contractor Sam Washington Proofreaders Reviewers Stephen Copestake Konstantin Manchev Manchev Safis Editing Vishrut Mehta Anhad Jai Singh Indexer Hemangini Bari Ben Tasker Ilja Zegars Graphics Abhinash Sahu Commissioning Editor Kunal Parikh Production Coordinator Shantanu Zagade Acquisition Editor Kevin Colaco Cover Work Shantanu Zagade Content Development Editor Rohit Singh Technical Editor Saurabh Malhotra Copy Editors Ameesha Green Rashmi Sawant Trishla Singh About the Authors Dr. -

Android's Security Model

1 Android’s Security Model

This chapter will first briefly introduce Android’s architecture, inter-process communication (IPC) mechanism, and main components. We then describe Android’s security model and how it relates to the underlying Linux security infrastructure and code signing. We conclude with a brief overview of some newer additions to Android’s security model, namely multi-user support, mandatory access control (MAC) based on SELinux, and verified boot. Android’s architecture and security model are built on top of the traditional Unix process, user, and file paradigm, but this paradigm is not described from scratch here. We assume a basic familiarity with Unix-like systems, particularly Linux.

Android’s Architecture

Let’s briefly examine Android’s architecture from the bottom up. Figure 1-1 shows a simplified representation of the Android stack.

[Figure 1-1: The Android architecture. Boxes shown: System Apps (Settings/Phone/Launcher/...), User-Installed Apps, Android Framework Libraries (android.*), Java Runtime Libraries (java.*, javax.*), System Services (Activity Mgr./Package Mgr./Window Mgr./...), Dalvik Runtime, Init, Native Daemons, Native Libraries, HAL, Linux Kernel.]

Android Security Internals © 2014 by Nikolay Elenkov

Linux Kernel

As you can see in Figure 1-1, Android is built on top of the Linux kernel. As in any Unix system, the kernel provides drivers for hardware, networking, filesystem access, and process management. Thanks to the Android Mainlining Project, you can now run Android with a recent vanilla kernel (with some effort), but an Android kernel is slightly different from a “regular” Linux kernel that you might find on a desktop machine or a non-Android embedded device. -

Towards Access Control for Isolated Applications

Towards Access Control for Isolated Applications. Kirill Belyaev and Indrakshi Ray, Computer Science Department, Colorado State University, Fort Collins, U.S.A.

Keywords: Access Control, Service and Systems Design, Inter-application Communication.

Abstract: With the advancements in contemporary multi-core CPU architectures, it is now possible for a server operating system (OS), such as Linux, to handle a large number of concurrent application services on a single server instance. Individual application components of such services may run in different isolated runtime environments, such as chrooted jails or application containers, and may need access to system resources and the ability to collaborate and coordinate with each other in a regulated and secure manner. We propose an access control framework for policy formulation, management, and enforcement that allows access to OS resources and also permits controlled collaboration and coordination for service components running in disjoint containerized environments under a single Linux OS server instance. The framework consists of two models and the policy formulation is based on the concept of policy classes for ease of administration and enforcement. The policy classes are managed and enforced through a Linux Policy Machine (LPM) that acts as the centralized reference monitor and provides a uniform interface for accessing system resources and requesting application data and control objects. We present the details of our framework and also discuss the preliminary implementation to demonstrate the feasibility of our approach.

1 INTRODUCTION

The advancements in contemporary multi-core CPU architectures have greatly improved the ability of […] plications for controlling access to OS resources, for regulating the access requests to the application data and control objects used for inter-application communication between service components. -

Android Architecture and Binder

ANDROID ARCHITECTURE AND BINDER DHINAKARAN PANDIYAN, SAKETH PARANJAPE 1. INTRODUCTION “Android is a Linux-based operating system designed primarily for touchscreen mobile devices such as smartphones and tablet computers” [1]. Android is also the world’s most widely used smartphone platform having the highest market share. The platform is made of the open source operating system Android, devices that run it and an extensive repository of applications developed for the OS. The open source nature of the OS allows anybody to freely modify and distribute it. The OS has code written in Assembly, Java, C++ and C. The applications are primarily written in Java; however C++ can also be used. Google PlayStore, the application repository for Android, has more than 700,000 applications. The devices that run Android can have the hardware range from a bare minimum 32MB RAM, 200 MHz processor to those with a state-of-the-art Quad core processor and 2GB of RAM. The primary hardware platform is the ARM architecture. Android’s kernel was based on the Linux 2.6 kernel. The latest version of Android called Jelly Bean has the Linux kernel 3.0.3. Android has added new drivers to the kernel, middleware, libraries and an extensive set of APIs that aid application development on top of the kernel. The relevance of smartphones in today’s world, ubiquity of the Android operating system and intriguing customization of Linux to run on mobile devices make a compelling case to study Android. Through our project we tried to explore the Android software stack and its unique features, primarily the Inter Process Communication (IPC) mechanism – Binder. -

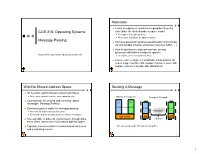

COS 318: Operating Systems Message Passing

COS 318: Operating Systems. Message Passing. (http://www.cs.princeton.edu/courses/cos318/)

Motivation
- Locks, semaphores, monitors are good but they only work under the shared-address-space model
  - Threads in the same process
  - Processes that share an address space
- We have assumed that processes/threads communicate via shared data (counter, producer-consumer buffer, …)
- How to synchronize and communicate among processes with different address spaces?
  - Inter-process communication (IPC)
- Can we have a single set of primitives that work for all cases: single machine OS, multiple machines same OS, multiple machines multiple OS, distributed?

With No Shared Address Space
- No need for explicit mutual exclusion primitives
  - Processes cannot touch the same data directly
- Communicate by sending and receiving explicit messages: Message Passing
- Synchronization is implicit in message passing
  - No need for explicit mutual exclusion
  - Event ordering via sending and receiving of messages
- More portable to different environments, though lacks some of the convenience of a shared address space
- Typically, communication is consummated via a send and a matching receive

Sending A Message
[Figure: within a computer, P1 and P2 exchange Send()/Recv() through the OS kernel; across a network, Send()/Recv() pass through each machine's OS and the network (COS461). P1 can send to P2, P2 can send to P1.]

Simple Send and Receive: send( dest, data ), receive( src, data )
- Send “data” specifies where the data are in sender’s address space
- Recv “data” specifies where the incoming message data should be put in receiver’s address space

Simple API: send( dest, data ), receive( src, data )
- Destination or source
  - Direct address: node Id, process Id
  - Indirect address: mailbox, socket, channel, …
- Data
  - Buffer (addr) and size
  - Anything else that specifies the source data or destination data structure

Simple Semantics
- Send call does not return until data have been copied

Issues/options
- Asynchronous vs.