Populating Categories Using Constrained Matrix Factorization

Recommended publications

See It Big! Action Features More Than 30 Action Movie Favorites on the Big

FOR IMMEDIATE RELEASE ‘SEE IT BIG! ACTION’ FEATURES MORE THAN 30 ACTION MOVIE FAVORITES ON THE BIG SCREEN April 19–July 7, 2019 Astoria, New York, April 16, 2019—Museum of the Moving Image presents See It Big! Action, a major screening series featuring more than 30 action films, from April 19 through July 7, 2019. Programmed by Curator of Film Eric Hynes and Reverse Shot editors Jeff Reichert and Michael Koresky, the series opens with cinematic swashbucklers and continues with movies from around the world featuring white-knuckle chase sequences and thrilling stuntwork. It highlights work from some of the form's greatest practitioners, including John Woo, Michael Mann, Steven Spielberg, Akira Kurosawa, Kathryn Bigelow, Jackie Chan, and much more. As the curators note, “In a sense, all movies are ’action’ movies; cinema is movement and light, after all. Since nearly the very beginning, spectacle and stunt work have been essential parts of the form. There is nothing quite like watching physical feats, pulse-pounding drama, and epic confrontations on a large screen alongside other astonished moviegoers. See It Big! Action offers up some of our favorites of the genre.” In all, 32 films will be shown, many of them in 35mm prints. Among the highlights are two classic Technicolor swashbucklers, Michael Curtiz’s The Adventures of Robin Hood and Jacques Tourneur’s Anne of the Indies (April 20); Kurosawa’s Seven Samurai (April 21); back-to-back screenings of Mad Max: Fury Road and Aliens on Mother’s Day (May 12); all six Mission: Impossible films -

Thematic, Moral and Ethical Connections Between the Matrix and Inception

Thematic, Moral and Ethical Connections Between the Matrix and Inception. Modern-day science and technology have been crucial in the development of the modern film industry. In the past, directors were confined by unwieldy, primitive cameras and the most basic of special effects. The contemporary possibilities now available have enabled modern film to become more dynamic and versatile, whilst also being able to better capture the performance of actors, and better display scenes and settings using special effects. As the prevalence of technology in society increases, film makers have used their medium to express thematic, moral and ethical concerns about technology in human life. The films I will be looking at, The Matrix, directed by the Wachowskis, and Inception, directed by Christopher Nolan, both explore strong themes of technology. The theme of technology is expanded upon as the directors of both films explore the use of augmented reality and its effects on the human condition. Through the use of camera angles, costuming and lighting, the Wachowskis represent the idea that technology is dangerous. During the sequence where Neo is unplugged from the Matrix, the directors have cleverly combined lighting, visual and special effects to craft an ominous and unpleasant setting in which the technology is present. Upon Neo’s confrontation with the spider drone, the use of camera angles establishes the dominance of technology. The low-angle shot of the drone places the viewer in a subordinate position, making the drone appear powerful. The dingy lighting and poor mise-en-scène in the set further reinforce the idea that technology is dangerous. -

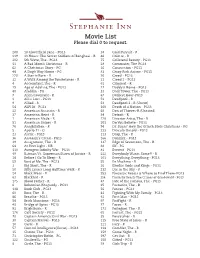

Movie List Please Dial 0 to Request

Movie List Please dial 0 to request. 200 10 Cloverfield Lane - PG13 38 Cold Pursuit - R 219 13 Hours: The Secret Soldiers of Benghazi - R 46 Colette - R 202 5th Wave, The - PG13 75 Collateral Beauty - PG13 11 A Bad Mom’s Christmas - R 28 Commuter, The - PG13 62 A Christmas Story - PG 16 Concussion - PG13 48 A Dog’s Way Home - PG 83 Crazy Rich Asians - PG13 220 A Star is Born - R 20 Creed - PG13 32 A Walk Among the Tombstones - R 21 Creed 2 - PG13 4 Accountant, The - R 61 Criminal - R 19 Age of Adaline, The - PG13 17 Daddy’s Home - PG13 40 Aladdin - PG 33 Dark Tower, The - PG13 7 Alien: Covenant - R 67 Darkest Hour - PG13 2 All is Lost - PG13 52 Deadpool - R 9 Allied - R 53 Deadpool 2 - R (Uncut) 54 ALPHA - PG13 160 Death of a Nation - PG13 22 American Assassin - R 68 Den of Thieves - R (Unrated) 37 American Heist - R 34 Detroit - R 1 American Made - R 128 Disaster Artist, The - R 51 American Sniper - R 201 Do You Believe - PG13 76 Annihilation - R 94 Dr. Seuss’ How the Grinch Stole Christmas - PG 5 Apollo 11 - G 233 Dracula Untold - PG13 23 Arctic - PG13 113 Drop, The - R 36 Assassin’s Creed - PG13 166 Dunkirk - PG13 39 Assignment, The - R 137 Edge of Seventeen, The - R 64 At First Light - NR 88 Elf - PG 110 Avengers: Infinity War - PG13 81 Everest - PG13 49 Batman Vs. Superman: Dawn of Justice - R 222 Everybody Wants Some!! - R 18 Before I Go To Sleep - R 101 Everything, Everything - PG13 59 Best of Me, The - PG13 55 Ex Machina - R 3 Big Short, The - R 26 Exodus Gods and Kings - PG13 50 Billy Lynn’s Long Halftime Walk - R 232 Eye In the Sky - -

What's in a Name? the Matrix As an Introduction to Mathematics

St. John Fisher College Fisher Digital Publications Mathematical and Computing Sciences Faculty/Staff Publications Mathematical and Computing Sciences 9-2008 What's in a Name? The Matrix as an Introduction to Mathematics Kris H. Green St. John Fisher College, [email protected] Follow this and additional works at: https://fisherpub.sjfc.edu/math_facpub Part of the Mathematics Commons How has open access to Fisher Digital Publications benefited you? Publication Information Green, Kris H. (2008). "What's in a Name? The Matrix as an Introduction to Mathematics." Math Horizons 16.1, 18-21. Please note that the Publication Information provides general citation information and may not be appropriate for your discipline. To receive help in creating a citation based on your discipline, please visit http://libguides.sjfc.edu/citations. This document is posted at https://fisherpub.sjfc.edu/math_facpub/12 and is brought to you for free and open access by Fisher Digital Publications at St. John Fisher College. For more information, please contact [email protected]. What's in a Name? The Matrix as an Introduction to Mathematics Abstract In lieu of an abstract, here is the article's first paragraph: In my classes on the nature of scientific thought, I have often used the movie The Matrix to illustrate the nature of evidence and how it shapes the reality we perceive (or think we perceive). As a mathematician, I usually field questions related to the movie whenever the subject of linear algebra arises, since this field is the study of matrices and their properties. So it is natural to ask, why does the movie title reference a mathematical object? Disciplines Mathematics Comments Article copyright 2008 by Math Horizons. -

The Matrix As an Introduction to Mathematics

St. John Fisher College Fisher Digital Publications Mathematical and Computing Sciences Faculty/Staff Publications Mathematical and Computing Sciences 2012 What's in a Name? The Matrix as an Introduction to Mathematics Kris H. Green St. John Fisher College, [email protected] Follow this and additional works at: https://fisherpub.sjfc.edu/math_facpub Part of the Mathematics Commons How has open access to Fisher Digital Publications benefited you? Publication Information Green, Kris H. (2012). "What's in a Name? The Matrix as an Introduction to Mathematics." Mathematics in Popular Culture: Essays on Appearances in Film, Fiction, Games, Television and Other Media, 44-54. Please note that the Publication Information provides general citation information and may not be appropriate for your discipline. To receive help in creating a citation based on your discipline, please visit http://libguides.sjfc.edu/citations. This document is posted at https://fisherpub.sjfc.edu/math_facpub/18 and is brought to you for free and open access by Fisher Digital Publications at St. John Fisher College. For more information, please contact [email protected]. What's in a Name? The Matrix as an Introduction to Mathematics Abstract In my classes on the nature of scientific thought, I have often used the movie The Matrix (1999) to illustrate how evidence shapes the reality we perceive (or think we perceive). As a mathematician and self-confessed science fiction fan, I usually field questions related to the movie whenever the subject of linear algebra arises, since this field is the study of matrices and their properties. So it is natural to ask, why does the movie title reference a mathematical object? Of course, there are many possible explanations for this, each of which probably contributed a little to the naming decision. -

Living in the Matrix: Virtual Reality Systems and Hyperspatial Representation in Architecture

Living in The Matrix: Virtual Reality Systems and Hyperspatial Representation in Architecture Kacmaz Erk, G. (2016). Living in The Matrix: Virtual Reality Systems and Hyperspatial Representation in Architecture. The International Journal of New Media, Technology and the Arts, 13-25. Published in: The International Journal of New Media, Technology and the Arts Document Version: Publisher's PDF, also known as Version of record Queen's University Belfast - Research Portal: Link to publication record in Queen's University Belfast Research Portal Publisher rights © 2016 Gul Kacmaz Erk. Available under the terms and conditions of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License (CC BY-NC-ND 4.0). The use of this material is permitted for non-commercial use provided the creator(s) and publisher receive attribution. No derivatives of this version are permitted. Official terms of this public license apply as indicated here: https://creativecommons.org/licenses/by-nc-nd/4.0/legalcode General rights Copyright for the publications made accessible via the Queen's University Belfast Research Portal is retained by the author(s) and / or other copyright owners and it is a condition of accessing these publications that users recognise and abide by the legal requirements associated with these rights. Take down policy The Research Portal is Queen's institutional repository that provides access to Queen's research output. Every effort has been made to ensure that content in the Research Portal does not infringe any person's rights, or applicable UK laws. If you discover content in the Research Portal that you believe breaches copyright or violates any law, please contact [email protected]. -

What Is the Link Between Religion, Myth and Plot in Any 2 Films of the Science Fiction Genre

Danielle Raffaele Robert A Dallen Prize Topic: A study of the influence of the Bible on the two contemporary science fiction films, The Matrix and Avatar, with a focus on the link between religion, myth and plot. The two films The Matrix (1999, the Wachowski brothers) and Avatar (2009, James Cameron)1 belong to the science fiction genre2 of the contemporary film world and each evoke a diverse set of religiosity and myth within their respective plots. The two films are rich with both overt and subvert religious imagery of motifs and symbolism which one can interpret as belonging to many diverse streams of religious thought and practice across the globe.3 For the purpose of this essay I will be focussing specifically on one religious mode of thought being a Judeo-Christian look at the role of the Messiah and its relation to the hero myth. I will develop this by exploring the archetype of the hero with the two different roles of the biblical Messiah (one being conqueror, and the other of 1 In this essay I presume the reader is familiar with the two films as I do not provide an extensive synopsis or summary of them. I recommend going to the Internet Movie Database (http://imdb.com) and searching for ‘The Matrix’ and ‘Avatar’ for a generous reading of the two plots. 2 Science fiction as a genre will not be discussed in this essay but a definition that struck me as useful for the purpose here is, “My own definition is that science fiction is literature about something that hasn’t happened yet, but might be possible some day. -

3.2 Bullet Time and the Mediation of Post-Cinematic Temporality

3.2 Bullet Time and the Mediation of Post-Cinematic Temporality BY ANDREAS SUDMANN I’ve watched you, Neo. You do not use a computer like a tool. You use it like it was part of yourself. —Morpheus in The Matrix Digital computers, these universal machines, are everywhere; virtually ubiquitous, they surround us, and they do so all the time. They are even inside our bodies. They have become so familiar and so deeply connected to us that we no longer seem to be aware of their presence (apart from moments of interruption, dysfunction—or, in short, events). Without a doubt, computers have become crucial actants in determining our situation. But even when we pay conscious attention to them, we necessarily overlook the procedural (and temporal) operations most central to computation, as these take place at speeds we cannot cognitively capture. How, then, can we describe the affective and temporal experience of digital media, whose algorithmic processes elude conscious thought and yet form the (im)material conditions of much of our life today? In order to address this question, this chapter examines several examples of digital media works (films, games) that can serve as central mediators of the shift to a properly post-cinematic regime, focusing particularly on the aesthetic dimensions of the popular and transmedial “bullet time” effect. Looking primarily at the first Matrix film (1999), as well as digital games like the Max Payne series (2001; 2003; 2012), I seek to explore how the use of bullet time serves to highlight the medial transformation of temporality and affect that takes place with the advent of the digital—how it establishes an alternative configuration of perception and agency, perhaps unprecedented in the cinematic age that was dominated by what Deleuze has called the “movement-image.”[1] 1. -

Unbelievable Bodies: Audience Readings of Action Heroines As a Post-Feminist Visual Metaphor

Unbelievable Bodies: Audience Readings of Action Heroines as a Post-Feminist Visual Metaphor Jennifer McClearen A thesis submitted in partial fulfillment of the requirements for the degree of Master of Arts University of Washington 2013 Committee: Ralina Joseph, Chair LeiLani Nishime Program Authorized to Offer Degree: Communication ©Copyright 2013 Jennifer McClearen Running head: AUDIENCE READINGS OF ACTION HEROINES University of Washington Abstract Unbelievable Bodies: Audience Readings of Action Heroines as a Post-Feminist Visual Metaphor Jennifer McClearen Chair of Supervisory Committee: Associate Professor Ralina Joseph Department of Communication In this paper, I employ a feminist approach to audience research and examine the individual interviews of 11 undergraduate women who regularly watch and enjoy action heroine films. Participants in the study articulate action heroines as visual metaphors for career and academic success and take pleasure in seeing women succeed against adversity. However, they are reluctant to believe that the female bodies onscreen are physically capable of the action they perform when compared with male counterparts—a belief based on post-feminist assumptions of the limits of female physical abilities and the persistent representations of thin action heroines in film. I argue that post-feminist ideology encourages women to imagine action heroines as successful in intellectual arenas; yet, the ideology simultaneously disciplines action heroine bodies to render them unbelievable as physically powerful women. -

22nd Anniversary of the MATRIX: Fans Highlight Interesting Information from This Trilogy

22nd Anniversary of THE MATRIX: fans highlight interesting information from this trilogy The successful Matrix trilogy will be available on HBO Max beginning on June 29th. The sci-fi trilogy, which premiered in 1999, describes a future in which the reality perceived by human beings is the matrix, a simulated reality created by machines in order to pacify the human population. Here is some of the information collected by fans that perhaps you did not know: The Bullet Time Effect was used for the first time in cinema. It is a filming technique that allows you to film specific action scenes in 360 degrees and play them back in slow-motion. Its premiere was so successful that at the time it became Warner Bros’ biggest hit to date (1999). It was the first film in the world to sell 1 million DVDs. Some of the actors that were considered for parts in the project were: Sandra Bullock, Brad Pitt and Leonardo DiCaprio. Keanu Reeves decided to share his earnings with the entire team that took part in filming the movie, such as makeup, sound, lighting, etc. The recognizable code that appears on screens when the Matrix is encoded is a Japanese sushi recipe. The shooting scene in the lobby of the building where the Agents are holding Morpheus took 10 days to film. Ironically, the name Morpheus comes from Greek mythology and means God of sleep, which contradicts his role in the film. The movie was filmed in Australia although the names of the streets in the film come from Chicago. -

2016 FEATURE FILM STUDY Photo: Diego Grandi / Shutterstock.Com TABLE of CONTENTS

2016 FEATURE FILM STUDY Photo: Diego Grandi / Shutterstock.com TABLE OF CONTENTS ABOUT THIS REPORT 2 FILMING LOCATIONS 3 GEORGIA IN FOCUS 5 CALIFORNIA IN FOCUS 5 FILM PRODUCTION: ECONOMIC IMPACTS 8 6255 W. Sunset Blvd. FILM PRODUCTION: BUDGETS AND SPENDING 10 12th Floor FILM PRODUCTION: JOBS 12 Hollywood, CA 90028 FILM PRODUCTION: VISUAL EFFECTS 14 FILM PRODUCTION: MUSIC SCORING 15 filmla.com FILM INCENTIVE PROGRAMS 16 CONCLUSION 18 @FilmLA STUDY METHODOLOGY 19 FilmLA SOURCES 20 FilmLAinc MOVIES OF 2016: APPENDIX A (TABLE) 21 MOVIES OF 2016: APPENDIX B (MAP) 24 CREDITS: QUESTIONS? CONTACT US! Research Analyst: Adrian McDonald Adrian McDonald Research Analyst (213) 977-8636 Graphic Design: [email protected] Shane Hirschman Photography: Shutterstock Lionsgate© Disney / Marvel© EPK.TV Cover Photograph: Dale Robinette ABOUT THIS REPORT For the last four years, FilmL.A. Research has tracked the movies released theatrically in the U.S. to determine where they were filmed, why they filmed in the locations they did and how much was spent to produce them. We do this to help businesspeople and policymakers, particularly those with investments in California, better understand the state’s place in the competitive business environment that is feature film production. For reasons described later in this report’s methodology section, FilmL.A. adopted a different film project sampling method for 2016. This year, our sample is based on the top 100 feature films at the domestic box office released theatrically within the U.S. during the 2016 calendar -

Larger Than Life: Communicating the Scale of Prehistoric CG Animals

Larger than Life: Communicating the Scale of Prehistoric CG Animals Valentina Feldman Digital Media Antoinette Westphal College of Media Arts and Design Drexel University Philadelphia, PA 19104 [email protected] Abstract — Since the earliest days of cinema, toying with the perception of scale has given filmmakers the ability to create spectacular creatures that could never exist in the physical world. With the flexibility of CG visual effects, this trend has persisted in the modern day, and blockbuster movies featuring enormous monsters are just as popular as ever. The trend of scaling creatures to impossible proportions for dramatic effect becomes problematic when filmmakers use this technique on non-fictional creatures. Prehistoric animals in particular have very few scientifically accurate appearances in popular culture, which means that films such as Jurassic Park play an enormous role in determining the public’s view of these animals. When filmmakers arbitrarily adjust the scale of dinosaurs to make them appear more fearsome, it can be detrimental to the widespread ... This statement can hardly be applied to filmmakers, who have historically paid great attention to differences of size. The perception of scale is one of the most widely manipulated aspects of “movie magic,” and has been so since the earliest days of cinema. Films featuring impossibly gigantic creatures have dominated the box office since the record-breaking release of King Kong (1933) [LaBarbera, 2003]. Perhaps unsurprisingly, the trend of giant monsters has only continued with the advancement of visual effects technology. Movie monsters are growing bigger and bigger, and moviemakers show no signs of stopping their ceaseless pursuit of cinematic gigantism.