The Impact of the Microprocessor Anthony Davies



Recommended publications


Considerations for Use of Microcomputers in Developing Country Statistical Offices

Considerations for Use of Microcomputers in Developing Country Statistical Offices. Final Report. Prepared by International Statistical Programs Center, Bureau of the Census, U.S. Department of Commerce. Funded by Office of the Science Advisor, Agency for International Development. Issued October 1983. U.S. Department of Commerce: Malcolm Baldrige, Secretary; Clarence J. Brown, Deputy Secretary. BUREAU OF THE CENSUS: C.L. Kincannon, Deputy Director.

ACKNOWLEDGEMENTS

This study was conducted by the International Statistical Programs Center (ISPC) of the U.S. Bureau of the Census under Participating Agency Services Agreement (PASA) #STB 5543-P-CA-1100-O0, "Strengthening Scientific and Technological Capacity: Low Cost Microcomputer Technology," with the U.S. Agency for International Development (AID). Funding for this project was provided as a research grant from the Office of the Science Advisor of AID. The views and opinions expressed in this report, however, are those of the authors, and do not necessarily reflect those of the sponsor. Project implementation was performed under general management of Robert O. Bartram, Assistant Director for International Programs, and Karl K. Kindel, Chief, ISPC. Winston Toby Riley III provided input as an independent consultant. Study activities and report preparation were accomplished by:

Robert R. Bair -- Principal Investigator
Barbara N. Diskin -- Project Leader/Principal Author
Lawrence I. Iskow -- Author
William K. Stuart -- Author
Rodney E. Butler -- Clerical Assistant
Jerry W. Richards -- Clerical Assistant

ISPC would like to acknowledge the many microcomputer vendors, software developers, users, the United Nations Statistical Office, and AID staff and contractors that contributed to the knowledge and experiences of the study team.

North Star Advantage User Manual

ADVANTAGE User Manual

TABLE OF CONTENTS

1 INTRODUCTION TO THE ADVANTAGE
1.1 THE NORTH STAR ADVANTAGE
1.2 WARRANTY
1.3 ADVANTAGE CONFIGURATION
1.3.1 Video Screen
1.3.2 Keyboard
1.3.3 Disk Drives
1.3.4 Diskettes
1.3.5 Demonstration/Diagnostic Diskette
1.4 SOFTWARE FOR THE ADVANTAGE
1.4.1 Operating Systems
1.4.2 Languages and Application Programs
1.5 LINE-PRINTER
1.6 USING THIS MANUAL

2 ADVANTAGE OPERATION
2.1 START-UP
2.2 DISK DRIVE UTILIZATION
2.3 INSERTING DISKETTES
2.4 LOADING THE SYSTEM
2.5 STANDARD KEY FUNCTIONS
2.5.1 Conventional Typewriter Keys
2.5.2 Numeric Pad Keys
2.5.3 Cursor Control Keys
2.5.4 Program Control Keys
2.5.5 Function Keys
2.6 RESET
2.6.1 Keyboard Reset
2.6.2 Push Button Reset
2.7 ENDING A WORK SESSION

3 RECOMMENDED PROCEDURES
3.1 DISKETTE CARE
3.1.1 Inserting and Removing Diskettes
3.1.2 Backing Up Diskettes
3.1.3 Copying System Diskettes
3.1.4 Copying Data Diskettes
3.1.5 Write-Protect Tab
3.1.6 Labelling Diskettes
3.1.7 Storing Diskettes
3.1.8 A Word of Encouragement
3.2 ADVANTAGE MAINTENANCE

4 TROUBLESHOOTING
4.1 TROUBLESHOOTING PROCEDURES
4.2 CHANGING THE FUSE

APPENDIX A SPECIFICATIONS
APPENDIX B UNPACKING
APPENDIX C INSTALLATION
APPENDIX D GLOSSARY

FIGURES AND TABLES

FIGURES
1 INTRODUCTION TO THE ADVANTAGE
Figure 1-1 The ADVANTAGE
Figure 1-2 Video Screen
Figure 1-3 Keyboard

Pv352vf2103.Pdf

ASSOCIATION OF COMPUTER USERS, VOLUME 3.1, NUMBER 4, APRIL 1980. In This Issue: CROMEMCO's System Two and Z-2H.

BENCHMARK REPORT is published and distributed by The Association of Computer Users, a not-for-profit user association, and authored by the Business Research Division of the University of Colorado. ACU's distribution of BENCHMARK REPORT is solely for the information and independent evaluation of its members, and does not in any way constitute verification of the data contained, concurrence with any of the conclusions herein, or endorsement of the products mentioned. © Copyright 1980, ACU. No part of this report may be reproduced without prior written permission from the Association of Computer Users. First class postage paid at Boulder, Colorado 80301.

CROMEMCO MODELS SYSTEM TWO AND Z-2H: BENCHMARK REPORT

TABLE OF CONTENTS
Preface
Executive Summary
Summary of Benchmark Results
Benchmarks: The Process: Cromemco Models System Two and Z-2H
Overview of Programs and Results
Detail Pages: Pricing Components, Hardware Components, Software Components, Support Services
Summary of User Comments
Conclusions

PREFACE

These two models from Cromemco, the System Two and the Z-2H, are evaluated in this fourth report covering small computing systems. Previously reviewed in this series have been the Texas Instruments 771, the Pertec PCC 2000, and the North Star Horizon. And still to come are eight more systems in the under-$15,000 price range. The goal of this series is to provide users with comparative information on a number of small systems, information which will be valuable in selecting from among the many alternatives available. We have found that many published comparisons of computing systems report only the technical specifications supplied by manufacturers, and such information is difficult to interpret and seldom comparable across different computers.

Creative Computing Magazine Is Published Bi-Monthly by Creative Computing

The #1 magazine of computer applications and software

Consumer Computers Buying Guide
Path Analysis
Electronic Game Reviews
Mail Label Programs

Someday all terminals will be smart. 128 functions, software controlled. 82 x 16 or 92 x 22 format, plus graphics. 7 x 12 matrix, upper/lower case letters. Printer output port. 50 to 38,400 baud, selectable. "CHERRY" keyboard. CT-82 Intelligent Terminal, assembled and tested: $795.00 ppd in Cont. U.S. SOUTHWEST TECHNICAL PRODUCTS CORPORATION, 219 W. RHAPSODY, SAN ANTONIO, TEXAS 78216. CIRCLE 106 ON READER SERVICE CARD.

Give Creative Computing to a friend. For faster service, call toll free 800-631-8112 (in NJ, 201-540-0445).

TYPE OF SUBSCRIPTION (USA / Foreign Surface / Foreign Air)
12 issues: $15 / $23 / $39
24 issues: $28 / $44 / $76
36 issues: $40 / $64 / $112
Lifetime: $300 / $400 / $600

Gifts cannot be gift wrapped but a card with your name will be sent with each order.

BOOKS AND MERCHANDISE: Books shipping charge $1.00 USA, $2.00 Foreign. NJ residents add 5% sales tax. Book orders from individuals must be prepaid.

PAYMENT INFORMATION: Cash, check or M.O. enclosed; Visa/BankAmericard; Master Charge; Please bill me ($1.00 billing fee will be added).

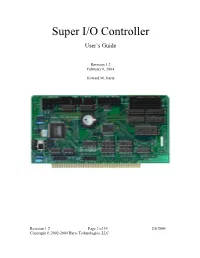

Super I/O Controller

Super I/O Controller User’s Guide, Revision 1.2, February 6, 2004. Howard M. Harte. Revision 1.2, Page 1 of 34, 2/6/2004. Copyright © 2002-2004 Harte Technologies, LLC.

First Edition (February 2004)

The following paragraph does not apply to the United Kingdom or any country where such provisions are inconsistent with local law: HARTE TECHNOLOGIES, LLC PROVIDES THIS PUBLICATION “AS IS” WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of express or implied warranties in certain transactions, therefore, this statement may not apply to you. This publication could include technical inaccuracies or typographical errors. Changes are periodically made to the information herein; these changes will be incorporated in new revisions of the publication. Harte Technologies, LLC may make improvements and/or changes in the products and/or the programs described in this publication at any time.

© Copyright 2000-2004, Harte Technologies, LLC. All rights reserved.

Table of Contents
1. Introduction
1.1. System Requirements
1.2. Super-I/O Controller Packing List

North-Star-Advantage-Product-Brochure

Integrated Desk Top Computer with 12 inch Bit-Mapped Graphics or Character Display, 64Kb RAM, 4 MHz Z80A, Two Quad Capacity Floppy Disk Drives, Selectric® Style 87 Key Keyboard, Business Graphics Software

The North Star ADVANTAGE™ is an interactive integrated graphics computer supplying the single user with a balanced set of Business-Data, Word, or Scientific-Data processing capabilities along with both character and graphics output. ADVANTAGE is fully supported by North Star's wide range of System and Application Software.

The ADVANTAGE contains a 4 MHz Z80A® CPU with 64Kb of 200 nsec Dynamic RAM (with parity) for program storage, a separate 20Kb 200 nsec RAM to drive the bit mapped display, a 2Kb bootstrap PROM and an auxiliary Intel 8035 microprocessor to control the keyboard and floppy disks.

... other parallel devices, a serial (RS-232C) port or a North Star Floating Point Board (FPB) for substantial computational performance increase. Sufficient power (115V or 230V, 60 or 50 Hz) is also contained within the lightweight chassis.

Included with the ADVANTAGE system is a system diskette containing a Business Graphics package, a complete system diagnostic program and a Graphics Demo package.

This powerful, compact and self-sufficient computer is further enhanced by a broad selection of Systems and Application software. For the business user North Star offers proprietary Application Software modules controlled by North Star's proprietary Application Support Program (ASP). For a wide variety of commercial, scientific or industrial applications North Star's graphics version of the industry standard CP/M® is offered. For the computation-intensive or graphics-intensive appli

North Star Brochure May 1980

North Star Computers, Inc. is located in Berkeley, California, and was incorporated in June, 1976. The company offers the Horizon computer and other high-performance, high-quality microcomputer products, both hardware and software. The North Star reputation is based on the quality, performance, reliability and cost-effectiveness of its products. North Star intends to maintain its reputation for delivering well designed microcomputer products by continuing to use only the best components available, assuring a high degree of quality control, and providing maximum service to its customers through carefully chosen, well-trained Authorized Dealers and Service Centers throughout the world.

In its relatively short corporate history, North Star has become one of the most successful enterprises in the young microcomputer industry. The company began as a small operation, manufacturing a single circuit board that would perform numerical

In 1978, North Star introduced the Horizon® computer. The Horizon is a high-performance microcomputer system with integrated floppy disk memory, and includes North Star BASIC and Disk Operating System (DOS) on diskette.

The Horizon was designed for a wide range of applications, and quickly became one of the most popular units in the personal computing market place.

North Star has enjoyed rapid growth since its beginnings. We have moved three times in as many years to accommodate increased production demand and have grown from two employees to well over a hundred. With the latest move, North Star has tripled facilities to over 50,000 square feet of manufacturing and administrative space.

As North Star grows, our commitment to technical innovation and services to our dealers and customers will grow with us.

Lifeboat Associates CPM2 Users Notes for Altair Disk

CP/M2 ON MITS DISK USER'S NOTES BY LIFEBOAT ASSOCIATES, 1651 THIRD AVENUE, NEW YORK, N.Y. 10028. TELEPHONE 212 860-0300, TELEX 220501. COPYRIGHT (C) 1981

**NOTE** The name "MITS" is a registered trademark of Micro Instrumentation and Telemetry Systems, Albuquerque, NM. "CP/M" is copyright and trademark of Digital Research, Pacific Grove, CA. "Z80" is a trademark of Zilog Inc., Cupertino, CA. This manual and portions of this software system are copyright by Lifeboat Associates, New York, N.Y. License to use this copyright material is granted to an individual for use on a single computer system only after execution and return of the registration card to Lifeboat Associates and Digital Research.

This document was created by Patrick Linstruth from information contained in “CP/M on MITS DISK – USERS NOTES”, April 27, 1977, and “CP/M2 ON NORTH STAR – DOUBLE DENSITY – QUAD CAPACITY USER’S NOTES”, December 1979, produced by Lifeboat Associates. Errors may be reported at https://github.com/deltecent/lifeboat-cpm22. Revision 1.0, April 2020

TABLE OF CONTENTS
INTRODUCTION
GENERAL INFORMATION
CP/M AND THE ALTAIR SYSTEM
WHAT IS CP/M?
A BRIEF HISTORY OF CP/M
GETTING STARTED
YOUR CP/M

GUI-Interface North Star HORIZON Emulator User Guide

NSE North Star Horizon Z80 Computer Emulator. GUI Version. Copyright (1995-2021) Jack Strangio and Others. This software is released under the General Public License, Version 2. 20th April 2021. North Star Emulator User's Guide (GUI Version)

TABLE OF CONTENTS

0. TABLE OF CONTENTS
0.0 Table of Contents
0.1 Images and printouts

1. INTRODUCTORY INFORMATION
1.1 Overview
1.2 Other Z80 Emulators
1.3 Attributions for Others' Code in NSE
1.4 Thanks
1.5 See Also ...
1.6 Floppy Disks and a Hard Disk Supplied with NSE
1.7 Screen Views
1.8 NSE Command-Line Startup Options
1.9 Startup examples
1.10 STARTUP CONFIGURATION
1.10 The Work Directory
1.11 Configuration Files
1.12 User Configuration Files: nse.conf

2. OBTAINING AND BUILDING NSE
2.1 Linux Libraries Required
2.2 Get the Source Files From https://itelsoft.com.au/code/nse_latest.tar.gz
2.3 What’s in the /home/username/nse work-directory?
2.4 Starting up NSE
2.5 Running North Star Horizon CP/M. The ‘go’ button
2.6 Pausing the Emulator. The ‘pause’ button
2.7 Rebooting/Resetting the Computer. The ‘reset’ button
2.8 Finishing Up. The ‘exit’ button
2.9 The ‘Status’ Window

3. The ‘OPTIONS’ MENU
3.1 Disk Management
3.2 Toggle Capslock ON and OFF
3.3 Use ‘aread’ Input
3.3 Toggle HD Delay ON/OFF
3.4 Allocate I/O Port Files
3.5 TEXT COLOR OF THE EMULATOR OUTPUT

4.

North Star System Software Manual

North Star Computers, Inc., 2547 Ninth Street, Berkeley, Ca. 94710. North Star System Software Manual. Copyright © 1979, North Star Computers, Inc. SOFT-DOC Revision 2.1 25013

PREFACE

This manual describes all the system software that is included with a North Star HORIZON computer or Micro Disk System. Use of the North Star Disk Operating System (DOS), Monitor, and BASIC are described in three of the major sections of this manual. The first major section, GETTING STARTED, describes the initial procedure required to begin using the North Star software. The table of contents for all the major sections of this manual follows this preface. Two indexes for the BASIC section appear at the very end of the manual. If you receive errata sheets for this manual, be sure to incorporate all the corrections into the manual, or attach the errata sheets to the manual.

This manual applies to North Star system software diskettes stamped "RELEASE 5" or "RELEASE 5.X" where X is a digit indicating the update number. If you are working with earlier releases of North Star software, you should order a copy of the most recent release to take full advantage of all the features described in this manual. This manual covers both single-density and double-density versions of the North Star software. Differences between single- and double-density versions are noted in the text.

Other software available for your North Star system is not described here. For example, North Star Pascal and the North Star Software Exchange diskettes are not described here. Consult a North Star Catalog, Newsletter, or your local computer dealer for up-to-date descriptions of available North Star software.

18Mb Hard Disk Drive!

New on the North Star Horizon: 18Mb Hard Disk Drive!

Horizon Computer with 64K RAM and dual quad capacity (720kb) floppy disks. Up to four 18Mb Winchester-type hard disk drives. Display terminal. Letter-quality or dot matrix printer. Horizon I/O flexibility allows expansion to meet your needs.

Unsurpassed Performance and Capacity!

North Star now gives you hard disk capacity and processing performance never before possible at such a low price! Horizon is a proven, reliable, affordable computer system with unique hardware and software. Now the Horizon's capabilities are expanded to meet your growing system requirements. In addition to hard disk performance, the Horizon has I/O versatility and an optional hardware floating point board for high-performance number crunching. The North Star large disk is a Century Data Marksman, a Winchester-type drive that holds 18 million bytes of formatted data. The North Star controller interfaces the drive(s) to the Horizon and takes full advantage of the high-performance characteristics of the drive. Our hard disk operating system implements a powerful file system as well as backup and recovery on floppy diskette.

Software Is The Key!

The Horizon's success to date has been built on the quality of its system software (BASIC, DOS, PASCAL) and the very broad range and availability of application software. This reputation continues with our new hard disk system. Existing software is upward compatible for use with the hard disk system. And, with the dramatic increase in on-line storage and speed, there will be a continually expanding library of readily available application software.

Retrieval of Immunoelectrophoretic Data (12)

INTERPRETIVE REPORTING OF PROTEIN ELECTROPHORESIS DATA BY MICROCOMPUTER

Thomas S. Talamo, M.D., Frank J. Losos, III, MT(ASCP), G. Frederick Kessler, M.D. Department of Pathology, Montefiore Hospital and the University Health Center of Pittsburgh, Pittsburgh, Pennsylvania

ABSTRACT

A microcomputer based system for interpretive reporting of protein electrophoretic data has been developed. Data for serum, urine and cerebrospinal fluid protein electrophoreses as well as immunoelectrophoresis can be entered. Patient demographic information is entered through the keyboard followed by manual entry of total and fractionated protein levels obtained after densitometer scanning of the electrophoretic strip. The patterns are then coded, interpreted, and final reports generated. In most cases interpretation time is less than one second. Misinterpretation by computer is uncommon and can be corrected by edit functions within the system. These discrepancies between computer and path-

... microcomputer is currently interfaced with a SOROC IQ-120 cathode ray tube (CRT) for visual display, programming and inputting of patient data, and a DEC (Digital Equipment Corporation) LA-120 dot matrix printer. The Monitor disc operating system (version 5.0) and North Star extended disc BASIC were provided by the manufacturer. The entire program package is written in BASIC and is adaptable to other computer systems with similar hardware employing BASIC as the programmable language.

Serum, urine and cerebrospinal fluid electrophoreses were performed using an agarose gel system (Pol-E-Film R). The strips were scanned with a Beckman model CDS-200 densitometer after electrophoresis for 45 minutes in 0.05 molar sodium barbital buffer and stained with Amido Black.