The Effect of a New Hausa Language Television Station on Attitudes About

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Inequality in Nigeria 12

Photo: Moshood Raimi/Oxfam. Acknowledgement: This report was written and coordinated by Emmanuel Mayah, an investigative journalist and the Director of Reporters 360; Chiara Mariotti (PhD), Inequality Policy Manager; Evelyn Mere, who is Associate Country Director, Oxfam in Nigeria; and Celestine Okwudili Odo, Programme Coordinator Governance, Oxfam in Nigeria. Several Oxfam colleagues gave valuable input and support to the finalisation of this report, and therefore deserve special mention. They include: Deborah Hardoon, Nick Galasso, Paul Groenewegen, Ilse Balstra, Henry Ushie, Chioma Ukwuagu, Safiya Akau, Max Lawson, Head of Inequality Policy Oxfam International, and Jonathan Mazliah, a former Oxfam staffer. Our partners also made invaluable contributions in the campaign strategy development and report review process. We wish to thank BudgIT Information Technology Network; National Association of Nigeria Traders (NANTS), Civil Society Legislative Advocacy Centre (CISLAC), Niger Delta Budget Monitoring Group (NDEBUMOG), KEBETKACHE Women Development and Resource Centre and the African Centre for Corporate Responsibility (ACCR). Ruona J. Meyer and Thomas Fuller did an excellent job editing the report, while the production process was given a special touch by BudgIT Information Technology Network, our Inequality Campaign partner. © Oxfam International May 2017. This publication is copyright but the text may be used free of charge for the purposes of advocacy, campaigning, education, and research, provided that the source is acknowledged in full. The copyright holder requests that all such use be registered with them for impact assessment purposes. For copying in any other circumstances, or for re-use in other publications, or for translation or adaptation, permission must be secured and a fee may be charged.

Guidelines for Inclusion: Ensuring Access to Education for All Acknowledgments

Guidelines for Inclusion: Ensuring Access to Education for All. Acknowledgments: In UNESCO’s efforts to assist countries in making National Plans for Education more inclusive, we recognised the lack of guidelines to assist in this important process. As such, the Inclusive Education Team began an exercise to develop these much needed tools. The elaboration of this manual has been a learning experience in itself. A dialogue with stakeholders was initiated in the early stages of elaboration of this document. “Guidelines for Inclusion: Ensuring Access to Education for All”, therefore, is the result of constructive and valuable feedback as well as critical insight from the following individuals: Anupam Ahuja, Mel Ainscow, Alphonsine Bouya-Aka Blet, Marlene Cruz, Kenneth Eklindh, Windyz Ferreira, Richard Halperin, Henricus Heijnen, Ngo Thu Huong, Hassan Keynan, Sohae Lee, Chu Shiu-Kee, Ragnhild Meisfjord, Darlene Perner, Abby Riddell, Sheldon Shaeffer, Noala Skinner, Sandy Taut, Jill Van den Brule-Balescut, Roselyn Wabuge Mwangi, Jamie Williams, Siri Wormnæs and Penelope Price. Published in 2005 by the United Nations Educational, Scientific and Cultural Organization, 7, place de Fontenoy, 75352 PARIS 07 SP. Composed and printed in the workshops of UNESCO. © UNESCO 2005. Printed in France (ED-2004/WS/39 cld 17402). Foreword: This report has gone through an external and internal peer review process, which targeted a broad range of stakeholders including within the Education Sector at UNESCO headquarters and in the field, Internal Oversight Service (IOS) and Bureau of Strategic Planning (BSP). These guidelines were also piloted at a Regional Workshop on Inclusive Education in Bangkok.

Gender Equality: Glossary of Terms and Concepts

GENDER EQUALITY: GLOSSARY OF TERMS AND CONCEPTS. UNICEF Regional Office for South Asia, November 2017. Rui Nomoto.

GLOSSARY

AA-HA! Accelerated Action for the Health of Adolescents: A global partnership, led by WHO and of which UNICEF is a partner, that offers guidance in the country context on adolescent health and development and puts a spotlight on adolescent health in regional and global health agendas.

Adolescence: The second decade of life, from the ages of 10-19. Young adolescence is the age of 10-14 and late adolescence age 15-19. This period between childhood and adulthood is a pivotal opportunity to consolidate any loss/gain made in early childhood. All too often adolescents - especially girls - are endangered by violence, limited by a lack of quality education and unable to access critical health services. UNICEF focuses on helping adolescents navigate risks and vulnerabilities and take advantage of opportunities.

freedoms in the political, economic, social, cultural, civil or any other field” [United Nations, 1979. ‘Convention on the Elimination of all forms of Discrimination Against Women,’ Article 1]. Discrimination can stem from both law (de jure) or from practice (de facto). The CEDAW Convention recognizes and addresses both forms of discrimination, whether contained in laws, policies, procedures or practice.

• de jure discrimination: e.g., in some countries, a woman is not allowed to leave the country or hold a job without the consent of her husband.

• de facto discrimination: e.g., a man and woman may hold the same job position and perform the same duties, but their benefits may differ.

PP Equal Access to Education

ATUMUN SOMMERCAMP 2021 United Nations Educational, Scientific and Cultural Organization Position Papers: Equal Access to Education. Introduction. Dear Delegates, The following documents are a collection of position papers for the countries represented in our ATUMUN conference on the topic of Equal Access to Education. We expect you to have read the Study Guide beforehand and hope that you’ve had the time to research by yourself as well. It is of great importance that you read the Position Paper for your respective country before the session begins, as this will serve as the very basis of the negotiations. When reading your Position Paper, it is important to note the central view that your country represents. We highly recommend that you complement your Position Paper with your own research. The Position Papers are kept short so that you can draw your own conclusions in addition to the position that the paper reveals. To have a fruitful debate, we highly recommend that you read other countries' Position Papers as well. By doing so, you will get a better understanding of the topic and of the different perspectives that the countries represent. Lastly, we would like to thank our fellow co-writers, Kresten Knøsgaard, Julie Skogstad Blaabjerg, Hekmatullah Akbari, Laurits Rasmussen, Anna Møller Yang and Simon Thomsen, for taking the time to help us write these Position Papers. If you have any questions, we would like to remind you that our inbox is open, and if you need any help with your research, we refer you to the sections Questions a resolution should answer, Further reading, Bloc positions and Background in the Study Guide.

Securing Women's Land Rights Scaling for Impact in Kenya

Working paper 1: Securing women land rights in Africa, July 2018. Securing women’s land rights: Scaling for impact in Kenya. ActionAid Kenya, GROOTS Kenya and LANDac.

About the programme: Despite their key role in agriculture, in many regions in Africa, women do not have equal access and rights over land and natural resources. To support the women’s land rights agenda and to build on a growing momentum following the Women2Kilimanjaro initiative, LANDac in cooperation with grassroots and development organisations, including ENDA Pronat in Senegal, GROOTS Kenya and ActionAid Kenya in Kenya, ADECRU and Fórum Mulher in Mozambique, and Oxfam in Malawi have implemented a year-long action research programme: Securing Women’s Land Rights in Africa: Scaling Impact in Senegal, Kenya, Malawi and Mozambique.

Introduction: Kenya is a leading country supporting the African movement for women’s rights to land. Legal frameworks and the constitution claim equal access over land and natural resources for women and men. Yet there is a lack of accurate data on the status of women’s land rights. Studies indicate that many Kenyan women do not have access and control over land (Doss et al. 2015; Musangi 2017). Women provide 89 per cent of labour in subsistence farming and 70 per cent of cash crop labour (Kenya Land Alliance 2014). About 32 per cent of households are headed by women (ibid). Yet most women do not own land or movable property. At best, women enjoy rights through their relationship to men either as their husbands, fathers, brothers or sons who own and control land.

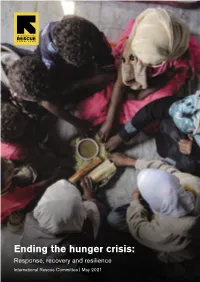

Ending the Hunger Crisis

Ending the hunger crisis: Response, recovery and resilience. International Rescue Committee | May 2021

Acknowledgements: Written by Alicia Adler, Ellen Brooks Shehata, Christopher Eleftheriades, Laurence Gerhardt, Daphne Jayasinghe, Lauren Post, Marcus Skinner, Helen Stawski, Heather Teixeira and Anneleen Vos with support from Louise Holly and research assistance by Yael Shahar and Sarah Sakha. Thanks go to colleagues from across the IRC for their helpful insights and important contributions. Front cover: Family eating a meal, Yemen. Saleh Ba Hayan/IRC

Company Limited by Guarantee Registration Number 3458056 (England and Wales). Charity Registration Number 1065972.

Contents: Executive summary; Introduction; 1. Humanitarian cash to prevent hunger; 2. Malnutrition prevention and response; 3. Removing barriers to humanitarian access; 4. Climate-resilient food systems that empower women and girls; 5. Resourcing famine prevention and response; Conclusion and recommendations; Annex 1; Endnotes

Executive summary

Millions on the brink of famine

A global hunger crisis – fuelled by conflict, economic turbulence and climate-related shocks – has been exacerbated by the COVID-19 pandemic. The number of people experiencing food insecurity and hunger has risen since the onset of the pandemic. The IRC estimates that the economic downturn alone will drive the number of hungry people up by an additional 35 million in 2021. Without drastic action, the economic downturn caused by COVID-19 will suspend global progress towards ending hunger by at least five years.

Of further concern are the 34 million people currently experiencing emergency levels of acute food insecurity who according to WFP and FAO warnings are on the brink of

As the world’s largest economies, members of the G7 must work with the international community to urgently deliver more aid to countries at risk of acute food insecurity.

Programs – Voices for Peace JOB DESCRIPTION

Equal Access International Deputy Chief of Party - Programs – Voices for Peace JOB DESCRIPTION Job Title: Deputy Chief of Party - Programs, Voices for Peace Location: Abidjan, Côte d’Ivoire Travel Requirements: Roughly 50% travel to Burkina Faso, Cameroon, Chad, Mali, and Niger, as well as other regions as assigned. REPORTING RELATIONSHIP: Reports to: Chief of Party BACKGROUND: Equal Access International (EAI), a non-governmental organization based in Washington, D.C., partners with communities around the world to co-create sustainable solutions utilizing community engagement and participatory media and technology. With funding from bi-laterals, multi-laterals, foundations, corporations and individual donors, EAI has a 17-year track record implementing media and social change projects and currently operates in Afghanistan, Burkina Faso, Cameroon, Chad, Kenya, Mali, Nepal, Niger, Nigeria, Pakistan, and the Philippines. EAI seeks a technically focused Deputy Chief of Party (DCOP) to lead program oversight and delivery, monitoring and evaluation, documentation and reporting, and research and learning of our regional USAID-funded Voices for Peace (V4P) program and represent the organization both in the operating countries and internationally. POSITION SUMMARY: EAI is looking for a Deputy Chief of Party - Programs for its V4P program. In September 2016, EAI began implementation of the five-year regional program to counter violent extremism (VE) and promote democracy, human rights, and governance. The project is now fully operational in Burkina Faso, Cameroon, Chad, Mali, and Niger. Through the project, Equal Access International works with radio station partners to become centers of knowledge-sharing and dialogue, while empowering dialogue, local governance, and civil society mobilization by addressing root causes of VE and building community resilience and agency. -

Gender Inequality, Poverty and Human Development in Kenya: Main Indicators, Trends and Limitations*

ISSN: 1442-8563 SOCIAL ECONOMICS, POLICY AND DEVELOPMENT Working Paper No. 35 Gender Inequality, Poverty and Human Development in Kenya: Main Indicators, Trends and Limitations by Tabitha Kiriti and Clem Tisdell June 2003 THE UNIVERSITY OF QUEENSLAND ISSN 1442-8563 WORKING PAPERS ON SOCIAL ECONOMICS, POLICY AND DEVELOPMENT Working Paper No. 35 Gender Inequality, Poverty and Human Development in Kenya: Main Indicators, Trends and Limitations* by Tabitha Kiriti† and Clem Tisdell‡ © All rights reserved * The authors would like to thank the Directorate of Personnel Management (DPM) in Kenya and the African Economic Research Consortium (AERC) for financial support. As usual the opinions do not necessarily reflect those of the DPM or AERC and any errors are the responsibility of the authors. † Tabitha Kiriti is a lecturer in Economics on study leave from the University of Nairobi. Email: [email protected] ‡ School of Economics, The University of Queensland, Brisbane QLD 4072, Australia Email: [email protected] WORKING PAPERS IN THE SERIES, Social Economics, Policy and Development are published by School of Economics, University of Queensland, 4072, Australia. They are designed to provide an initial outlet for papers resulting from research funded by the Australian Research Council in relation to the project ‘Asset Poor Women in Development’, Chief Investigator: C.A. Tisdell and Partner Investigators: Associate Professor K.C. Roy and Associate Professor S. Harrison. However this series will also provide an outlet for papers on related topics. Views expressed in these working papers are those of their authors and not necessarily of any of the organisations associated with the Project. -

Equality and Non-Discrimination at Work in Cambodia: Manual

Equality and non-discrimination at work in Cambodia: Manual ILO Regional Office for Asia and the Pacific ILO C111 Manual (en)_cover.indd 1 12/5/12 12:10 AM Equality and non-discrimination at work in Cambodia: Manual ILO Regional Office for Asia and the Pacific Copyright © International Labour Organization 2012 First published 2012 Publications of the International Labour Office enjoy copyright under Protocol 2 of the Universal Copyright Convention. Nevertheless, short excerpts from them may be reproduced without authorization, on condition that the source is indicated. For rights of reproduction or translation, application should be made to ILO Publications (Rights and Permissions), International Labour Office, CH-1211 Geneva 22, Switzerland, or by email: [email protected]. The International Labour Office welcomes such applications. Libraries, institutions and other users registered with reproduction rights organizations may make copies in accordance with the licences issued to them for this purpose. Visit www.ifrro.org to find the reproduction rights organization in your country. Equality and non-discrimination at work in Cambodia: Manual / ILO Regional Office for Asia and the Pacific. - Phnom Penh: ILO, 2012 288 p. ISBN: 9789221268277; 9789221268284 (web pdf) ILO Regional Office for Asia and the Pacific equal rights / equal employment opportunity / gender equality / affirmative action / role of ILO / ILO Convention / Cambodia 04.02.2 Also published in Cambodian: esovePAENnaMGMBIsmPaB nigkarminerIseGIgenAkEnøgkargar enAkñúgRbeTskm<úCa, ISBN 9789228268270; 9789228268287 (web pdf), Phnom Penh, 2012. 
ILO Cataloguing in Publication Data The designations employed in ILO publications, which are in conformity with United Nations practice, and the presentation of material therein do not imply the expression of any opinion whatsoever on the part of the International Labour Office concerning the legal status of any country, area or territory or of its authorities, or concerning the delimitation of its frontiers. -

No. # RFP-EAI-KE-2021-001 Audit Services Issue Date

RFP No. 2021-001

Request for Proposals (RFP) No. # RFP-EAI-KE-2021-001 Audit Services Issue Date: 2/18/2021

WARNING: Prospective Offerors who have received this document from a source other than Equal Access International- East Africa should immediately contact [email protected] and provide their name and mailing address so that amendments to the RFP or other communications can be sent directly to them. Any prospective Offeror who fails to register their interest assumes complete responsibility in the event that they do not receive communications prior to the closing date. Any amendments to this solicitation will be issued and posted via email.

1. Request for Proposal

EAI would like to engage the services of a reputable audit firm to carry out the statutory audit for the period November 2019 to December 31, 2020. Qualified vendors are invited to submit a proposal as outlined below.

1. RFP No.: No. # RFP-EAI-KE-2021-001
2. Issue Date: 2/18/2021
3. Title: Audit Services
4. Issuing Office & Email Address for Submission of Quotations: Equal Access International- East Africa. Email for submission of quotes: [email protected]
5. Deadline for Questions: Questions due by 1 pm local Nairobi, Kenya time on 23 February 2021.

Each Offeror is responsible for reading very carefully and understanding fully the terms and conditions of this RFP. All communications regarding this solicitation are to be made solely through the Issuing Office and must be submitted via email or in writing delivered to the Issuing Office no later than the date specified above.

Women at Work: Job Opportunities in the Middle East

Women at work: Job opportunities in the Middle East set to double with the Fourth Industrial Revolution, by McKinsey. Authors: Rima Assi [email protected] Chiara Marcati [email protected] 2020 Copyright © McKinsey & Company. Designed by Visual Media MEO. www.mckinsey.com @McKinsey @McKinsey

Preface

The notion that providing equal access to education, work, financing, and legal protection has positive economic and social implications is not a new one. The stakes, however, are growing. ‘Women at work: Job opportunities in the Middle East set to double with the Fourth Industrial Revolution’ is the result of a year-long research initiative that reveals insights on the biggest barriers and unlocks to female participation in professional and technical jobs in the Middle East. The research covers selected countries from the GCC and the Levant, home to around 78 million women, and shows a complex picture with striking regional variations. Our report builds on McKinsey’s global ‘future of work’ research to understand how women are likely to be affected by the Fourth Industrial Revolution in the Middle East. The data shows that jobs are likely to more than double by 2030. The data also shows that women are not yet sufficiently integrated into high-productivity sectors in the Middle East, nor are they adequately equipped with the advanced technological skills required to take advantage of these opportunities.

Guide to Nongovernmental Organizations for the Military

Guide to Nongovernmental Organizations for the Military: A primer for the military about private, voluntary, and nongovernmental organizations operating in humanitarian emergencies globally. Edited and rewritten by Lynn Lawry MD, MSPH, MSc, Summer 2009. Originally written by Grey Frandsen, Fall 2002. The Center for Disaster and Humanitarian Assistance Medicine (CDHAM), Uniformed Services University of Health Sciences (USUHS). International Health Division, Office of the Assistant Secretary of Defense (Health Affairs), U.S. Department of Defense.

Copyright restrictions pertain to certain parts of this publication. All rights reserved. No copyrighted parts of this publication may be reprinted or transmitted in any form without written permission of the publisher or copyright owner.

This pdf document is compatible with Adobe Acrobat Reader version 5.0 and later. The file may open in earlier versions, but will not necessarily appear as it was designed to appear, and hyperlinks may not function correctly. Acrobat Reader is available for free download from the Adobe website http://get.adobe.com/reader/

Contents: About CDHAM; About OASD(HA)-IHD