Conference Proceedings

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Editorial Project News and Events

Issue 6, July 2010 – January 2011 ■ Project no. 212879 ■ Editorial ■ Project News ■ EU News and Events ■ Useful Links and Documents
This e-newsletter can be downloaded at the following address: http://www.euroris-net.eu/
■ Editorial
Research Infrastructures are at the "heart" of the European Research Area, according to the FP7 Interim Evaluation report, published in October 2010. During the last semester, the Chairs of both ESFRI and e-IRG changed, and both an e-IRG blue paper (requested by ESFRI) and ESFRI TWG documents were produced to support decision-making procedures that will significantly move RIs forward despite the worldwide economic crisis. The presentations and discussion held during the International Conference on RIs in Rome (http://www.euroris-net.eu/News/project_news) highlight, among other issues, the socio-economic benefits stemming from Research Infrastructures built with a global perspective and requiring an international cooperation setting, fully aligned with the European Research and Innovation Strategy. In the current issue, you can read about the outcomes of important RI events in which EuroRIs-Net actively participated throughout this period, contributing both to the dissemination of the benefits of the RI programme to research communities and to the broader dialogue with all FP7 thematic areas covered within the Research Infrastructures programme.
■ Project News and Events
1. International Conference on Research Infrastructures, 30th September 2010, Rome, Italy
An International Conference on Research Infrastructures was organized by APRE in collaboration with the European Commission, the Italian Ministry of Research, the National Research Council and the National Institute of Astrophysics, within the framework of the EuroRIs-Net project.

Open Access Publishing

Open Access
The Harvard community has made this article openly available. Please share how this access benefits you. Your story matters.
Citation: Suber, Peter. 2012. Open access. Cambridge, Mass: MIT Press. [Updates and Supplements: http://cyber.law.harvard.edu/hoap/Open_Access_(the_book)]
Published Version: http://mitpress.mit.edu/books/open-access
Citable link: http://nrs.harvard.edu/urn-3:HUL.InstRepos:10752204
Terms of Use: This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of-use#LAA
OPEN ACCESS
The MIT Press Essential Knowledge Series: Information and the Modern Corporation, James Cortada; Intellectual Property Strategy, John Palfrey; Open Access, Peter Suber
OPEN ACCESS, PETER SUBER
The MIT Press | Cambridge, Massachusetts | London, England
© 2012 Massachusetts Institute of Technology. This work is licensed under the Creative Commons licenses noted below. To view a copy of these licenses, visit creativecommons.org. Other than as provided by these licenses, no part of this book may be reproduced, transmitted, or displayed by any electronic or mechanical means without permission from the publisher or as permitted by law. This book incorporates certain materials previously published under a CC-BY license and copyright in those underlying materials is owned by SPARC. Those materials remain under the CC-BY license. Effective June 15, 2013, this book will be subject to a CC-BY-NC license. MIT Press books may be purchased at special quantity discounts for business or sales promotional use.

A Global Database for Metacommunity Ecology, Integrating Species, Traits

www.nature.com/scientificdata. There are amendments to this paper. OPEN. DATA DESCRIPTOR.
A global database for metacommunity ecology, integrating species, traits, environment and space
Alienor Jeliazkov et al.
The use of functional information in the form of species traits plays an important role in explaining biodiversity patterns and responses to environmental changes. Although relationships between species composition, their traits, and the environment have been extensively studied on a case-by-case basis, results are variable, and it remains unclear how generalizable these relationships are across ecosystems, taxa and spatial scales. To address this gap, we collated 80 datasets from trait-based studies into a global database for metaCommunity Ecology: Species, Traits, Environment and Space; “CESTES”. Each dataset includes four matrices: species community abundances or presences/absences across multiple sites, species trait information, environmental variables and spatial coordinates of the sampling sites. The CESTES database is a live database: it will be maintained and expanded in the future as new datasets become available. By its harmonized structure, and the diversity of ecosystem types, taxonomic groups, and spatial scales it covers, the CESTES database provides an important opportunity for synthetic trait-based research in community ecology.
Background & Summary
A major challenge in ecology is to understand the processes underlying community assembly and biodiversity patterns across space [1,2]. Over the last three decades, trait-based research, by taking up this challenge, has drawn increasing interest [3], in particular with the aim of predicting biodiversity response to environment. In community ecology, it has been equated to the ‘Holy Grail’ that would allow ecologists to approach the potential processes underlying metacommunity patterns [4–7].

Of Ancestors and Descendants

J. Limnol., 2016; 75(2): 225. EDITORIAL. DOI: 10.4081/jlimnol.2016.1532
Journal of Limnology: of ancestors and descendants
In spite of the title, this is not the beginning of a long and boring family saga. I simply need to take a few minutes of your time to explain the history of this journal: where it is coming from, why it is changing and where it is going. Born in 1940 with the name of Memorie dell’Istituto italiano di Idrobiologia, the funding body at that time, the journal has been for over 50 years a non-negligible piece of limnological literature, hosting papers signed by the most important names in inland waters ecology. To mention but a few of them, Baldi, Tonolli, Hutchinson, Margalef, Edmondson, Wetzel, and Vollenweider all contributed to the Memorie with relevant and still cited articles. The Memorie, distributed only on an exchange basis, was a predecessor of sorts to open access publication for decades.
[...] year, plus supplements. The number of citations of our journal in the last 4 years (2012 – 2015) increased by 25 times (Web of Science, Thomson) and the Journal of Limnology reached the second place in the field as Immediacy Index (0.322, Journal Citation Report, Thomson). Its Impact Factor is now 1.725.
The journal aims to further develop, increasing its diffusion and reducing the time between submission and publishing, both for ahead of print and for online final, while remaining fully open access. This can be done only thanks to the help of many colleagues, Associate Editors and Referees, who generously and freely accepted the challenging task of participating in the peer review

Uso De Open Journal System En Revistas Científicas Peruanas Using Open Journal Systems in Peruvian Scientific Journals

Cultura, 2018, 32, 353-366 (January - December). https://doi.org/10.24265/cultura.2018.v32.16. ISSN (print): 1817-0285; ISSN (digital): 2224-3585
Uso de Open Journal System en revistas científicas peruanas / Using Open Journal Systems in Peruvian scientific journals
Victoria Yance-Yupari*, Escuela Profesional de Psicología, Universidad de San Martín de Porres, Peru
Received: 2 September 2018. Accepted: 16 October 2018.
Summary: The use of Open Journal Systems in Peru has increased in the dissemination and visibility of open access scientific journals. Understanding and investigating the use of the software in journals published by Peruvian universities is necessary to identify how current and active they are. This is an exploratory study with a sample of 54 Peruvian universities, in which 324 titles were found, all of them open access journals; 71% are published by private universities; 27 titles have no publication on the platform; version 2.0 is used by 73%; version 3.0 is used by only 74 journals. Keywords: Open Journal System, OJS, scientific journals, Peru.
Abstract: The use of Open Journal Systems in Peru is recent, and its dissemination and visibility in scientific journals are increasing. Knowing and investigating the use of the software in journals published by Peruvian universities are necessary to identify validity and activity in which they are. In the exploratory

Riviste Italiane Digitali E Digitalizzate Ad Accesso Libero Un Contributo Per L’Emeroteca Digitale Nazionale

Biblioteca nazionale centrale Roma. RIDI: Riviste italiane digitali e digitalizzate ad accesso libero. Un contributo per l’emeroteca digitale nazionale. Edited by Giulio Palanga. Work in progress, 10 December 2019 - 27 April 2021.
MAIN INTERNATIONAL PLATFORMS
Annex, http://www.annexpublishers.com/. Annex Publishers was established with the aim of disseminating information within the scientific community. It has been included in Beall's List of predatory publishers. (82)
Dove medical press, https://www.dovepress.com/browse_journals.php. Academic publisher of scientific and medical journals, with offices in Manchester, London, Princeton, New Jersey and Auckland. In September 2017, Dove Medical Press was acquired by Taylor e Francis Group. (92)
Elsevier Open Access Journals, https://www.elsevier.com/about/open-science/open-access/open-access-journals. Elsevier has signed an agreement with the CRUI Consortium, the Conference of Rectors of Italian Universities, to provide incentives for Italian authors.
FreeMedical Journals, http://www.freemedicaljournals.com/. Medical journals. (5088)
Highwire, http://highwire.stanford.edu/lists/allsites.dtl. Originating at Stanford University, HighWire was founded in the early days of the Internet. (421)
Open edition, https://journals.openedition.org/. Formerly Revue.org. French public research infrastructure providing access to the platforms OpenEdition Journals, OpenEdition Books, Calenda and Hypothèses. (529)
PLOS, https://www.plos.org/. Public Library of Science is a publishing project for scientific publications. It publishes seven journals, all characterized by peer review and open content. The idea for the project arose in 2000 following the online publication of an open letter signed by Harold Varmus, Nobel laureate in medicine and former director of the National Institutes of Health, Patrick Brown, biochemist at Stanford University, and Michael Eisen, research biologist at the University of California, Berkeley.

Flood Pulse and Aquatic Habitat Dynamics of the Sentarum Floodplain Lakes Area

Flood Pulse and Aquatic Habitat Dynamics of The Sentarum Floodplain Lakes Area
H. Hidayat a,*, Siti Aisyah a, Riky Kurniawan a, Iwan Ridwansyah a, Octavianto Samir a, Gadis Sri Haryani a
a Research Center for Limnology-LIPI; Kompleks LIPI Cibinong, Jalan Raya Bogor, Km. 46, Cibinong 16911; *[email protected]
Received 29 November 2020. Accepted 13 December 2020. Published 17 December 2020.
Abstract
Lake Sentarum is a complex of floodplain lakes in the middle part of the Kapuas River system in West Kalimantan, Indonesia. The area has great ecological and economic importance; however, the Sentarum lakes complex and its catchment area are generally threatened by deforestation, fire, monoculture agroindustry, and pollution. The objective of this research is to establish the hydrological characteristics of the Sentarum lakes area and to reveal the dynamics of aquatic habitat resulting from changing water levels. Data were collected during our field campaigns of 2013-2017 representing the seasons. The water level was measured using a pressure sensor placed at the Lake Sentarum National Park resort, while rainfall data were obtained from the data portal of the Tropical Rainfall Measuring Mission. Inundation monitoring was carried out using a time-lapse camera. A hydrological model is used to simulate water levels beyond the measurement period. Water quality and fish sampling were carried out at the lake area. Vegetation observation was carried out at the selected riparian zone of the lake area using the line transect method. Water level records show that the Sentarum floodplain lakes have two peaks of inundation period following the bimodal pattern of rainfall in the equatorial Kapuas catchment.

E-Journal Archiving: Changing Landscape

12/10/2014
e-Journal Archiving: Changing Landscape
Lars Bjørnshauge, Director European Library Relations, SPARC Europe & Managing Director, DOAJ
Mark Jordan, Head of Library Systems, Simon Fraser University Library & Public Knowledge Project (PKP)
Bernie Reilly, President, Center for Research Libraries
Oya Y. Rieger, Associate University Librarian, Digital Scholarship & Preservation Services, Cornell University Library
CNI Fall 2014 Membership Meeting, December 8, 2014
Life of eJournals
• Subscription cancellations and modifications
• Moving from hybrid subscription to digital only
• Changes in publishers’ business or service models
Digital preservation aims to ensure the usability, authenticity, discoverability, and accessibility of content.
• How do we factor in preservation status in our selection & collection building efforts?
• How much do we know about Portico, LOCKSS, CLOCKSS, etc. and our roles in the success of these operations?
• What does “perpetual access” mean?
• 2CUL Study, Spring 2011
  – Only 13%-26% of e-journal titles preserved by LOCKSS or Portico
• Keepers Registry Study, Fall 2012
  – Only 23-27% of e-journals with ISSNs preserved by any of 7 preservation agencies
Scale - Estimate
• 200,000 e-serial titles
  – 121,000 titles in Cornell
• 113,000 ISSNs assigned
  – 22,000 preserved
• 13% preserved in LOCKSS or Portico
Part 2: Mellon-Funded Project Objectives
• Establish preservation priorities
• Contact aggregators and publishers
• Promote:
  – models for distributed action
  – model

2015 Annual Subscription Price List (In US$ for Clients in the USA) $ All Electronic Journals Are Available At

2015 Annual Subscription Price List (in US$ for clients in the USA)
All electronic journals are available at www.edpsciences.org
Columns: Titles; Language; Print ISSN; e-ISSN; Vol(s); Issues; Subscription Specificity; Formats; US $ Price
Actualités Odonto-Stomatologiques (AOS): The practitioner's encyclopedia; Open Access; www.aos-journal.org; French; Print ISSN 0001-7817; e-ISSN 1760-611X; Vol(s) -; Issues -; Free supplement of Dentoscope published by Free Access in Dentoscope
Annales de Limnologie - International Journal of Limnology: A journal of basic & applied fresh water research; www.limnology-journal.org; Impact Factor: 1.036 (rank: 13/20); English; Print ISSN 0003-4088; e-ISSN 2100-000X; Vol. 51; 4 issues; P+E $495; E $343
Aquatic Living Resources (ALR): Fisheries sciences, aquaculture, aquatic biology & ecology; www.alr-journal.org; Impact Factor: 0.919 (rank: 33/50); English; Print ISSN 0990-7440; e-ISSN 1765-2952; Vol. 28; 4 issues; P+E $699; E $503
Astronomy and Astrophysics (A&A): A major international journal that publishes results of worldwide astronomical and astrophysical research; www.aanda.org; Impact Factor: 4.479 (rank: 13/59); English; Print ISSN 0004-6361; e-ISSN 1432-0746; Vols. 573-584; 24 issues; P+E $5 718; E $4 048; Individuals*: P+E $886; E $240
Bio Web of Conferences (Bio WoC): Open-access proceedings in Biology; www.bio-conferences.org; English; Print ISSN -; e-ISSN 2117-4458; Vol(s) -; Issues -; Open Access
Biologie Aujourd'hui: Official organ of the Société de Biologie; www.biologie-journal.org; French with abstracts in English; Print ISSN 2105-0678; e-ISSN 2105-0686; Vol. 209; 4 issues; P+E $502; E $325; Individuals*: P+E $386; E $285; Students**: P+E $202; E $134
Célibataire (La): Journal of psychoanalysis (clinical, logical, political); French; Print ISSN 1292-2048; e-ISSN -; Vols. 30-31; 2 issues; P

Redalyc.Measuring, Rating, Supporting, and Strengthening

Education Policy Analysis Archives/Archivos Analíticos de Políticas Educativas. ISSN: 1068-2341. [email protected]. Arizona State University, United States.
Carvalho Neto, Silvio; Willinsky, John; Alperin, Juan Pablo. Measuring, Rating, Supporting, and Strengthening Open Access Scholarly Publishing in Brazil. Education Policy Analysis Archives/Archivos Analíticos de Políticas Educativas, vol. 24, 2016, pp. 1-25. Arizona State University, Arizona, United States.
Available in: http://www.redalyc.org/articulo.oa?id=275043450033
Scientific Information System: Network of Scientific Journals from Latin America, the Caribbean, Spain and Portugal. Non-profit academic project, developed under the open access initiative.
education policy analysis archives: A peer-reviewed, independent, open access, multilingual journal. Arizona State University. Volume 24 Number 54, May 19, 2016. ISSN 1068-2341.
Measuring, Rating, Supporting, and Strengthening Open Access Scholarly Publishing in Brazil
Silvio Carvalho Neto, Centro Universitário Municipal de Franca, Brazil & John Willinsky, Stanford University, United States & Juan Pablo Alperin, Simon Fraser University, Canada
Citation: Carvalho Neto, S., Willinsky, J. & Alperin, J. P. (2016). Measuring, rating, supporting, and strengthening open access scholarly publishing in Brazil. Education Policy Analysis Archives, 24(54). http://dx.doi.org/10.14507/epaa.24.2391
Abstract: This study assesses the extent and nature of open access scholarly publishing in Brazil, one of the world’s leaders in providing universal access to its research and scholarship. It utilizes Brazil’s Qualis journal evaluation system, along with other relevant databases, to address the association between scholarly quality and open access in the Brazilian context.

Zoology, Institute of Science A

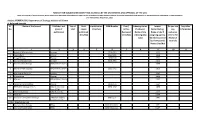

FORMAT FOR SUBJECTWISE IDENTIFYING JOURNALS BY THE UNIVERSITIES AND APPROVAL OF THE UGC
{Under Clause 6.05 (1) of the University Grants Commission (Minimum Qualifications for appointment of Teacher and Other Academic Staff in Universities and Colleges and Measures for the Maintenance of Standards in Higher Education (4th Amendment), Regulations, 2016}
Subject: FISH BIOLOGY, Department of Zoology, Institute of Science
A. Refereed Journals
Column headings: Sl. No.; Name of the Journal; Publisher and place of publication; Year of Start; Hard copies published (Yes/No); e-publication (Yes/No); ISSN Number; Peer / Referee Reviewed (Yes/No); Indexing status (if indexed, name of the indexing database); Impact Factor/Rating (name of the IF assigning agency; whether covered by Thomson Reuters (Yes/No)); Do you use any exclusion criteria for Research Journals; Any other Information
1. Annals of Microbiology; Springer; ISSN 1590-4261; Impact Factor 1.232
2. Aquaculture; Elsevier; ISSN 0044-8486; Impact Factor 1.828
3. Fungal Biology; Elsevier; ISSN 1878-6146; Impact Factor 2.921
4. Journal of Biosciences; Springer; ISSN 0250-5991; Impact Factor 2.064
5. Journal of Fish Biology; Wiley Online Library; ISSN -; Impact Factor 1.658
6. Journal of Fish Diseases; Wiley Online Library; ISSN 1365-2761; Impact Factor 2.056
7. Mycological Progress; Springer; ISSN -; Impact Factor 1.543
8. Mycoscience; Elsevier; ISSN -; Impact Factor 1.418
9. Mycoses; Wiley Online Library; ISSN -; Impact Factor 2.332
10. Veterinary Microbiology; Elsevier; ISSN 1873-2542; Impact Factor 2.564
11. FEMS Microbiology Letters; Oxford University Press (FEMS); ISSN 0378-1097; Impact Factor 1.858
12. Medical Mycology; Oxford Journals; Impact Factor 2.644
13. PLOS ONE; Impact Factor 3.234
14. Journal of Clinical Microbiology; American Society for Microbiology; ISSN 0095-1137; Impact Factor 3.993
A.

Glossary of Scholarly Communications Terms

INSTITUTIONAL MOBILIZATION TOOLKIT
Glossary of Scholarly Communications Terms
Altmetrics
Altmetrics, also known as cybermetrics or webometrics, are non-traditional metrics that are proposed as an alternative to traditional citation impact metrics. Altmetrics.org, the organization leading the Altmetrics movement, proposes to create new metrics that include social web activity, such as:
Usage, based on the number of downloads
Peer-review, when a scholar is considered to be an expert
Citations, using traditional methodologies
Alt-metrics, analyzing links, bookmarks and conversations
APC
Article Processing Charge. A fee paid by authors to subsidize the processing and publishing costs for open access journals.
Berlin Declaration on Open Access
The publication of the “Berlin Declaration on Open Access to Knowledge in the Sciences and Humanities” on 22 October 2003 and the subsequent annual conferences heralded the introduction of a process that heightened awareness around the theme of accessibility to scientific information. 2013 marked the tenth anniversary of the publication of the Berlin Declaration. Berlin 12 was held in December 2015 with a focus on the transformation of subscription journals to Open Access.
Big Deal
First initiated by Academic Press in 1996, the Big Deal is a structure in which a commercial scholarly publisher sells their content as a large bundle, as opposed to individual journals.
What Can I Do?
Be aware that the increasing cost of journals is outpacing the increase of library budgets, putting pressure on your library to do more with less.
Be open to a conversation with your librarian about your scholarly content needs in terms of your research and teaching, in an environment where tough content retention decisions may have to be made.
What Are Libraries Doing?
Working through consortia to leverage greater purchasing power.
Tools: Introduction; Evolution of Journal Pricing