Building the Games Students Want to Play: Bibliobouts Project Interim Report #3

Recommended publications

Reference Management Software Zotero

The Library recommends Zotero as the best general reference manager for students. It is free and works on Mac, Windows, and Linux with Microsoft Word and OpenOffice. Tips for using bibliographic citation management tools including RefWorks, EndNote, Zotero, and Mendeley. Compare citation management software. Zotero is a free, open-source citation management and sharing tool. Researchers and scholars from a variety of different fields use Zotero. ZoteroBib is a free service that helps you quickly create a bibliography in any citation style. Zotero is a free, easy-to-use tool to help you collect, organize, cite, and share research. This web-based citation manager program is free to UA faculty, staff, and students. Citation management software helps you organize references, PDFs, and images, take notes on references and PDFs, and format bibliographies. Zotero is an easy-to-use tool which allows one to collect, organise, and cite references. In addition, the Cornell library has several Zotero guides, including Getting Started with Zotero.

Reference Management Software There Are Several Different Versions of Reference Management Software Available

The Academy for Teaching and Learning Excellence (ATLE) “Office hours for faculty.” (813) 974-1841 | atle.usf.edu | [email protected]

Reference Management Software

There are several different versions of reference management software available. One of the best uses of such software is that it can house all your references for any research endeavor. Many products exist, but the USF library has guides for EndNote and RefWorks. These guides can be found at:
• EndNote: http://guides.lib.usf.edu/content.php?pid=136566&sid=1169836
• RefWorks: http://guides.lib.usf.edu/refworks2010

Quick Reference Guides for EndNote:
• EndNote X6 for Windows: http://endnote.com/training/mats/media/pdf/enx6-win_qrc.pdf
• EndNote X6 for Mac: http://endnote.com/training/mats/media/pdf/enx6-mac_qrc.pdf
• EndNote Web: http://thomsonreuters.com/content/science/pdf/ssr/training/enw_qrc_en.pdf

Quick Reference Guides for RefWorks:
• http://www.refworks.com/refworks2/help/Welcome.htm
• http://www.refworks.com/content/documents/refworks_quick_start_guide.pdf
• https://www.refworks.com/refworks2/help/RefWorks_-QSG-English-Jun11.pdf

Quick Reference Guides for Zotero:
• http://www.zotero.org/support/quick_start_guide
• http://www.zotero.org/static/download/zotero_user_guide.pdf
• http://www.stanford.edu/group/cubberley/node/1361

Quick Reference Guides for Mendeley:
• http://nuffieldcollegelibrary.files.wordpress.com/2013/01/mendeleyguide_online.pdf
• http://lgdata.s3-website-us-east-1.amazonaws.com/docs/130/381899/Mendeley101_handout.pdf
• http://libguides.wustl.edu/content.php?pid=221677&sid=1840456

YouTube Channels: Watch video tutorials on reference management software. These videos introduce the software, explain its uses, and provide step-by-step instructions.

“Merging Libraries” & “Export Your Library with RIS”

“Merging libraries” & “Export your library with RIS”

Library Wissenschaftspark Albert Einstein. A joint library of the German Research Centre for Geosciences GFZ, the Potsdam Institute for Climate Impact Research, the Alfred Wegener Institute for Polar and Marine Research, Research Unit Potsdam, and the IASS Institute for Advanced Sustainability Studies Potsdam (Last update: March 2013)

Export library with RIS

To convert your EndNote X6 library for other applications (such as Zotero, Mendeley, Reference Manager, or ProCite):
- In EndNote X6, go to the drop-down output style list on the toolbar and choose Select Another Style.
- Highlight and select the appropriate output style: the RefMan (RIS) style.
- From the File menu, select Export to export your library to a plain text file.
- You can now import the file into your application.

To import an RIS file from another application into EndNote:
- Go to “File” and “Import”.
- Choose your file in RIS format.
- Use the RIS import option.
- Set your preferences.
- Import the references.

Merging libraries

There are three ways to merge libraries: import one library into another, copy references from one library to another, or drag-and-drop.
- (Optional) If you want to import only a subset of references from a library, first open that library and show only the references you wish to copy.
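The export-and-merge workflow above can also be approximated outside EndNote, since an RIS export is a plain text file. The following is a minimal sketch, not EndNote's own procedure. It assumes the common RIS layout (a two-character tag, two spaces, a hyphen, then the value, with each record opened by `TY` and closed by `ER`) and single-line values. The function names, the duplicate rule (same DOI, or same normalized title and year), and the sample records with their DOI are all hypothetical and chosen only for illustration.

```python
import re

# Assumed RIS line layout: two-character tag, two spaces, hyphen, optional space, value.
TAG_LINE = re.compile(r"^([A-Z][A-Z0-9])  - ?(.*)$")


def parse_ris(text):
    """Parse RIS text into a list of records, each a dict of tag -> list of values."""
    records, current = [], None
    for raw in text.splitlines():
        match = TAG_LINE.match(raw.rstrip())
        if not match:
            continue  # skip blank lines and anything that is not a tag line
        tag, value = match.group(1), match.group(2).strip()
        if tag == "TY":        # TY opens a new record
            current = {"TY": [value]}
        elif tag == "ER":      # ER closes the current record
            if current is not None:
                records.append(current)
            current = None
        elif current is not None:
            current.setdefault(tag, []).append(value)
    return records


def record_key(rec):
    """Hypothetical duplicate rule: DOI if present, else normalized title plus year."""
    doi = rec.get("DO", [""])[0].lower()
    if doi:
        return ("doi", doi)
    title = (rec.get("TI") or rec.get("T1") or [""])[0]
    year = (rec.get("PY") or rec.get("Y1") or [""])[0][:4]
    return ("title", " ".join(title.lower().split()), year)


def merge_libraries(*libraries):
    """Concatenate libraries in order, keeping the first copy of each duplicate."""
    merged, seen = [], set()
    for library in libraries:
        for rec in library:
            key = record_key(rec)
            if key not in seen:
                seen.add(key)
                merged.append(rec)
    return merged


def to_ris(records):
    """Serialize records back to RIS text, one blank line between records."""
    lines = []
    for rec in records:
        lines.append(f"TY  - {rec['TY'][0]}")
        for tag, values in rec.items():
            if tag != "TY":
                lines.extend(f"{tag}  - {value}" for value in values)
        lines.append("ER  - ")
        lines.append("")
    return "\n".join(lines)


# Made-up sample libraries; the DOI below is a placeholder, not a real identifier.
LIB_A = """TY  - JOUR
AU  - Smith, J.
TI  - Method X in dogs
PY  - 2010
ER  -

TY  - JOUR
AU  - Johnson, A.
TI  - Method X in cats
PY  - 2010
DO  - 10.1000/example.1
ER  -
"""

LIB_B = """TY  - JOUR
AU  - Smith, J.
T1  - Method X  in Dogs
Y1  - 2010/03/01
ER  -

TY  - JOUR
AU  - Andrews, B.
TI  - Method X in mice
PY  - 2011
ER  -
"""

merged = merge_libraries(parse_ris(LIB_A), parse_ris(LIB_B))
print(len(merged), "records after merging")
print(to_ris(merged))
```

Real exports may contain multi-line values or vendor-specific tags that this sketch ignores, so it is best treated as a starting point for checking an export before importing it elsewhere.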

Zotero-Instructions.Pdf

Zotero

Michelle Down, Avondale College of Higher Education. Zotero instructions (Updated March 2013)

1. Install
Zotero works best with Firefox, but Google Chrome and Safari may be used standalone. If you need to download Mozilla Firefox, go to http://www.mozilla.org/en-US/firefox/new/ and follow the prompts. To install Zotero, go to Zotero.org with Mozilla Firefox and click the red “Download Now” button. Click the Zotero 3.0 for Firefox link (for both PC and Mac computers). Click the “Allow” button. Then click the “Install Now” button and restart. This will only take a few minutes.

2. Add-ins
Add-ins are needed in Word to transfer references into your assignment. At the Download screen, click “Add a plugin for Word or LibreOffice” and select either Windows or Mac. Restart, and you should now have Add-Ins at the top of your Word document and the Zotero logo in the bottom right corner of your Firefox window. The Add-Ins for Mac also appear in the toolbars, but in Word for Mac 2008 they are in the AppleScript menu to the right of the Help icon.

Important: Repeat Steps 1 and 2 to install Zotero on any computer you will be using.

3. Register
If you think you will be using Zotero on a number of different computers (e.g. your laptop, College computer, home computer), you should register. If you will only be using one computer and do not plan to share your files with anyone else, ignore this step. Go to the Zotero home page again, click Register (top right of the screen), choose a username and password, and fill in the details.

How to Manage a Research Library with Zotero by Blog Admin July 6, 2012

blogs.lse.ac.uk http://blogs.lse.ac.uk/impactofsocialsciences/2012/07/06/manage-a-research-library-with-zotero/

How to manage a research library with Zotero, by Blog Admin, July 6, 2012

Keeping up to date with research and managing an ever-increasing number of journal articles is a skill that must be well-honed by academics. Here, Alex Hope sets out how his workflow has developed using Zotero, Dropbox, Goodreader and his iPad.

Article Discovery

I use a variety of methods to keep on top of research in my field, and to search for articles when preparing a paper or presentation. Foremost is the use of RSS feeds to deliver alerts of new articles in journals I follow. I use Google Reader to manage my feeds and usually browse through new feed articles using Flipboard on my iPad or iPhone. If I come across an interesting article that I think I may like to read and use, I email myself the link. This means that when I check my email every morning, I can navigate to the article and save it in my reference manager for reading and marking up. If I am researching a paper, I tend to search journal repositories such as Web of Knowledge, although more often than not I find Google Scholar finds what I need quickly and accurately. Finally, I am finding that Twitter is a fantastic resource for uncovering new research, as an increasing number of researchers and research groups have a presence.

Work Process for Students

Instructions Manual: Process Guide for Students for Interdisciplinary Work in Computer Science/Informatics

Assoc. Prof. Dr. Andreas Holzinger http://hci4all.at [email protected]
Institute for Information Systems and Computer Media (IICM), Graz University of Technology
Research Unit HCI4MED, Institute of Med. Informatics (IMI), Medical University Graz

Data. Information. Knowledge. Decision. Action.

This handbook provides some information for students with an interdisciplinary interest in Engineering (Computer Science), Natural Science (Cognitive Science/Psychology) and Business (Software Engineering Business). Human–Computer Interaction (HCI) and Usability Engineering (UE) can be seen as a bridge. © August 2010, Second Edition

< This page intentionally left blank >

Instructions Manual (this work is written in English): Guide for Students for Interdisciplinary Work in Informatics/Computer Science

Univ.-Doz. Ing. Mag. Mag. Dr. Andreas Holzinger
Institute for Information Systems and Computer Media (IICM), Graz University of Technology
Research Unit HCI4MED, Institute of Med. Informatics (IMI), Medical University Graz

Data. Information. Knowledge. Decision. Action.

A guide for students with an interdisciplinary interest in Engineering (Computer Science), Natural Science (Psychology), and Business (Software Development/Business). Human–Computer Interaction (HCI) & Usability Engineering (UE) can be seen as a bridge. © August 2010, Second Edition

< This page is

Using Zotero Reference Manager 5.0 with Microsoft Word / Google Docs Plug-in, 4th ed.

Note: If you need any assistance in setting up or using Zotero, please contact Ashley Thomson. Email: [email protected] Text: 705-665-2074

1. Definition
Zotero is a free, easy-to-use tool that stores, organizes, and cites your references conveniently. With Zotero you can:
• gather, save, and organize your references as you're doing your research;
• insert the references you have saved into your paper as you write it, and generate a bibliography at the end of your paper based on the references you have cited (both in-text citations and bibliography in whatever citation style you want);
• share your research sources with colleagues, or the world.
Zotero offers 300MB of free online storage. You have the option to purchase additional storage, but you probably don’t need it if you use the set-up suggestions in this guide.

2. Get Started
On your Windows or Apple machine:
i. Download a) Chrome or Firefox and b) Microsoft Word, either the Mac OS or the Windows version. The latest edition of Microsoft’s Office Suite, which includes Word, is available at no charge (with automatic updates and no expiry date) to students or faculty from https://www.microsoft.com/en-ca/education/products/office/default.aspx or may be found by typing Microsoft Office Education Free into Google.
ii. Open one of the following browsers, Chrome or Firefox, and then go to https://zotero.org/.
iii. Click on Download and then click on Register. You can use any username and password you like.

How to Use Zotero

HOW TO USE ZOTERO
Prepared by Kashif Khurshid, Deputy Chief Librarian, PU Library

INTRODUCTION
• Zotero [zoh-TAIR-oh] is a free, easy-to-use tool to help you collect, organize, cite, and share your research sources.

A PERSONAL RESEARCH ASSISTANT
• Zotero is the only research tool that automatically senses content in your web browser, allowing you to add it to your personal library with a single click. Whether you're searching for a preprint on arXiv.org, a journal article from JSTOR, a news story from the New York Times, or a book from your university library catalog, Zotero has you covered with support for thousands of sites.

STORE ANYTHING
• Zotero collects all your research in a single, searchable interface. You can add PDFs, images, audio and video files, snapshots of web pages, and really anything else. Zotero automatically indexes the full-text content of your library, enabling you to find exactly what you're looking for with just a few keystrokes.

ORGANIZING BODY
• Zotero is a project of the Roy Rosenzweig Center for History and New Media, and was initially funded by the Andrew W. Mellon Foundation, the Institute of Museum and Library Services, and the Alfred P. Sloan Foundation.

TO DOWNLOAD ZOTERO
• Go to https://www.zotero.org/ and click on “Download Now” (for Mac, Windows & Linux)

ZOTERO STANDALONE INSTALLATION
• Simply double-click on the downloaded software and follow the instructions

FOR EXISTING ZOTERO DATA
• After successful installation of Zotero, the following dialogue box will appear
• If you are using Zotero for the first time on your computer, you should click the NO option

ZOTERO HOME SCREEN
• The Zotero home screen consists of four panes: management, tags, references, and edit

ADD REFERENCES IN ZOTERO BY: 1- Manual Entry 2- Identifiers e.g.

Citations and Citation Managers

Citations and Citation Managers
Sean C. Anderson, SFU Grad Caucus Publishing Workshop, April 2014

When to cite

Opinions vary widely on when, where, and how often to cite, and it’s partly an art. With that in mind:

Cite a paper when first introducing a concept. If you keep writing about a concept then you probably won’t cite the same paper every time. If you keep writing about a referenced topic in a paragraph, one approach is to cite at the beginning and end of the paragraph.

Be wary of over-citing. Don’t use your citations as crutches. You should be weaving a narrative around your citations, not leaving a literature dump for your reader. Interpret your citations, critically evaluate your citations, and show how they fit into your story.

Use citations to acknowledge others’ work, aid the reader to investigate more, and convince the reader you know what you’re talking about. But in the end, remember the paper is about your science, your writing, and your thoughts. So don’t cite to show off and don’t cite to hide.

Read papers by particularly good writers in your field and think about when, where, and how frequently they cite. Think about what citation styles help you as a reader and which ones hinder.

Cite as specifically as possible. For example: Method X has been used with cats (Johnson 2010), dogs (Smith 2010), and mice (Andrews 2011). But, if this level of specificity is not required, move citations to the end of sentences to make your sentences easier to read.

Research Methodology Series

Research Methodology Series

Referencing Made Easy: Reference Management Softwares

ROMIT SAXENA,1 JAYA SHANKAR KAUSHIK2
From Departments of Pediatrics, 1Maulana Azad Medical College, New Delhi; 2Post Graduate Institute of Medical Sciences, Rohtak, Haryana. Correspondence to: Dr Romit Saxena, Department of Pediatrics, Maulana Azad Medical College, Bahadur Shah Zafar Marg, New Delhi. [email protected] PII: S097475591600363

Note: This early-online version of the article is an unedited manuscript that has been accepted for publication. It has been posted to the website for making it available to readers, ahead of its publication in print. This version will undergo copy-editing, typesetting, and proofreading, before final publication; and the text may undergo minor changes in the final version.

INDIAN PEDIATRICS, SEPTEMBER 04, 2021 [E-PUB AHEAD OF PRINT]

ABSTRACT

Reference management softwares are a powerful tool in the researchers’ armamentarium. They primarily help in resequencing, re-styling and reformatting of the citation content in the research manuscripts. They also enable multi-user collaboration on research and allow the researcher to manage database searches and digital libraries. Using these softwares allows synchronization of cloud-based digital libraries on multiple electronic devices, enabling remote access, and also allows for management of online portfolios. We, herein, describe the basic principles, functions, and limitations of various reference management softwares.

Keywords: Bibliography, Citation, Metadata, Research.

Researchers often use existing medical literature, such as books, journal articles, monographs and internet sites, as a base for new research articles. The researcher duly acknowledges and gives credit to the previous researcher for their contribution by citing the referenced literature sources at the end of one’s article.

Systematic Literature Review Tools: Are We There Yet?

Systematic Literature Review Tools: Are we there yet?

Dominik Voigt1,2, Oliver Kopp1, and Karoline Wild2
1 JabRef e. V., Sindelfingen, Germany [firstname]@jabref.org
2 University of Stuttgart, Institute of Architecture of Application Systems, Stuttgart, Germany [email protected]

Abstract. The number of publications is steadily growing. Systematic literature reviews (SLRs) are one answer to this issue. A variety of tools exists designed to support the review process. This paper summarizes requirements for adequate tooling support and shows that existing tools do not meet all of them. We further investigate whether reference management tools can be used in conjunction with existing SLR tools to address the current gaps in supporting SLRs. For that we evaluate three reference management tools, JabRef, BibSonomy, and Zotero, against currently unaddressed requirements and outline the next steps.

Keywords: methods, systematic literature review, tool support

1 Introduction

With the ever-growing number of publications in computer science [15], and other fields of research, the conduction of meta studies becomes necessary to keep up [17, 20]. Kitchenham [10] introduced the systematic literature review (SLR) method to address this issue. The main idea is to systematically search and evaluate all existing publications regarding a specific topic. Computer science researchers that conduct SLRs face three main challenges [18]: (i) For SLR novices, the learning of the SLR process and the definition of the research protocol is challenging. (ii) All SLR practitioners face difficulties assessing the quality of primary studies, a critical step within the conduction of an SLR, especially for qualitative studies. (iii) The access and acquisition of relevant studies across multiple e-libraries is a challenge.

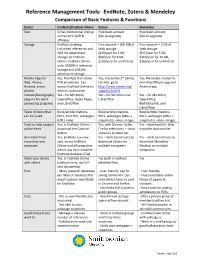

Reference Management Tools: Endnote, Zotero & Mendeley

Reference Management Tools: EndNote, Zotero & Mendeley

Comparison of Basic Features & Functions

| Basics | EndNote/EndNote Online | Zotero | Mendeley |
| --- | --- | --- | --- |
| Cost | SI has institutional license, so free to SI staff & affiliates | Free basic account (fee to upgrade) | Free basic account (fee to upgrade) |
| Storage | EndNote desktop: Unlimited references and 5 GB file attachment storage on EndNote Online. EndNote Online only: 50,000 in reference storage and 2 GB file attachment storage. | Free account = 300 MB of Web storage ($20/year for 2 GB, $60/year for 6 GB, $120/year for unlimited) | Free account = 2 GB of Web storage ($55/year for 5 GB, $110/year for 10 GB, $165/year for unlimited) |
| Mobile Apps for iPad, iPhone, Android, smart phones | Yes, iPad App that allows PDF annotation. Can access EndNote Online on devices via browser. | Yes, created by 3rd parties. For info, go to http://www.zotero.org/support/mobile | Yes, Mendeley created its own iPad/iPhone app and Android app |
| Citation/bibliography plug-ins for word processing programs | Yes – for MS Word, OpenOffice, Apple Pages, and LibreOffice | Yes – for MS Word and LibreOffice | Yes – for MS Word, OpenOffice, BibTeX/LaTeX, and LibreOffice |
| Types of items that can be saved | Book/article citations, PDFs, Text files, webpages (URLs only) | Book/article citations, PDFs, webpages (URLs + snapshots), video, images | Book/article citations, PDFs, webpages (URLs + snapshots), video, images |
| Tools to help capture online items | Yes, in EndNote Online – download the Capture | Yes, with Chrome, Safari, Firefox extensions – | Yes – download the Web |