Stat 8112 Lecture Notes Unbiased Estimating Equations Charles J

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Autoregressive Conditional Kurtosis

Autoregressive conditional kurtosis Article Accepted Version Brooks, C., Burke, S. P., Heravi, S. and Persand, G. (2005) Autoregressive conditional kurtosis. Journal of Financial Econometrics, 3 (3). pp. 399-421. ISSN 1479-8417 doi: https://doi.org/10.1093/jjfinec/nbi018 Available at http://centaur.reading.ac.uk/20558/ It is advisable to refer to the publisher’s version if you intend to cite from the work. See Guidance on citing . Published version at: http://dx.doi.org/10.1093/jjfinec/nbi018 To link to this article DOI: http://dx.doi.org/10.1093/jjfinec/nbi018 Publisher: Oxford University Press All outputs in CentAUR are protected by Intellectual Property Rights law, including copyright law. Copyright and IPR is retained by the creators or other copyright holders. Terms and conditions for use of this material are defined in the End User Agreement . www.reading.ac.uk/centaur CentAUR Central Archive at the University of Reading Reading’s research outputs online This is a pre-copyedited, author-produced PDF of an article accepted for publication in the Journal of Financial Econometrics following peer review. The definitive publisher-authenticated version (C. Brooks, S.P. Burke, S. Heravi and G. Persand, ‘Autoregressive Conditional Kurtosis’, Journal of Financial Econometrics, 3.3 (2005)) is available online at: http://jfec.oxfordjournals.org/content/3/3/399 1 Autoregressive Conditional Kurtosis Chris Brooks1, Simon P. Burke2, Saeed Heravi3, Gita Persand4 The authors’ affiliations are 1Corresponding author: Cass Business School, City of London, 106 Bunhill Row, London EC1Y 8TZ, UK, tel: (+44) 20 70 40 51 68; fax: (+44) 20 70 40 88 81 41; e-mail: [email protected] ; 2School of Business, University of Reading 3Cardiff Business School, and 4Management School, University of Southampton. -

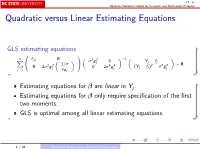

Quadratic Versus Linear Estimating Equations

ST 762 Nonlinear Statistical Models for Univariate and Multivariate Response Quadratic versus Linear Estimating Equations GLS estimating equations 0 1 n fβj 0 2 2 −1 X σ gj 0 Yj − fj @ 2 2 1/σ A 4 4 2 2 2 = 0: 0 2σ gj 0 2σ gj (Yj − fj ) − σ gj j=1 νθj Estimating equations for β are linear in Yj . Estimating equations for β only require specification of the first two moments. GLS is optimal among all linear estimating equations. 1 / 26 Quadratic versus Linear Estimating Equations ST 762 Nonlinear Statistical Models for Univariate and Multivariate Response Gaussian ML estimating equations 0 2 2 1 n fβj 2σ gj νβj 2 2 −1 X σ g 0 Yj − fj j = 0: @ 2 2 1/σ A 4 4 (Y − f )2 − σ2g 2 0 2σ gj 0 2σ gj j j j j=1 νθj Estimating equations for β are quadratic in Yj . Estimating equations for β require specification of the third and fourth moments as well. Specifically, if we let Yj − f (xj ; β) j = ; σg (β; θ; xj ) then we need to know 3 ∗ 2 ∗ E j = ζj and var j = 2 + κj : 2 / 26 Quadratic versus Linear Estimating Equations ST 762 Nonlinear Statistical Models for Univariate and Multivariate Response Questions ∗ ∗ ^ If we know the true values ζj and κj , how much is β improved using the quadratic estimating equations versus using the linear estimating equations? If we use working values (for example ζj = κj = 0, corresponding to ∗ ∗ normality) that are not the true values (i.e., ζj and κj ), is there any improvement in using the quadratic estimating equations? If we use working variance functions that are not the true variance functions, is there -

Inference Based on Estimating Equations and Probability-Linked Data

University of Wollongong Research Online Centre for Statistical & Survey Methodology Faculty of Engineering and Information Working Paper Series Sciences 2009 Inference Based on Estimating Equations and Probability-Linked Data R. Chambers University of Wollongong, [email protected] J. Chipperfield Australian Bureau of Statistics Walter Davis University of Wollongong, [email protected] M. Kovacevic Statistics Canada Follow this and additional works at: https://ro.uow.edu.au/cssmwp Recommended Citation Chambers, R.; Chipperfield, J.; Davis, Walter; and Kovacevic, M., Inference Based on Estimating Equations and Probability-Linked Data, Centre for Statistical and Survey Methodology, University of Wollongong, Working Paper 18-09, 2009, 36p. https://ro.uow.edu.au/cssmwp/38 Research Online is the open access institutional repository for the University of Wollongong. For further information contact the UOW Library: [email protected] Centre for Statistical and Survey Methodology The University of Wollongong Working Paper 18-09 Inference Based on Estimating Equations and Probability-Linked Data Ray Chambers, James Chipperfield, Walter Davis, Milorad Kovacevic Copyright © 2008 by the Centre for Statistical & Survey Methodology, UOW. Work in progress, no part of this paper may be reproduced without permission from the Centre. Centre for Statistical & Survey Methodology, University of Wollongong, Wollongong NSW 2522. Phone +61 2 4221 5435, Fax +61 2 4221 4845. Email: [email protected] Inference Based on Estimating Equations and Probability-Linked Data Ray Chambers, University of Wollongong James Chipperfield, Australian Bureau of Statistics Walter Davis, Statistics New Zealand Milorad Kovacevic, Statistics Canada Abstract Data obtained after probability linkage of administrative registers will include errors due to the fact that some linked records contain data items sourced from different individuals. -

Generalized Estimating Equations for Mixed Models

GENERALIZED ESTIMATING EQUATIONS FOR MIXED MODELS Lulah Alnaji A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY August 2018 Committee: Hanfeng Chen, Advisor Robert Dyer, Graduate Faculty Representative Wei Ning Junfeng Shang Copyright c August 2018 Lulah Alnaji All rights reserved iii ABSTRACT Hanfeng Chen, Advisor Most statistical approaches of molding the relationship between the explanatory variables and the responses assume subjects are independent. However, in clinical studies the longitudinal data are quite common. In this type of data, each subject is assessed repeatedly over a period of time. Therefore, the independence assumption is unlikely to be valid with longitudinal data due to the correlated observations of each subject. Generalized estimating equations method is a popular choice for longitudinal studies. It is an efficient method since it takes the within-subjects correla- tion into account by introducing the n n working correlation matrix R(↵) which is fully char- ⇥ acterized by the correlation parameter ↵. Although the generalized estimating equations’ method- ology considers correlation among the repeated observations on the same subject, it ignores the between-subject correlation and assumes subjects are independent. The objective of this dissertation is to provide an extension to the generalized estimating equa- tions to take both within-subject and between-subject correlations into account by incorporating the random effect b to the model. If our interest focuses on the regression coefficients, we regard the correlation parameter ↵ and as nuisance and estimate the fixed effects β using the estimating equations U(β,G,ˆ ↵ˆ). -

Using Generalized Estimating Equations to Estimate Nonlinear

Using generalized estimating equations to estimate nonlinear models with spatial data ∗ § Cuicui Lu†, Weining Wang ‡, Jeffrey M. Wooldridge Abstract In this paper, we study estimation of nonlinear models with cross sectional data using two-step generalized estimating equations (GEE) in the quasi-maximum likelihood estimation (QMLE) framework. In the interest of improving efficiency, we propose a grouping estimator to account for the potential spatial correlation in the underlying innovations. We use a Poisson model and a Negative Binomial II model for count data and a Probit model for binary response data to demon- strate the GEE procedure. Under mild weak dependency assumptions, results on estimation consistency and asymptotic normality are provided. Monte Carlo simulations show efficiency gain of our approach in comparison of different esti- mation methods for count data and binary response data. Finally we apply the GEE approach to study the determinants of the inflow foreign direct investment (FDI) to China. keywords: quasi-maximum likelihood estimation; generalized estimating equations; nonlinear models; spatial dependence; count data; binary response data; FDI equation JEL Codes: C13, C21, C35, C51 arXiv:1810.05855v1 [econ.EM] 13 Oct 2018 ∗This paper is supported by the National Natural Science Foundation of China, No.71601094 and German Research Foundation. †Department of Economics, Nanjing University Business School, Nanjing, Jiangsu 210093 China; email: [email protected] ‡Department of Economics, City, U of London; Northampton Square, Clerkenwell, London EC1V 0HB. Humboldt-Universität zu Berlin, C.A.S.E. - Center for Applied Statistics and Economics; email: [email protected] §Department of Economics, Michigan State University, East Lansing, MI 48824 USA; email: [email protected] 1 1 Introduction In empirical economic and social studies, there are many examples of discrete data which exhibit spatial or cross-sectional correlations possibly due to the closeness of ge- ographical locations of individuals or agents. -

Introduction to the Generalized Estimating Equations and Its Applications in Small Cluster Randomized Trials

Introduction to the Generalized Estimating Equations and its Applications in Small Cluster Randomized Trials Fan Li BIOSTAT 900 Seminar November 11, 2016 1 / 24 Overview Outline Background The Generalized Estimating Equations (GEEs) Improved Small-sample Inference Take-home Message How GEEs work? How to improve small-sample inference, especially in cluster randomized trial (CRT) applications? 2 / 24 Cluster Randomized Trials (CRTs) Randomizing clusters of subjects rather than independent subjects (convenience, ethics, contamination etc.) Intervention administered at the cluster level Outcomes are measured at the individual level Outcomes from subjects within the same cluster exhibit a greater correlation than those from different clusters Intraclass correlation coefficient (ICC) ranges from 0:01 to 0:1 Interest lies in evaluating the intervention effect Small number of clusters with large cluster sizes 3 / 24 The Stop Colorectal Cancer (STOP CRC) Study Study the effect of intervention to improve cancer screening 26 health clinics (n = 26) are allocated to either usual care or intervention (1-to-1 ratio) Usual care - provided opportunistic/occasional screening Intervention - an automated program for mailing testing kits with instructions to patients The clinics contain variable number of patients (mi): Min 461/Med 1426/Max 3302 Primary outcome - The patient-level binary outcome (yij), completion status of screening test within a year of study initiation Baseline clinic- and individual-level covariates Inference { estimand? 4 / 24 The Estimand -

Maximum Likelihood Estimation of Distribution Parameters from Incomplete Data Edwin Joseph Hughes Iowa State University

Iowa State University Capstones, Theses and Retrospective Theses and Dissertations Dissertations 1962 Maximum likelihood estimation of distribution parameters from incomplete data Edwin Joseph Hughes Iowa State University Follow this and additional works at: https://lib.dr.iastate.edu/rtd Part of the Mathematics Commons Recommended Citation Hughes, Edwin Joseph, "Maximum likelihood estimation of distribution parameters from incomplete data " (1962). Retrospective Theses and Dissertations. 2089. https://lib.dr.iastate.edu/rtd/2089 This Dissertation is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Retrospective Theses and Dissertations by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. This dissertation has been 62—6497 microfilmed exactly as received HUGHES, Edwin Joseph, 1928- MAXIMUM LIKELIHOOD ESTIMATION OF DISTRIBUTION PARAMETERS FROM INCOMPLETE DATA. Iôwà State University of Science and Technology Ph.D., 1962 Mathematics University Microfilms, Inc., Ann Arbor, Michigan MAXIMUM LIKELIHOOD ESTIMATION OF DISTRIBUTION PARAMETERS FROM INCOMPLETE DATA by Edwin Joseph Hughes A Dissertation Submitted to the Graduate Faculty in Partial Fulfillment of The Requirements for the Degree of DOCTOR OF PHILOSOPHY Major Subject: Statistics Approved: Signature was redacted for privacy. In Ch _ „ rk Signature was redacted for privacy. -

Application of Generalized Linear Models and Generalized Estimation Equations

Fisheries Research 145 (2013) 66–75 Contents lists available at SciVerse ScienceDirect Fisheries Research j ournal homepage: www.elsevier.com/locate/fishres Application of Generalized Linear Models and Generalized Estimation Equations to model at-haulback mortality of blue sharks captured in a pelagic longline fishery in the Atlantic Ocean a,∗ b a Rui Coelho , Paulo Infante , Miguel N. Santos a Instituto Português do Mar e da Atmosfera (IPMA I.P.), Avenida 5 de Outubro s/n, 8700-305 Olhão, Portugal b Centro de Investigac¸ ão em Matemática e Aplicac¸ ões (CIMA-UE) e Departamento de Matemática, ECT da Universidade de Évora, Rua Romão Ramalho 59, 7000-671 Évora, Portugal a r t a b i s c l e i n f o t r a c t Article history: At-haulback mortality of blue shark (Prionace glauca) captured by the Portuguese pelagic longline fish- Received 31 July 2012 ery targeting swordfish in the Atlantic was modeled. Data was collected by onboard fishery observers Received in revised form 29 January 2013 that monitored 762 fishing sets (1 005 486 hooks) and recorded information on 26 383 blue sharks. The Accepted 16 February 2013 sample size distribution ranged from 40 to 305 cm fork length, with 13.3% of the specimens captured dead at-haulback. Data modeling was carried out with Generalized Linear Models (GLM) and Gener- Keywords: alized Estimation Equations (GEE), given the fishery-dependent source of the data. The explanatory Generalized Estimation Equations variables influencing blue shark mortality rates were year, specimen size, fishing location, sex, season Generalized Linear Models and branch line material. -

M-Estimation (Estimating Equations)

Chapter 7 M-Estimation (Estimating Equations) 7.1 Introduction In Chapter 1 we made the distinction between the parts of a fully specified statistical model. The primary part is the part that is most important for answering the underlying scientific questions. The secondary part consists of all the remaining details of the model. Usually the primary part is the mean or systematic part of the model, and the secondary part is mainly concerned with the distributional assumptions about the random part of the model. The full specification of the model is important for constructing the likelihood and for using the associated classical methods of inference as spelled out in Chapters 2 and 3 and supported by the asymptotic results of Chapter 6. However, we are now ready to consider robustifying the inference so that misspecification of some secondary assumptions does not invalidate the resulting inferential methods. Basically this robustified inference relies on replacing the 1 b information matrix inverse I.Â/ in the asymptotic normality result for ÂMLE by a generalization I.Â/1B.Â/I.Â/1 called the sandwich matrix. In correctly specified models, I.Â/ D B.Â/, and the sandwich matrix just reduces to the usual I.Â/1. When the model is not correctly specified, I.Â/ ¤ B.Â/, and the sandwich matrix is important for obtaining approximately valid inference. Thus, use of this more general result accommodates misspecification but is still appropriate in correctly specified models although its use there in small samples can entail some loss of efficiency relative to standard likelihood inference. -

Estimated Estimating Equations: Semiparamet- Ric Inference for Clustered/Longitudinal Data

Estimated estimating equations: Semiparamet- ric inference for clustered/longitudinal data Jeng-Min Chiou Academia Sinica, Taipei, Taiwan and Hans-Georg Muller¨ † University of California, Davis, USA Summary. We introduce a flexible marginal modelling approach for statistical in- ference for clustered/longitudinal data under minimal assumptions. This estimated estimating equations (EEE) approach is semiparametric and the proposed mod- els are fitted by quasi-likelihood regression, where the unknown marginal means are a function of the fixed-effects linear predictor with unknown smooth link, and variance-covariance is an unknown smooth function of the marginal means. We propose to estimate the nonparametric link and variance-covariance functions via smoothing methods, while the regression parameters are obtained via the esti- mated estimating equations. These are score equations that contain nonparamet- ric function estimates. The proposed EEE approach is motivated by its flexibil- ity and easy implementation. Moreover, if data follow a generalized linear mixed model (GLMM), with either specified or unspecified distribution of random effects and link function, the proposed model emerges as the corresponding marginal (population-average) version and can be used to obtain inference for the fixed ef- fects in the underlying GLMM, without the need to specify any other components of this GLMM. Among marginal models, the EEE approach provides a flexible al- ternative to modelling with generalized estimating equations (GEE). Applications of EEE include diagnostics and link selection. The asymptotic distribution of the proposed estimators for the model parameters is derived, enabling statistical infer- ence. Practical illustrations include Poisson modelling of repeated epileptic seizure counts and simulations for clustered binomial responses. -

Association Between Early Cerebral Oxygenation and Neurodevelopmental Impairment Or Death in Premature Infants

Journal of Perinatology https://doi.org/10.1038/s41372-021-00942-w ARTICLE Association between early cerebral oxygenation and neurodevelopmental impairment or death in premature infants 1 1 1 1 1 1 Anup C. Katheria ● Jacob Stout ● Ana L. Morales ● Debra Poeltler ● Wade D. Rich ● Jane Steen ● 1 1 Shauna Nuzzo ● Neil Finer Received: 6 July 2020 / Revised: 15 October 2020 / Accepted: 19 January 2021 © The Author(s), under exclusive licence to Springer Nature America, Inc. 2021 Abstract Objective To assess the relationship between cerebral oxygenation in the first 72 h of life and neurodevelopmental impairment (NDI) at 2 years corrected age in former premature infants. Study design Prospective observational cohort study of 127 infants <32 weeks GA at birth with cerebral oxygenation monitoring using NIRS in the first 72 h of life. Results Using a threshold cutoff for cerebral hypoxia, infants with NDI or death had increased duration of hypoxia (4 vs 2.3%, p = 0.001), which was more pronounced in the 23–27 week subgroup (7.6 vs 3.2%, p < 0.001). Individual generalized 1234567890();,: 1234567890();,: estimating equations to adjust for repeated measures were modeled in this subgroup for the physiologic parameters including StO2. StO2 < 67% was a predictor for death or NDI (OR 2.75, 95% CI 1.006, 7.5132, p = 0.049). Conclusion An increased duration of cerebral hypoxia is associated with NDI or death in infants born <32 weeks GA. Introduction context of neonatal morbidities like hypoxic ischemic encephalopathy (HIE), late-onset sepsis, and congenital Underdeveloped cerebral circulatory systems of preterm heart disease. -

Estimating Equations and Maximum Likelihood Asymptotics

This is page 188 Printer: Opaque this 19 Estimating Equations and Maximum Likelihood asymptotics Here we give a rigorous account of the consistency and asymptotic normal- ity for certain solutions of estimating equations, of which least squares and maximum likelihood estimation are special cases. Though the material and the proof in particular are technical, it is worthwhile to understand the conditions under which these types of estimators have such desired prop- erties, and how those conditions can be verified, as is done in the examples that follow. Let be given n 2 N and a set χ, a random vector X 2 χn, a parameter p n p set Θ ⊂ R with non-empty interior and a function Un : χ × Θ ! R . We consider the estimating equation Un(X; θ) = 0; θ 2 Θ: (19.1) For least squares estimation, say pairs (Xi;Yi); i = 1; : : : ; n with distribution depending on θ are observed for which E[YijXi] = fi(Xi; θ) for fi(x; θ) in some parametric class of functions. The least squares estimate of θ is given as the minimizer of n 1 X 2 J(θ; X) = (y − f (X ; θ)) ; 2n i i i i=1 19. Estimating Equations and Maximum Likelihood asymptotics 189 which under smoothness conditions can be obtained via (19.1) with n 1 X U (x; θ) = @ J(θ; x) = (f (x ; θ) − y ) @ f (x ; θ): (19.2) n θ n i i i θ i i i=1 In the following, @θ as in (19.2) applied to a real valued function depending 2 on a vector parameter θ returns a gradient vector, and likewise @θ returns a matrix of second partial derivatives.