Machine Learning of Symbolic Compositional Rules with Genetic Programming: Dissonance Treatment in Palestrina

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Daily Eastern News: August 27, 2018 Eastern Illinois University

Eastern Illinois University, The Keep. August 2018. 8-27-2018. Daily Eastern News: August 27, 2018. Eastern Illinois University. Follow this and additional works at: https://thekeep.eiu.edu/den_2018_aug

Recommended Citation: Eastern Illinois University, "Daily Eastern News: August 27, 2018" (2018). August. 7. https://thekeep.eiu.edu/den_2018_aug/7

This Book is brought to you for free and open access by the 2018 at The Keep. It has been accepted for inclusion in August by an authorized administrator of The Keep. For more information, please contact [email protected].

HOUSING AND DINING: Eastern’s housing and dining staff work with students to establish a smooth start to the semester. PAGE 3

VOLLEYBALL RECORD: Eastern’s volleyball team had a 2-2 record this past weekend, finishing the Panther Invitational in third place. PAGE 8

THE DAILY EASTERN NEWS. Monday, August 27, 2018. “TELL THE TRUTH AND DON’T BE AFRAID”. VOL. 103 | NO. 6. CELEBRATING A CENTURY OF COVERAGE, EST. 1915. WWW.DAILYEASTERNNEWS.COM

Phishy business: Students receive spam from people they know. Staff Report | @DEN_News

Phishing emails and spam have been sent to several students with a panthermail email address from people they know Sunday night. The emails contain a subject line that relates to the person receiving the email, and ... Beneath the green box there is more text that states the “message has been delayed” and gives a date. Josh Reinhart, Eastern’s public information coordinator, said the emails might have reached a sizeable population of people with an [email protected] email address.

AP Music Theory Course Description Audio Files

Music Theory Course Description, Effective Fall 2012. AP Course Descriptions are updated regularly. Please visit AP Central® (apcentral.collegeboard.org) to determine whether a more recent Course Description PDF is available.

The College Board: The College Board is a mission-driven not-for-profit organization that connects students to college success and opportunity. Founded in 1900, the College Board was created to expand access to higher education. Today, the membership association is made up of more than 5,900 of the world’s leading educational institutions and is dedicated to promoting excellence and equity in education. Each year, the College Board helps more than seven million students prepare for a successful transition to college through programs and services in college readiness and college success, including the SAT® and the Advanced Placement Program®. The organization also serves the education community through research and advocacy on behalf of students, educators, and schools. For further information, visit www.collegeboard.org.

AP Equity and Access Policy: The College Board strongly encourages educators to make equitable access a guiding principle for their AP programs by giving all willing and academically prepared students the opportunity to participate in AP. We encourage the elimination of barriers that restrict access to AP for students from ethnic, racial, and socioeconomic groups that have been traditionally underserved. Schools should make every effort to ensure their AP classes reflect the diversity of their student population. The College Board also believes that all students should have access to academically challenging course work before they enroll in AP classes, which can prepare them for AP success.

02-11-Nonchordtones.Pdf

LearnMusicTheory.net 2.11 Nonchord Tones

Nonchord tones = notes that aren't part of the chord. Nonchord tones always embellish/decorate chord tones. Keep in mind that many authors use the term "consonance" for a chord tone, and "dissonance" for a nonchord tone.

suspension (S) = "delayed step down". 1. Starts as chord tone, then becomes... 2. Nonchord tone metrically accented, 3. ...then resolves down by step. Common types: 7-6, 4-3, 9-8, 2-3. (Example stages: Preparation, Suspension, Resolution; C: IV6 I)

passing tone (PT): PTs are approached and left by step in the same direction.

neighbor tone (NT): NTs are approached and left by step in different directions.

escape tone (esc.): -"step-leap" -to escape, you "step to window, leap out" -may be metrically accented or unaccented -sometimes called "incomplete neighbor tone"

retardation (R) = "DELAYED STEP UP". 1. Starts as chord tone, then becomes... 2. Nonchord tone metrically accented 3. ...then resolves up by step. (Example stages: Preparation, Retardation, Resolution; vii°6 I)

appoggiatura (app.): -"leap-step" -may be metrically accented or unaccented -also sometimes called "incomplete neighbor tone"

anticipation (ant.): -approached by leap or step from either direction -unaccented -must be a chord tone in the next harmony -may or may not be tied into the resolution note

cambiata (c) -- LESS COMMON: -also called "changing tone" -connect 2 consonances a 3rd apart (i.e. C-A in this example) -only the 2nd note of the pattern is a dissonance -specific pattern shown below -common in 15th and 16th centuries

neighbor group (n gr.): -upper and lower neighbor together -can also be lower neighbor followed by upper neighbor (vii°6 I)

pedal point or pedal tone (bottom C in left hand) from Bach, WTC, Fugue I in C, m.

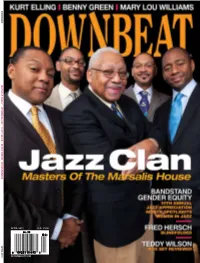

Downbeat.Com April 2011 U.K. £3.50

DOWNBEAT. APRIL 2011. U.K. £3.50. DOWNBEAT.COM. MARSALIS FAMILY // WOMEN IN JAZZ // KURT ELLING // BENNY GREEN // BRASS SCHOOL

APRIL 2011, VOLUME 78 – NUMBER 4

President Kevin Maher; Publisher Frank Alkyer; Editor Ed Enright; Associate Editor Aaron Cohen; Art Director Ara Tirado; Production Associate Andy Williams; Bookkeeper Margaret Stevens; Circulation Manager Sue Mahal; Circulation Associate Maureen Flaherty

ADVERTISING SALES. Record Companies & Schools: Jennifer Ruban-Gentile, 630-941-2030, [email protected]. Musical Instruments & East Coast Schools: Ritche Deraney, 201-445-6260, [email protected]. Classified Advertising Sales: Sue Mahal, 630-941-2030, [email protected]

OFFICES. 102 N. Haven Road, Elmhurst, IL 60126–2970. 630-941-2030. Fax: 630-941-3210. http://downbeat.com [email protected]

CUSTOMER SERVICE. 877-904-5299 [email protected]

CONTRIBUTORS. Senior Contributors: Michael Bourne, John McDonough, Howard Mandel. Atlanta: Jon Ross; Austin: Michael Point, Kevin Whitehead; Boston: Fred Bouchard, Frank-John Hadley; Chicago: John Corbett, Alain Drouot, Michael Jackson, Peter Margasak, Bill Meyer, Mitch Myers, Paul Natkin, Howard Reich; Denver: Norman Provizer; Indiana: Mark Sheldon; Iowa: Will Smith; Los Angeles: Earl Gibson, Todd Jenkins, Kirk Silsbee, Chris Walker, Joe Woodard; Michigan: John Ephland; Minneapolis: Robin James; Nashville: Robert Doerschuk; New Orleans: Erika Goldring, David Kunian, Jennifer Odell; New York: Alan Bergman, Herb Boyd, Bill Douthart, Ira Gitler, Eugene Gologursky, Norm Harris, D.D. Jackson, Jimmy Katz,

IV. Fabric Summary 282 Copyrighted Material

Eastern State Penitentiary HSR: IV. Fabric Summary

IV. FABRIC SUMMARY: CONSTRUCTION, ALTERATIONS, AND USES OF SPACE (for documentation, see Appendices A and B, by date, and C, by location). Jeffrey A. Cohen

§ A. Front Building (figs. C3.1 - C3.19)

Work began in the 1823 building season, following the commencement of the perimeter walls and preceding that of the cellblocks. In August 1824 all the active stonecutters were employed cutting stones for the front building, though others were idled by a shortage of stone. Twenty-foot walls to the north were added in the 1826 season, bounding the warden's yard and the keepers' yard. Construction of the center, the first three wings, the front building and the perimeter walls was largely complete when the building commissioners turned the building over to the Board of Inspectors in July 1829. The half of the building east of the gateway held the residential apartments of the warden. The west side initially had the kitchen, bakery, and other service functions in the basement, apartments for the keepers and a corner meeting room for the inspectors on the main floor, and infirmary rooms on the upper story. The latter were used at first, but in September 1831 the physician criticized their distant location and lack of effective separation, preferring that certain cells in each block be set aside for the sick. By the time Demetz and Blouet visited, about 1836, ill prisoners were separated rather than being placed in a common infirmary, and plans were afoot for a group of cells for the sick, with doors left ajar like others.

Cultural & Heritage Tourism

Cultural & Heritage Tourism: a Handbook for Community Champions. A publication of: The Federal-Provincial-Territorial Ministers’ Table on Culture and Heritage (FPT)

The views presented here reflect the opinions of the authors, and do not necessarily represent the official position of the Provinces and Territories which supported the project:

Table of Contents
Acknowledgements
How to Use this Handbook
Developed for Community “Champions”
Handbook Organization
Showcase Studies
Learn More…
Section A – Why Cultural/Heritage Tourism is Important
Section B – Planning for Cultural/Heritage Tourism
5. Plan for a Community-Based Cultural/Heritage Tourism Destination
5.1 Understand the Planning Process
5.2 Get Ready for Visitors
Alberta Showcase: Head-Smashed-In Buffalo Jump and the Fort Museum of the NWMP Develop Aboriginal Partnerships
5.3 Assess Your Potential (Baseline Surveys and Inventory)
6. Prepare Your People
6.1 Welcome

Cedar Walton Music Albums Discography (Albums & Timeline)

Cedar Walton music albums discography (albums & timeline)

Spectrum https://zh.listvote.com/lists/music/albums/spectrum-7575264/songs
The Electric Boogaloo Song https://zh.listvote.com/lists/music/albums/the-electric-boogaloo-song-7731707/songs
Soundscapes https://zh.listvote.com/lists/music/albums/soundscapes-19896095/songs
Breakthrough! https://zh.listvote.com/lists/music/albums/breakthrough%21-4959667/songs
Beyond Mobius https://zh.listvote.com/lists/music/albums/beyond-mobius-19873379/songs
The Pentagon https://zh.listvote.com/lists/music/albums/the-pentagon-17061976/songs
Composer https://zh.listvote.com/lists/music/albums/composer-19879540/songs
Roots https://zh.listvote.com/lists/music/albums/roots-19895558/songs
Cedar! https://zh.listvote.com/lists/music/albums/cedar%21-5056554/songs
The Bouncer https://zh.listvote.com/lists/music/albums/the-bouncer-19873760/songs
Animation https://zh.listvote.com/lists/music/albums/animation-19872363/songs
Duo https://zh.listvote.com/lists/music/albums/duo-30603418/songs
The Maestro https://zh.listvote.com/lists/music/albums/the-maestro-19894142/songs
Voices Deep Within https://zh.listvote.com/lists/music/albums/voices-deep-within-19898170/songs
Midnight Waltz https://zh.listvote.com/lists/music/albums/midnight-waltz-19894330/songs
One Flight Down https://zh.listvote.com/lists/music/albums/one-flight-down-20813825/songs
Manhattan Afternoon https://zh.listvote.com/lists/music/albums/manhattan-afternoon-19894199/songs
Soul Cycle https://zh.listvote.com/lists/music/albums/soul-cycle-7564199/songs

Third Species Counterpoint (4:1) Heath: Counterpoint (Music 221) Rules and Guidelines

Third Species Counterpoint (4:1). Heath: Counterpoint (Music 221). Rules and Guidelines

Four quarter notes of counterpoint are written for each whole note: Beat 1 (strong); Beats 2 and 4 (weak); Beat 3 (weaker than beat 1, more accented than beats 2 and 4).

• Strong beats should not outline a dissonant interval. No more than 3 consecutive measures should begin with the same interval. No unisons should be used on strong beats (other than at the beginning and end). Consecutive downbeats may be 5ths and 8ves, but no more than two in a row of the same kind should be used.

• The interval on the first quarter note is always consonant and the interval on the third is often also consonant, while the 2nd and 4th intervals may be dissonant. The third may be dissonant if the other 3 intervals are consonant.

• P5s and P8s that occur against two different notes of the cantus firmus should be separated by at least two quarter notes. P5s or P8s with only one intervening quarter note occurring within a measure (i.e., over the same cantus firmus note) are fine. Avoid having more than two consecutive strong-beat 5ths or 8ves.

• Leaps must be treated very carefully in 3rd species. Avoid too many consecutive leaps (especially in the same direction). Avoid leaps into strong beats (downbeats especially).

Dissonances are allowed on all beats except for beat 1, ONLY if they belong to one of the following gestures:

1. Passing Tone: a dissonance that fills the space between a third by direct, stepwise motion.

Glossary Alphabetical List of Terms with Short Definitions and Cross-References

Pure Counterpoint. Glossary: Alphabetical list of terms with short definitions and cross-references

A cappella. Literally, the chapel style of choral performance. By the 19th century, it had come to mean unaccompanied choral music such as the sacred Renaissance style, but historically instruments were often used to accompany and double vocal parts.

Accidental. A sharp or flat appended to alter a note. See Musica ficta; Signature.

Adjacent voices. Different voices with the closest ranges. For example, Alto and Tenor, but not Soprano and Tenor. See Voice types.

Alternatim. Textural alternation between chant and polyphony, a technique derived from *antiphonal *psalmody.

Ambitus (Ambit). The overall range of a voice or a mode, typically an octave.

Answer. The restatement of a *subject in another voice or voices, confirming *imitation. In strict imitation between *equal voices, an answer would come at a unison or octave.

Authentic cadence. The modal *clausula vera (true close) cadence that is articulated harmonically by a *leading tone. See Dominant function.

Authentic mode. A modal scale beginning and ending on its final. See Mode; Plagal mode.

Baroque era or period. In music history, 1600-1750.

Boundary interval. The intervallic distance between the lowest and highest notes of a melodic passage.

Breve. See Duration.

Cadence (cadenza, clausula). The musical gesture of a point of arrival and often, repose. It occurs at the end of a phrase or larger section, marking closure. In polyphony, most cadences are preceded by a *suspension. A cadence pattern could be as short as two notes or quite elongated. See Harmonic Cadence.

(A) Data in the Life: Authorship Attribution of Lennon-McCartney Songs

(A) Data in the Life: Authorship Attribution of Lennon-McCartney Songs. Mark E. Glickman∗, Department of Statistics, Harvard University, [email protected]. Jason I. Brown, Department of Mathematics and Statistics, Dalhousie University, [email protected]. Ryan B. Song, School of Engineering and Applied Sciences, Harvard University, [email protected]. arXiv:1906.05427v1 [cs.SD] 12 Jun 2019

Abstract: The songwriting duo of John Lennon and Paul McCartney, the two founding members of the Beatles, composed some of the most popular and memorable songs of the last century. Despite having authored songs under the joint credit agreement of Lennon-McCartney, it is well-documented that most of their songs or portions of songs were primarily written by exactly one of the two. Furthermore, the authorship of some Lennon-McCartney songs is in dispute, with the recollections of authorship based on previous interviews with Lennon and McCartney in conflict. For Lennon-McCartney songs of known and unknown authorship written and recorded over the period 1962-66, we extracted musical features from each song or song portion. These features consist of the occurrence of melodic notes, chords, melodic note pairs, chord change pairs, and four-note melody contours. We developed a prediction model based on variable

∗Address for correspondence: Department of Statistics, Harvard University, 1 Oxford Street, Cambridge, MA 02138, USA. E-mail address: [email protected]. Jason Brown is supported by NSERC grant RGPIN 170450-2013. The authors would like to thank Xiao-Li Meng, Michael Jordan, David C. Hoaglin, and the three anonymous referees for their helpful comments.

Information to Users

INFORMATION TO USERS

This manuscript has been reproduced from the microfilm master. UMI films the text directly from the original or copy submitted. Thus, some thesis and dissertation copies are in typewriter face, while others may be from any type of computer printer. The quality of this reproduction is dependent upon the quality of the copy submitted. Broken or indistinct print, colored or poor quality illustrations and photographs, print bleedthrough, substandard margins, and improper alignment can adversely affect reproduction. In the unlikely event that the author did not send UMI a complete manuscript and there are missing pages, these will be noted. Also, if unauthorized copyright material had to be removed, a note will indicate the deletion. Oversize materials (e.g., maps, drawings, charts) are reproduced by sectioning the original, beginning at the upper left-hand corner and continuing from left to right in equal sections with small overlaps. Each original is also photographed in one exposure and is included in reduced form at the back of the book. Photographs included in the original manuscript have been reproduced xerographically in this copy. Higher quality 6" x 9" black and white photographic prints are available for any photographs or illustrations appearing in this copy for an additional charge. Contact UMI directly to order.

University Microfilms International, A Bell & Howell Information Company, 300 North Zeeb Road, Ann Arbor, MI 48106-1346 USA. 313/761-4700, 800/521-0600

Order Number 9227220. Aspects of early major-minor tonality: Structural characteristics of the music of the sixteenth and seventeenth centuries. Anderson, Norman Douglas, Ph.D.

Cultural & Heritage Tourism: a Handbook for Community Champions

Cultural & Heritage Tourism: a Handbook for Community Champions

The views presented here reflect the opinions of the authors, and do not necessarily represent the official position of the Provinces and Territories which supported the project:

Table of Contents
Acknowledgements
How to Use this Handbook
Developed for Community “Champions”
Handbook Organization
Showcase Studies
Learn More…
Section A – Why Cultural/Heritage Tourism is Important
1. Cultural/Heritage Tourism and Your Community
1.1 Treasuring Our Past, Looking To the Future
1.2 Considering the Fit for Your Community
2. Defining Cultural/Heritage Tourism
2.1 The Birth of a New Economy
2.2 Defining our Sectors
2.3 What Can Your Community Offer?
Yukon Showcase: The Yukon Gold Explorer’s Passport
2.4 Benefits: Community Health and Wellness
3. Cultural/Heritage Tourism Visitors: Who Are They?
3.1 Canadian Boomers Hit 65
3.2 Culture as a