Intervention Strategies for the Management of Human Error

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Aero Twin, Inc. STC for Rudder Gust Lock

-- ST02540AK Aero Twin, Inc. 2403 Merrill Field Drive Anchorage, AK 99501 A43EU Airbus Defense and Space S. A. C-212-CB, CC, CD, CE, CF, DF, DE Fabrication and installation of Aero Twin, Inc., Rudder Gust Lock Kit No. 4111-212 on Airbus Defense and Space S. A. C-212 aircraft in accordance with Aero Twin, Inc., Master Data List No. 4111-212-MDL, Original Issue, dated May 8, 2020, or later FAA approved revision. : 1. The compatibility of this design change with previously approved modifications must be determined by the installer. 2. If the holder agrees to permit another person to use this Certificate to alter the product, the holder shall give the other person written evidence of that permission. 3. Instructions for Continued Airworthiness, Aero Twin, Inc. document number 4111-212-ICA, Original Issue, dated May 8, 2020, or later FAA accepted revision is a required part of this modification. 4. Airplane Flight Manual Supplement (AFMS), Aero Twin Doc. No. 4111-212-AFMS, Original Issue, dated August 27, 2020, or later FAA approved revision is a required part of this modification. November 20, 2017 September 8, 2020 _______________________________________________________ (Signature) August A. Asay Manager, Anchorage Aircraft Certification Office _______________________________________________________ (Title) _____________________________________________________________________________________________________________________________________ Any alteration of this certificate is punishable by a fine of not exceeding $1,000, or imprisonment not exceeding 3 years, or both. _____________________________________________________________________________________________________________________________________ FAA FORM 8110-2(10-68) PAGE 1 of 2 PAGES This certificate may be transferred in accordance with FAR 21.47. INSTRUCTIONS: The transfer endorsement below may be used to notify the appropriate FAA Regional Office of the transfer of this Supplemental Type Certificate. -

Aviation Maintenance Alerts

ADVISORY CIRCULAR 43-16A AVIATION MAINTENANCE ALERTS ALERT NUMBER 331, FEBRUARY 2006 CONTENTS AIRPLANES: AVIAT; BEECH; CESSNA; DASSAULT; GULFSTREAM; ISRAEL AIRCRAFT; PIPER; RAYTHEON. HELICOPTERS: AGUSTA. POWERPLANTS: PRATT & WHITNEY. ACCESSORIES: AERO-TRIM -

2018 KODIAK 100, Series II Serial Number: 100-253 Registration: N352CL

2018 KODIAK 100, Series II Serial Number: 100-253 Registration: N352CL www.modern-aviation.com | [email protected] | 1.206.762.6000 AIRCRAFT HIGHLIGHTS • Upgraded Timberline Interior Seating • TKS Ice Protection • 10-Place Oxygen Upgrade • Air Conditioning • Garmin G1000 NXi Avionics Suite Total Time Since New: Airframe 60 Hrs; Engine 1 60 Hrs Modern Aviation Aircraft Sales *All Specifications subject to independent verification Options Installed on Kodiak S/N 253: Kodiak Series II Standard Equipped Aircraft $2,150,000; Series II Paint Scheme allover white with black and silver stripes; External baggage compartment $94,500; TKS Ice Protection System (Tank in Cargo Pod) $124,500; 29” Tire Combo $1,750; GTS 800 TAS/WX-500 Stormscope Package $28,700; GDL 69A-XM Data Link with Audio Infotainment $6,950; ChartView Enable Card $5,000; Timberline Interior (Warm Brown) 4 seats $20,000; 2 additional seats $17,700; 10-place oxygen system $10,000; Bose A20 Headset (Passenger) (x2) $2,190; Air Conditioning $42,500; Total Retail Price as Optioned $2,503,790 Avionics and Equipment AVIONICS •Garmin G1000NXi Integrated Avionics Suite: •(2) Primary Flight Displays – PFD •Multifunction Display – MFD •All three are next gen, high resolution 10. inch displays •Enhanced ENGINE INSTRUMENTS (Fully integrated in the G1000NXI) • Torque (ft-lb) • RPM Prop • ITT • RPM NG (%) • Oil Temp/Pressure -

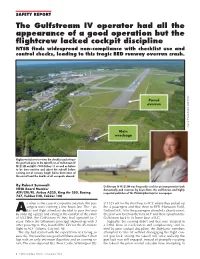

The Gulfstream IV Operator Had All the Appearance of a Good Operation But

SAFETY REPORT The Gulfstream IV operator had all the appearance of a good operation but the flightcrew lacked cockpit discipline NTSB finds widespread non-compliance with checklist use and control checks, leading to this tragic BED runway overrun crash. (Photo labels: paved overrun, main wreckage. Source: Massachusetts State Police.) Flightcrew failure to review the checklist and release the gust lock prior to the takeoff run of Gulfstream IV N121JM on BED’s 7000-ft Rwy 11 as well as failure to be time-sensitive and abort the takeoff before running out of runway length led to destruction of the aircraft and the deaths of all occupants aboard. By Robert Sumwalt, NTSB Board Member, ATP/CFII/FE. Airbus A320, King Air 350, Boeing 737, Fokker F28, Fokker 100. Gulfstream IV N121JM was frequently used for air transportation both domestically and overseas by Lewis Katz, the well-known and highly respected publisher of The Philadelphia Inquirer newspaper. As often is the case in corporate aviation, the passengers were running a few hours late. The 2 pilots and flight attendant decided to pass the time by ordering a pizza and eating in the comfort of the cabin of N121JM, the Gulfstream IV they had operated for 7 years. When the billionaire principal showed up with 3 [...] at 1325 edt for the short hop to ACY, where they picked up the 4 passengers and flew them to BED (Hanscom Field, Bedford MA). After the passengers attended a charity event, the plan was to return them to ACY and then reposition the Gulfstream back to its home base at ILG. Tragically, the evening didn’t end that way. -

Service Bulletin

MANDATORY SERVICE BULLETIN TITLE: FLIGHT CONTROLS - FLIGHT CONTROL (GUST) LOCK INSPECTION / REPLACEMENT SYNOPSIS OF CHANGE This Service Bulletin has been revised to add ending serial effectivity. The first paragraph of the Description statement has been moved to the Reason statement. The Material Information table has been revised to reflect additional control lock part number information and ending serial effectivity. Although a company name change to Textron Aviation occurred, this service bulletin will revise only technical and contact information. Relevant technical changes are marked with change bars in the outside margins. 1. Planning Information A. Effectivity (1) Airplanes (a) Civil Beech Model 19 Series, Serials MB-1 through MB-722, and MB-724 through MB-905; Beech Model 23 Series, Serials M-3, and M-555 through M-2392; Beech Model 24 Series, Serials MA-1 through MA-368, MC-2 through MC-150, and MC-152 through MC-795; Beech Model 33 Series, Serials CD-1 through CD-981, CD-983 through CD-1304, CE-1 through CE-235, CE-249, CE-250, CE-256, CE-260, CE-264 through CE-268, CE-270 through CE-1791, and CJ-1 through CJ-179; Beech Model 35 Bonanza Series, Serials D-1 through D-10403; Beech Model 36 Bonanza, Serials E-1 through E-184; Beech Model A36 Bonanza, Serials E-185 through E-3629; E-3631 through E-3635; Beech Model G36 Bonanza, Serials E-3630, E-3636 and after; Beech Model A36TC Bonanza, Serials EA-1 through EA-241 and EA-243 through EA-272; Beech Model B36TC Bonanza, Serials EA-242 and EA-273 through EA-695; The export of these commodities, technology or software are subject to the U.S. -

Airframe & Aircraft Components By

Airframe & Aircraft Components (According to the Syllabus Prescribed by Director General of Civil Aviation, Govt. of India) FIRST EDITION AIRFRAME & AIRCRAFT COMPONENTS Prepared by L.N.V.M. Society Group of Institutes * School of Aeronautics ( Approved by Director General of Civil Aviation, Govt. of India) * School of Engineering & Technology ( Approved by Director General of Civil Aviation, Govt. of India) Compiled by Sheo Singh Published By L.N.V.M. Society Group of Institutes H-974, Palam Extn., Part-1, Sec-7, Dwarka, New Delhi-77 Published By L.N.V.M. Society Group of Institutes, Palam Extn., Part-1, Sec.-7, Dwarka, New Delhi - 77 First Edition 2007 All rights reserved; no part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior written permission of the publishers. Type Setting Sushma Cover Designed by Abdul Aziz Printed at Graphic Syndicate, Naraina, New Delhi. Dedicated To Shri Laxmi Narain Verma [ Who Lived An Honest Life ] Preface This book is intended as an introductory text on “Airframe and Aircraft Components” which is an essential part of General Engineering and Maintenance Practices of DGCA license examination, BAMEL, Paper-II. It is intended that this book will provide basic information on principle, fundamentals and technical procedures in the subject matter areas relating to the “Airframe and Aircraft Components”. The written text is supplemented with large number of suitable diagrams for reinforcing the key aspects. I acknowledge with thanks the contribution of the faculty and staff of L.N.V.M. -

ATA Chapters

ATA Chapters AIRCRAFT GENERAL (ATA Number, ATA Chapter name): ATA 01 Reserved for Airline Use; ATA 02 Reserved for Airline Use; ATA 03 Reserved for Airline Use; ATA 04 Reserved for Airline Use; ATA 05 TIME LIMITS/MAINTENANCE CHECKS; ATA 06 DIMENSIONS AND AREAS; ATA 07 LIFTING AND SHORING; ATA 08 LEVELING AND WEIGHING; ATA 09 TOWING AND TAXI; ATA 10 PARKING, MOORING, STORAGE AND RETURN TO SERVICE; ATA 11 PLACARDS AND MARKINGS; ATA 12 SERVICING - ROUTINE MAINTENANCE; ATA 18 VIBRATION AND NOISE ANALYSIS (HELICOPTER ONLY); ATA 89 FLIGHT TEST INSTALLATION. AIRFRAME SYSTEMS (ATA Number, ATA Chapter name): ATA 20 STANDARD PRACTICES - AIRFRAME; ATA 21 AIR CONDITIONING AND PRESSURIZATION; ATA 22 AUTO FLIGHT; ATA 23 COMMUNICATIONS; ATA 24 ELECTRICAL POWER; ATA 25 EQUIPMENT/FURNISHINGS; ATA 26 FIRE PROTECTION; ATA 27 FLIGHT CONTROLS; ATA 28 FUEL; ATA 29 HYDRAULIC POWER; ATA 30 ICE AND RAIN PROTECTION; ATA 31 INDICATING / RECORDING SYSTEM; ATA 32 LANDING GEAR; ATA 33 LIGHTS; ATA 34 NAVIGATION; ATA 35 OXYGEN; ATA 36 PNEUMATIC; ATA 37 VACUUM; ATA 38 WATER/WASTE; ATA 39 ELECTRICAL - ELECTRONIC PANELS AND MULTIPURPOSE COMPONENTS; ATA 40 MULTISYSTEM; ATA 41 WATER BALLAST; ATA 42 INTEGRATED MODULAR AVIONICS; ATA 44 CABIN SYSTEMS; ATA 45 DIAGNOSTIC AND MAINTENANCE SYSTEM; ATA 46 INFORMATION SYSTEMS; ATA 47 NITROGEN GENERATION SYSTEM; ATA 48 IN FLIGHT FUEL DISPENSING; ATA 49 AIRBORNE AUXILIARY POWER; ATA 50 CARGO AND ACCESSORY COMPARTMENTS. STRUCTURE (ATA Number, ATA Chapter name): ATA 51 STANDARD PRACTICES AND STRUCTURES - GENERAL; ATA 52 DOORS; ATA 53 FUSELAGE; ATA 54 NACELLES/PYLONS; ATA 55 STABILIZERS; ATA 56 -

Cessna 172SP

CESSNA INTRODUCTION MODEL 172S NOTICE AT THE TIME OF ISSUANCE, THIS INFORMATION MANUAL WAS AN EXACT DUPLICATE OF THE OFFICIAL PILOT'S OPERATING HANDBOOK AND FAA APPROVED AIRPLANE FLIGHT MANUAL AND IS TO BE USED FOR GENERAL PURPOSES ONLY. IT WILL NOT BE KEPT CURRENT AND, THEREFORE, CANNOT BE USED AS A SUBSTITUTE FOR THE OFFICIAL PILOT'S OPERATING HANDBOOK AND FAA APPROVED AIRPLANE FLIGHT MANUAL INTENDED FOR OPERATION OF THE AIRPLANE. THE PILOT'S OPERATING HANDBOOK MUST BE CARRIED IN THE AIRPLANE AND AVAILABLE TO THE PILOT AT ALL TIMES. Cessna Aircraft Company Original Issue - 8 July 1998 Revision 5 - 19 July 2004 PERFORMANCE - SPECIFICATIONS *SPEED: Maximum at Sea Level 126 KNOTS; Cruise, 75% Power at 8500 Feet 124 KNOTS. CRUISE: Recommended lean mixture with fuel allowance for engine start, taxi, takeoff, climb and 45 minutes reserve. 75% Power at 8500 Feet, 53 Gallons Usable Fuel: Range 518 NM, Time 4.26 HRS; Range at 10,000 Feet, 45% Power, 53 Gallons Usable Fuel: Range 638 NM, Time 6.72 HRS. RATE-OF-CLIMB AT SEA LEVEL: 730 FPM. SERVICE CEILING: 14,000 FEET. TAKEOFF PERFORMANCE: Ground Roll 960 FEET; Total Distance Over 50 Foot Obstacle 1630 FEET. LANDING PERFORMANCE: Ground Roll 575 FEET; Total Distance Over 50 Foot Obstacle 1335 FEET. STALL SPEED: Flaps Up, Power Off 53 KCAS; Flaps Down, Power Off 48 KCAS. MAXIMUM WEIGHT: Ramp 2558 POUNDS; Takeoff 2550 POUNDS; Landing 2550 POUNDS. STANDARD EMPTY WEIGHT: 1663 POUNDS. MAXIMUM USEFUL LOAD: 895 POUNDS. BAGGAGE ALLOWANCE: 120 POUNDS -

Emergency Evacuation of Commercial Passenger Aeroplanes Second Edition 2020

JUNE 2020 EMERGENCY EVACUATION OF COMMERCIAL PASSENGER AEROPLANES SECOND EDITION 2020 @aerosociety A specialist paper from the Royal Aeronautical Society www.aerosociety.com About the Royal Aeronautical Society (RAeS) The Royal Aeronautical Society (‘the Society’) is the world’s only professional body and learned society dedicated to the entire aerospace community. Established in 1866 to further the art, science and engineering of aeronautics, the Society has been at the forefront of developments in aerospace ever since. The Society seeks to; (i) promote the highest possible standards in aerospace disciplines; (ii) provide specialist information and act as a central forum for the exchange of ideas; and (iii) play a leading role in influencing opinion on aerospace matters. The Society has a range of specialist interest groups covering all aspects of the aerospace world, from airworthiness and maintenance, unmanned aircraft systems and aerodynamics to avionics and systems, general aviation and air traffic management, to name a few. These groups consider developments in their fields and are instrumental in providing industry-leading expert opinion and evidence from their respective fields. About the Honourable Company of Air Pilots (Incorporating Air Navigators) Who we are The Company was established as a Guild in 1929 in order to ensure that pilots and navigators of the (then) fledgling aviation industry were accepted and regarded as professionals. From the beginning, the Guild was modelled on the lines of the Livery Companies of the City of London, which were originally established to protect the interests and standards of those involved in their respective trades or professions. In 1956, the Guild was formally recognised as a Livery Company. -

2013 Cessna Caravan 208B I SN 2426

2013 Cessna Caravan 208B I SN 2426 Chase Woolsey www.millionair.com [email protected] c. (409) 370-0385 o. (713) 640-4000 7555 Ipswich Rd. Houston, TX 77061 Avionics ADF: Bendix/King KR-87 AHRS: Dual Garmin GRS-77 Autopilot: Garmin GFC-700 IFCS w/yaw damper Avionics Package: Garmin G1000 Communication Radios: Dual Garmin CVR: Fairchild FA2100 DME: Bendix/King KN-63 EFIS: Garmin 2-tube FDR: Provisions Flight Director: Garmin GFC-700 IFCS Hi Frequency: Bendix/King KHF-1050 Navigation Radios: Dual Garmin Radar Altimeter: Bendix/King KRA-405B TAWS: Garmin Class B TCAS: Bendix/King KTA-870 Transponder: Garmin GTX-33 Mode S Weather Radar: Garmin GWX-68 color Airframe Total Hours: 2585.8 Total Landings: 1897 Engines PT6A-114A On Condition TBO: 3600 hour overhaul Maintenance Program: Manufacturer Total Hours: 2585.8 Engine Cycles: 1926 Time Between Overhaul: 3600 Serial Number: PCEPC2049 Maintenance Maintained: FAR Part 135 Airframe Maint. Program: Manufacturer Airframe Tracking Program: CESCOM Propeller McCauley 3-blade Serial Number: 120594 Interior Configuration/PAX: Commuter/9 passengers/2 crew Seating: Three right side 2-place seats opposite three single seats, dual crew seats General: Interior is in excellent condition as reported 03/26/2018 Storage: Aft baggage restraint Air Conditioning: Freon Features Cockpit Voice Recorder Cargo Pod Ice Protection Freon Air Conditioning Weather Radar Additional Equipment Cockpit: Multi-function display Dual Garmin GDC-74A air data computers Garmin GEA-71 engine/airframe unit Garmin GMA-1374 audio system Artex ME-406-2 2-frequency ELT Dual avionics master switches Passenger address system ADS-B Out Cabin PA system Equipment: McCauley 3-blade prop FIKI; ice protection/de-ice equipped (TKS de-ice system) Cargo pod Rudder gust lock STC 300 amp starter/generator -

Jammed Elevator Prompts Twin-Turboprop Rejected Takeoff, Runway Over-Run

FLIGHT SAFETY FOUNDATION Accident Prevention Vol. 56 No. 4 For Everyone Concerned with the Safety of Flight April 1999 Jammed Elevator Prompts Twin-turboprop Rejected Takeoff, Runway Over-run The investigating authority said that deficiencies in the Hawker Siddeley 748 flight control gust-lock system might have caused the elevator gust lock to re-engage when the flight crew checked the flight controls at the beginning of the takeoff. FSF Editorial Staff On Aug. 16, 1996, the flight crew of an Emerald Airways Hawker Siddeley (HS) 748 Series 2A rejected a takeoff at Liverpool (England) Airport when takeoff rotation was prevented by a jammed elevator. The airplane was substantially damaged when it struck an instrument landing system (ILS) power-supply building off the end of the runway. The flight crew, alone aboard the airplane, was not injured. The U.K. Air Accidents Investigation Branch (AAIB) said, in its final report, that the causes of the accident were: • “Flight control gust-lock system deficiencies, which probably caused the elevator lock to re-engage on completion of the crew’s full-and-free check of the flight [...] • “Inadequacies in maintenance information and implementation that led to failure to correctly maintain a gust-lock system, the design of which is inherently sensitive to deficiencies; [and,] • “Lack of fully effective modification action, following the fatal over-run accident to HS 748, G-BEKF, at Sumburgh [Shetland Islands, Scotland] Airport on 31 July 1979 (AIB [U.K. Accidents Investigation Branch] Report 1/81), to address the inherent design sensitivity of the flight controls gust-lock system.” The Sumburgh accident occurred when the airplane, operated by Dan-Air Services, exited the end of the runway and entered the North Sea while taking off for a charter flight to Aberdeen, Scotland. -

Dash8-200/300 - Flight Controls

Dash8-200/300 - Flight Controls FLIGHT CONTROLS CONTROLS AND INDICATORS (Figures: Rudder and yaw damper; Elevators; Spoiler and aileron; Flaps; Miscellaneous controls; Powered flight control surface controls and indications; Powered flight control switches and indications.) SYSTEMS DESCRIPTION General The primary flight controls consist of ailerons and spoilers for roll control, elevator for pitch control and a two-sectioned (fore and trailing) rudder for yaw control. Roll spoilers and fore rudder are hydraulically powered. Secondary flight controls consist of wing flaps and trimming systems for the primary flight controls, and a flight control gust lock mechanism. All flight controls may be operated from either the left or right pilot’s position. Indications for flight control positions or malfunctions are provided by trim position indicators, the powered flight control surface indicator and a series of caution lights. (Figure: Flight control surfaces location.) Yaw control Directional control about the yaw axis is provided by the rudder control system. The rudder is hydraulically powered and controlled through displacement of either pilot’s rudder pedals. A yaw damper operates through the rudder control system to improve directional stability. Rudder control system The rudder has two sections (fore and trailing) driven by dual hydraulic actuators positioned by either pilot’s rudder pedals.