Formal Languages and Logics Lecture Notes

Recommended publications

Bibliography

Bibliography [57391] ISO/IEC JTC1/SC21 N 5739. Database language SQL, April 1991. [69392] ISO/IEC JTC1/SC21 N 6931. Database language SQL (SQL3), June 1992. [A+76] M. M. Astrahan et al. System R: a relational approach to data management. ACM Trans. on Database Systems, 1(2):97–137, 1976. [AA93] P. Atzeni and V. De Antonellis. Relational Database Theory. Benjamin/Cummings Publishing Co., Menlo Park, CA, 1993. [AABM82] P. Atzeni, G. Ausiello, C. Batini, and M. Moscarini. Inclusion and equivalence between relational database schemata. Theoretical Computer Science, 19:267–285, 1982. [AB86] S. Abiteboul and N. Bidoit. Non first normal form relations: An algebra allowing restructuring. Journal of Computer and System Sciences, 33(3):361–390, 1986. [AB87a] M. Atkinson and P. Buneman. Types and persistence in database programming languages. ACM Computing Surveys, 19(2):105–190, June 1987. [AB87b] P. Atzeni and M. C. De Bernardis. A new basis for the weak instance model. In Proc. ACM Symp. on Principles of Database Systems, pages 79–86, 1987. [AB88] S. Abiteboul and C. Beeri. On the manipulation of complex objects. Technical Report, INRIA and Hebrew University, 1988. (To appear, VLDB Journal.) [AB91] S. Abiteboul and A. Bonner. Objects and views. In Proc. ACM SIGMOD Symp. on the Management of Data, 1991. [ABD+89] M. Atkinson, F. Bancilhon, D. DeWitt, K. Dittrich, D. Maier, and S. Zdonik. The object-oriented database system manifesto. In Proc. of Intl. Conf. on Deductive and Object-Oriented Databases (DOOD), pages 40–57, 1989. [ABGO93] A. Albano, R. Bergamini, G. Ghelli, and R. Orsini. -

Continuance and Transience of Lifetime Co-Authorships

Open Archive TOULOUSE Archive Ouverte ( OATAO ) OATAO is an open access repository that collects the work of Toulouse researchers and makes it freely available over the web where possible. This is an author-deposited version published in : http://oatao.univ-toulouse.fr/ Eprints ID : 13197 To link to this article : doi:10.1007/s11192-014-1426-0 URL : http://dx.doi.org/10.1007/s11192-014-1426-0 To cite this version : Cabanac, Guillaume and Hubert, Gilles and Milard, Béatrice Academic careers in Computer Science: Continuance and transience of lifetime co-authorships. (2015) Scientometrics, vol. 102 (n° 1). pp. 135-150. ISSN 0138-9130 Any correspondence concerning this service should be sent to the repository administrator: [email protected] DOI 10.1007/s11192-014-1426-0 Academic careers in Computer Science: Continuance and transience of lifetime co-authorships Guillaume Cabanac · Gilles Hubert · Béatrice Milard Abstract Scholarly publications reify fruitful collaborations between co-authors. A branch of research in the Science Studies focuses on analyzing the co-authorship networks of established scientists. Such studies tell us about how their collaborations developed through their careers. This paper updates previous work by reporting a transversal and a longitudinal study spanning the lifelong careers of a cohort of researchers from the DBLP bibliographic database. We mined 3,860 researchers’ publication records to study the evolution patterns of their co-authorships. Two features of co-authors were considered: 1) their expertise, and 2) the history of their partnerships with the sampled researchers. Our findings reveal the ephemeral nature of most collaborations: 70% of the new co-authors were only one-shot partners since they did not appear to collaborate on any further publications. -

Foundations of Databases

Foundations of Databases Foundations of Databases Serge Abiteboul INRIA–Rocquencourt Richard Hull University of Southern California Victor Vianu University of California–San Diego Addison-Wesley Publishing Company Reading, Massachusetts . Menlo Park, California New York . Don Mills, Ontario . Wokingham, England Amsterdam . Bonn . Sydney . Singapore Tokyo . Madrid . San Juan . Milan . Paris Sponsoring Editor: Lynne Doran Cote Associate Editor: Katherine Harutunian Senior Production Editor: Helen M. Wythe Cover Designer: Eileen R. Hoff Manufacturing Coordinator: Evelyn M. Beaton Cover Illustrator: Toni St. Regis Production: Superscript Editorial Production Services (Ann Knight) Composition: Windfall Software (Paul C. Anagnostopoulos, Marsha Finley, Jacqueline Scarlott), using ZzTEX Copy Editor: Patricia M. Daly Proofreader: Cecilia Thurlow The procedures and applications presented in this book have been included for their instructional value. They have been tested with care but are not guaranteed for any purpose. The publisher does not offer any warranties or representations, nor does it accept any liabilities with respect to the programs and applications. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and Addison-Wesley was aware of a trademark claim, the designations have been printed in initial caps or all caps. Library of Congress Cataloging-in-Publication Data Abiteboul, S. (Serge) Foundations of databases / Serge Abiteboul. Richard Hull, Victor Vianu. p. cm. Includes bibliographical references and index. ISBN 0-201-53771-0 1. Database management. I. Hull, Richard, 1953–. II. Vianu, Victor. III. Title. QA76.9.D3A26 1995 005.74’01—dc20 94-19295 CIP Copyright © 1995 by Addison-Wesley Publishing Company, Inc. -

Automatic Data Restructuring

Journal of Universal Computer Science, vol. 5, no. 4 (1999), 243-286 submitted: 12/4/99, accepted: 23/4/99, appeared: 28/4/99 Springer Pub. Co. Automatic Data Restructuring Seymour Ginsburg (Computer Science Department, University of Southern California, Los Angeles, California, 90089 e-mail: ginsburg@pollux.usc.edu) Nan C. Shu (IBM Los Angeles Scientific Center, Los Angeles, California) Dan A. Simovici (University of Massachusetts at Boston, Department of Mathematics and Computer Science, Boston, Massachusetts 02125 e-mail: [email protected]) Abstract: Data restructuring is often an integral but non-trivial part of information processing, especially when the data structures are fairly complicated. This paper describes the underpinnings of a program, called the Restructurer, that relieves the user of the "thinking and coding" process normally associated with writing procedural programs for data restructuring. The process is accomplished by the Restructurer in two stages. In the first, the differences in the input and output data structures are recognized and the applicability of various transformation rules analyzed. The result is a plan for mapping the specified input to the desired output. In the second stage, the plan is executed using embedded knowledge about both the target language and run-time efficiency considerations. The emphasis of this paper is on the planning stage. The restructuring operations and the mapping strategies are informally described and explained with mathematical formalism. The notion of solution of a set of instantiated forms with respect to an output form is then introduced. -

Position-And-Length-Dependent Context-Free Grammars – a New Type of Restricted Rewriting

Position-and-Length-Dependent Context-Free Grammars – A New Type of Restricted Rewriting fCFL flCFL fldCFL eREG fedREG REG feREG CFL lCFL feCFL fedCFL fledCFL fleCFL fREG eCFL leCFL ledCFL Dissertation approved by the Department of Computer Science of the Technische Universität Kaiserslautern for the award of the academic degree Doktor der Naturwissenschaften (Dr. rer. nat.), by Frank Weinberg Dean: Prof. Dr. Klaus Schneider Chair of the examination committee: Prof. Dr. Hans Hagen First reviewer: Prof. Dr. Markus Nebel Second reviewer: Prof. Dr. Hening Fernau Date of the scientific defense: 3 March 2014 D 386 Abstract For many decades, the search for language classes that extend the context-free languages enough to include various languages that arise in practice, while still keeping as many of the useful properties that context-free grammars have – most notably cubic parsing time – has been one of the major areas of research in formal language theory. In this thesis we add a new family of classes to this field, namely position-and-length-dependent context-free grammars. Our classes use the approach of regulated rewriting, where derivations in a context-free base grammar are allowed or forbidden based on, e.g., the sequence of rules used in a derivation or the sentential forms each rule is applied to. For our new classes we look at the yield of each rule application, i.e. the subword of the final word that eventually is derived from the symbols introduced by the rule application. The position and length of the yield in the final word define the position and length of the rule application, and each rule is associated with a set of positions and lengths where it is allowed to be applied. -

Stal Aanderaa Hao Wang Lars Aarvik Martin Abadi Zohar Manna James

Don Heller J. von zur Gathen Rodney Howell Mark Buckingham Moshe Vardi Hagit Attiya Raymond Greenlaw Henry Foley Tak-Wah Lam Chul Kim Eitan Gurari Jerrold W. Grossman M. Kifer J.F. Traub Brian Leininger Martin Golumbic Amotz Bar-Noy Volker Strassen Catriel Beeri Prabhakar Raghavan Louis E. Rosier Daniel M. Kan Danny Dolev Larry Ruzzo Bala Ravikumar Hsu-Chun Yen David Eppstein Herve Gallaire Clark Thomborson Rajeev Raman Miriam Balaban Arthur Werschulz Stuart Haber Amir Ben-Amram Hui Wang Oscar H. Ibarra Samuel Eilenberg Jim Gray Jik Chang Vardi Amdursky H.T. Kung Konrad Jacobs William Bultman Jacob Gonczarowski Tao Jiang Orli Waarts Richard Cole Paul Dietz Zvi Galil Vivek Gore Arnaldo V. Moura Daniel Cohen Kunsoo Park Raffaele Giancarlo Qi Zheng Eli Shamir James Thatcher Cathy McGeoch Clark Thompson Sam Kim Karol Borsuk G.M. Baudet Steve Fortune Michael Harrison Julius Plucker Nicholas Tran Michael Palis Daniel Lehmann Wilhelm Maak Martin Dietzfelbinger Arthur Banks Wolfgang Maass Kimberly King Dan Gordon Shafee Give'on Jean Musinski Eric Allender Pino Italiano Serge Plotkin Anil Kamath Jeanette Schmidt-Prozan Moti Yung Amiram Yehudai Felix Klein Joseph Naor John H. Holland Donald Stanat Jon Bentley Trudy Weibel Stefan Mazurkiewicz Daniela Rus Walter Kirchherr Harvey Garner Erich Hecke Martin Strauss Shalom Tsur Ivan Havel Marc Snir John Hopcroft E.F. Codd Chandrajit Bajaj Eli Upfal Guy Blelloch T.K. Dey Ferdinand Lindemann Matt Geller John Beatty Bernhard Zeigler James Wyllie Kurt Schutte Norman Scott Ogden Rood Karl Lieberherr Waclaw Sierpinski Carl V. Page Ronald Greenberg Erwin Engeler Robert Millikan Al Aho Richard Courant Fred Kruher W.R. Ham Jim Driscoll David Hilbert Lloyd Devore Shmuel Agmon Charles E. -

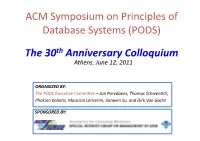

ACM Symposium on Principles of Database Systems (PODS): The 30th Anniversary Colloquium, Athens, June 12, 2011

ACM Symposium on Principles of Database Systems (PODS) The 30th Anniversary Colloquium Athens, June 12, 2011 ORGANIZED BY: The PODS Executive Committee – Jan Paredaens, Thomas Schwentick, Phokion Kolaitis, Maurizio Lenzerini, Jianwen Su, and Dirk Van Gucht SPONSORED BY: Symposium on Principles of Database Systems • Started in 1982, it is the premier international conference on Database Theory • It is co-sponsored by three ACM Special Interest Groups: SIGACT (Theoretical Computer Science), SIGART (Artificial Intelligence), and SIGMOD (Management of Data) • http://www.sigmod.org/the-pods-pages • Symposium from Greek Συμπόσιον – syn "together" + posis "a drinking" The sense of "meeting on some subject" is from 1700, reflecting the Greek fondness for mixing wine and intellectual discussion. Before PODS • Progenitors of PODS: – The XP workshops – Advanced Seminar on Theoretical Issues in Data Bases (TIDB), Cetraro, Italy, 1981 (organized by Giorgio Ausiello, François Bancilhon, Domenico Saccà, Nicolas Spyratos) XP1 Workshop on Database Theory XP stands for "eX Princetonian" (most participants – especially in the early XPs – were students of Jeffrey D. Ullman at Princeton or visiting researchers there). XP1 (1980) David Maier (Ed.): XP1 Workshop on Relational Database Theory, 30 June - 2 July 1980, SUNY at Stony Brook, NY, USA. XP2 (1981) XP2 Workshop on Relational Database Theory, June 22-24 1981, The Pennsylvania State University, PA, USA. XP4.5 (1983) XP4.5 Workshop on Database Theory, 1983 Palo Alto, California, USA. XP7.52 (1986) Henry F. Korth (Ed.): XP / 7.52 Workshop on Database Theory, University of Texas at Austin, TX, USA, August 13-15, 1986. XP1 Workshop on Database Theory • Barry E. -

Chicago Journal of Theoretical Computer Science, The MIT Press

Chicago Journal of Theoretical Computer Science The MIT Press Volume 1996, Article 4 13 November 1996 ISSN 1073–0486. MIT Press Journals, 55 Hayward St., Cambridge, MA 02142 USA; (617)253-2889; [email protected], [email protected]. Published one article at a time in LaTeX source form on the Internet. Pagination varies from copy to copy. For more information and other articles see: http://www-mitpress.mit.edu/jrnls-catalog/chicago.html • http://www.cs.uchicago.edu/publications/cjtcs/ • gopher.mit.edu • gopher.cs.uchicago.edu • anonymous ftp at mitpress.mit.edu • anonymous ftp at cs.uchicago.edu • Buntrock and Niemann Weakly Growing CSGs (Info) The Chicago Journal of Theoretical Computer Science is abstracted or indexed in Research Alert®, SciSearch®, Current Contents®/Engineering Computing & Technology, and CompuMath Citation Index®. © 1996 The Massachusetts Institute of Technology. Subscribers are licensed to use journal articles in a variety of ways, limited only as required to insure fair attribution to authors and the journal, and to prohibit use in a competing commercial product. See the journal’s World Wide Web site for further details. Address inquiries to the Subsidiary Rights Manager, MIT Press Journals; (617)253-2864; [email protected]. The Chicago Journal of Theoretical Computer Science is a peer-reviewed scholarly journal in theoretical computer science. The journal is committed to providing a forum for significant results on theoretical aspects of all topics in computer science. Editor in chief: Janos -

On Completing Tables to Satisfy Functional Dependencies

Theoretical Computer Science 39 (1985) 309-317 North-Holland ON COMPLETING TABLES TO SATISFY FUNCTIONAL DEPENDENCIES Seymour GINSBURG* Department of Computer Science, University of Southern California, Los Angeles, CA 90089, U.S.A. Edwin H. SPANIER Department of Mathematics, University of California at Berkeley, Berkeley, CA 94720, U.S.A. Communicated by M. Nivat Received May 1984 Abstract. Several sufficiency conditions on a given set of functional dependencies are presented to ensure that a partially-specified table can be extended to a completely-specified table satisfying the dependencies. In particular, each table over a minimal key can be extended to such a table (over the entire attribute set). Introduction When dealing with partially-specified tables in relational database theory, it is customarily assumed that all values are specified over a given minimal key [1]. On the other hand, the meaning of a functional dependency X → Y is that the values for Y are a function of the values for X. The impetus for the present research came from these two observations and the question: "Let (U, F) be a functional dependency schema. If T is a table defined only over a minimal key, can T be extended to be an instance (= completely-specified table) over U satisfying F?" In this paper we resolve that question affirmatively (Corollary 2.5 to Theorem 2.4). We also obtain some sufficiency conditions on a given set of functional dependencies for the more general problem of when a partially-specified table can be extended to be an instance satisfying the dependencies. While there are several papers in the literature dealing with the extension of a partially-specified table to a completely-specified one [3, 4, 5, 6], they only involve algorithms based on the chase procedure and do not consider general sufficiency conditions. -

Not Only Theory

Hermann Maurer Professor Maurer was born in 1941 in Vienna, Austria. He received a Ph.D. in Mathematics from the University of Vienna in 1965. Assistant and Associate Professor for Computer Science at the University of Calgary and Professor for Applied Computer Science at the University of Karlsruhe, West Germany. Since 1978 Full Professor at the Graz University of Technology. Honorary Adjunct Professor at the University of Auckland, New Zealand since October 1993. Honorary Doctorate Polytechnical University of St. Petersburg 1992, Foreign Member of the Finnish Academy of Sciences 1996. Author of thirteen books, over 400 scientific contributions, and dozens of multimedia products. Editor-in-Chief of the journals J.UCS and J.NCA. Chairperson of steering committee of WebNet and ED-MEDIA Conference series. Project manager of a number of multimillion-dollar undertakings including the development of a colour-graphic microcomputer, a distributed CAI-system, multi-media projects such as "Images of Austria" Expo'92 and Expo'93, responsible for the development of the first second generation Web system Hyper-G, now HyperWave, and various electronic publishing projects such as the "PC Library", "Geothek" and "J.UCS" and participation in a number of EU projects e.g., LIBERATION. Professor Maurer's research and project areas include: networked multimedia/hypermedia systems HyperWave; electronic publishing and applications to university life, exhibitions and museums, Web based learning environments; languages and their applications, data structures and their efficient use, telematic services, computer networks, computer assisted instruction, computer supported new media, and social implications of computers. -

A Complete Bibliography of International Conferences on Very Large Data Bases

A Complete Bibliography of International Conferences on Very Large Data Bases Nelson H. F. Beebe University of Utah Department of Mathematics, 110 LCB 155 S 1400 E RM 233 Salt Lake City, UT 84112-0090 USA Tel: +1 801 581 5254 FAX: +1 801 581 4148 E-mail: [email protected], [email protected], [email protected] (Internet) WWW URL: http://www.math.utah.edu/~beebe/ 06 November 2012 Version 2.10