Hand Gesture and Mathematics Learning: Lessons from an Avatar

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

COVID-19 Vaccine News & Info

September 27, 2021 COVID-19 Vaccine News & Infoi TIMELY UPDATES • New York State launched an outreach and implementation plan to ensure the availability and accessibility of booster doses statewide on Monday, September 27, 2021. The plan also includes a new dedicated website: NY.gov/Boosters • The director of the Centers for Disease Control and Prevention on Friday, September 24, 2021 reversed a recommendation by an agency advisory panel that did not endorse booster shots of the Pfizer-BioNTech Covid vaccine for frontline and essential workers. Occupational risk of exposure will now be part of the consideration for the administration of boosters, which is consistent with the FDA determination. See: CDC Statement CDC recommends: o people 65 years and older and residents in long-term care settings should receive a booster shot of Pfizer-BioNTech’s COVID-19 vaccine at least 6 months after their Pfizer-BioNTech primary series, o people aged 50–64 years with underlying medical conditions should receive a booster shot of Pfizer-BioNTech’s COVID-19 vaccine at least 6 months after their Pfizer-BioNTech primary series, o people aged 18–49 years with underlying medical conditions may receive a booster shot of Pfizer-BioNTech’s COVID-19 vaccine at least 6 months after their Pfizer-BioNTech primary series, based on their individual benefits and risks, and o people aged 18-64 years who are at increased risk for COVID-19 exposure and transmission because of occupational or institutional setting may receive a booster shot of Pfizer-BioNTech’s COVID-19 vaccine at least 6 months after their Pfizer-BioNTech primary series, based on their individual benefits and risks. -

The Fairview Town Crier SEPTEMBER 2021 VOL

YOUR NONPROFIT, COMMUNITY NEWSPAPER SINCE 1997 The Fairview Town Crier SEPTEMBER 2021 VOL. 25, No. 9 | FAIRVIEW, NC | fairviewtowncrier.com INSIDE Treasured Trees p8 // Learn the Bear Necessities p10-11 // History and Romance in Fairview p16-17 FAA Play Ball! Crier Scavenger Hunt From February to June each year, the fi elds Solve the clues, accomplish the tasks and submit your answers and behind Fairview Elementary School are usually photos to [email protected]. e highest score abuzz with activity. In 2020, the youth baseball wins a $25 gi card and exceptional bragging rights—and and softball leagues abruptly ended after only maybe your picture in the next issue. (A drawing will be a handful of practices due to COVID-19 con- held in case of a tie.) Deadline to enter is September 16. cerns, and area ballplayers had to anxiously 1 Name the state bird of North Carolina (5 points). await the resurgence of their beloved sport the Grab a photo of you and the state bird in the same following year. It was wonderful news to parents shot and earn 20 bonus points. and children alike when the 2021 season was 2 is little piggie went to market, this little piggie stayed announced and practice resumed. The Fairview home…and this little piggie went ying. Find the sky-high Athletic Association is run 100% by volunteers, swine and send a photo of yourself standing below, pointing in the same direction as the arrow (20 points). and among the most dedicated are husband and wife Travis and McKayla Spivey (pictured above), who graciously donated six to seven days per week 3 Name the oldest known building in Buncombe County to ensure our area youth could return to sports. -

The Fairview Town Crier the Fairview

YOUR NONPROFIT, COMMUNITY NEWSPAPER SINCE 1997 The Fairview Town Crier MARCH 2021 VOL. 25, No. 3 | FAIRVIEW, NC | fairviewtowncrier.com INSIDE Local Takeout Options p11 Backyard Birding Guide p16-17 // WastePro Trash/Recycle Schedule p30 Helping the Helpers COVID-19 Update At the end of last month, Governor Cooper announced the easing of some COVID-related restrictions due to improving numbers and the vaccine rollout: • ! e 10 pm curfew for businesses and people has been li# ed. • Indoor areas of bars may reopen at 30% capacity, and alcohol can be sold until 11 pm. Bars and breweries can sell • Many indoor businesses can operate at 50% alcohol until 11 pm. capacity. • Movie theaters and sports arenas can operate at 30% capacity. • ! e limit for indoor mass gatherings has been increased to 25 people. A vaccine shot is now available for those who want it and are eligible. Group 3 Rich Mueller (right), Tyler Hembree (left) and Lume Beddingfi eld helped to fence in some Great essential workers, including child care and Pre–K-12 school workers, should now Pyrenees. (photo: My Fairview NC Community Facebook group) be eligible. For more information on COVID-19 in Buncombe County, go to buncombecounty.org/covid-19 or call 419-0095. e are so fortunate to live in a to cover the costs. And people have community that helps our reached out to donate their time or with W neighbors, and that gets things o$ ers of materials to donate. done. Some donate money or materials. It has been ful" lling for him, too. -

2019 Annual Report

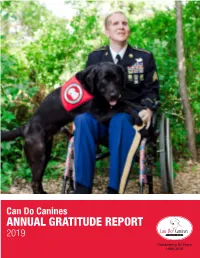

Can Do Canines ANNUAL GRATITUDE REPORT 2019 ® Celebrating 30 Years 1989-2019 A Letter From Can Do Canines EXECUTIVE DIRECTOR ALAN PETERS Mission Annual Gratitude Report 2019 Can Do Canines is dedicated to & BOARD CHAIR MITCH PETERSON enhancing the quality of life for 3 A Letter From the Executive Director and Board Chair people with disabilities by creating 4 At a Glance Infographic Dear Friends, mutually beneficial partnerships 5 Client Stories with specially trained dogs. We are pleased to share this report of Can Do Canines’ accomplishments 8 Graduate Teams during 2019. Throughout these pages you’ll see our mission come to life through the stories and words of our graduates. You’ll witness the many 11 The Numbers people who have helped make Can Do Canines possible. And we hope you’ll Celebrating 12 Volunteers be inspired to continue your support. Vision 30 Years We envision a future in which every 16 Contributors We celebrated our 30th anniversary during 2019! Thirty years of service 1989-2019 to the community is a significant accomplishment. An anniversary video person who needs and wants an 25 Legacy Club Alan M. Peters and special logo were created and shared at events and in publications Executive Director assistance dog can have one. 26 Donor Policy throughout the year. Our 30th Anniversary Gala was made extra special by inviting two-time Emmy award winner Louie Anderson to entertain us. And enclosed with this report is the final part of the celebration: our booklet celebrating highlights of those 30 years. Values Those Who Served on the Most importantly, we celebrated our mission through action. -

Greater Philadelphia Future Forces Summary

Greater Philadelphia Technical Report CONNECTIONS The Delaware Valley Regional Planning Commission is dedicated to uniting the region’s elected officials, planning professionals, and the public with a common vision of making a great region even greater. Shaping the way we live, work, and play, DVRPC builds consensus on improving transportation, promoting smart growth, protecting the environment, and enhancing the economy. We serve a diverse region of nine counties: Bucks, Chester, Delaware, Montgomery, and Philadelphia in Pennsylvania; and Burlington, Camden, Gloucester, and Mercer in New Jersey. DVRPC is the federally designated Metropolitan Planning Organization for the Greater Philadelphia Region — leading the way to a better future. The symbol in our logo is adapted from the official DVRPC seal and is designed as a stylized image of the Delaware Valley. The outer ring symbolizes the region as a whole while the diagonal bar signifies the Delaware River. The two adjoining crescents represent the Commonwealth of Pennsylvania and the State of New Jersey. DVRPC is funded by a variety of funding sources including federal grants from the U.S. Department of Transportation’s Federal Highway Administration (FHWA) and Federal Transit Administration (FTA), the Pennsylvania and New Jersey departments of transportation, as well as by DVRPC’s state and local member governments. The authors, however, are solely responsible for the findings and conclusions herein, which may not represent the official views or policies of the funding agencies. The Delaware Valley Regional Planning Commission (DVRPC) fully complies with Title VI of the Civil Rights Act of 1964, the Civil Rights Restoration Act of 1987, Executive Order 12898 on Environmental Justice, and related nondiscrimination statutes and regulations in all programs and activities. -

Additional Ways to Graduate Quick Reference Guide

䄀搀搀椀琀椀漀渀愀氀 圀愀礀猀 吀漀 䜀爀愀搀甀愀琀攀 䠀椀最栀 匀挀栀漀漀氀 䐀椀瀀氀漀洀愀 ☀ 䔀焀甀椀瘀愀氀攀渀挀礀 倀爀漀最爀愀洀猀 ㈀ 㜀ⴀ㈀ 㠀 ADDITIONAL WAYS TO GRADUATE QUICK REFERENCE GUIDE YOUR OPTIONS: . Stay at your school. Depending on your age and school history (credit accumulation and Regents examinations) – staying in or returning to – your home school may be the best option for you. Enroll in a school or program that can help you get back on track to graduation. If staying in current school is not the best option, the schools and programs in this directory might be right for you. They include smaller classes, personalized learning environments, and connections to college and careers. General admissions criteria for schools and programs in this directory are listed below: TRANSFER SCHOOLS YOUNG ADULT BOROUGH CENTERS HIGH SCHOOL EQUIVALENCY . Ages 16-21 (varies by school) (YABCS) PROGRAMS . Must have completed one . Ages 17.5-21 . Ages 18-21 year of high school . Be in the fifth year of high . Attend a full-time or part-time . Number of credits required for school program entry varies by school but . Have, at least, 17 credits . Earn a high school equivalency could be as low as 0 credits . Part-time afternoon/evening diploma (formerly known as a . Full time, day school programs GED®) . Earn a high school diploma . Earn a high school diploma LEARNING TO WORK Many Transfer Schools and Young Adult Borough Centers are supported by the Learning to Work (LTW) initiative. LTW assists students overcome obstacles that impede their progress toward a high school diploma and leads them toward rewarding employment and educational experiences after graduation. -

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20

1 ROBBINS ARROYO LLP BRIAN J. ROBBINS (SBN 190264) 2 [email protected] KEVIN A. SEELY (SBN 199982) 3 [email protected] MICHAEL J. NICOUD (SBN 272705) 4 [email protected] 600 B Street, Suite 1900 5 San Diego, CA 92101 Telephone: (619) 525-3990 6 Facsimile: (619) 525-3991 7 THEGRANTLAWFIRM, PLLC LYNDA J. GRANT (admitted pro hac vice) 8 [email protected] 521 Fifth Ave, 17th Floor 9 New York, NY 10175 Telephone: (212) 292-4441 10 Facsimile: (212) 292-4442 11 Co-Lead Counsel for Plaintiffs 12 13 SUPERIOR COURT OF THE STATE OF CALIFORNIA 14 COUNTY OF SANTA CLARA 15 IN RE APPLE INC. E-BOOK Lead Case No. 1-14-CV-269543 DERIVATIVE LITIGATION (Consolidated with Case Nos. 16 1-14-CV-270239 and 1-15-CV-277705) 17 AMENDED NOTICE OF PROPOSED SETTLEMENT AND SETTLEMENT 18 HEARING 19 Judge: Hon. Brian C. Walsh Dept.: 1 (Complex Civil Litigation) 20 Date Initial Action Filed: August 15, 2014 21 22 23 24 25 26 27 28 1 TO: ALL CURRENT RECORD HOLDERS AND BENEFICIAL OWNERS OF THE COMMON STOCK OF APPLE INC. (“APPLE” OR THE “COMPANY”) AS OF FEBRUARY 2 16, 2018 (“CURRENT APPLE SHAREHOLDERS”). 3 THIS NOTICE RELATES TO THE PENDENCY AND PROPOSED SETTLEMENT OF SHAREHOLDER DERIVATIVE LITIGATION AND CONTAINS IMPORTANT 4 INFORMATION ABOUT YOUR RIGHTS. PLEASE READ THIS NOTICE CAREFULLY AND IN ITS ENTIRETY. THIS IS NOT A “CLASS ACTION.” THUS, THERE IS NO 5 COMMON FUND UPON WHICH YOU CAN MAKE A CLAIM FOR A MONETARY PAYMENT. 6 IF YOU HOLD APPLE COMMON STOCK FOR THE BENEFIT OF ANOTHER, 7 PLEASE PROMPTLY TRANSMIT THIS DOCUMENT TO SUCH BENEFICIAL OWNER. -

Apple Inc. Report of the Special Litigation Committee

APPLE INC. REPORT OF THE SPECIAL LITIGATION COMMITTEE OF THE BOARD OF DIRECTORS REGARDING MR. EDUARDO CUE JANUARY 19, 2017 TABLE OF CONTENTS Page I. EXECUTIVE SUMMARY ............................................................................................... 1 II. BACKGROUND OF APPLE INC. ................................................................................... 5 III. APPLE’S ENTRY INTO THE EBOOKS MARKET AND THE TRANSACTIONS THAT GAVE RISE TO THE ANTITRUST JUDGMENT ............... 6 A. Background Of The Ebook Industry ...................................................................... 6 B. Apple’s Initial Negotiations With Publishers ........................................................ 7 C. The Written Publisher Agreements ...................................................................... 11 D. The Launch of the iPad and iBookstore ............................................................... 18 IV. THE ANTITRUST JUDGMENT AND SETTLEMENT ................................................ 19 A. Antitrust Judgment ............................................................................................... 19 B. Monetary Settlement ............................................................................................ 22 C. Appellate Proceedings ......................................................................................... 22 V. THE MONITOR .............................................................................................................. 23 VI. THE DERIVATIVE ACTION ....................................................................................... -

BULLETIN March 2021, Adar – Nisan 5781

Beth Israel – The West Temple BULLETIN March 2021, Adar – Nisan 5781 Our Mission from the Rabbi's Desk... To be a center of worship and 15 Steps to Hope vital community life where Jews and their families from It’s the month of March. The month of spring. Cleveland’s western Time for spring cleaning. Time to clean out our communities learn Jewish cupboards. And time to get ready for Passover! traditions and values, develop We can use the 15 steps of the Passover their Jewish identity, and seder as part of our personal preparation assure the continuity of process, especially in this continuing time of Jewish life. COVID. Our seder can be the ultimate antidote to despair as we move from sadness to joy, from desperation to hope. Let’s take a look… Features 1. Kadesh – reciting Kiddush, designating the time as Rabbi's Desk..................... 1-2 sacred. Set aside time to allow for real listening, March & Passover introspection, and growth. Programming ....................2-3 2. Urchatz – washing the hands. Set aside time for reflecting 21st-century Renovations .....4 on that which burdens you and how you can let it go. BI-TWT Supporting Statement for the Historical Marker ......5 3. Karpas – eating a vegetable dipped in salt water. How has sadness (the salty tears) over this past year tempered What Was It Like?.............6-8 our growth? We eat a green vegetable—a sign of spring, of By the Book ....................9-10 new growth. How does that reflect us as we come to bloom Educator's Desk & Virtual after the winter? Retreat Pictures .............11-12 4. -

Amerimuncvi BG Apple.Pdf

© 2018 American University Model United Nations Conference All rights reserved. No part of this background guide may be reproduced or transmitted in any form or by any means whatsoever without express written permission from the American University Model United Nations Conference Secretariat. Please direct all questions to [email protected] Pia Paul Chair Dear Delegates, Welcome to the Apple Board of Directors’ committee! I am so ridiculously excited to take part in this experience with all of you! The main mission of our committee is to improve Apple’s image to the general public whilst making sure that as part of the Board of Directors, you are maximizing profits for Apple. Our committee will focus on key issues that Apple faces such as privacy, corporate social responsibility, and treatment of workers. I hope that all of you have a great time in committee and acquire an even greater understanding of crisis procedure when dealing with a company such as Apple. Please keep in mind that in this crisis committee; there is so much space for all of you to move committee in a certain way with dynamic, and two dimensional characters. The possibilities are limitless! I am currently a freshman and this will be my first time chairing for AmeriMUNC and I can’t wait to see the creativity and different character arcs you all bring. I participated in Model United Nations all throughout high school- starting in general assembly committees and then moving onto crisis committees (which were my favorite). It was being in Model United Nations that fostered my interest in international relations and consequently, I am majoring in international relations and minoring in economics at American University. -

Spring 2021 TE TA UN S E ST TH at I F E V a O O E L F a DITAT DEUS

Commencement 2021 Spring 2021 TE TA UN S E ST TH AT I F E V A O O E L F A DITAT DEUS N A E R R S I O Z T S O A N Z E I A R I T G R Y A 1912 1885 ARIZONA STATE UNIVERSITY COMMENCEMENT AND CONVOCATION PROGRAM Spring 2021 May 3, 2021 THE NATIONAL ANTHEM CONTENTS THE STAR-SPANGLED BANNER The National Anthem and O say can you see, by the dawn’s early light, Arizona State University Alma Mater ................................. 2 What so proudly we hailed at the twilight’s last gleaming? Whose broad stripes and bright stars through the perilous fight Letter of Congratulations from the Arizona Board of Regents ............... 5 O’er the ramparts we watched, were so gallantly streaming? History of Honorary Degrees .............................................. 6 And the rockets’ red glare, the bombs bursting in air Gave proof through the night that our flag was still there. Past Honorary Degree Recipients .......................................... 6 O say does that Star-Spangled Banner yet wave Conferring of Doctoral Degrees ............................................ 9 O’er the land of the free and the home of the brave? Sandra Day O’Connor College of Law Convocation ....................... 29 ALMA MATER Conferring of Masters Degrees ............................................ 36 ARIZONA STATE UNIVERSITY Craig and Barbara Barrett Honors College ................................102 Where the bold saguaros Moeur Award ............................................................137 Raise their arms on high, Praying strength for brave tomorrows Graduation with Academic Recognition ..................................157 From the western sky; Summa Cum Laude, 157 Where eternal mountains Magna Cum Laude, 175 Kneel at sunset’s gate, Cum Laude, 186 Here we hail thee, Alma Mater, Arizona State. -

Published by the Assistive Technology Industry Association (ATIA) Implementing at in Practice: New Technologies and Techniques

Published by the Assistive Technology Industry Association (ATIA) Volume 12 Summer 2018 Implementing AT in Practice: New Technologies and Techniques Jennifer L. Flagg Editor-in-Chief Kate Herndon & Carolyn P. Phillips Associate Editors Volume 12, Summer 2018 Copyright Ó 2018 Assistive Technology Industry Association ISSN 1938-7261 Assistive Technology Outcomes and Benefits | i Implementing AT in Practice: New Technologies and Techniques Volume 12, Summer 2018 Assistive Technology Outcomes and Benefits Implementing AT in Practice: New Technologies and Techniques Volume 12, Summer 2018 Editor-in-Chief JenniFer L. Flagg Center on KT4TT & TCIE, University at Buffalo Publication Managers Associate Editors Victoria A. Holder Kate Herndon Tools For LiFe, Georgia Institute oF Technology American Printing House For the Blind Elizabeth A. Persaud Carolyn P. Phillips Tools For LiFe, Georgia Institute oF Technology Tools For LiFe, Georgia Institute oF Technology Caroline Van Howe Copy Editor Assistive Technology Industry Association Beverly Nau Assistive Technology Outcomes and BeneFits is a collaborative peer-reviewed publication of the Assistive Technology Industry Association (ATIA). Editing policies oF this issue are based on the Publication Manual oF the American Psychological Association (6th edition) and may be Found online at www.atia.org/atob/editorialpolicy. The content herein does not reflect the position or policy of ATIA and no ofFicial endorsement should be inferred. Editorial Board Members and Managing Editors David Banes Beth Poss