Case Studies in Bayesian Statistics Workshop 9 Poster Session


Recommended publications


CHRISTINA “TINA” WARINNER (Last Updated October 18, 2018)

CHRISTINA “TINA” WARINNER (last updated October 18, 2018) Max Planck Institute University of Oklahoma for the Science of Human History (MPI-SHH) Department of Anthropology Department of Archaeogenetics Laboratories of Molecular Anthropology Kahlaische Strasse 10, 07743 Jena, Germany And Microbiome Research (LMAMR) +49 3641686620 101 David L. Boren Blvd, [email protected] Norman, OK 73019 USA www.christinawarinner.com [email protected] http://www.shh.mpg.de/employees/50506/25522 www.lmamr.org APPOINTMENTS W2 Group Leader, Max Planck Institute for the Science of Human History, Germany 2016-present University Professor, Friedrich Schiller University, Jena, Germany 2018-present Presidential Research Professor, Univ. of Oklahoma, USA 2014-present Assistant Professor, Dept. of Anthropology, Univ. of Oklahoma, USA 2014-present Adjunct Professor, Dept. of Periodontics, College of Dentistry, Univ. of Oklahoma, USA 2014-present Visiting Associate Professor, Dept. of Systems Biology, Technical University of Denmark 2015 Research Associate, Dept. of Anthropology, Univ. of Oklahoma, USA 2012-2014 Acting Head of Group, Centre for Evolutionary Medicine, Univ. of Zürich, Switzerland 2011-2012 Research Assistant, Centre for Evolutionary Medicine, Univ. of Zürich, Switzerland 2010-2011 EDUCATION Ph.D., Anthropology, Dept. of Anthropology, Harvard University 2010 Thesis Title: “Life and Death at Teposcolula Yucundaa: Mortuary, Archaeogenetic, and Isotopic Investigations of the Early Colonial Period in Mexico” A.M., Anthropology, Dept. of Anthropology, Harvard University 2008 B.A., with Honors, Anthropology, University of Kansas 2003 B.A., Germanic Literatures and Languages, University of Kansas 2003 SELECTED HONORS, AWARDS, AND FELLOWSHIPS Invited speaker, British Academy, Albert Reckitt Archaeological Lecture (forthcoming) 2019 Invited speaker, EMBL Science and Society (forthcoming, Nov. -

Ayshwarya Subramanian Updated June 10, 2021

Ayshwarya Subramanian Updated June 10, 2021 Contact Information Kuchroo Lab and Klarman Cell Observatory, Broad Institute, 5407G, 415 Main Street, Cambridge, MA 02142 USA twitter: @ayshwaryas website: ayshwaryas.github.io email: [email protected] Areas of expertise Computational Biology (Kidney disease, Cancer, Inflammatory Bowel Disease, RNA Biology), Genomic Data Analysis (Single-cell and Bulk-RNAseq, Metagenomics, Exome and single-nucleus DNA sequencing), Machine Learning, Probabilistic modeling, Phylogenetics, Applied Statistics Education 2013 Ph.D., Biological Sciences Carnegie Mellon University, Pittsburgh, PA USA Doctoral Advisor: Russell Schwartz, Ph.D. Dissertation: Inferring tumor evolution using computational phylogenetics 2007 M.Sc. (Hons), Biological Sciences (Undergraduate degree) Birla Institute of Technology and Science (BITS-Pilani), Rajasthan, India CGPA 9.21/10, Major GPA 10/10, with Distinction Undergraduate Honors Thesis: A mathematical model for phototactic responses in Halobacterium salinarium, Max Planck Institute for Complex Technical Systems, Germany. Current Appointment Computational Scientist, Cambridge MA 2017-Present Mentors: Aviv Regev, Ph.D. & Vijay Kuchroo, Ph.D., D.V.M Research Summary: Single-cell portraits of disease and normal states using human data, mouse and organoid models Klarman Cell Observatory Broad Institute, Cambridge, MA 02142 Publications Pre-prints/Under review [1] Subramanian A†, Vernon KA†, Zhou Y† et al. Obesity-instructed TREM2high macrophages identified by comparative analysis of diabetic mouse and human kidney at single cell resolution. bioRxiv 2021.05.30.446342; doi: https://doi.org/10.1101/2021.05.30.446342. [2] Subramanian A†, Vernon KA†, Slyper M, Waldman J, et al. RAAS blockade, kidney disease, and expression of ACE2, the entry receptor for SARS-CoV-2, in kidney epithelial and endothelial cells. -

UNIVERSITY of CALIFORNIA SAN DIEGO Making Sense of Microbial

UNIVERSITY OF CALIFORNIA SAN DIEGO Making sense of microbial populations from representative samples A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Computer Science by James T. Morton Committee in charge: Professor Rob Knight, Chair Professor Pieter Dorrestein Professor Rachel Dutton Professor Yoav Freund Professor Siavash Mirarab 2018 Copyright James T. Morton, 2018 All rights reserved. The dissertation of James T. Morton is approved, and it is acceptable in quality and form for publication on microfilm and electronically: Chair University of California San Diego 2018 DEDICATION To my friends and family who paved the road and lit the journey. EPIGRAPH The ‘paradox’ is only a conflict between reality and your feeling of what reality ‘ought to be’ (Richard Feynman) TABLE OF CONTENTS Signature Page, Dedication, Epigraph, Table of Contents, List of Abbreviations, List of Figures, List of Tables, Acknowledgements, Vita, Abstract of the Dissertation. Chapter 1 Methods for phylogenetic analysis of microbiome data: 1.1 Introduction, 1.2 Phylogenetic Inference, 1.3 Phylogenetic Comparative Methods, 1.4 Ancestral State Reconstruction, 1.5 Analysis of phylogenetic variables, 1.6 Using Phylogeny-Aware Distances, 1.7 Challenges of phylogenetic analysis, 1.8 Discussion, 1.9 Acknowledgements. Chapter 2 Uncovering the horseshoe effect in microbial analyses: 2.1 Introduction, 2.2 Materials and Methods, 2.3 Acknowledgements. Chapter 3 Balance trees reveal microbial niche differentiation: 3.1 Introduction -

Bioinformatics Opening Workshop September 8 - 12, 2014

Bioinformatics Opening Workshop September 8 - 12, 2014 Mihye Ahn James (Paul) Brooks University of North Carolina Virginia Commonwealth Alexander Alekseyenko Greg Buck New York University Virginia Commonwealth Baiguo An Mark Burch University of North Carolina Ohio State University Jeremy Ash Christopher Burke North Carolina State University University of Cincinnati Deepak Nag Ayyala Hongyuan Cao University of Maryland University of Missouri Keith Baggerly Vincent Carey MD Anderson Center Harvard University Veera Baladandayuthapani Luis Carvalho MD Anderson Center Boston University Pallavi Basu Alberto Cassese University of Southern California Rice University Munni Begum Changgee Chang Ball State University Emory University Emanuel Ben-David Hegang Chen Columbia University University of Maryland Anindya Bhadra Chen Chen Purdue University Purdue University Yuanyuan Bian Mengjie Chen University of Missouri University of North Carolina Huybrechts Frazier Achard Bindele Hyoyoung Choo-Wosoba University of South Alabama University of Louisville Kristen Borchert Pankaj Choudhary BD Technologies University of Texas Russell Bowler Hyonho Chun National Jewish Health Purdue University Bioinformatics Opening Workshop September 8 - 12, 2014 Robert Corty Yang Feng University of North Carolina Columbia University Xinping Cui Hua Feng University of California, Riverside University of Idaho Hongying Dai Jennifer Fettweis Children's Mercy Hospital Virginia Commonwealth Susmita Datta Dayne Filer University of Louisville EPA Sujay Datta Christopher Fowler -

Integrating Taxonomic, Functional, and Strain-Level Profiling of Diverse

TOOLS AND RESOURCES Integrating taxonomic, functional, and strain-level profiling of diverse microbial communities with bioBakery 3 Francesco Beghini1†, Lauren J McIver2†, Aitor Blanco-Míguez1, Leonard Dubois1, Francesco Asnicar1, Sagun Maharjan2,3, Ana Mailyan2,3, Paolo Manghi1, Matthias Scholz4, Andrew Maltez Thomas1, Mireia Valles-Colomer1, George Weingart2,3, Yancong Zhang2,3, Moreno Zolfo1, Curtis Huttenhower2,3*, Eric A Franzosa2,3*, Nicola Segata1,5* 1Department CIBIO, University of Trento, Trento, Italy; 2Harvard T.H. Chan School of Public Health, Boston, United States; 3The Broad Institute of MIT and Harvard, Cambridge, United States; 4Department of Food Quality and Nutrition, Research and Innovation Center, Edmund Mach Foundation, San Michele all’Adige, Italy; 5IEO, European Institute of Oncology IRCCS, Milan, Italy Abstract Culture-independent analyses of microbial communities have progressed dramatically in the last decade, particularly due to advances in methods for biological profiling via shotgun metagenomics. Opportunities for improvement continue to accelerate, with greater access to multi-omics, microbial reference genomes, and strain-level diversity. To leverage these, we present bioBakery 3, a set of integrated, improved methods for taxonomic, strain-level, functional, and phylogenetic profiling of metagenomes newly developed to build on the largest set of reference sequences now available. Compared to current alternatives, MetaPhlAn 3 increases the accuracy of taxonomic profiling, and HUMAnN 3 improves that of functional potential and activity. These methods detected novel disease-microbiome links in applications to CRC (1262 metagenomes) and IBD (1635 metagenomes and 817 metatranscriptomes). *For correspondence: [email protected] (CH); [email protected] (EAF); [email protected] (NS) †These authors contributed -

Continuous Chromatin State Feature Annotation of the Human Epigenome

bioRxiv preprint doi: https://doi.org/10.1101/473017; this version posted November 18, 2018. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY 4.0 International license. Continuous chromatin state feature annotation of the human epigenome Bowen Chen, Neda Shokraneh Kenari, Maxwell W Libbrecht∗ School of Computing Science, Simon Fraser University, Burnaby BC, Canada Abstract Semi-automated genome annotation (SAGA) methods are widely used to understand genome activity and gene regulation. These methods take as input a set of sequencing-based assays of epigenomic activity (such as ChIP-seq measurements of histone modification and transcription factor binding), and output an annotation of the genome that assigns a chromatin state label to each genomic position. Existing SAGA methods have several limitations caused by the discrete annotation framework: such annotations cannot easily represent varying strengths of genomic elements, and they cannot easily represent combinatorial elements that simultaneously exhibit multiple types of activity. To remedy these limitations, we propose an annotation strategy that instead outputs a vector of chromatin state features at each position rather than a single discrete label. Continuous modeling is common in other fields, such as in topic modeling of text documents. We propose a method, epigenome-ssm, that uses a Kalman filter state space model to efficiently annotate the genome with chromatin state features. We show that chromatin state features from epigenome-ssm are more useful for several downstream applications than both continuous and discrete alternatives, including their ability to identify expressed genes and enhancers. -

Daniel Aalberts Scott Aa

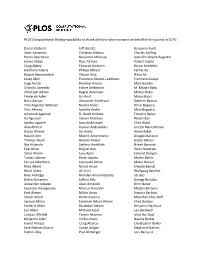

PLOS Computational Biology would like to thank all those who reviewed on behalf of the journal in 2015: Daniel Aalberts Jeff Alstott Benjamin Audit Scott Aaronson Christian Althaus Charles Auffray Henry Abarbanel Benjamin Althouse Jean-Christophe Augustin James Abbas Russ Altman Robert Austin Craig Abbey Eduardo Altmann Bruno Averbeck Hermann Aberle Philipp Altrock Ferhat Ay Robert Abramovitch Vikram Alva Nihat Ay Josep Abril Francisco Alvarez-Leefmans Francisco Azuaje Luigi Acerbi Rommie Amaro Marc Baaden Orlando Acevedo Ettore Ambrosini M. Madan Babu Christoph Adami Bagrat Amirikian Mohan Babu Frederick Adler Uri Amit Marco Bacci Boris Adryan Alexander Anderson Stephen Baccus Tinri Aegerter-Wilmsen Noemi Andor Omar Bagasra Vera Afreixo Isabelle Andre Marc Baguelin Ashutosh Agarwal R. David Andrew Timothy Bailey Ira Agrawal Steven Andrews Wyeth Bair Jacobo Aguirre Ioan Andricioaei Chris Bakal Alaa Ahmed Ioannis Androulakis Joseph Bak-Coleman Hasan Ahmed Iris Antes Adam Baker Natalie Ahn Maciek Antoniewicz Douglas Bakkum Thomas Akam Haroon Anwar Gabor Balazsi Ilya Akberdin Stefano Anzellotti Nilesh Banavali Eyal Akiva Miguel Aon Rahul Banerjee Sahar Akram Lucy Aplin Edward Banigan Tomas Alarcon Kevin Aquino Martin Banks Larissa Albantakis Leonardo Arbiza Mukul Bansal Reka Albert Murat Arcak Shweta Bansal Martí Aldea Gil Ariel Wolfgang Banzhaf Bree Aldridge Nimalan Arinaminpathy Lei Bao Helen Alexander Jeffrey Arle Gyorgy Barabas Alexander Alexeev Alain Arneodo Omri Barak Leonidas Alexopoulos Markus Arnoldini Matteo Barberis Emil Alexov -

Roles of the Mathematical Sciences in Bioinformatics Education

Roles of the Mathematical Sciences in Bioinformatics Education Curtis Huttenhower 09-09-14 Harvard School of Public Health Department of Biostatistics U. Oregon Who am I, and why am I here? • Assoc. Prof. of Computational Biology in Biostatistics dept. at Harvard Sch. of Public Health – Ph.D. in Computer Science – B.S. in CS, Math, and Chemistry • My teaching focuses on graduate level: – Survey courses in bioinformatics for biologists – Applied training in systems for research computing – Reproducibility and best practices for “computational experiments” HSPH BIO508: Genomic Data Manipulation http://huttenhower.sph.harvard.edu/bio508 Teaching quantitative methods to biologists: challenges • Biologists aren’t interested in stats as taught to biostatisticians – And they’re right! – Biostatisticians don’t usually like biology, either – Two ramifications: excitement gap and pedagogy • Differences in research culture matter – Hands-on vs. coursework – Lab groups – Rotations – Length of Ph.D. – … • There are few/no textbooks that are comprehensive, recent, and audience-appropriate – Choose any two of three Teaching quantitative methods to biologists: (some of the) solutions • Teach intuitions – Biologists will never need to prove anything about a t-distribution – They will benefit minimally from looking up when to use a t-test – They will benefit a lot from seeing when a t-test lies (or doesn’t) • Teach applications – Problems/projects must be interactive – IPython Notebook / RStudio / etc. are invaluable • Teach writing • Demonstrate -

Curtis Huttenhower Associate Professor of Computational Biology

Curtis Huttenhower Associate Professor of Computational Biology and Bioinformatics Department of Biostatistics, Chan School of Public Health, Harvard University 655 Huntington Avenue • Boston, MA 02115 • 617-432-4912 • [email protected] Academic Appointments July 2009 - Present Department of Biostatistics, Harvard T.H. Chan School of Public Health April 2013 - Present Associate Professor of Computational Biology and Bioinformatics July 2009 - March 2013 Assistant Professor of Computational Biology and Bioinformatics Education November 2008 - June 2009 Lewis-Sigler Institute for Integrative Genomics, Princeton University Supervisor: Dr. Olga Troyanskaya Postdoctoral Researcher August 2004 - November 2008 Computer Science Department, Princeton University Adviser: Dr. Olga Troyanskaya Ph.D. in Computer Science, November 2008; M.A., June 2006 August 2002 - May 2004 Language Technologies Institute, Carnegie Mellon University Adviser: Dr. Eric Nyberg M.S. in Language Technologies, December 2003 August 1998 - November 2000 Rose-Hulman Institute of Technology B.S. summa cum laude, November 2000 Majored in Computer Science, Chemistry, and Math; Minored in Spanish August 1996 - May 1998 Simon's Rock College of Bard A.A., May 1998 Awards, Honors, and Scholarships • ISCB Overton Prize (Harvard Chan School, 2015) • eLife Sponsored Presentation Series early career award (Harvard Chan School, 2014) • Presidential Early Career Award for Scientists and Engineers (Harvard Chan School, 2012) • NSF CAREER award (Harvard Chan School, 2010) • Quantitative -

BMC Genomics BioMed Central

BMC Genomics BioMed Central Research article Open Access Finding function: evaluation methods for functional genomic data Chad L Myers1,2, Daniel R Barrett1,2, Matthew A Hibbs1,2, Curtis Huttenhower1,2 and Olga G Troyanskaya*1,2 Address: 1Department of Computer Science, Princeton University, Princeton, NJ 08544, USA and 2Lewis-Sigler Institute for Integrative Genomics, Princeton University, Princeton NJ, 08544, USA Email: Chad L Myers - [email protected]; Daniel R Barrett - [email protected]; Matthew A Hibbs - [email protected]; Curtis Huttenhower - [email protected]; Olga G Troyanskaya* - [email protected] * Corresponding author Published: 25 July 2006 Received: 10 May 2006 Accepted: 25 July 2006 BMC Genomics 2006, 7:187 doi:10.1186/1471-2164-7-187 This article is available from: http://www.biomedcentral.com/1471-2164/7/187 © 2006 Myers et al; licensee BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. Abstract Background: Accurate evaluation of the quality of genomic or proteomic data and computational methods is vital to our ability to use them for formulating novel biological hypotheses and directing further experiments. There is currently no standard approach to evaluation in functional genomics. Our analysis of existing approaches shows that they are inconsistent and contain substantial functional biases that render the resulting evaluations misleading both quantitatively and qualitatively. These problems make it essentially impossible to compare computational methods or large-scale experimental datasets and also result in conclusions that generalize poorly in most biological applications. -

ISMB/ECCB 2019 Proceedings Papers Committee

Bioinformatics, 35, 2019, i3 doi: 10.1093/bioinformatics/btz494 ISMB/ECCB 2019 PROCEEDINGS PAPERS COMMITTEE PROCEEDINGS COMMITTEE Systems Biology and Networks Sushmita Roy, University of Wisconsin, US PROCEEDINGS CO-CHAIRS Roded Sharan, Tel-Aviv University, Israel Yana Bromberg, Rutgers University, US General Computational Biology Nadia El-Mabrouk, Université de Montréal, Canada Olga Vitek, Northeastern University, US Predrag Radivojac, Northeastern University, US Daisuke Kihara, Purdue University, US Area Chairs: Bioinformatics Education Anne Rosenwald, Georgetown University, US Bioinformatics of Microbes and Microbiomes ISMB/ECCB 2019 STEERING COMMITTEE Curtis Huttenhower, Harvard University, US Yuzhen Ye, Indiana University, US Yana Bromberg, Proceedings Co-chair, Rutgers University, US Comparative and Functional Genomics Nadia El-Mabrouk, Proceedings Co-chair, Université de Montréal, Can Alkan, Bilkent University, Turkey Canada Carl Kingsford, Carnegie Mellon University, US Bruno Gaeta, Treasurer, University of New South Wales, Australia Genome Privacy and Security Janet Kelso, Conference Advisory Council Co-chair, Max Planck Haixu Tang, Indiana University, US Institute for Evolutionary Anthropology, Germany Genomic Variation Analysis Diane E. Kovats, ISCB Executive Director, US Martin Kircher, Berlin Institute of Health, Germany Steven Leard, ISMB Conference Director, Canada Sriram Sankararaman, UCLA, US Thomas -

Changes in the Composition and Function of the Gut Microbiome Accompany Type 1 Diabetes in Pregnancy

Changes in the composition and function of the gut microbiome accompany type 1 diabetes in pregnancy Alexandra J. Roth-Schulze Walter and Eliza Hall Institute of Medical Research Bioinformatics Division Megan A. S. Penno The University of Adelaide Robinson Research Institute Katrina M. Ngui Walter and Eliza Hall Institute of Medical Research Helena Oakey School of agriculture & wine and waite research institute, University of Adelaide Rebecca L. Thomson The University of Adelaide Robinson Research Institute Peter J. Vuillermin Faculty of School of Medicine, Deakin University and Child Health Unit, Barwon Health Geelong Maria E Craig School of women's and children's health, faculty of medicine, University of New South Wales, Sydney, NSW William D Rawlinson Virology research laboratory, Serology and virology division, South eastern area, Laboratory services microbiology, Prince of Wales hospital, Sydney, NSW Elizabeth A Davis Telethon Institute for Child health research, Center for child health research, University of Western Australia, Perth Mark Harris The University of Queensland Diamantina Institute Georgia Soldatos Monash centre for health research and implementation, School of public health and preventive medicine, Monash University, Melbourne and diabetes and vascular medicine unit, Monash Health, Melbourne, VIC 3168 Peter G Colman Department of diabetes and endocrinology, Royal Melbourne Hospital, Melbourne, VIC. 3050 John M Wentworth Walter and Eliza Hall Institute of Medical Research, Melbourne, VIC, 3052 Aveni Haynes Western Australia Department of Health Grant Morahan Centre for diabetes research, Harry Perkins Institute of Medical research, The University of Western Australia, Perth, WA Naiara G. Bediaga Walter and Eliza Hall Institute of Medical Research Gordon K Smyth Walter and Eliza Hall Institute of Medical Research Bioinformatics Division Anthony T.