Signature Redacted Author

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


India's Take on Sports Analytics

PSYCHOLOGY AND EDUCATION (2020) 57(9): 5817-5827 ISSN: 00333077 India’s Take on Sports Analytics Rohan Mehta1, Dr. Shilpa Parkhi2 Student, Symbiosis Institute of Operations Management, Nashik, India Deputy Director, Symbiosis Institute of Operations Management, Nashik, India Email Id: [email protected] ABSTRACT Purpose – The aim of this paper is to study what sports analytics is, what the different roles in this field are, which sports use it prominently, how big data has affected the field, and how the field is shaping up in the Indian context. The paper also studies the growth of job opportunities in this field, how B-schools are responding, and what B-school graduates are interested in and expect from this sector. Keywords Sports analytics, Sabermetrics, Moneyball, Technologies, Team sports, IOT, Cloud Article Received: 10 August 2020, Revised: 25 October 2020, Accepted: 18 November 2020 Design Approach The paper starts by explaining the origin of sports analytics in its most naïve form, then moves on to its evolution over the years (from the emergence of sabermetrics to the most advanced applications), how it has spread across different sports and how its applications have increased with the advent of different [...] analysis, he had done on approximately 10000 deliveries. Another writer for one of the US magazines, F.C Lane, was of the opinion that an individual’s batting average doesn’t reflect the complete picture of that individual’s performance. There were other significant efforts by statisticians and writers such as George Lindsey, Allan Roth and Earnshaw Cook until 1969.

Topps - UEFA Champions League Match Attax 2015/16 (08) - Checklist

Topps - UEFA Champions League Match Attax 2015/16 (08) - Checklist 2015-16 UEFA Champions League Match Attax 2015/16 Topps 562 cards Here is the complete checklist. The total of 562 cards includes the 32 Pro11 cards and the 32 Match Attax Live code cards. So that's 498 cards plus 32 Pro11, plus 32 MA Live and the 24 Limited Edition cards. 1. Petr Čech (Arsenal) 2. Laurent Koscielny (Arsenal) 3. Kieran Gibbs (Arsenal) 4. Per Mertesacker (Arsenal) 5. Mathieu Debuchy (Arsenal) 6. Nacho Monreal (Arsenal) 7. Héctor Bellerín (Arsenal) 8. Gabriel (Arsenal) 9. Jack Wilshere (Arsenal) 10. Alex Oxlade-Chamberlain (Arsenal) 11. Aaron Ramsey (Arsenal) 12. Mesut Özil (Arsenal) 13. Santi Cazorla (Arsenal) 14. Mikel Arteta (Arsenal) - Captain 15. Olivier Giroud (Arsenal) 16. Theo Walcott (Arsenal) 17. Alexis Sánchez (Arsenal) - Star Player 18. Laurent Koscielny (Arsenal) - Defensive Duo 18. Per Mertesacker (Arsenal) - Defensive Duo 19. Iker Casillas (Porto) 20. Iván Marcano (Porto) 21. Maicon (Porto) - Captain 22. Bruno Martins Indi (Porto) 23. Aly Cissokho (Porto) 24. José Ángel (Porto) 25. Maxi Pereira (Porto) 26. Evandro (Porto) 27. Héctor Herrera (Porto) 28. Danilo (Porto) 29. Rúben Neves (Porto) 30. Gilbert Imbula (Porto) 31. Yacine Brahimi (Porto) - Star Player 32. Pablo Osvaldo (Porto) 33. Cristian Tello (Porto) 34. Alberto Bueno (Porto) 35. Vincent Aboubakar (Porto) 36. Héctor Herrera (Porto) - Midfield Duo 36. Gilbert Imbula (Porto) - Midfield Duo 37. Joe Hart (Manchester City) 38. Bacary Sagna (Manchester City) 39. Martín Demichelis (Manchester City) 40. Vincent Kompany (Manchester City) - Captain 41. Gaël Clichy (Manchester City) 42. Eliaquim Mangala (Manchester City) 43. Aleksandar Kolarov (Manchester City) 44.

The Numbers Game: Baseball's Lifelong Fascination with Statistics (Book Review)

The Numbers Game: Baseball's Lifelong Fascination with Statistics (Book Review) The American Statistician May 1, 2005 | Cochran, James J. The Numbers Game: Baseball's Lifelong Fascination with Statistics. Alan SCHWARZ. New York: Thomas Dunne Books, 2004, xv + 270 pp. $24.95 (H), ISBN: 0‐312‐32222‐4. I am amazed at how frequently I walk into a colleague's office for the first time and spot a copy of The Baseball Encyclopedia or Total Baseball on a bookshelf. How many statisticians developed and nurtured their interest in probability and statistics by playing Strat‐O‐Matic[R] and/or APBA[R] baseball board games; reading publications like the annual The Bill James Baseball Abstract; or scanning countless box scores and current summary statistics in the Sporting News or in the sports section of the local newspaper, looking for an edge in a rotisserie baseball league? For many of us, baseball is what initially opened our eyes to the power of probability and statistics, and the sport continues to be an integral part of our lives (for evidence of this, attend the sessions or business meeting of the ASA's Statistics in Sports section at the next Joint Statistical Meetings). This is why so many probabilists and statisticians will enjoy reading Alan Schwarz's The Numbers Game: Baseball's Lifelong Fascination with Statistics. In this book, Schwarz effectively chronicles the ongoing association between baseball and statistics by describing the evolution of the sport from the mid‐nineteenth century through the beginning of the twenty‐first century, and looking at several of its most influential characters.

El Somni Continua

FOOTBALL, COPA: The dream continues. Espanyol close in on the cup semi-finals after thoroughly outplaying Sevilla, who scored their goal in the 90th minute (3-1). BASKETBALL, EUROLEAGUE: Barça cannot afford to slip up today at the Palau against Red Star (20.45 h, Esport 3). Photo: ALBERT SALAMÉ. 0.5 euros. Year 14, No. 4717. www.lesportiudecatalunya.cat · @lesportiucat. FRIDAY, 23 JANUARY 2015. Opinion: Football and elections, XAVI TORRES; The Dakar brand, TONI ROMERO; Good morning, what has Bekele done?, MARC NEGRE. THE NEYMAR CASE AND THE FIFA SANCTION MARK BARTOMEU'S YEAR. 2 · OPINION. L’ESPORTIU, FRIDAY, 23 JANUARY 2015. ON THIS DAY... Jordi Camps. NEWSROOM DIARY, Lluís Simon: Odegaard. Signing sixteen-year-old players to hide the failure of an elimination is something not even Núñez did. Sometimes, as we know, the pupil surpasses the master. UP AND DOWN. Sandro Rosell steps down (2014). FOOTBALL. The president of FC Barcelona, Sandro Rosell, unexpectedly announced his resignation. Rosell resigned amid the controversy over the cost of signing the Brazilian Neymar and reported threats against his family. He had been elected in 2010 with more than 35,000 votes. The sporting vice-president, Josep Maria Bartomeu, took over. PHOTO: EFE. VELIMIR PERASOVIC (BASKETBALL): The Croatian coach has been dismissed by València over the season's poor results: eliminated from the Euroleague, the team only narrowly made it into the cup. VALERO RIVERA (HANDBALL): The Catalan winger is the Spanish national team's top scorer at the handball world championship and was decisive in the win against Qatar, ... CIUTAT BARCELONA (FENCING): The best épée specialists will be at the Ciutat de Barcelona fencing trophy this weekend, which will be held at ... TER STEGEN (FOOTBALL): Barça's German goalkeeper kept a clean sheet against Atlético and has still not conceded a goal in the four matches he has ...

PDF Numbers and Names

CeLoMiManca (www.celomimanca.it) Checklist UEFA Champions League 2014-2015: 1 Panini special sticker; 2 Trophy; 3 Official ball; 4 UEFA respect; 5-36 Logo; 37 Miguel Ángel Moyà; 38 Juanfran; 39 Miranda; 40 Diego Godín; 41 Guilherme Siqueira; 42 Gabi; 43 Mario Suárez; 44 Koke; 45 Antoine Griezmann; 46 Arda Turan; 47 Mario Mandžukić; 48 Jan Oblak; 49 Cristian Ansaldi; 50 Jesús Gámez; 51 Tiago; 52 Raúl García; 53 Alessio Cerci; 54 Raúl Jiménez; 55 Gianluigi Buffon; 56 Stephan Lichtsteiner; 57 Andrea Barzagli; 58 Giorgio Chiellini; 59 Patrice Evra; 60 Arturo Vidal; 61 Andrea Pirlo; 62 Paul Pogba; 63 Claudio Marchisio; 64 Carlos Tévez; 65 Fernando Llorente; 66 Marco Storari; 67 Leonardo Bonucci; 68 Martín Cáceres; 69 Kwadwo Asamoah; 70 Kingsley Coman; 71 Sebastian Giovinco; 72 Álvaro Morata; 73 Roberto Jiménez; 74 Leandro Salino; 75 Alberto Botía; 76 Éric Abidal; 77 Arthur Masuaku; 78 Pajtim Kasami; 79 Giannis Maniatis; 80 David Fuster; 81 Alejandro Domínguez; 82 Ibrahim Afellay; 83 Kostas Mitroglou; 84 Balázs Megyeri; 85 Omar Elabdellaoui; 86 Kostas Giannoulis; 87 Delvin Ndinga; 88 Luka Milivojević; 89 Jorge Benítez; 90 Dimitris Diamantakos; 91 Robin Olsen; 92 Anton Tinnerholm; 93 Erik Johansson; 94 Filip Helander; 95 Ricardinho; 96 Simon Kroon; 97 Enoch Adu; 98 Markus Halsti; 99 Emil Forsberg; 100 Markus Rosenberg; 127 Tomáš

Chivas No Piensa En Pagar 120 Millones

INTERNATIONAL FOOTBALL. 8 C. SPORTS. FRIDAY, 19 JULY 2019. MERIDIANO.MX. Leagues Cup to grow to 16 teams in 2020: The first edition of the Leagues Cup has not yet begun, and this Thursday it was announced that the second edition will increase the number of participants from Liga MX and Major League Soccer (MLS) from eight to 16. INTERNATIONAL FOOTBALL. Wolverhampton and Raúl Jiménez learn their Europa League opponent: Wolverhampton, where the Mexican striker Raúl Jiménez plays, learned this Thursday who its opponent will be in the 2019-2020 Europa League, after Crusaders beat B36 in their tie. SPORTS. Carlos Vela warns: We want to beat Zlatan and the LA Galaxy: Next Friday, 19 July, the first edition of “El Tráfico” in 2019 will be played, bringing the MLS goalscorers Carlos Vela and Zlatan Ibrahimovic face to face. Salcido announces his retirement at the end of the Apertura 2019 tournament: The experienced defender Carlos Salcido, who plays for Veracruz, announced this Thursday that he will retire from professional football once the Liga MX Apertura 2019 tournament ends. By Guillermo Abogado González. Veracruz, 18 Jul (Notimex). In a written statement, the Ocotlán native said he will hang up his boots in ... professional, so I will enjoy every day, every training session, every match or stadium in which I get to take part. First of all, I thank the teams, the boards and the owners of the clubs to which I was fortunate to belong”, he said. The 39-year-old player thanked Chivas de Guadalajara for the opportunity to make his debut in the Primera División of Mexican football, as well as the clubs Tigres de la UANL and Veracruz, PSV Eindhoven of the Netherlands, and Fulham of England, for which he also played.

A Case of Mexican Football Team for the 2018 World Cup in Russia

Revista del Centro de Investigación de la Universidad La Salle Vol. 13, No. 49, enero-junio, 2018: 43-66 DOI : https://doi.org/10.26457/recein.v13i49.1510 Personnel selection in complex organizations: A case of Mexican football team for the 2018 World Cup in Russia Selección de personal en organizaciones complejas: un caso del equipo de fútbol mexicano para la Copa Mundial 2018 en Rusia Martin Flegl1 Universidad La Salle México (Mexico) Carlos Alberto Jiménez-Bandala Universidad La Salle México (Mexico) Carmen Lozano Universidad La Salle México (Mexico) Luis Andrade Universidad La Salle México (Mexico) Fecha de recepción: 21 de febrero de 2018 Fecha de aceptación: 07 de noviembre de 2018 Disponible en línea: 30 de noviembre de 2018 Abstract Selection of personnel in organizations is usually a difficult task. The process gets even more complicated when the selection takes place in complex organizations where different areas can work towards multiple objectives. As many areas might be involved in the selection, the process can become complex and hard to manage. Therefore, it is desirable to use decision-making tools 1 Email: [email protected] Revista del Centro de Investigación. Universidad La Salle por Dirección de Posgrado e Investigación. Universidad La Salle Ciudad de México se distribuye bajo una Licencia Creative Commons Atribución-NoComercial- CompartirIgual 4.0 Internacional. Flegl, M.; Jiménez-Bandala, C.; Lozano, C.; Andrade, L. to make the process easier. In this article, we propose new methodology for personnel selection based on Multi-criteria Decision Analysis and the integration of qualitative and quantitative data. We demonstrate the selection process on the case of the Mexican football team selection for the 2018 World Cup in Russia. -

A Terra a Terra

Fundació. OPINION: Ramon Armadàs. ASSESSMENT: Cristóbal Parralo, the Juvenil A coach, analyses the start of the season. Pilota a Terra. FOUNDED in 1954. FREE PUBLICATION. OCTOBER 2013. THE MAGAZINE OF THE DAMM FAMILY. 30. CRISTIAN TELLO: "I PLAYED AS A WINGER FOR THE FIRST TIME AT DAMM". PHOTO: EDU BAYER. PRESENTATION: Start of the 2013-2014 League Championships. All the CF Damm teams are now competing in their respective leagues. In this issue of Pilota a Terra, we present all the teams of the Damm family. PHOTO: PEP MORATA. PÀDEL DAMM: Runners-up of Spain. Talaván and Castro were crowned Spanish Junior runners-up. Editorial: A step forward. A few months ago, those in charge of CF Damm decided to give fresh impetus to the club's development and to strengthen the values that characterise it. Institutionally, relations with various Catalan clubs have been strengthened, and two-way collaboration and the smooth movement of players have been made easier. In this regard, Damm has devoted a great deal of effort to improving the quality and quantity of the offers received by players who finish their time at our club after playing in the first team. Another area of work has been organisation. The first consequence has been the regrouping of the 9 teams from pre-benjamins to infantils at the Hebron-Teixonera facilities. A club needs to be grouped together so that the sense of belonging among its members can flourish. Contents: 06 Report: The Club de Pàdel Damm took part in the Spanish Championship and obtained good ...

2021 Topps MLS Checklist(1).Xls

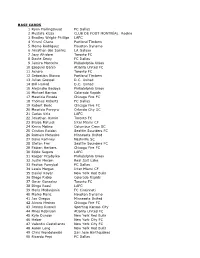

BASE CARDS 1 Ryan Hollingshead FC Dallas 2 Mustafa Kizza CLUB DE FOOT MONTRÉAL Rookie 3 Bradley Wright-Phillips LAFC 4 Yimmi Chara Portland Timbers 5 Memo Rodriguez Houston Dynamo 6 Jonathan dos Santos LA Galaxy 7 Jozy Altidore Toronto FC 8 Dante Sealy FC Dallas 9 Jamiro Monteiro Philadelphia Union 10 Ezequiel Barco Atlanta United FC 11 Achara Toronto FC 12 Sebastian Blanco Portland Timbers 13 Julian Gressel D.C. United 14 Bill Hamid D.C. United 15 Alejandro Bedoya Philadelphia Union 16 Michael Barrios Colorado Rapids 17 Mauricio Pineda Chicago Fire FC 18 Thomas Roberts FC Dallas 19 Robert Beric Chicago Fire FC 20 Mauricio Pereyra Orlando City SC 21 Carlos Vela LAFC 22 Jonathan Osorio Toronto FC 23 Blaise Matuidi Inter Miami CF 24 Kevin Molino Columbus Crew SC 25 Cristian Roldan Seattle Sounders FC 26 Romain Metanire Minnesota United 27 Dave Romney Nashville SC 28 Stefan Frei Seattle Sounders FC 29 Fabian Herbers Chicago Fire FC 30 Eddie Segura LAFC 31 Kacper Przybylko Philadelphia Union 32 Justin Meram Real Salt Lake 33 Paxton Pomykal FC Dallas 34 Lewis Morgan Inter Miami CF 35 Daniel Royer New York Red Bulls 36 Diego Rubio Colorado Rapids 37 Omar Gonzalez Toronto FC 38 Diego Rossi LAFC 39 Haris Medunjanin FC Cincinnati 40 Marko Maric Houston Dynamo 41 Jan Gregus Minnesota United 42 Alvaro Medran Chicago Fire FC 43 Johnny Russell Sporting Kansas City 44 Miles Robinson Atlanta United FC 45 Kyle Duncan New York Red Bulls 46 Heber New York City FC 47 Valentin Castellanos New York City FC 48 Aaron Long New York Red Bulls 49 Chris Wondolowski -

Madrid Close In

PREVIEW ISSUE FOUR – 24TH APRIL 2012 MADRID CLOSE IN REAL TAKE THE SPOILS IN EL CLASICO AND CLOSE IN ON THE SILVERWARE After a poor week for both sides in Europe, Real and Barca were desperate to grab a win against their biggest rivals on a dramatic, rain-lashed Saturday night at the Nou Camp. It was Real who grabbed the vital win and probably clinched the La Liga title. Los Merengues went ahead through German midfielder Sami Khedira and were comfortable for long periods, but Barca’s Chilean substitute Alexis Sánchez equalised with just 20 minutes remaining. Cristiano Ronaldo’s clinical strike just three minutes later settled the game, leaving Madrid seven points clear with only four games remaining. Madrid coach José Mourinho named a very attacking team and his side began the better. Ronaldo’s early header from a corner brought a superb flying save from goalkeeper Víctor Valdés. His opposite number Iker Casillas was then quickly off his line when full-back Dani Alves dispossessed Sergio Ramos. The game was being played at a fearsome pace and French striker Karim Benzema brought the best out of Valdés again. Barca thought they had gone in front when Alves had the ball in the net after a Cristian Tello run, but the youngster had been flagged offside. Real’s brave start was rewarded when Pepe’s header from another corner was half-stopped by Valdés and Khedira tackled a hesitant Carles Puyol to push the ball into the net to put Madrid ahead. INSIDE THIS WEEK’S WFW - RESULTS ROUND-UP ON ALL MAJOR COMPETITIONS FROM AROUND THE WORLD

MLS As a Sports Product – the Prominence of the World's Game in the U.S

MLS as a Sports Product – the Prominence of the World’s Game in the U.S. Stephen A. Greyser Kenneth Cortsen Working Paper 21-111 MLS as a Sports Product – the Prominence of the World’s Game in the U.S. Stephen A. Greyser Harvard Business School Kenneth Cortsen University College of Northern Denmark (UCN) Working Paper 21-111 Copyright © 2021 by Stephen A. Greyser and Kenneth Cortsen. Working papers are in draft form. This working paper is distributed for purposes of comment and discussion only. It may not be reproduced without permission of the copyright holder. Copies of working papers are available from the author. Funding for this research was provided in part by Harvard Business School. MLS as a Sports Product – the Prominence of the World’s Game in the U.S. April 8, 2021 Abstract The purpose of this Working Paper is to analyze how soccer at the professional level in the U.S., with Major League Soccer as a focal point, has developed over the span of a quarter of a century. It is worthwhile to examine the growth of MLS from its first game in 1996 to where the league currently stands as a business as it moves past its 25th anniversary. The 1994 World Cup (held in the U.S.) and the subsequent implementation of MLS as a U.S. professional league exerted a major positive influence on soccer participation and fandom in the U.S. Consequently, more importance was placed on soccer in the country’s culture. The research reported here explores the league’s evolution and development through the cohesion existing between its sporting and business development, as well as its performance. -

Games Goals Glory: the A-Leagues Teams, Players, Coaches and Greatest Moments Pdf

FREE GAMES GOALS GLORY: THE A-LEAGUES TEAMS, PLAYERS, COACHES AND GREATEST MOMENTS PDF Roy Hay | 208 pages | 01 Oct 2016 | HARDIE GRANT BOOKS | 9781743791806 | English | South Yarra, Australia A-League records and statistics - Wikipedia The season was originally scheduled to begin in late February but was postponed before a ball was kicked; however, a rejigged version with two groups of eight teams will begin on Saturday, with Group A based in Dalian and Group B in Suzhou. Each side will play the other teams in their group twice before those finishing in the top four progress to a knockout Championship Stage to decide the Chinese Super League winners, with the bottom four entering a Relegation Stage where they will face off in a bid to avoid dropping into the second tier. Guangzhou Evergrande have been by far the most successful side in the Chinese Super League in recent years. The Guangdong-based club have picked up eight of the last nine championships and also claimed two Continental titles by lifting the AFC Champions League trophy. Their stranglehold did momentarily slip, however, when Shanghai SIPG edged them out, and they were run close last season by the Shanghainese and traditional heavyweights Beijing FC, with the two sides likely to be their main rivals for the foreseeable future. For several years, Guangzhou have boasted the best foreign and domestic stars in the Chinese game, and there remains a core from the two-time Continental title-winning side that includes Zhang Linpeng, Huang Bowen and Elkeson.