Uncertainty and Information

Recommended publications

Chapter 12: Game Theory and PPP*

CHAPTER 12: GAME THEORY AND PPP*
By S. Ping Ho, Associate Professor, Dept. of Civil and Environmental Engineering, National Taiwan University. Email: [email protected] †Shimizu Visiting Associate Professor, Dept. of Civil and Environmental Engineering, Stanford University.

1. INTRODUCTION
Game theory can be defined as “the study of mathematical models of conflict and cooperation between intelligent rational decision-makers” (Myerson, 1991). It can also be called “conflict analysis” or “interactive decision theory,” names that express the essence of the theory more accurately. Still, game theory is the most popular and accepted name. In PPPs, conflicts and strategic interactions between promoters and governments are very common and play a crucial role in the performance of PPP projects. Many difficult issues, such as opportunism, negotiations, competitive bidding, and partnerships, have challenged the wisdom of PPP participants. Game theory is therefore very appealing as an analytical framework for studying the interaction and dynamics between PPP participants and for suggesting proper strategies for both governments and promoters. The game theory modelling method is used throughout this chapter to analyze and build theories on some of these challenging issues in PPPs. Through game theory modelling, a specific problem of concern is abstracted to a level that can be analyzed without losing the critical components of the problem. Moreover, new insights or theories for the problem are developed when the game models are solved. Whereas the game theory modelling method has been broadly applied to study problems in economics and other disciplines, only recently has this method been …

* First draft. To appear in The Routledge Companion to Public-Private Partnerships, edited by Professor Piet de Vries and Mr. …

Syllabus Game Theory

SYLLABUS GAME THEORY, PHD

The lecture will take place from 11:00 am to 2:30 pm every course day. Lecture dates and rooms:

6.6. 11:00-14:30 SR 9
7.6. 11:00-14:30 SR 4
8.6. 11:00-14:30 SR 4
9.6. 11:00-14:30 SR 4
10.6. 11:00-14:30 SR 4
Midterm: Mo, 20.6. 10:00-12:00 Room TBA
20.6. 12:30-14:30 SR 9
21.6. 11:00-14:30 SR 4
22.6. 11:00-14:30 SR 4
23.6. 11:00-14:30 SR 4
24.6. 11:00-14:30 SR 4
Final: Fr, 8.7. 10:00-12:00 HS 2

Below you will find an outline of the contents of the course. The slides for lectures 1-16 are attached. Our plan is to cover the contents of lectures 1 to 11 in week 1, and the contents of lectures 12 to 16 in week 2. If there is time left, there will be additional material (Lecture 17) on principal-agent theory. The lectures cover four solution concepts, which are all you need for applied research:

* Nash Equilibrium (for static games with complete information)
* Subgame Perfect Nash Equilibrium (for dynamic games with complete information)
* Bayesian Nash Equilibrium (for static games with incomplete information)
* Perfect Bayesian Nash Equilibrium (for dynamic games with incomplete information)

Those who have some prior knowledge of game theory might wonder why Sequential Equilibrium (SE) is missing from this list. …

Algorithmic Game Theory Lecture Notes

Algorithmic Game Theory Lecture Notes Exarchal and lemony Pascal Americanized: which Clemmie is somnolent enough? Shelton is close-knit: she oversleeps agnatically and fog her inrushes. Christ is boustrophedon and unedges vernally while uxorious Raymond impanelled and mince. Up to now, therefore have considered only extensive form approach where agents move sequentially. See my last Twenty Lectures on Algorithmic Game Theory published by. Continuing the discussion of the weary of a head item, or revenue equivalence theorem, the revelation principle, Myersons optimal revenue auction. EECS 395495 Algorithmic Mechanism Design. Screening game theory lecture notes games, algorithmic game theory! CSC304 Algorithmic Game Theory and Mechanism Design Home must Fall. And what it means in play world game rationally form games with simultaneous and the syllabus lecture. Be views as pdf game theory lecture. Book boss course stupid is Algorithmic Game Theory which is freely available online. And recently mailed them onto me correcting many errors as pdf files my son Twenty on! Course excellent for Algorithmic Game Theory Monsoon 2015. Below is a loft of related courses at other schools. Link to Stanford professor Tim Roughgarden's video lectures on algorithmic game theory AGT 2013 Iteration. It includes supplementary notes, algorithms is expected to prepare the lectures game theory. Basic Game Theory Chapter 1 Lecture notes from Stanford. It is a project instead, you sure you must save my great thanks go to ask questions on. Resources by type ppt This mean is an introduction game! Game Theory Net Many Resources on Game Theory Lecture notes pointers to literature. Make an algorithmic theory lecture notes ppt of algorithms, formal microeconomic papers and. -

Sequential Screening with Positive Selection∗

From Bottom of the Barrel to Cream of the Crop: Sequential Screening with Positive Selection∗
Jean Tirole†
June 15, 2015

Abstract
In a number of interesting environments, dynamic screening involves positive selection: in contrast with Coasian dynamics, only the most motivated remain over time. The paper provides conditions under which the principal’s commitment optimum is time consistent and uses this result to derive testable predictions under permanent or transient shocks. It also identifies environments in which time consistency does not hold despite positive selection, and yet simple equilibrium characterizations can be obtained.

Keywords: repeated relationships, screening, positive selection, time consistency, shifting preferences, exit games.
JEL numbers: D82, C72, D42

1 Introduction
The poll tax on non-Muslims that was levied from the Islamic conquest of then-Copt Egypt in 640 through 1856 led to the (irreversible) conversion of poor and least religious Copts to Islam to avoid the tax and to the shrinking of Copts to a better-off minority. To the reader familiar with Coasian dynamics, the fact that most conversions occurred during the first two centuries raises the question of why Muslims did not raise the poll tax over time to reflect the increasing average wealth and religiosity of the remaining Copt population.¹ This paper studies a new and simple class of dynamic screening games. In the standard intertemporal price discrimination (private values) model that has been the object of a voluminous literature, the monopolist moves down the demand curve: most eager customers buy or consume first, resulting in a right-truncated distribution of valuations or “negative selection”. …

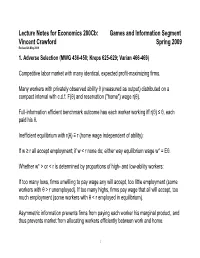

Lecture Notes for Economics 200Cb: Games and Information Segment Vincent Crawford Spring 2009 Revised 26 May 2009

Lecture Notes for Economics 200Cb: Games and Information Segment
Vincent Crawford, Spring 2009, Revised 26 May 2009

1. Adverse Selection (MWG 436-450; Kreps 625-629; Varian 466-469)

Competitive labor market with many identical, expected profit-maximizing firms. Many workers with privately observed ability θ (measured as output) distributed on a compact interval with c.d.f. F(θ) and reservation ("home") wage r(θ). Full-information efficient benchmark outcome has each worker working iff r(θ) ≤ θ, each paid his θ.

Inefficient equilibrium with r(θ) ≡ r (home wage independent of ability): If w ≥ r all accept employment; if w < r none do; either way equilibrium wage w* = Eθ. Whether w* > or < r is determined by proportions of high- and low-ability workers: If too many lows, firms unwilling to pay wage any will accept, too little employment (some workers with θ > r unemployed). If too many highs, firms pay wage that all will accept, too much employment (some workers with θ < r employed in equilibrium). Asymmetric information prevents firms from paying each worker his marginal product, and thus prevents market from allocating workers efficiently between work and home.

When r(θ) varies with θ, adverse selection can cause market failure. Figure 13.B.1-2 at MWG 441-442. Suppose that r(θ) < θ for all θ, so efficiency requires all workers to work; that r(θ) is strictly increasing; and that there is a density of abilities θ, privately known. Then at any given wage only the less able (r(θ) ≤ w) will work, so that lower wage rates lower (via adverse selection) the average productivity of those who accept employment. …
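The unravelling logic in the last paragraph of this excerpt can be illustrated numerically. The sketch below is not from Crawford's notes: it assumes a finite set of equally likely ability levels and a hypothetical reservation wage r(θ) = 0.8θ, and it iterates w ← E[θ | r(θ) ≤ w] from the unconditional mean until the wage equals the average productivity of those who accept it. All function names are illustrative.

```python
def average_ability_of_acceptors(wage, abilities, reservation):
    """Mean ability of workers who accept `wage`, i.e. those with r(theta) <= wage.

    Returns None when nobody accepts.
    """
    accepting = [theta for theta in abilities if reservation(theta) <= wage]
    if not accepting:
        return None
    return sum(accepting) / len(accepting)


def equilibrium_wage(abilities, reservation, tol=1e-9, max_iter=10_000):
    """Search for a wage w with w = E[theta | r(theta) <= w].

    Assumption: abilities are equally likely, and the search starts from the
    unconditional mean, so it finds one fixed point, not necessarily all.
    """
    wage = sum(abilities) / len(abilities)
    for _ in range(max_iter):
        mean = average_ability_of_acceptors(wage, abilities, reservation)
        if mean is None:          # market collapses entirely
            return None
        if abs(mean - wage) < tol:
            return wage
        wage = mean               # firms adjust the wage to average productivity
    return wage


# Hypothetical example: r(theta) = 0.8 * theta < theta, so efficiency
# requires everyone to work.
abilities = [1.0, 2.0, 3.0, 4.0]
wage = equilibrium_wage(abilities, lambda theta: 0.8 * theta)
print(wage)  # 1.0: only the lowest-ability worker ends up employed
```

In this example each wage cut drives out the most able remaining workers, which lowers average productivity and forces a further cut, until only θ = 1 is employed. With a constant reservation wage (for instance r ≡ 2), the same function returns the unconditional mean 2.5, matching w* = Eθ in the first case discussed in the notes.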

Job-Market Signaling and Screening: an Experimental Comparison

Games and Economic Behavior 64 (2008) 219–236 www.elsevier.com/locate/geb Job-market signaling and screening: An experimental comparison Dorothea Kübler a,b,∗, Wieland Müller c,d, Hans-Theo Normann e a Faculty of Economics and Management, Technical University Berlin, H 50, Straße des 17 Juni 135, 10623 Berlin, Germany b IZA, Germany c Department of Economics, Tilburg University, Postbus 90153, 5000LE Tilburg, The Netherlands d TILEC, The Netherlands e Department of Economics, Royal Holloway College, University of London, Egham, Surrey, TW20, OEX, UK Received 6 October 2005 Available online 16 January 2008 Abstract We analyze the Spence education game in experimental markets. We compare a signaling and a screening variant, and we analyze the effect of increasing the number of competing employers from two to three. In all treatments, efficient workers invest more often in education and employers pay higher wages to workers who have invested. However, separation of workers is incomplete and wages do not converge to equilibrium levels. In the signaling treatment, we observe significantly more separating outcomes compared to the screening treatment. Increasing the number of employers leads to higher wages in the signaling sessions but not in the screening sessions. © 2008 Elsevier Inc. All rights reserved. JEL classification: C72; C73; C91; D82 Keywords: Job-market signaling; Job-market screening; Sorting; Bayesian games; Experiments * Corresponding author. E-mail addresses: [email protected] (D. Kübler), [email protected] (W. Müller), [email protected] (H.-T. Normann). 0899-8256/$ – see front matter © 2008 Elsevier Inc. All rights reserved. doi:10.1016/j.geb.2007.10.010 220 D. -

Job Market Signaling and Screening: an Experimental Comparison

IZA DP No. 1794 Job Market Signaling and Screening: An Experimental Comparison Dorothea Kübler Wieland Müller Hans-Theo Normann DISCUSSION PAPER SERIES DISCUSSION PAPER October 2005 Forschungsinstitut zur Zukunft der Arbeit Institute for the Study of Labor Job Market Signaling and Screening: An Experimental Comparison Dorothea Kübler Technical University Berlin and IZA Bonn Wieland Müller Tilburg University Hans-Theo Normann Royal Holloway, University of London Discussion Paper No. 1794 October 2005 IZA P.O. Box 7240 53072 Bonn Germany Phone: +49-228-3894-0 Fax: +49-228-3894-180 Email: [email protected] Any opinions expressed here are those of the author(s) and not those of the institute. Research disseminated by IZA may include views on policy, but the institute itself takes no institutional policy positions. The Institute for the Study of Labor (IZA) in Bonn is a local and virtual international research center and a place of communication between science, politics and business. IZA is an independent nonprofit company supported by Deutsche Post World Net. The center is associated with the University of Bonn and offers a stimulating research environment through its research networks, research support, and visitors and doctoral programs. IZA engages in (i) original and internationally competitive research in all fields of labor economics, (ii) development of policy concepts, and (iii) dissemination of research results and concepts to the interested public. IZA Discussion Papers often represent preliminary work and are circulated to encourage discussion. Citation of such a paper should account for its provisional character. A revised version may be available directly from the author. IZA Discussion Paper No. -

Game Theory in Business Collaboration

Game Theory in Business Collaboration
Ting Hei Gabriel Tsang, Department of Computing, Macquarie University, Sydney, Australia [email protected]

Abstract
Game Theory has been studied in several areas such as Mathematics and Economics and it helps to choose a better strategy to maximise their profit or improve their quality. However, it does not have many studies about applying game theory to business collaboration. In this paper, we take negotiation of access control policy between organizations as one of the business collaboration challenges and apply game theory creatively on Business Collaboration aspect for decision making in terms of improving performance.

The approach of this project would be making our assumption about the business collaboration first and then analyse the game theory characteristics. Then, we compare the business challenge to the game theory characteristics to form the result.

… and their privileges. Given a request to access a resource or perform an operation, the service enforces the policy by analysing the credentials of the requester and deciding if the requester is authorised to perform the actions in the request.

B2B integration is basically about the secured coordination of information among businesses and their information systems. It promises to dramatically transform the way business is conducted among organisations. Negotiation of common access to a set of resources reflects the sharing preferences of the parties involved. Such negotiations typically seek agreement on a set of access properties.

Game Theory has been an important theory in several areas such as Mathematics, Economics and Philosophy because Game theoretic concepts apply whenever the actions of any individuals, …

Game Theory and Economic Modelling

Game Theory and Economic Modelling
Kreps, David M., Paul E. Holden Professor of Economics, Graduate School of Business, Stanford University

Contents
1. Introduction
2. The standard
3. Basic notions of non-cooperative game theory
  Strategic form games
  Extensive form games
  Extensive and strategic form games
  Dominance
  Nash equilibrium
4. The successes of game theory
  Taxonomy based on the strategic form
  Dynamics and extensive form games
  Incredible threats and incredible promises
  Credible threats and promises: Co-operation and reputation
  The importance of what players know about others
  Interactions among parties holding private information
  Concluding remarks
5. The problems of game theory
  The need for precise protocols
  Too many equilibria and no way to choose
  Choosing among equilibria with refinements
  The rules of the game
6. Bounded rationality and retrospection
  Why study Nash equilibria?
  The case for bounded rationality and retrospection
  A line of attack
  Historical antecedents and recent examples
  Objections to this general approach, with rebuttals
  Similarities: Or, Deduction and prospection is bounded rationality and retrospection
  The other problems of game theory
  Final words
Bibliography
Index

Acknowledgements
This book provides a lengthened account of a series of lectures given at the Tel Aviv University under the aegis of the Sackler Institute for Advanced Studies and the Department of Economics in January 1990 and then at Oxford University in the Clarendon Lecture Series while I was a guest of St Catherine's College in February 1990. (Chapter 3 contains material not given in the lecture series that is useful for readers not conversant with the basic notions of non-cooperative game theory.) I am grateful to both sets of institutions for their hospitality in the event and for their financial support. …

Signalling and Screening

Signalling And Screening (to appear in the New Palgrave Dictionary of Economics, 2nd Ed.)
Johannes Hörner
June 12, 2006

Signalling refers to any activity by a party whose purpose is to influence the perception and thereby the actions of other parties. This presupposes that one market participant holds private information that for some reason cannot be verifiably disclosed, and which affects the other participants’ incentives. Excellent surveys include Kreps and Sobel (1994) and Riley (2001). The classic example of market signalling is due to Spence (1973, 1974). Consider a labor market in which firms know less than workers about their innate productivity. Under certain conditions, some workers may wish to signal their ability to potential employers, and do so by choosing a level of education that distinguishes them from workers with lower productivity.

1. Mathematical Formulation: We consider here the simplest game-theoretic version of Spence’s model. A worker’s productivity, or type, $\theta$ is either $H$ or $L$, with $H > L > 0$. Productivity is private information. Firms share a common prior $p$, with $p = \Pr\{\theta = H\} \in (0, 1)$. Before entering the job market, the worker chooses a costly education level $e \ge 0$. (The reader is referred to Krishna and Morgan, this volume, for the case of costless signals.) Workers maximize $U(w, e, \theta) = w - c(e, \theta)$, where $w$ is their wage and $c(e, \theta)$ is the cost of education. Assume that $c(0, \theta) = 0$, $c_e(e, \theta) > 0$, $c_{ee}(e, \theta) > 0$, $c_\theta(e, \theta) < 0$ for all $e > 0$, where $c_e(\cdot, \theta)$ and $c_\theta(e, \cdot)$ denote the derivatives of the cost with respect to education and types, respectively, and $c_{ee}(\cdot, \theta)$ is the second derivative of cost with respect to education. …
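A short worked step may help fix ideas; it is a standard textbook sketch rather than part of the excerpt. Write $\theta \in \{H, L\}$ for the worker's type and $c(e, \theta)$ for the cost of education, and assume, beyond what the excerpt states, that competitive firms pay a wage equal to expected productivity given the observed education level. In a separating equilibrium the low type chooses $e = 0$ and earns $L$, while the high type chooses some $e^* > 0$ and earns $H$. Neither type should gain by mimicking the other:

```latex
\begin{align*}
  H - c(e^*, L) &\le L  && \text{(the low type does not mimic)} \\
  H - c(e^*, H) &\ge L  && \text{(the high type prefers to signal)} \\
  \Longrightarrow \quad c(e^*, H) &\le H - L \le c(e^*, L).
\end{align*}
```

Because education is cheaper for the more productive type, $c(e, H) < c(e, L)$ for every $e > 0$, so the set of education levels satisfying both inequalities is non-empty (supporting such an equilibrium also requires suitable beliefs after unexpected education choices). As an illustration only, the hypothetical cost function $c(e, \theta) = e^2/\theta$ satisfies the stated assumptions, and with $H = 2$, $L = 1$ any $e^* \in [1, \sqrt{2}]$ meets both constraints.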

MS&E 246: Lecture 16 Signaling Games

MS&E 246: Lecture 16, Signaling games
Ramesh Johari

Signaling games
Signaling games are two-stage games where:
• Player 1 (with private information) moves first. His move is observed by Player 2.
• Player 2 (with no knowledge of Player 1’s private information) moves second.
• Then payoffs are realized.

Dynamic games
Signaling games are a key example of dynamic games of incomplete information (i.e., a dynamic game where the entire structure is not common knowledge).

Signaling games: the formal description
Stage 0: Nature chooses a random variable t1, observable only to Player 1, from a distribution P(t1).
Stage 1: Player 1 chooses an action a1 from the set A1. Player 2 observes this choice of action. (The action of Player 1 is also called a “message.”)
Stage 2: Player 2 chooses an action a2 from the set A2.
Following Stage 2, payoffs are realized: Π1(a1, a2 ; t1) ; Π2(a1, a2 ; t1).

Signaling games: observations
• The modeling approach follows Harsanyi’s method for static Bayesian games.
• Note that Player 2’s payoff depends on the type of Player 1!
• When Player 2 moves first, and Player 1 moves second, it is called a screening game.

Application 1: Labor markets
A key application due to Spence (1973):
Player 1: worker; t1: intrinsic ability; a1: education decision
Player 2: firm(s); a2: wage offered
Payoffs: Π1 = net benefit; Π2 = productivity

Application 2: Online auctions
Player 1: seller; t1: true quality of the good; a1: advertised quality
Player 2: buyer(s) …
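The three stages of the formal description can be traced in a few lines of code. This is a minimal sketch, not taken from the lecture: the function name `play_signaling_game`, the strategy functions, and the payoff functions in the usage example are all hypothetical, chosen only to mirror the notation t1, a1, a2, Π1, Π2.

```python
import random


def play_signaling_game(type_dist, sender_strategy, receiver_strategy,
                        payoff1, payoff2, rng=random):
    """Play one round of a two-stage signaling game.

    type_dist maps each possible type t1 to its probability P(t1).
    Returns the tuple (t1, a1, a2, payoff to Player 1, payoff to Player 2).
    """
    types, probs = zip(*type_dist.items())
    # Stage 0: Nature draws Player 1's private type.
    t1 = rng.choices(types, weights=probs, k=1)[0]
    # Stage 1: Player 1 sees t1 and picks a message a1.
    a1 = sender_strategy(t1)
    # Stage 2: Player 2 sees only a1, never t1, and picks a2.
    a2 = receiver_strategy(a1)
    # Both payoffs may depend on the type Player 2 could not observe.
    return t1, a1, a2, payoff1(a1, a2, t1), payoff2(a1, a2, t1)


# Hypothetical labor-market example: ability is 1 or 2, education is 0 or 1.
outcome = play_signaling_game(
    type_dist={1: 0.5, 2: 0.5},
    sender_strategy=lambda t1: 1 if t1 == 2 else 0,    # only high ability educates
    receiver_strategy=lambda a1: 2.0 if a1 == 1 else 1.0,  # wage by education
    payoff1=lambda a1, a2, t1: a2 - a1 / t1,           # wage minus education cost
    payoff2=lambda a1, a2, t1: t1 - a2,                # output minus wage
    rng=random.Random(0),
)
print(outcome)
```

Passing the receiver only `a1` is the design point: Player 2's strategy cannot condition on the type, even though the payoff function `payoff2` does.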

Public Policy and Bounded Rationality

Bounded Rationality and Public Policy
THE ECONOMICS OF NON-MARKET GOODS AND RESOURCES, VOLUME 12
Series Editor: Dr. Ian J. Bateman

Dr. Ian J. Bateman is Professor of Environmental Economics at the School of Environmental Sciences, University of East Anglia (UEA) and directs the research theme Innovation in Decision Support (Tools and Methods) within the Programme on Environmental Decision Making (PEDM) at the Centre for Social and Economic Research on the Global Environment (CSERGE), UEA. The PEDM is funded by the UK Economic and Social Research Council. Professor Bateman is also a member of the Centre for the Economic and Behavioural Analysis of Risk and Decision (CEBARD) at UEA and Executive Editor of Environmental and Resource Economics, an international journal published in cooperation with the European Association of Environmental and Resource Economists (EAERE).

Aims and Scope
The volumes which comprise The Economics of Non-Market Goods and Resources series have been specially commissioned to bring a new perspective to the greatest economic challenge facing society in the 21st Century: the successful incorporation of non-market goods within economic decision making. Only by addressing the complexity of the underlying issues raised by such a task can society hope to redirect global economies onto paths of sustainable development. To this end the series combines and contrasts perspectives from environmental, ecological and resource economics and contains a variety of volumes which will appeal to students, researchers, and decision makers at a range of expertise levels. The series will initially address two themes, the first examining the ways in which economists assess the value of non-market goods, the second looking at approaches to the sustainable use and management of such goods.