Context-Aware Adversarial Training for Name Regularity Bias in Named Entity Recognition

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Phillips' Boot Delivers Points to Cougars

MONDAY APRIL 28 2014 SPORT 23 Sheens springs no surprises By IAN McCULLOUGH RUGBY LEAGUE

AUSTRALIA coach Tim Sheens admits his team will be overwhelming favourites to beat an inexperienced New Zealand side in Friday’s Anzac Test but expects the Kiwi players to have a point to prove. Sheens’ 17-man squad contained few surprises with 14 of the side that won the Rugby League World Cup in Manchester last November selected. It’s in complete contrast to Stephen Kearney’s squad which has just five survivors from the 34-2 hammering in

returns to rugby at the end of this year, was also overlooked with Tohu Harris, who the dual international replaced in the World Cup squad, recalled.

has a little bit more football under his belt and he plays right centre for NSW,” Sheens said. “In a short preparation he

LINE UPS FOR ANZAC TEST MATCH

AUSTRALIA: Billy Slater (Mel), Darius Boyd (New), Greg Inglis (Sths), Josh Morris (Cant), Brett Morris (SGI), Johnathan Thurston (NQ), Cooper Cronk (Mel), James Tamou (NQ), Cameron Smith (Mel) (c), Matt Scott (NQ), Sam Thaiday (Bris), Greg Bird (Gold), Paul Gallen (Cro); Inter: Daly Cherry-Evans (Man), Nate Myles (Gold), Boyd Cordner (Syd), Corey Parker (Bris). 18th: Matt Gillett (Bris).

NEW ZEALAND (squad): Gerard Beale (SGI), Adam Blair (WT), Jesse Bromwich (Mel), Kenny Bromwich (Mel), Greg Eastwood (Cant), Tohu Harris (Mel), Siliva Havili (NZW), Ben Henry (NZW), Peta Hiku (Man), Isaac John (Pen), Shaun Johnson (NZW), Simon Mannering (NZW) (c), Sam Moa (Syd), Jason Nightingale (SGI), Kevin Proctor (Mel), Martin Taupau (WT), Roger Tuivasa-Sheck (Syd), Dean Whare (Pen).

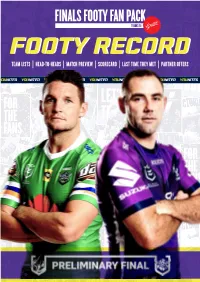

Team Lists | Head-To-Heads | Match Preview | Scorecard | Last Time They Met | Partner Offers Join Relish Today and Enter ‘Storm’ to Get a Free Burger!

FOOTY RECORD TEAM LISTS | HEAD-TO-HEADS | MATCH PREVIEW | SCORECARD | LAST TIME THEY MET | PARTNER OFFERS JOIN RELISH TODAY AND ENTER ‘STORM’ TO GET A FREE BURGER! VISIT: GRILLD.COM.AU/RELISH

1. FULLBACK RYAN PAPENHUYZEN 2. RIGHT WING SULIASI VUNIVALU 3. RIGHT CENTRE BRENKO LEE 4. LEFT CENTRE JUSTIN OLAM 5. LEFT WING JOSH ADDO-CARR 6. FIVE-EIGHTH CAMERON MUNSTER 7. HALFBACK JAHROME HUGHES 8. PROP JESSE BROMWICH 9. HOOKER CAMERON SMITH 10. PROP CHRISTIAN WELCH 11. SECOND ROW KENNY BROMWICH 12. SECOND ROW FELISE KAUFUSI 13. LOCK NELSON ASOFA-SOLOMONA INTERCHANGE BRANDON SMITH INTERCHANGE TINO FA’ASUAMALEAUI INTERCHANGE TOM EISENHUTH INTERCHANGE NICHO HYNES COACH: CRAIG BELLAMY

1. FULLBACK CHARNZE NICOLL-KLOKSTAD 2. RIGHT WING SEMI VALEMEI 3. RIGHT CENTRE JARROD CROKER 4. LEFT CENTRE JORDAN RAPANA 5. LEFT WING NICK COTRIC 6. FIVE-EIGHTH JACK WIGHTON 7. HALFBACK GEORGE WILLIAMS 8. PROP JOSH PAPALII 9. HOOKER TOM STARLING 10. PROP IOSIA SOLIOLA 11. SECOND ROW JOHN BATEMAN 12. SECOND ROW ELLIOTT WHITEHEAD 13. LOCK JOSEPH TAPINE INTERCHANGE SILIVA HAVILI INTERCHANGE DUNAMIS LUI INTERCHANGE HUDSON YOUNG INTERCHANGE COREY HARAWIRA-NAERA COACH: RICKY STUART

*TEAM SUBJECT TO CHANGE

CANBERRA WENT SOME WAY TO VANQUISHING LAST YEAR’S GRAND FINAL PAIN BY EXACTING PLAYOFF REVENGE

While Raiders stars Josh Papalii, Joe Tapine and Jack Wighton were willing their side to a famously gritty victory on Friday, the Storm enjoyed a rest having beaten Parramatta the week prior.

hopes by football manager Frank Ponissi last week.

Raiders: With no major injuries from the Roosters game, Ricky Stuart could lock in an unchanged

36 Years Old 2018 KING GAL and Still the NRLCEO MVP

SEASON GUIDE 2018 KING GAL 36 years old and still the NRLCEO MVP... 500+ Player Stats Team Previews Top 100 from 2017 Ask The Experts Rookie Watch Super League Scouting NRLCEO.com.au - $10.00 25 METRE EATERS

INTRODUCTION Welcome CEOs, 2018 marks the sixth year of producing ‘The Bible’ – we can’t believe it! What started off as a bit of office procrastination over the Christmas period has now blossomed into what you see before you here – the ultimate asset for success in NRLCEO! We have been busy this off-season scouring news articles, blogs and forums for any ‘gold’ that can be used in the draft. After all, knowledge is power and in purchasing this Guide, you have made the first step towards NRLCEO success! We can’t wait for the NRL season to kick off and as loyal NRLCEO fans, we are certain that you can’t wait for your respective drafts – arguably the best time of the year! A big thank you goes to the small NRLCEO Crew that helps put this Guide together – there’s only four of us! Our graphic designer, Scott is the master of the pixel. He’s given the Season Guide a real professional look. If you like his work and want to hire him for some design services, then check out

Table of Contents (Click on any link below to go straight to the page)
Tips From The Board Room 3
Steve Menzies Stole Our Name 4
Interview with the Champion 5
Supporters League 6
Broncos 14
Bulldogs 16
Cowboys 18
Dragons 20
Eels 22
Knights 24
Panthers ...

The Greatest Weekend! >> Sat 4 & Sun 5 February 2017 >> Eden Park, Auckland

THE GREATEST WEEKEND! >> SAT 4 & SUN 5 FEBRUARY 2017 >> EDEN PARK, AUCKLAND NEW ZEALAND REPRESENTATIVE PLAYERS # NAME Country/Rep # NAME Country/Rep # NAME Country/Rep 1 Gerard Beale NZ 17 Konrad Hurrell NZ 33 Elijah Taylor NZ 2 Adam Blair NZ 18 Isaac John NZ 34 Roger Tuivasa-Sheck NZ 3 Kenny Bromwich NZ 19 Shaun Johnson NZ 35 Manu Vatuvei NZ 4 Lewis Brown NZ 20 Sam Kasiano NZ 36 Jared Waerea-Hargreaves NZ 5 Matt Duffie NZ 21 Shaun Kenny-Dowall NZ 37 Dallin Watene Zelezniak NZ 6 Greg Eastwood NZ 22 Issac Luke NZ 38 Suaia Matagi NZ, Samoa 7 Glen Fisiiahi NZ 23 Sika Manu NZ 39 Frank Pritchard NZ, Samoa 8 Kieran Foran NZ 24 Benji Marshall NZ 40 Martin Taupau NZ, Samoa 9 Alex Glenn NZ 25 Ben Matulino NZ 41 Tui Lolohea NZ, Tonga 10 Bryson Goodwin NZ 26 Sam McKendry NZ 42 Manu Ma’u NZ, Tonga 11 Corey Harawira-Naera NZ 27 Jason Nightingale NZ 43 Sam Moa NZ, Tonga 12 Tohu Harris NZ 28 Kodi Nikorima NZ 44 Fuifui Moimoi NZ, Tonga 13 Bronson Harrison NZ 29 Sam Perrett NZ 45 Sio Siua Taueiaho NZ, Tonga 14 Ben Henry NZ 30 Kevin Proctor NZ 46 Jason Taumalolo NZ, Tonga 15 Peta Hiku NZ 31 Jeremy Smith NZ 16 Josh Hoffman NZ 32 Chase Stanley NZ AUSTRALIAN REPRESENTATIVE PLAYERS # NAME Country/Rep # NAME Country/Rep # NAME Country/Rep 1 Matt Bowen AUS, QLD 20 Jason Croker AUS, NSW 39 Brett Morris AUS, NSW 2 Alex Johnston AUS 21 Josh Dugan AUS, NSW 40 Josh Morris AUS, NSW 3 Chris Lawrence AUS 22 Mick Ennis AUS, NSW 41 Matt Moylan AUS, NSW 4 Tom Learoyd-Lahrs AUS, NSW 23 Robbie Farah AUS, NSW 42 Corey Parker AUS, QLD 5 Sione Mata’utia AUS 24 Andrew Fifita -

Attention Kiwis and Samoa Fans! Catch the New Zealand Kiwis Take on Samoa on the 28th of October at Mt Smart Stadium!

Sir Peter Leitch Club Newsletter #190 RLWC 2017 4th October 2017 Only 3 weeks until the first game! Attention Kiwis and Samoa Fans! Catch the New Zealand Kiwis take on Samoa on the 28th of October at Mt Smart Stadium! Get your tickets now at: www.rlwc2017.com

Build-Up To Rugby League World Cup Goes Into Overdrive As Squads Including The Kiwis Get Named This Week By Daniel Fraser New Zealand Media & PR Manager RLWC 2017

UP TO 10 countries are set to announce their squad for the Rugby League World Cup next week, including host nations Australia, New Zealand and Papua New Guinea.

Kiwis coach David Kidwell will name his squad on Thursday, October 5 in Auckland with Australian coach Mal Meninga to have announced his 24-man Kangaroos squad two days earlier at a press conference in Sydney on Tuesday, 3 October.

The announcements of the Kiwis and Kangaroos pave the way for Tonga coach Kristian Woolf, Samoa’s Matt Parrish, Italy’s Cameron Ciraldo and Lebanon’s Brad Fittler to name their squads as some players have dual eligibility for Australia, New Zealand or England.

Naiqama and Daryl Millard. Another former Fiji international Joe Dakuitoga is coach of the Residents team.

Wayne Bennett’s England squad will be announced on Monday, 9 October, following the Super League Grand Final.

Wales coach John Kear and Scotland’s Steve McCormack are set to name their squads on Tuesday, 10 October.

No changes will be permitted after 13 October, when the final squads for the tournament are due to be officially announced by RLWC2017.

The 15th instalment of the Rugby League World Cup

Waller's Gelding Comin' Through

SPORT NEWS

McCrone a Roo in the making: Canberra coach Ricky Stuart dangled a big, fat representative jersey carrot in front of Josh McCrone when convincing him to switch to hooker full-time. Stuart, who left Parramatta to coach the Raiders after the sacking of David Furner, has wasted no time stamping his authority on his new team, training them in 40C heat and sending them to a week-long elite training camp. The former NSW and Australia coach has seen big potential for McCrone at hooker.

Faith in Moses to deliver title: MOSES Wigness has taken over the reins again at Brothers Rugby League club for the 2014 season. Wigness, who coached the club for three years and won the 2010 and 2011 premierships, replaces Anthoiny Castro who had held the position for the past two seasons. The club’s seniors sign-on day is at Airport Tavern today from 1pm. Brothers spokesman John Adams said the club wanted to regain the premiership that they lost to Palmerston last year.

New rules to end mass debates: MANLY coach Geoff Toovey says captain Jamie Lyon won’t have to change his on-field approach to referees despite recent NRL rule changes. Lyon and Canterbury’s Michael Ennis are seen as two of the game’s leading debaters when it comes to arguing with match officials. But they are set to be stymied by a new law that limits where and when captains can speak to refs. Under new rules captains will only be able to speak to refs during a stoppage in play.

Biggest Week of the Year for Rugby League It’s the NRL Grand Final THIS Sunday

Sir Peter Leitch Club AT MT SMART STADIUM, HOME OF THE MIGHTY VODAFONE WARRIORS 28th September 2016 Newsletter #141 It’s the Biggest Week of the year for Rugby League It’s the NRL Grand Final THIS Sunday Kick Off: 9.15PM New Zealand Time Who Wins the 2016 Grand Final? By John Coffey QSM Author of ten rugby league books, Christchurch Press sports writer (44 years), NZ correspondent for Rugby League Week (Australia) and Open Rugby (England) THE AUSTRALIAN airlines will profit from Grand Final weekend, with Melbourne Storm fans heading to Sydney for the NRL decider on Sunday, and Sydney Swans supporters going the other way for the AFL showdown on Saturday. Both have been dominant clubs in the last decade, in contrast to their rivals. The Cronulla Sharks are seeking their first title in their 50th season, while the Western Bulldogs have not played a final since 1961. I know nothing about Aussie Rules but I do know I have mixed feelings about whether I want the Sharks or the Storm to take home the trophy. Here are some arguments for and against: Why I want the Storm to win: More Kiwis: With the Bromwich brothers, Kevin Proctor and Tohu Harris, the Storm have a distinct Kiwis connection, not to mention flying wing Suliasi Vunivalu, who went to school in Auckland. Best team all season: The Storm are the most consistent team, as evidenced by their winning the minor premiership being without champion fullback Billy Slater. The coach: Craig Bellamy has been blessed with some superstars but also has the knack of turning journeymen into influential players.

The Warriors Clash with the Raiders at Canberra Came in the 64th Minute

Sir Peter Leitch Club AT MT SMART STADIUM, HOME OF THE MIGHTY VODAFONE WARRIORS 19th April 2017 Newsletter #166 Vodafone Warriors v Canberra Raiders Ryan Hoffman evades a tackle. Roger Tuivasa-Sheck gets tackled. Shaun Johnson in action. Photos courtesy of www.photosport.nz Matulino Named on Bench by Richard Becht BEN MATULINO’S comeback moves a step closer with his inclusion in the extended 21-man squad for the Vodafone Warriors’ traditional Anzac Day NRL clash against the Storm at AAMI Park in Melbourne next Tuesday (7.00pm kick-off local time; 9.00pm NZT). While the match is still a week away, the Vodafone Warriors are required to submit their squad as usual today. In doing so, head coach Stephen Kearney has included the 28-year-old Matulino in his line-up of eight interchange contenders. The senior prop, four games short of becoming just the club’s fourth 200-game player, has been sidelined following knee surgery. “Ben’s getting closer,” said Kearney. “He has been making good progress since returning to full training and should be ready to play soon.” When he does make his comeback, Matulino will join the handful of players who have appeared across 10 NRL seasons for the Vodafone Warriors – Stacey Jones, Awen Guttenbeil, Lance Hohaia, Jerome Ropati, Manu Vatuvei, Simon Mannering and Sam Rapira. Matulino’s inclusion on the bench is the only change from the 21-man squad initially named for last Saturday’s clash against Canberra. He joins Nathaniel Roache, Charlie Gubb, Ligi Sao, Sam Lisone, Bunty Afoa, Ata Hingano and Mason Lino on the extended bench.

2020 Annual 1 What’s Inside Welcome

2020 Annual 1

Welcome.

Hello and welcome to the first ever Rugby League Project Annual. Yearbooks of the past have always been a physical book detailing every minutiae of the particular season, packed full of great memories, statistics and history.

This yearbook is a twist on the usual yearbook as it not only looks at the Rugby League season of 2020, but most importantly, it celebrates the immensely brilliant, far-reaching and diverse community of independent Rugby League content creators, from Australia, New Zealand, England and even Canada!

This is not about one individual website, writer or creator. This is about a community of fans who are uniquely skilled and talented and who all add to the match day experience for supporters of Rugby League around the world, in ways that the mainstream media simply cannot.

What’s Inside
Welcome 2
Andrew Ferguson: Rugby League & the ‘Spanish Flu’ 3
Nick Tedeschi: Making the Trains Run on Time 4
Suzie Ferguson: Being a rugby league fan in lockdown 5
Will Evans: Let’s Gone Warriors 6-7
RL Eye Test: How the game changed statistically 8-10
Jason Oliver & Oscar Pannifex: Take the Repeat Set: NRL Grand Final 11-13
Ben Darwin: Governance vs Performance 14-15
2020 NRL Season & Grand Final 16-18
2020 State of Origin series 19-21
NRL Club Reviews: Brisbane 22-23, Canberra 24-25, Canterbury-Bankstown 26-27, Cronulla-Sutherland 28-29, Gold Coast 30-31, Manly-Warringah 32-33, Melbourne 34-35, Newcastle 36-37, North Queensland 38-39, Parramatta 40-41, Penrith 42-43

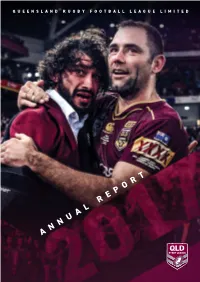

Annual Report Snapshot

QUEENSLAND RUGBY FOOTBALL LEAGUE LIMITED ANNUAL REPORT SNAPSHOT

60,857: The number of players registered with a Queensland club in 2017.
4646: The number of registered female club players this year increased by 21%.
$151,000: Revenue generated by clubs that hosted Country Week matches in Round 20 of the Intrust Super Cup.
11,260: The Intrust Super Cup decider between PNG Hunters and Sunshine Coast Falcons attracted a record grand final crowd.
961: The overall number of Intrust Super Cup games played by members of the 2017 Melbourne Storm and North Queensland Cowboys Grand Final teams.
14,401: Maroon Membership grew significantly in 2017.
39,000: The number of people who attended the Maroon Festival in Brisbane in the lead up to State of Origin Game I.
1,307,508: The number of social media followers on QRL platforms.

CONTENTS
OUR LEADERS 6: Board of Directors 6, Chairman’s Message 7, Managing Director’s Message 8, The Coach: Kevin Walters 9
OUR FRAMEWORK 10: Renita Garard 12, Government Partnerships 13
DELIVERING OUR GAME 40: Digital 42, Marketing & Brand 44, Maroon Membership 46, Maroon Festival 47, Attendances 48, Media & Communications 49, Television Ratings 50, Partners
OUR COMMUNITY 52: Volunteers 54, Acknowledgement 55, Charities & Donations 56, Wellbeing & Education 57, Zaidee’s Rainbow Foundation 58, Country Week 59, Fan Day 60, QRL History Committee 61
OUR REPRESENTATIVE TEAMS 62: XXXX Queensland Maroons 64, XXXX Queensland Residents 66, Queensland Under 20 67, Queensland Under 18 68
LOOKING FORWARD TO 2018 74: On Field 74, Off Field 75
GOVERNANCE & FINANCIALS 76

Kyrgios Eyes Higher Rank

WEDNESDAY MARCH 30 2016 SPORT 33

Geitz buzzing about season

Team GP W D L GF GA Pts
Burton Albion 39 23 6 10 50 33 75
Wigan Athletic 39 20 14 5 65 35 74
Walsall 37 19 11 7 57 38 68
Gillingham 38 19 9 10 65 44 66
Millwall 39 19 8 12 60 44 65
Bradford City 39 18 10 11 45 38 64
Barnsley 39 19 5 15 60 48 62
Rochdale 39 16 9 14 55 50 57
Coventry 38 15 11 12 59 43 56
Scunthorpe 39 15 11 13 49 43 56
Port Vale 40 15 11 14 45 47 56
Sheffield United 38 15 10 13 55 50 55
Southend United 38 15 10 13 50 49 55
Peterborough Utd 38 15 6 17 65 61 51
Swindon Town 38 14 8 16 56 63 50
Bury 39 13 11 15 48 62 50
Shrewsbury Town 38 12 10 16 47 57 46
Chesterfield 39 12 7 20 48 60 43
Blackpool 40 11 9 20 37 50 42

Basketball NBA
CHICAGO BULLS 100 Atlanta Hawks 102; MEMPHIS GRIZZLIES 87 San Antonio Spurs 101; MINNESOTA TIMBERWOLVES 121 Phoenix Suns 116; NEW ORLEANS PELICANS 99 New York Knicks 91; DENVER NUGGETS 88 Dallas Mavericks 97; UTAH JAZZ 123 L.A. Lakers 75; PORTLAND TRAIL BLAZERS 105 Sacramento Kings 93; MIAMI HEAT 110 Brooklyn Nets 99; TORONTO RAPTORS 100 Oklahoma City Thunder 119; L.A. CLIPPERS 114 Boston Celtics 90

Eastern Conference Atlantic Division
Team W L PCT GB
Toronto Raptors 49 24 0.671 -
Boston Celtics 43 31 0.581 6.5
New York Knicks 30 45 0.400 20.0
Brooklyn Nets 21 52 0.288 28.0

Rugby League NRL ROUND 5 TEAMS (all times local):
MANLY SEA EAGLES v SOUTH SYDNEY Thursday at Brookvale Oval, 8.05pm
SEA EAGLES: Brett Stewart, Jorge Taufua, Jamie Lyon (c), Steve Matai, Tom Trbojevic, Dylan Walker, Apisai Koroisau, Nate Myles, Matt Parcell, Brenton Lawrence, Martin Taupau, Feleti Mateo, Jake Trbojevic.

Dunedin These of Course Are All Fantastic Initiatives, But

ISSUE 11 GET SWEET LOOT WITH A 2016 ONECARD ACTIVATE YOURS ONLINE AT R1.CO.NZ/ONECARD FLASH YOUR 2016 ALTO CAFE PITA PIT - GEORGE ST Any two breakfasts for the price of one Buy any petita size pita and get ONECARD AT ANY Monday - Friday, 7am - 11.30am upgraded to a regular OF THESE FINE BEAUTÉ SKIN BAR & PIZZA BELLA BEAUTY CLINIC Lunch size pizza & 600ml Coke range for $10 BUSINESSES AND $45 brazilians, $20 brow shape, $45 spray - or - any waffle and coffee for $10 tans + 10% off any full price service or product SAVE CA$H MONEY! POPPA’S PIZZA BENDON Free garlic bread with any regular or large AMAZON SURF, Free wash bag with purchase over $50* pizza* SKATE & DENIM CRUSTY CORNER RAPUNZEL’S HAIR DESIGN $5 BLTs, Monday - Friday $99 for pre-treatment, 1/2 head of foils or 10% off full-priced items* global colour, blow wave & H2D finish ESCAPE - or - 20% off cuts BOWL LINE 20% off regular-price games* 2 games of bowling for $15* RELOAD JUICE BAR FILADELFIOS GARDENS Buy any small juice, smoothie, or coffee and 1x medium pizza, 1x fries, and 2x pints of Fillies upsize to a large for free* CAPERS CAFE Draught or fizzy for $40, Sun-Thurs 2 for 1 gourmet pancakes* ROB ROY DAIRY FRIDGE FREEZER ICEBOX Free upgrade to a waffle cone every Monday COSMIC 15% discount off the regular retail price & Tuesday 10% off all in-store items* GOVERNOR’S CAFE SHARING SHED $6 for a slice, scone, or muffin $5 off all tertiary-student hair cuts LUMINO THE DENTISTS and a medium coffee $69 new patient exams and SUBWAY HALLENSTEIN BROTHERS Buy any six-inch meal deal &