A Study of Gaps in Attack Analysis 5B

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Address Space Layout Permutation (ASLP): Towards Fine-Grained Randomization of Commodity Software

Address Space Layout Permutation (ASLP): Towards Fine-Grained Randomization of Commodity Software. Chongkyung Kil,∗ Jinsuk Jun,∗ Christopher Bookholt,∗ Jun Xu,† Peng Ning∗. Department of Computer Science, North Carolina State University∗; Google, Inc.† {ckil, jjun2, cgbookho, pning}@ncsu.edu [email protected]

Abstract: Address space randomization is an emerging and promising method for stopping a broad range of memory corruption attacks. By randomly shifting critical memory regions at process initialization time, address space randomization converts an otherwise successful malicious attack into a benign process crash. However, existing approaches either introduce insufficient randomness, or require source code modification. While insufficient randomness allows successful brute-force attacks, as shown in recent studies, the required source code modification prevents this effective method from being used for commodity software, which is the major source of exploited vulnerabilities on the Internet.

[...] a memory corruption attack), an attacker attempts to alter program memory with the goal of causing that program to behave in a malicious way. The result of a successful attack ranges from system instability to execution of arbitrary code. A quick survey of US-CERT Cyber Security Alerts between mid-2005 and 2004 shows that at least 56% of the attacks have a memory corruption component [24]. Memory corruption vulnerabilities are typically caused by the lack of input validation in the C programming language, with which the programmers are offered the freedom to decide when and how to handle inputs. This flexibility often results in improved application performance. However, the number of vulnerabilities caused by failures of input validation indicates that programming errors of this

Security Enhancements in Red Hat Enterprise Linux (Beside Selinux)

Security Enhancements in Red Hat Enterprise Linux (beside SELinux). Ulrich Drepper, Red Hat, Inc. [email protected] December 9, 2005.

Abstract: Bugs in programs are unavoidable. Programs developed using C and C++ are especially in danger. If bugs cannot be avoided the next best thing is to limit the damage. This article will show improvements in Red Hat Enterprise Linux which achieve just that.

1 Introduction: Security problems are one of the biggest concerns in the industry today. Providing services on networked computers which are accessible through the intranet and/or Internet potentially to untrusted individuals puts the installation at risk. A number of changes have been made to the Linux OS which help a lot to mitigate the risks. Upcoming Red Hat Enterprise Linux versions will feature the SELinux extensions, originally developed by the National Security Agency (NSA), with whom Red Hat now collaborates to productize the developed code. SELinux means a major change in Linux and is a completely separate topic by itself.

[...] These problems are harder to protect against since normally all of the OS's functionality is available and the attacker might even be able to use self-compiled code. Remotely exploitable problems are more serious since the attacker can be anywhere if the machine is available through the Internet. On the plus side, only applications accessible through the network services provided by the machine can be exploited, which limits the range of exploits. Further limitations are the attack vectors. Usually remote attackers can influence applications only by providing specially designed input. This often means creating buffer overflows, i.e., situations where the data is

Telecom Sud Paris

OS and Systems Security. Telecom Sud Paris. Aurélien Wailly, Emmanuel Gras. Amazon Dublin, ANSSI Paris. June 2, 2017.

Presenters

Emmanuel Gras
• Agence nationale de la sécurité des systèmes d'information (ANSSI), for too long
• IT security inspections office
• http://pro.emmanuelgras.com
• [email protected]

Aurélien Wailly
• At Amazon for 2 years
• Thesis: End-to-end Security Architecture and Self-Protection Mechanisms for Cloud Computing Environments
• OS security, reverse engineering, incident response
• http://aurelien.wail.ly
• [email protected]

A. Wailly, E. Gras (Amazon / ANSSI), OS and Systems Security, June 2, 2017, 2 / 259

Systems and OS Security

Objectives
• How modern operating systems work, particularly from a security standpoint
• Vulnerabilities, exploitation, compromise
• In security, understanding how a system works is essential to exploiting it

Targets
• Linux; the principles also hold for UNIX systems
• Windows

Outline
1 Introduction
2 OS, CPU, and security
3 Memory, segmentation, and paging (and security!)
4 User security - UNIX
5 User security - Windows
6 Binary analysis - concepts and tools
7 Application vulnerabilities
8 OS protection

Your OS? www.dilbert.com/

What will not be covered
Security is a vast subject!
• Networking
• Viruses and malware
• Advanced software techniques: advanced shellcoding, software protection
• Web exploitation: cross-site scripting, SQL injection

Linux-Professional-Institute-LPI-303

LPIC-3: Linux Enterprise Professional Certification. LPIC-3 303: Security. LPIC-3 is a professional certification program that covers enterprise Linux specialties. LPIC-3 303 covers administering Linux enterprise-wide with an emphasis on Security. To become LPIC-3 certified, a candidate with an active LPIC-1 and LPIC-2 certification must pass at least one of the following specialty exams. Upon successful completion of the requirements, they will be entitled to the specialty designation: LPIC-3 Specialty Name. For example, LPIC-3 Virtualization & High Availability. Specialties: • 300: Mixed Environment • 303: Security • 304: Virtualization and High Availability

TOPIC 325: CRYPTOGRAPHY
325.1 X.509 Certificates and Public Key Infrastructures (5)
Candidates should understand X.509 certificates and public key infrastructures. They should know how to configure and use OpenSSL to implement certification authorities and issue SSL certificates for various purposes.
Key knowledge areas:
• Understand X.509 certificates, X.509 certificate lifecycle, X.509 certificate fields and X.509v3 certificate extensions

[...]
• Configure Apache HTTPD with mod_ssl to provide HTTPS service, including SNI and HSTS
• Configure Apache HTTPD with mod_ssl to authenticate users using certificates
• Configure Apache HTTPD with mod_ssl to provide OCSP stapling
• Use OpenSSL for SSL/TLS client and server tests

325.3 Encrypted File Systems (3)
Candidates should be able to set up and configure encrypted file systems.

[...] that performs DNSSEC validation on behalf of its clients
• Key Signing Key, Zone Signing Key, Key Tag
• Key generation, key storage, key management and key rollover
• Maintenance and re-signing of zones
• Use DANE to publish X.509 certificate information in DNS
• Use TSIG for secure communication with BIND

TOPIC 326: HOST SECURITY
326.1 Host Hardening (3)

6.2 Release Notes

Red Hat Enterprise Linux 6, 6.2 Release Notes. Release Notes for Red Hat Enterprise Linux 6.2, Edition 2. Last Updated: 2017-10-24. Red Hat Engineering Content Services.

Legal Notice: Copyright © 2011 Red Hat, Inc. This document is licensed by Red Hat under the Creative Commons Attribution-ShareAlike 3.0 Unported License. If you distribute this document, or a modified version of it, you must provide attribution to Red Hat, Inc. and provide a link to the original. If the document is modified, all Red Hat trademarks must be removed. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux® is the registered trademark of Linus Torvalds in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries. Node.js® is an official trademark of Joyent. Red Hat Software Collections is not formally related to or endorsed by the official Joyent Node.js open source or commercial project.

New Security Enhancements in Red Hat Enterprise Linux V.3, Update 3

New Security Enhancements in Red Hat Enterprise Linux v.3, update 3. By Arjan van de Ven. August 2004.

Abstract: This whitepaper describes the new security features that have been added to update 3 of Red Hat Enterprise Linux v.3: ExecShield and support for NX technology.

Table of Contents: Introduction; Types of Security Holes; Buffer Overflows; Countering Buffer Overflows; Randomization; Remaining Randomization: PIE Binaries; Compatibility; How Well Does it Work?; Future Work.

Introduction: The world of computer security has changed dramatically in the last few years. Network security used to be about one dedicated hacker trying to get into one government computer, but now it is often about automated mass attacks. The SQL Slammer and Code Red worms were the first wide-scale computer security incidents to get mainstream press coverage. Linux has had similar, less-invasive worms in the past, such as the Slapper worm of 2002. Another relatively new phenomenon is that compromised computers are primarily being used for other purposes, including sending spam or participating in Distributed Denial of Service (DDoS) attacks. A contributing factor to the mass-compromise problem is that a large portion of users and system administrators generally do not apply the security fixes that are provided by the operating system vendor. This leaves a significant number of vulnerable machines connected to the Internet at all times. Providing security updates after the fact, however, is not sufficient.

Enabling Address Space Layout Randomization for SGX Programs

SGX-Shield: Enabling Address Space Layout Randomization for SGX Programs. Jaebaek Seo∗§, Byounyoung Lee†§, Seongmin Kim∗, Ming-Wei Shih‡, Insik Shin∗, Dongsu Han∗, Taesoo Kim‡. ∗KAIST, †Purdue University, ‡Georgia Institute of Technology. {jaebaek, dallas1004, ishin, dongsu_han}@kaist.ac.kr, [email protected], {mingwei.shih, taesoo}@gatech.edu

Abstract: Traditional execution environments deploy Address Space Layout Randomization (ASLR) to defend against memory corruption attacks. However, Intel Software Guard Extension (SGX), a new trusted execution environment designed to serve security-critical applications on the cloud, lacks such an effective, well-studied feature. In fact, we find that applying ASLR to SGX programs raises non-trivial issues beyond simple engineering for a number of reasons: 1) SGX is designed to defeat a stronger adversary than the traditional model, which requires the address space layout to be hidden from the kernel; 2) the limited memory uses in SGX programs present a new challenge in providing a sufficient degree of entropy; 3) remote attestation conflicts with the dynamic relocation required for ASLR; and 4) the SGX

[...] system and hypervisor. It also offers hardware-based measurement, attestation, and enclave page access control to verify the integrity of its application code. Unfortunately, we observe that two properties, namely, confidentiality and integrity, do not guarantee the actual security of SGX programs, especially when traditional memory corruption vulnerabilities, such as buffer overflow, exist inside SGX programs. Worse yet, many existing SGX-based systems tend to have a large code base: an entire operating system as library in Haven [12] and a default runtime library in SDKs for Intel SGX [28, 29].

Guide to the Secure Configuration of Red Hat Enterprise Linux 5

Guide to the Secure Configuration of Red Hat Enterprise Linux 5. Revision 4.2, August 26, 2011. Operating Systems Division Unix Team of the Systems and Network Analysis Center, National Security Agency, 9800 Savage Rd. Suite 6704, Ft. Meade, MD 20755-6704.

Warnings: Do not attempt to implement any of the recommendations in this guide without first testing in a non-production environment. This document is only a guide containing recommended security settings. It is not meant to replace well-structured policy or sound judgment. Furthermore this guide does not address site-specific configuration concerns. Care must be taken when implementing this guide to address local operational and policy concerns. The security changes described in this document apply only to Red Hat Enterprise Linux 5. They may not translate gracefully to other operating systems. Internet addresses referenced were valid as of 1 Dec 2009.

Trademark Information: Red Hat is a registered trademark of Red Hat, Inc. Any other trademarks referenced herein are the property of their respective owners.

Change Log: Revision 4.2 is an update of Revision 4.1 dated February 28, 2011. Added section 2.5.3.1.3, Disable Functionality of IPv6 Kernel Module Through Option. Added discussion to section 2.5.3.1.1, Disable Automatic Loading of IPv6 Kernel Module, indicating that this is no longer the preferred method for disabling IPv6. Added section 2.3.1.9, Set Accounts to Disable After Password Expiration. Revision 4.1 is an update of Revision 4 dated September 14, 2010. Added section 2.2.2.6, Disable All GNOME Thumbnailers if Possible.

Linux Exploit Mitigation

Linux Exploit Mitigation. Dobin Rutishauser. V1.3, March 2016. Compass Security Schweiz AG, Werkstrasse 20, Postfach 2038, CH-8645 Jona. Tel +41 55 214 41 60, Fax +41 55 214 41 61, [email protected], www.csnc.ch

About me
• At Compass Security since 2011
• Spoke at OWASP Zürich, Bsides Vienna
• On the internet: www.broken.ch, www.haking.ch, www.r00ted.ch, phishing.help, @dobinrutis, github.com/dobin

© Compass Security Schweiz AG www.csnc.ch Page 2

About this presentation
• To understand exploit mitigations, you need to understand exploit techniques
• I'll lead you all the way, from zero
• In 45 minutes!

About this presentation
• Content of 8 hours for BFH
• It will get very technical
• Not possible to: cover all the topics, and be easy to understand, and handle all the details
• This should give more of an ... overview
• Don't worry if you don't understand everything

Overview of the presentation

Overview
1. Memory Layout
2. Stack
3. Exploit Basics
4. Exploit Mitigation
• DEP
• Stack Protector
• ASLR
5. Contemporary Exploiting
6. Hardening
7. Container
8. Kernel

Exploit Intention
Attacker wants:
• Execute his own code on the server
• rm -rf /
• Connect-back shellcode
• echo "sysadmin:::" >> /etc/passwd

Exploit Requirements
Attacker needs:
• Be able to upload code

Reportable Items for Linux®

DSET 2.2 Reportable items for Linux®. Dell System E-Support Tool Version 2.2, Reportable Items for Linux.

Information in this document is subject to change without notice. © 2011 Dell Inc. All rights reserved. Reproduction of these materials in any manner whatsoever without the written permission of Dell Inc. is strictly forbidden. Trademarks used in this text: Dell™, the DELL logo, PowerEdge™, OpenManage™ are trademarks of Dell Inc. Intel® is a registered trademark of Intel Corporation in the U.S. and other countries. Red Hat Enterprise Linux® and Enterprise Linux® are registered trademarks of Red Hat, Inc. in the United States and/or other countries. SUSE™ is a trademark of Novell Inc. in the United States and other countries. VMware® is a registered trademark of VMWare, Inc. in the United States or other countries. Other trademarks and trade names may be used in this publication to refer to either the entities claiming the marks and names or their products. Dell Inc. disclaims any proprietary interest in trademarks and trade names other than its own.

Initial Release Date: 23rd May 2011. Last Revised Date: 29th June 2011. Revision Number: A01.

List of Reportable Items: 476 reported items (varies by system), 1401 log files collected. The reportable items have been divided into the four sections listed below. Each section corresponds to notable data categories viewable in the DSET graphical user interface (GUI).
• System Items List: 194 unique items collected
• Storage Items List: 88 unique items collected
• Software Items List: 186 unique items collected
• Log Files List: 1401 log files (if present) collected from:
  o RedHat Enterprise Linux 4.0
  o RedHat Enterprise Linux 5.0
  o SUSE Linux Enterprise Server 10
  o SUSE Linux Enterprise Server 11
  o VMWare ESX 4.0 and ESX 4.1

This document also lists other configuration or log files that may be collected but are only viewable by navigating to the DSET report ZIP archive contents.

End-To-End Verification of Memory Isolation

Secure System Virtualization: End-to-End Verification of Memory Isolation. HAMED NEMATI. Doctoral Thesis, Stockholm, Sweden 2017.

TRITA-CSC-A-2017:18, ISSN 1653-5723, ISRN-KTH/CSC/A--17/18-SE, ISBN 978-91-7729-478-8. KTH Royal Institute of Technology, School of Computer Science and Communication, SE-100 44 Stockholm, SWEDEN.

Academic dissertation which, with the permission of KTH Royal Institute of Technology, is submitted for public examination for the degree of Doctor of Technology in Computer Science on Friday, 20 October 2017 at 14:00 in Kollegiesalen, KTH Royal Institute of Technology, Brinellvägen 8, Stockholm. © Hamed Nemati, October 2017. Printed by: Universitetsservice US AB.

Abstract: Over the last years, security kernels have played a promising role in reshaping the landscape of platform security on today's ubiquitous embedded devices. Security kernels, such as separation kernels, enable constructing high-assurance mixed-criticality execution platforms. They reduce the software portion of the system's trusted computing base to a thin layer, which enforces isolation between low- and high-criticality components. The reduced trusted computing base minimizes the system attack surface and facilitates the use of formal methods to ensure functional correctness and security of the kernel. In this thesis, we explore various aspects of building a provably secure separation kernel using virtualization technology. In particular, we examine techniques related to the appropriate management of the memory subsystem. Once these techniques were implemented and functionally verified, they provide a reliable foundation for application scenarios that require strong guarantees of isolation and facilitate formal reasoning about the system's overall security. We show how the memory management subsystem can be virtualized to enforce isolation of system components.

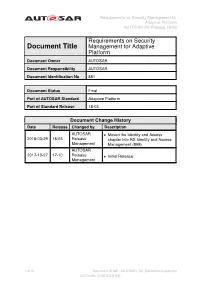

Requirements on Security Management for Adaptive Platform AUTOSAR AP Release 18-03

Requirements on Security Management for Adaptive Platform. AUTOSAR AP Release 18-03.

Document Title: Requirements on Security Management for Adaptive Platform
Document Owner: AUTOSAR
Document Responsibility: AUTOSAR
Document Identification No: 881
Document Status: Final
Part of AUTOSAR Standard: Adaptive Platform
Part of Standard Release: 18-03

Document Change History (Date, Release, Changed by, Description)
2018-03-29, 18-03, AUTOSAR Release Management: Moved the Identity and Access chapter into RS Identity and Access Management (899)
2017-10-27, 17-10, AUTOSAR Release Management: Initial Release

Document ID 881: AUTOSAR_RS_SecurityManagement. AUTOSAR CONFIDENTIAL.

Disclaimer: This work (specification and/or software implementation) and the material contained in it, as released by AUTOSAR, is for the purpose of information only. AUTOSAR and the companies that have contributed to it shall not be liable for any use of the work. The material contained in this work is protected by copyright and other types of intellectual property rights. The commercial exploitation of the material contained in this work requires a license to such intellectual property rights. This work may be utilized or reproduced without any modification, in any form or by any means, for informational purposes only. For any other purpose, no part of the work may be utilized or reproduced, in any form or by any means, without permission in writing from the publisher. The work has been developed for automotive applications only. It has neither been developed, nor tested for non-automotive applications. The word AUTOSAR and the AUTOSAR logo are registered trademarks.