Natural Language Processing As a Predictive Feature in Financial Forecasting

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

THE CASE of CLOS Efraim Benmelech Jennifer Dlugosz Victoria

NBER WORKING PAPER SERIES

SECURITIZATION WITHOUT ADVERSE SELECTION: THE CASE OF CLOS

Efraim Benmelech, Jennifer Dlugosz, Victoria Ivashina

Working Paper 16766 http://www.nber.org/papers/w16766

NATIONAL BUREAU OF ECONOMIC RESEARCH, 1050 Massachusetts Avenue, Cambridge, MA 02138, February 2011

We thank Paul Gompers, Jeremy Stein, Greg Nini, Gary Gorton, Charlotte Ostergaard, James Vickery, Paul Willen, seminar participants at the Federal Reserve Bank of New York, the Federal Reserve Board, Harvard University, UNC, London School of Economics, Wharton, University of Florida, Berkeley, NERA, the World Bank, the Brattle Group, and participants at the American Finance Association annual meeting, the Yale Conference on Financial Crisis, and Financial Intermediation Society Annual Meeting for helpful comments. Jessica Dias and Kate Waldock provided excellent research assistance. We acknowledge research support from the Division of Research at Harvard Business School. We are especially grateful to Markit for assisting us with CDS data. The views expressed herein are those of the authors and do not necessarily reflect the views of the National Bureau of Economic Research.

NBER working papers are circulated for discussion and comment purposes. They have not been peer-reviewed or been subject to the review by the NBER Board of Directors that accompanies official NBER publications.

© 2011 by Efraim Benmelech, Jennifer Dlugosz, and Victoria Ivashina. All rights reserved. Short sections of text, not to exceed two paragraphs, may be quoted without explicit permission provided that full credit, including © notice, is given to the source.

Securitization without Adverse Selection: The Case of CLOs. Efraim Benmelech, Jennifer Dlugosz, and Victoria Ivashina. NBER Working Paper No.

What Happened to the Quants in August 2007?∗

What Happened To The Quants In August 2007?∗ Amir E. Khandani† and Andrew W. Lo‡ First Draft: September 20, 2007 Latest Revision: September 20, 2007

Abstract: During the week of August 6, 2007, a number of high-profile and highly successful quantitative long/short equity hedge funds experienced unprecedented losses. Based on empirical results from TASS hedge-fund data as well as the simulated performance of a specific long/short equity strategy, we hypothesize that the losses were initiated by the rapid unwinding of one or more sizable quantitative equity market-neutral portfolios. Given the speed and price impact with which this occurred, it was likely the result of a sudden liquidation by a multi-strategy fund or proprietary-trading desk, possibly due to margin calls or a risk reduction. These initial losses then put pressure on a broader set of long/short and long-only equity portfolios, causing further losses on August 9th by triggering stop-loss and de-leveraging policies. A significant rebound of these strategies occurred on August 10th, which is also consistent with the sudden liquidation hypothesis. This hypothesis suggests that the quantitative nature of the losing strategies was incidental, and the main driver of the losses in August 2007 was the firesale liquidation of similar portfolios that happened to be quantitatively constructed. The fact that the source of dislocation in long/short equity portfolios seems to lie elsewhere (apparently in a completely unrelated set of markets and instruments) suggests that systemic risk in the hedge-fund industry may have increased in recent years.

What Do Fund Flows Reveal About Asset Pricing Models and Investor Sophistication?

What do fund flows reveal about asset pricing models and investor sophistication? Narasimhan Jegadeesh and Chandra Sekhar Mangipudi☆ Goizueta Business School Emory University January, 2019 ☆Narasimhan Jegadeesh is the Dean’s Distinguished Professor at the Goizueta Business School, Emory University, Atlanta, GA 30322, ph: 404-727-4821, email: [email protected]., Chandra Sekhar Mangipudi is a Doctoral student at Emory University, email: [email protected] . We would like to thank Jonathan Berk, Kent Daniel, Amit Goyal, Cliff Green, Jay Shanken, and the seminar participants at the 2018 SFS Cavalcade North America, Louisiana State University and Virginia Tech for helpful discussions, comments, and suggestions. What do fund flows reveal about asset pricing models and investor sophistication? Recent literature uses the relative strength of the relation between fund flows and alphas with respect to various multifactor models to draw inferences about the best asset pricing model and about investor sophistication. This paper analytically shows that such inferences are tenable only under certain assumptions and we test their empirical validity. Our results indicate that any inference about the true asset pricing model based on alpha-flow relations is empirically untenable. The literature uses a multifactor model that includes all factors as the benchmark to assess investor sophistication. We show that the appropriate benchmark excludes some factors when their betas are estimated from the data, but even with this benchmark the rejection of investor sophistication in the literature is empirically tenable. An extensive literature documents that net fund flows into mutual funds are driven by funds’ past performance. For example, Patel, Zeckhauser, and Hendricks (1994) document that equity mutual funds with bigger returns attract more cash inflows and they offer various behavioral explanations for this phenomenon. -

Skin Or Skim? Inside Investment and Hedge Fund Performance

Skin or Skim? Inside Investment and Hedge Fund Performance∗ Arpit Gupta† Kunal Sachdeva‡ September 7, 2017

Abstract: Using a comprehensive and survivor bias-free dataset of US hedge funds, we document the role that inside investment plays in managerial compensation and fund performance. We find that funds with greater investment by insiders outperform funds with less “skin in the game” on a factor-adjusted basis, exhibit greater return persistence, and feature lower fund flow-performance sensitivities. These results suggest that managers earn outsize rents by operating trading strategies further from their capacity constraints when managing their own money. Our findings have implications for optimal portfolio allocations of institutional investors and models of delegated asset management.

JEL classification: G23, G32, J33, J54

Keywords: hedge funds, ownership, managerial skill, alpha, compensation

∗We are grateful to our discussant Quinn Curtis and for comments from Yakov Amihud, Charles Calomiris, Kent Daniel, Colleen Honigsberg, Sabrina Howell, Wei Jiang, Ralph Koijen, Anthony Lynch, Tarun Ramadorai, Matthew Richardson, Paul Tetlock, Stijn Van Nieuwerburgh, Jeffrey Wurgler, and seminar participants at Columbia University (GSB), New York University (Stern), the NASDAQ DRP Research Day, the Thirteenth Annual Penn/NYU Conference on Law and Finance, Two Sigma, IRMC 2017, the CEPR ESSFM conference in Gerzensee, and the Junior Entrepreneurial Finance and Innovation Workshop. We thank HFR, CISDM, eVestment, BarclaysHedge, and Eurekahedge for data that contributed to this research. We gratefully acknowledge generous research support from the NYU Stern Center for Global Economy and Business and Columbia University. †NYU Stern School of Business, Email: [email protected] ‡Columbia Business School, Email: [email protected]

1 Introduction

Delegated asset managers are commonly seen as being compensated through fees imposed on outside investors.

FT PARTNERS RESEARCH 2 Fintech Meets Alternative Investments

FT PARTNERS FINTECH INDUSTRY RESEARCH

FinTech Meets Alternative Investments: Innovation in a Burgeoning Asset Class. March 2020. DRAFT ©2020

FT Partners | Focused Exclusively on FinTech

[Graphic: FT Partners’ Advisory Capabilities and FT Partners’ FinTech Industry Research]

Named Silicon Valley’s #1 FinTech Banker (2016) and ranked #2 Overall by The Information. Ranked #1 Most Influential Person in all of FinTech in Institutional Investors “FinTech Finance 40”. Numerous Awards for Transaction Excellence including “Deal of the Decade”.

• Financial Technology Partners ("FT Partners") was founded in 2001 and is the only investment banking firm focused exclusively on FinTech

• FT Partners regularly publishes research highlighting the most important transactions, trends and insights impacting the global Financial Technology landscape. Our unique insight into FinTech is a direct result of executing hundreds of transactions in the sector combined with over 18 years of exclusive focus on Financial Technology

I. Executive Summary

II. Industry Overview and The Rise of Alternative Investments

i. An Introduction to Alternative Investments

ii. Trends Within the Alternative Investment Industry

III. Executive Interviews

IV.

Stronger Together

MAKING STONY BROOK STRONGER, TOGETHER

From state-of-the-art healthcare facilities for Long Island’s children to investments in the greatest minds in medicine and in our remarkable students and programs, you make us stronger in every way.

STONY BROOK CHILDREN’S HOSPITAL EMERGENCY DEPARTMENT EXPANSION GOAL MET

Joining dozens of community friends, three Knapp foundations help complete $10 million philanthropic goal

Since it opened in 2010, Stony Brook Children’s Pediatric Emergency Department has seen a 50 percent increase in patient volume, creating an emergency of its own: an urgent need for more space, specialists and services to care for the 25,000 children seen each year.

Now, thanks to two dozen area families, and a collective $4 million gift from the Knapp Swezey, Gardener and Island Outreach foundations, Stony Brook Children’s is one step closer to creating an expanded pediatric emergency facility to serve Suffolk County’s children and the families who love them.

“The Pediatric Emergency Department has been a remarkably successful program in terms of the quality of care we’re providing and how it’s resonated with the community,” said Chair of Pediatrics Carolyn Milana, MD. “Now, thanks to the Knapp family foundations and our surrounding community of generous friends and grateful patients, we will be able to move ahead to ensure we can continue to meet the need now and into the future.”

“Time and again, the Knapp families and their foundation boards have shown such incredible commitment to the people of Long Island by their personal and philanthropic engagement with Stony Brook,” said Deborah Lowen-Klein, interim vice president for Advancement.

The Hedge Fund Industry in New York City Alternative Assets

The Facts: The Hedge Fund Industry in New York City

alternative assets. intelligent data.

New York City is home to over a third of all the assets in the hedge fund industry today. We provide a comprehensive overview of the hedge fund industry in New York City, including New York City-based investors’ plans for the next 12 months, the number of hedge fund launches each year, fund terms and conditions, the largest hedge fund managers and performance.

Fig. 1: Top 10 Leading Cities by Hedge Fund Assets under Management ($bn) (As at 31 December 2014)

| Manager City Headquarters | Assets under Management ($bn) |
|---|---|
| New York City | 1,024 |
| London | 395 |
| Boston | 171 |
| Westport | 168 |
| Greenwich | 164 |
| San Francisco | 87 |
| Chicago | 75 |
| Hong Kong | 61 |
| Dallas | 52 |
| Stockholm | 39 |

Source: Preqin Hedge Fund Analyst

[Fig. 2: Breakdown of New York City-Based Hedge Fund Investors by Type. Categories: Fund of Hedge Funds Manager, Foundation, Private Sector Pension Fund, Family Office, Endowment Plan, Wealth Manager, Insurance Company, Asset Manager, Other. Source: Preqin Hedge Fund Investor Profiles]

[Fig. 3: Breakdown of Strategies Sought by New York City-Based Hedge Fund Investors over the Next 12 Months. Vertical axis: Proportion of Fund Searches. Source: Preqin Hedge Fund Investor Profiles]

[Fig. 4: Breakdown of Structures Sought by New York City-Based Hedge Fund Investors over the Next 12 Months. Vertical axis: Proportion of Fund Searches. Source: Preqin Hedge Fund Investor Profiles]

Fig.

David Eisenbud Professorship

For Your Information

News from MSRI

MSRI Receives Major Gift

James H. Simons, mathematician, philanthropist, and one of the world’s most successful hedge fund managers, announced in May 2007 a US$10 million gift from the Simons Foundation to the Mathematical Sciences Research Institute (MSRI), the largest single cash pledge in the institute’s twenty-five-year history. The new funding is also the largest gift of endowment made to a U.S.-based institute dedicated to mathematics.

The monies will create a US$5 million endowed chair, the “Eisenbud Professorship”, named for David Eisenbud, director of MSRI, to support distinguished visiting professors at MSRI. The gift comes as Eisenbud nears the end of his term as MSRI director (from July 1997 to July 2007) and as MSRI prepares to celebrate its twenty-fifth anniversary in the late fall/winter 2007–08.

wife, Marilyn, Simons manages the Simons Foundation, a charitable organization devoted to scientific research. The Simonses live in Manhattan.

Simons is president of Renaissance Technologies Corporation, a private investment firm dedicated to the use of mathematical methods. Renaissance presently has over US$30 billion under management. Previously he was chairman of the mathematics department at the State University of New York at Stony Brook. Earlier in his career he was a cryptanalyst at the Institute of Defense Analyses in Princeton and taught mathematics at the Massachusetts Institute of Technology and Harvard University.

Simons holds a B.S. in mathematics from MIT and a Ph.D. in mathematics from the University of California, Berkeley. His scientific research was in the area of geometry and topology. He received the AMS Veblen Prize in Geometry in 1975 for work that involved a recasting of

The Evolution of Algorithmic Classes (DR17)

The Evolution of Algorithmic Classes

The Future of Computer Trading in Financial Markets - Foresight Driver Review – DR17

Contents

The evolution of algorithmic classes

1. Introduction

2. Typology of Algorithmic Classes

3. Regulation and Microstructure

3.1 Brief overview of changes in regulation

3.2 Electronic communications networks

3.3 Dark pools

3.4 Future

4. Technology and Infrastructure

4.1 Future

Largest Hedge Fund and Fund of Hedge Funds Managers

The Preqin Quarterly Update: Hedge Funds, Q2 2016

View the full Quarterly Update at: https://www.preqin.com/docs/quarterly/hf/Preqin-Quarterly-Hedge-Fund-Update-Q2-2016.pdf

Download the data pack at: www.preqin.com/quarterlyupdate

Largest Hedge Fund and Fund of Hedge Funds Managers

Fig. 1: Top Hedge Fund Managers by Assets under Management

| Manager | Location | Year Established | Assets under Management |
|---|---|---|---|
| Bridgewater Associates | US | 1975 | $147.4bn as at 31 March 2016 |
| AQR Capital Management | US | 1998 | $84.1bn as at 31 March 2016 |
| Man Group | UK | 1983 | $53.1bn as at 31 March 2016 |
| Och-Ziff Capital Management | US | 1994 | $42.0bn as at 1 April 2016 |
| Standard Life Investments | UK | 2006 | $37.7bn as at 31 March 2016 |
| Two Sigma Investments | US | 2001 | $35.0bn as at 31 March 2016 |
| Winton Capital Management Ltd. | UK | 1997 | $34.5bn as at 31 March 2016 |
| Millennium Management | US | 1989 | $33.0bn as at 1 March 2016 |
| Renaissance Technologies | US | 1982 | $32.3bn as at 31 March 2016 |
| BlackRock Alternative Investors | US | 1997 | $30.2bn as at 31 March 2016 |
| Viking Global Investors | US | 1999 | $29.0bn as at 29 February 2016 |
| Adage Capital Management | US | 2001 | $27.5bn as at 31 December 2015 |
| Elliott Management | US | 1977 | $27.0bn as at 31 December 2015 |
| Baupost Group | US | 1982 | $26.9bn as at 31 December 2015 |
| D.E. Shaw & Co. | US | 1988 | $26.0bn as at 31 March 2016 |
| Marshall Wace | UK | 1997 | $26.0bn as at 31 May 2016 |
| Davidson Kempner Capital Management | US | 1990 | $25.4bn as at 31 March 2016 |
| Citadel Advisors | US | 1990 | $24.0bn as at 31 March 2016 |
| Brevan Howard Capital Management | Jersey | 2002 | $22.1bn as at 31 March 2016 |
| GAM | UK | 1983 | $22.0bn as at 30 June 2015 |
| York Capital Management | US | 1991 | $22.0bn as at 31 December 2015 |

Source: Preqin Hedge Fund Online

Fig.

ALGORITHMIC TRADING of Quantitative Investing

The Brand New World of Quantitative Investing

www.tokens.quantor.co

ALGORITHMIC TRADING

TECHNOLOGIES CHANGING THE INVESTMENT INDUSTRY

Algorithmic trading is a method of executing orders on financial markets by using automated pre-programmed trading algorithms in order to generate profits. Trading algorithms are created by developers and used for analyzing financial data and executing orders according to defined instructions in trading strategy. The biggest investing companies employ trading algorithms every day.

ALGORITHMIC TRADING IN VOLUMES

Volumes of trading generated by algorithmic trading systems in different US financial markets. For example, 67% of trading volumes with interest rates futures in the US was generated by using trading algorithms in 2014.

Foreign exchange futures volumes: 80%

Interest rates futures volumes: 67%

Equity futures volumes: 62%

Energy resources futures: 47%

Agricultural futures: 38%

Source: CFTC 2015

TRENDS IN ALGORITHMIC TRADING

Assets under management of algorithmic hedge funds. According to McKinsey forecasts, the total amount of assets managed by funds using trading algorithms can reach $2.2 trn by 2020.

[Chart: assets under management of algorithmic hedge funds for 2000, 2009, 2016 and 2020*; values shown: $140 bln, $408 bln, $879 bln, $2.2 trn]

Source: AT Kearney/My private Banking/KPMG/McKinsey

ALGORITHMIC HEDGE FUND vs CLASSIC INVESTMENT FUND

[Chart: Renaissance Technologies vs Berkshire Hathaway. Comparison of annual performance of the best algorithmic hedge fund Renaissance Technologies vs Berkshire Hathaway investment company run by Warren Buffett]

* The biggest investing companies employ algorithms every day.

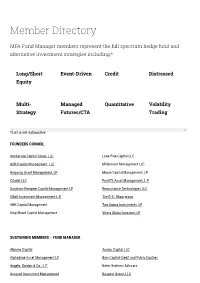

Member Directory

Member Directory

MFA Fund Manager members represent the full spectrum of hedge fund and alternative investment strategies including:* Long/Short Equity; Event-Driven; Credit; Distressed; Multi-Strategy; Managed Futures/CTA; Quantitative Trading; Volatility

*List is not exhaustive

FOUNDERS COUNCIL

Anchorage Capital Group, LLC; Lone Pine Capital LLC; AQR Capital Management, LLC; Millennium Management LLC; Balyasny Asset Management, LP; Moore Capital Management, LP; Citadel LLC; Point72 Asset Management, L.P.; Davidson Kempner Capital Management LP; Renaissance Technologies LLC; Elliott Investment Management L.P.; The D. E. Shaw group; HBK Capital Management; Two Sigma Investments, LP; King Street Capital Management; Viking Global Investors LP

SUSTAINING MEMBERS – FUND MANAGER

Abrams Capital; Axonic Capital LLC; Alphadyne Asset Management LP; Bain Capital Credit and Public Equities; Angelo, Gordon & Co., L.P.; Baker Brothers Advisors; Assured Investment Management; Baupost Group, LLC; BlackRock Alternative Investors; IONIC Capital Management LLC; Bracebridge Capital, LLC; Junto Capital Management LP; Bridgewater Associates, LP.; Kensico Capital Management; Brigade Capital Management, LP; Kepos Capital LP; Cadian Capital Management; Kingdon Capital Management, LLC; Campbell & Company, LP; Laurion Capital Management LP; Capula Investment Management LLP; Magnetar Capital LLC; CarVal Investors; Man Group; Casdin Capital; Marathon Asset Management, L.P.; Castle Hook Partners LP; Marshall Wace North America LP; Centerbridge Partners, L.P.; Melvin Capital; CIFC Asset Management; Meritage Group LP; Coatue Management LLC; Millburn Ridgefield Corporation; D1 Capital Partners; MKP Capital Management; Diameter Capital Partners LP; Monarch Alternative Capital LP; EJF Capital, LLC; Napier Park Global Capital; Element Capital Management LLC; One William Street Capital Management LP; Eminence Capital, LP; P. Schoenfeld Asset Management LP; Empyrean Capital Partners, LP; Palestra Capital Management LLC; Emso Asset Management Limited; Paloma Partners Management Company; ExodusPoint Capital Management, LP; PAR Capital Management, Inc.