Predictive Bayesian Selection of Multistep Markov Chains, Applied To

Recommended publications

-

Coos Bay Chapel, Cemetery

C M C M Y K Y K TRIPLE THREAT TWENTY INJURED Tigers’ Cabrera may win triple crown, B1 Train slams into big rig in Calif., A7 Serving Oregon’s South Coast Since 1878 TUESDAY, OCTOBER 2, 2012 theworldlink.com I 75¢ Schools: State gets an F in communication BY JESSIE HIGGINS student success, and take the place He wasn’t instructed on how to intended for the Bandon School her she must use data from the The World of No Child Left Behind. write the compact until May, with a District. 2010/11 school year to set improve- But the three local districts say July 1 deadline to submit. Now, the compacts take into ment goals, a year in which her dis- Coquille, Bandon and Port their “low goals” are a result of The compacts initially com- account only third-grade test trict scored 10 percent higher in Orford/Langlois school districts poor communication from the prised data from the previous five scores and graduation rates. math. Bandon School District had join 66 other Oregon districts that state, and a hurried planning years, Sweeney said. The state “They really rushed us through the same issue with their compact, must set higher academic achieve- process. Two of the three districts required districts to measure it,”said Chris Nichols, the superin- and has made the adjustment, ment goals for the upcoming said they were told after the fact attendance, test scores, whether tendent for Port Orford/Langlois Buche said. school year, per the state’s order. that they used data from the wrong students were on track to graduate, Schools. -

2008-09 Review

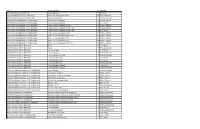

2008-09 REVIEW 2008-09 SEASON RESULTS Overall Home Away Neutral All Games 26-9 18-1 6-4 2-4 Pac-10 Conference 14-4 8-1 6-3 0-0 Non-Conference 12-5 10-0 0-1 2-4 Date Opponent W / L Score Attend High Scorer High Rebounder HUSKY ATHLETICS 11/15/08 at Portland L 74-80 2617 (30)Brockman, Jon (14)Brockman, Jon 11/18/08 # CLEVELAND STATE W 78-63 7316 (23)Brockman, Jon (13)Brockman, Jon 11/20/08 # FLORIDA INT'L W 74-51 7532 (21)Dentmon, Justin (7)Brockman, Jon (7)Holiday, Justin 11/24/08 ^ vs Kansas L 54-73 14720 (17)Thomas, Isaiah (18)Brockman, Jon 11/25/08 ^ vs Florida L 84-86 16988 (22)Brockman, Jon (11)Brockman, Jon 11/29/08 PACIFIC W 72-54 7527 (16)Pondexter, Quincy (12)Pondexter, Quincy 12/04/08 + OKLAHOMA STATE W 83-65 7789 (18)Thomas, Isaiah (11)Brockman, Jon 12/06/08 TEXAS SOUTHERN W 88-52 7241 (18)Bryan-Amaning, Matt (11)Brockman, Jon 12/14/08 PORTLAND STATE W 84-83 7280 (23)Bryan-Amaning, Matt (12)Bryan-Amaning, Matt 12/20/08 EASTERN WASHINGTON W 83-50 7401 (17)Brockman, Jon (7)Bryan-Amaning, Matt 12/28/08 MONTANA W 75-53 9045 (13)Brockman, Jon (15)Bryan-Amaning, Matt THIS IS HUSKY BASKETBALL (13)Thomas, Isaiah 12/30/08 MORGAN STATE W 81-67 8260 (27)Thomas, Isaiah (6)Pondexter, Quincy 01/03/09 * at Washington State W 68-48 8107 (19)Thomas, Isaiah (7)Pondexter, Quincy 01/08/09 * STANFORD W 84-83 9291 (19)Brockman, Jon (18)Brockman, Jon 01/10/09 * CALIFORNIA L 3ot 85-88 9946 (24)Dentmon, Justin (18)Brockman, Jon 01/15/09 * at Oregon W 84-67 8237 (23)Thomas, Isaiah (10)Brockman, Jon OUTLOOK 01/17/09 * at Oregon State W 85-59 6648 (16)Brockman, -

Cleveland Cavaliers (37-22) at Indiana Pacers (23-34)

FRI., FEB. 27, 2015 BANKERS LIFE FIELDHOUSE – INDIANAPOLIS, IN TV: FSO RADIO: WTAM 1100 AM/100.7 WMMS/LA MEGA 87.7 FM 7:00 PM EST CLEVELAND CAVALIERS (37-22) AT INDIANA PACERS (23-34) 2014-15 CLEVELAND CAVALIERS GAME NOTES REGULAR SEASON GAME #60 ROAD GAME #29 PROBABLE STARTERS 2014-15 SCHEDULE POS NO. PLAYER HT. WT. G GS PPG RPG APG FG% MPG 10/30 vs. NYK Lost, 90-95 10/31 @ CHI WON, 114-108* F 23 LEBRON JAMES 6-8 250 14-15: 49 49 26.0 5.8 7.3 .491 36.3 11/4 @ POR Lost, 82-101 11/5 @ UTA Lost, 100-102 11/7 @ DEN WON, 110-101 F 0 KEVIN LOVE 6-10 243 14-15: 56 56 16.9 10.3 2.3 .433 34.6 11/10 vs. NOP WON, 118-111 11/14 @ BOS WON, 122-121 11/15 vs. ATL WON, 127-94 C 20 TIMOFEY MOZGOV 7-1 250 14-15: 58 57 9.3 7.9 0.5 .543 26.0 11/17 vs. DEN Lost, 97-106 11/19 vs. SAS Lost, 90-92 11/21 @ WAS Lost, 78-91 G 5 J.R. SMITH 6-6 225 14-15: 48 29 11.6 2.7 3.0 .410 28.7 11/22 vs. TOR Lost, 93-110 11/24 vs. ORL WON, 106-74 G 8 MATTHEW DELLAVEDOVA 6-4 200 14-15: 44 9 4.3 1.8 2.8 .363 20.0 11/26 vs. WAS WON, 113-87 11/29 vs. -

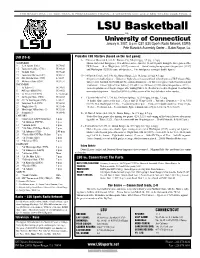

LSU Basketball Vs

THE BRADY ERA | In 10th YEAR, 6 POSTSEASON TOURN., 3 WESTERN DIV. and 2 SEC TITLES; 2006 FINAL 4 LSU Basketball vs. University of Connecticut January 6, 2007, 8 p.m. CST (LSU Sports Radio Network, ESPN) Pete Maravich Assembly Center -- Baton Rogue, La. LSU (10-3) Probable LSU Starters (based on the last game): G -- 2Dameon Mason (6-6, 183, Jr., Kansas City, Mo.) 8.0 ppg, 3.5 rpg, 1.2 apg NOVEMBER Mason started last four games, 11 in all this season ... Had 14, 13 and 11 points during the three games of the 9 E. A. Sports (Exh.) W, 70-65 HCF Classic ... 14 vs. Wright State (12/27) season est ... Out of starting lineup against Oregon State (12/17) 15 Louisiana College (Exh.) W, 94-41 and Washington (12/20) because of migraines ... Five total games scoring in double figures. 17 Nicholls State W, 96-42 19 Louisiana-Monroe (CST) W, 88-57 G -- 14 Garrett Temple (6-5, 190, So., Baton Rouge, La.) 10.2 ppg, 2.8 rpg, 4.1 apg 25 #24 Wichita State (CST) L, 53-57 Six games in double figures ... Had career highs of seven assists in back-to-back games of HCF Classic (Miss. 29 McNeese State (CST) W, 91-57 Valley, 12/28; Samford 12/29) with just five combined turnovers ... In first seven games had 23 assists and just DECEMBER 7 turnovers ... Career high of 18 at Tulane (12/2) with 17 vs. McNeese (11/29) and at Oregon State (12/17) ... 2 At Tulane (1) W, 74-67 Earned reputation as defensive stopper after holding Duke’s J.J. -

Set Subset/Insert # Player

SET SUBSET/INSERT # PLAYER 2014 Panini Golden Age Baseball Historic Signatures 12 Bo Derek 2014 Panini National Treasures Baseball Rookie Material Signatures Gold 197 Gregory Polanco 2015 Panini Black Gold (15-16) Basketball Black Gold Signatures 17 Wesley Matthews 2015 Panini Gold Standard (15-16) Basketball Rookie Jersey Autographs 213 Stanley Johnson 2015 Panini Gold Standard (15-16) Basketball Rookie Jersey Autographs 230 R.J. Hunter 2015 Panini Gold Standard (15-16) Basketball Rookie Jersey Autographs Double 251 Stanley Johnson 2015 Panini Gold Standard (15-16) Basketball Rookie Jersey Autographs Double Prime 251 Stanley Johnson 2015 Panini Gold Standard (15-16) Basketball Rookie Jersey Autographs Double Prime 267 R.J. Hunter 2015 Panini Gold Standard (15-16) Basketball Rookie Jersey Autographs Jumbo 313 Stanley Johnson 2015 Panini Gold Standard (15-16) Basketball Rookie Jersey Autographs Jumbo Prime 313 Stanley Johnson 2015 Panini Gold Standard (15-16) Basketball Rookie Jersey Autographs Prime 213 Stanley Johnson 2015 Panini Gold Standard (15-16) Basketball Rookie Jersey Autographs Triple 282 Stanley Johnson 2015 Panini Gold Standard (15-16) Basketball Rookie Jersey Autographs Triple Prime 282 Stanley Johnson 2015 Panini Luxe (15-16) Basketball DeLuxe 21 Terry Rozier 2015 Panini Luxe (15-16) Basketball DeLuxe 79 Wesley Matthews 2015 Panini Luxe (15-16) Basketball Luxe Autographs 21 Terry Rozier 2015 Panini Luxe (15-16) Basketball Luxe Autographs 79 Wesley Matthews 2015 Panini Luxe (15-16) Basketball Luxe Autographs Emerald 79 Wesley -

2018-19 Phoenix Suns Media Guide 2018-19 Suns Schedule

2018-19 PHOENIX SUNS MEDIA GUIDE 2018-19 SUNS SCHEDULE OCTOBER 2018 JANUARY 2019 SUN MON TUE WED THU FRI SAT SUN MON TUE WED THU FRI SAT 1 SAC 2 3 NZB 4 5 POR 6 1 2 PHI 3 4 LAC 5 7:00 PM 7:00 PM 7:00 PM 7:00 PM 7:00 PM PRESEASON PRESEASON PRESEASON 7 8 GSW 9 10 POR 11 12 13 6 CHA 7 8 SAC 9 DAL 10 11 12 DEN 7:00 PM 7:00 PM 6:00 PM 7:00 PM 6:30 PM 7:00 PM PRESEASON PRESEASON 14 15 16 17 DAL 18 19 20 DEN 13 14 15 IND 16 17 TOR 18 19 CHA 7:30 PM 6:00 PM 5:00 PM 5:30 PM 3:00 PM ESPN 21 22 GSW 23 24 LAL 25 26 27 MEM 20 MIN 21 22 MIN 23 24 POR 25 DEN 26 7:30 PM 7:00 PM 5:00 PM 5:00 PM 7:00 PM 7:00 PM 7:00 PM 28 OKC 29 30 31 SAS 27 LAL 28 29 SAS 30 31 4:00 PM 7:30 PM 7:00 PM 5:00 PM 7:30 PM 6:30 PM ESPN FSAZ 3:00 PM 7:30 PM FSAZ FSAZ NOVEMBER 2018 FEBRUARY 2019 SUN MON TUE WED THU FRI SAT SUN MON TUE WED THU FRI SAT 1 2 TOR 3 1 2 ATL 7:00 PM 7:00 PM 4 MEM 5 6 BKN 7 8 BOS 9 10 NOP 3 4 HOU 5 6 UTA 7 8 GSW 9 6:00 PM 7:00 PM 7:00 PM 5:00 PM 7:00 PM 7:00 PM 7:00 PM 11 12 OKC 13 14 SAS 15 16 17 OKC 10 SAC 11 12 13 LAC 14 15 16 6:00 PM 7:00 PM 7:00 PM 4:00 PM 8:30 PM 18 19 PHI 20 21 CHI 22 23 MIL 24 17 18 19 20 21 CLE 22 23 ATL 5:00 PM 6:00 PM 6:30 PM 5:00 PM 5:00 PM 25 DET 26 27 IND 28 LAC 29 30 ORL 24 25 MIA 26 27 28 2:00 PM 7:00 PM 8:30 PM 7:00 PM 5:30 PM DECEMBER 2018 MARCH 2019 SUN MON TUE WED THU FRI SAT SUN MON TUE WED THU FRI SAT 1 1 2 NOP LAL 7:00 PM 7:00 PM 2 LAL 3 4 SAC 5 6 POR 7 MIA 8 3 4 MIL 5 6 NYK 7 8 9 POR 1:30 PM 7:00 PM 8:00 PM 7:00 PM 7:00 PM 7:00 PM 8:00 PM 9 10 LAC 11 SAS 12 13 DAL 14 15 MIN 10 GSW 11 12 13 UTA 14 15 HOU 16 NOP 7:00 -

2016‐17 Flawless Basketball Player Card Totals

2016‐17 Flawless Basketball Player Card Totals 2015 Flawless Extra Cards not included (unknown print runs, players highlighted in yellow) Add'l Card Counts (Not print runs) for 2015 Extras: Khris Middleton x1, Pau Gasol x1, Kenny Smith x5, Draymond Green x40, Goran Dragic x28, Dennis Schroder x11, Nicolas Batum x14, Marcin Gortat x11, Nikola Vucevic x10, Rudy Gay x10, Tony Parker x10 266 Players with Cards 7 of those players have 6 cards or under, 11 Players only have Diamond Cards (Nash and Dennis Johnson only have 6) Auto Auto TOTAL Auto Diamond Relic Auto Relic Logo Logoman Champ Patch Team Auto Logo‐ Champ Cards Total Only Total Total Patch Patch Man Diamond Tag Diamond Man Tag Aaron Gordon 369 369 112 257 Al Horford 122 122 119 1 2 Al Jefferson 34 34 34 Alex English 57 57 57 Allen Crabbe 98 98 57 41 Allen Iverson 359 309 49 1 229 80 1 Alonzo Mourning 184 178 6 178 Amar'e Stoudemire 41 41 41 Andre Drummond 155 153 2 115 38 2 Andre Iguodala 43 43 41 2 Andre Roberson 1 1 1 Andrei Kirilenko 97 97 97 Andrew Bogut 41 41 41 Andrew Wiggins 311 138 43 130 138 122 2 1 5 Anfernee Hardaway 112 112 112 Anthony Davis 324 274 43 7 212 62 1 1 5 Artis Gilmore 261 232 29 232 29 Austin Rivers 82 82 82 Ben Simmons 43 43 Ben Wallace 186 180 6 174 6 Bernard King 113 113 113 Bill Russell 215 172 43 172 Blake Griffin 290 117 43 130 117 122 2 1 5 Bobby Portis 16 16 16 Bojan Bogdanovic 113 113 56 57 Bradley Beal 43 43 Brandon Ingram 376 255 43 78 60 194 1 74 2 2 Brandon Jennings 41 41 41 Brandon Knight 39 39 39 Brook Lopez 82 82 82 Buddy Hield 413 253 43 117 58 194 1 115 2 C.J. -

By Joe Kaiser ESPN It Took Danny Ferry Exactly Seven Days in His

By Joe Kaiser ESPN Smith had a great 2011 -12, but he is an unrestricted free agent after this season. It took Danny Ferry exactly seven days in his new role as the Atlanta Hawks' president of basketball operations and general manager to completely change the face of the franchise. His ability to swiftly orchestrate separate deals to part with high-priced veterans Joe Johnson and Marvin Williams signaled a commitment to rebuilding and shed as much as $77 million still owed to the two veterans beyond next season. Fast-forward two-and-a-half months to today, and Ferry's rebuilt roster has only a handful of contracts that go beyond the 2012-13 season: • Al Horford : Signed through 2015-16. • Lou Williams : Signed through 2013-14. • John Jenkins : 2012 first-round pick. • Mike Scott : 2012 second-round pick. • Jeff Teague : Due to become a restricted free agent at season's end, barring an extension. Notice one big name we didn't mention: Josh Smith . The tantalizingly talented, yet often frustrating, forward is among the many Hawks entering the final year of their deals, and he represents arguably the biggest challenge for Ferry to date: what to do with Smith. If handled correctly, this could be the next step toward eventually turning the Hawks into a perennial playoff contender. Mishandled, and this could undo everything good that came out of the trades of Johnson and Williams. So the question is, what's the smarter move for Ferry and the Hawks? a) Negotiate an extension that will keep Smith in Atlanta for the long term. -

Chimezie Metu Demar Derozan Taj Gibson Nikola Vucevic

DeMar Chimezie DeRozan Metu Nikola Taj Vucevic Gibson Photo courtesy of Fernando Medina/Orlando Magic 2019-2020 • 179 • USC BASKETBALL USC • In The Pros All-Time The list below includes all former USC players who had careers in the National Basketball League (1937-49), the American Basketball Associa- tion (1966-76) and the National Basketball Association (1950-present). Dewayne Dedmon PLAYER PROFESSIONAL TEAMS SEASONS Dan Anderson Portland .............................................1975-76 Dwight Anderson New York ................................................1983 San Diego ..............................................1984 John Block Los Angeles Lakers ................................1967 San Diego .........................................1968-71 Milwaukee ..............................................1972 Philadelphia ............................................1973 Kansas City-Omaha ..........................1973-74 New Orleans ..........................................1975 Chicago .............................................1975-76 David Bluthenthal Sacramento Kings ..................................2005 Mack Calvin Los Angeles (ABA) .................................1970 Florida (ABA) ....................................1971-72 Carolina (ABA) ..................................1973-74 Denver (ABA) .........................................1975 Virginia (ABA) .........................................1976 Los Angeles Lakers ................................1977 San Antonio ............................................1977 Denver ...............................................1977-78 -

Box Score Spurs

NATIONAL BASKETBALL ASSOCIATION OFFICIAL SCORER'S REPORT FINAL BOX Thursday, May 13, 2021 Madison Square Garden, New York, NY Officials: #15 Zach Zarba, #50 Gediminas Petraitis, #62 JB DeRosa Game Duration: 2:17 Attendance: 1981 (Sellout) VISITOR: San Antonio Spurs (33-37) POS MIN FG FGA 3P 3PA FT FTA OR DR TOT A PF ST TO BS +/- PTS 10 DeMar DeRozan F 37:32 10 18 0 0 7 9 0 8 8 4 2 1 3 0 6 27 3 Keldon Johnson F 30:45 5 11 2 4 1 1 0 2 2 2 3 1 2 0 9 13 25 Jakob Poeltl C 34:17 4 7 0 0 0 0 2 7 9 2 4 1 0 3 0 8 5 Dejounte Murray G 34:30 7 18 0 1 0 1 1 8 9 7 2 1 0 1 -3 14 1 Lonnie Walker IV G 29:21 5 13 2 6 0 0 1 3 4 2 0 0 0 0 13 12 8 Patty Mills 27:40 1 5 1 4 3 3 0 0 0 3 1 0 2 0 -22 6 14 Drew Eubanks 13:27 3 7 0 0 2 2 5 4 9 1 3 0 2 2 -1 8 22 Rudy Gay 19:07 2 10 1 5 3 3 0 3 3 1 1 0 0 0 -14 8 24 Devin Vassell 13:21 1 2 0 1 0 0 0 3 3 0 0 0 0 0 -8 2 31 Keita Bates-Diop DNP - Coach's decision 7 Gorgui Dieng DNP - Coach's decision 33 Tre Jones DNP - Coach's decision 15 Quinndary Weatherspoon DNP - Coach's decision 240:00 38 91 6 21 16 19 9 38 47 22 16 4 9 6 -4 98 41.8% 28.6% 84.2% TM REB: 9 TOT TO: 11 (10 PTS) HOME: NEW YORK KNICKS (39-31) POS MIN FG FGA 3P 3PA FT FTA OR DR TOT A PF ST TO BS +/- PTS 25 Reggie Bullock F 38:51 3 9 2 8 0 0 1 3 4 3 5 3 1 0 8 8 30 Julius Randle F 44:53 7 21 1 5 10 10 0 9 9 9 3 1 3 0 1 25 3 Nerlens Noel C 26:33 2 3 0 0 2 2 4 1 5 0 3 1 0 2 -12 6 9 RJ Barrett G 40:54 8 19 5 9 3 3 1 8 9 5 1 1 2 0 5 24 6 Elfrid Payton G 12:39 0 4 0 0 0 0 0 1 1 2 2 0 1 0 -11 0 5 Immanuel Quickley 11:20 0 4 0 2 0 0 0 0 0 0 1 0 1 0 0 0 67 Taj Gibson -

Hawks Work 4Ots at 1-800-765-4011,Ext

Time: 03-25-2012 23:05 User: rtaylor2 PubDate: 03-26-2012 Zone: IN Edition: 1 Page Name: C2 Color: CyanMagentaYellowBlack C2 | MONDAY,MARCH 26, 2012 | THE COURIER-JOURNAL SPORTS | courier-journal.com/sports IN NBA To report sports scores E-mail [email protected] or call Scorecard the sports desk at 502-582-4361, or toll free Hawks work 4OTs at 1-800-765-4011,ext. 4361 COLLEGE AUTO RACING $79,555. 1:59:50.9863. Margin of Victory: 38. (32) Michael McDowell,Ford, vi- 5.5292 seconds. Cautions:3for 15 Suns making BASKETBALL Sprint Cup bration, 6, $79,307. laps. Lead Changes:9among 7 Auto Club 400 39. (20) David Stremme,Toyota, rear drivers. Men Sunday at Fontana, Calif. gear,5,$75,855. Lap Leaders:Power 1-11, Briscoe runinWest NCAA Tournament Auto Club Speedway 40. (39) MikeBliss,Toyota, transmis- 12-20, Dixon 21-36, Sato 37-46, SOUTH REGIONAL Lap length: 2miles sion, 0, $75,675. Franchitti 47, Dixon 48-68, Castro- At The Georgia Dome (Start position in parentheses) 41. (35) Scott Riggs,Chevrolet, vibra- neves 69-70,Sato 71, Hildebrand 72- Associated Press Atlanta 1. (9) Tony Stewart, Chevrolet, 129 tion, 3, $75,505. 74, Castroneves 75-100. Regional Championship laps,$323,450. 42. (43) Reed Sorenson, Chevrolet, Points:Castroneves 50, Dixon 42, Sunday 2. (2) Kyle Busch, Toyota, 129, vibration, 0, $75,415. Hunter-Reay 35, Hinchcliffe 32, Bris- Joe Johnson scored 37 Kentucky 82, Baylor 70 $259,698. 43. (37) Brendan Gaughan, Chevro- coe 30, Pagenaud 28, Power 27, Viso points, Josh Smith added MIDWEST REGIONAL 3. -

Player Set Card # Team Print Run Al Horford Top-Notch Autographs

2013-14 Innovation Basketball Player Set Card # Team Print Run Al Horford Top-Notch Autographs 60 Atlanta Hawks 10 Al Horford Top-Notch Autographs Gold 60 Atlanta Hawks 5 DeMarre Carroll Top-Notch Autographs 88 Atlanta Hawks 325 DeMarre Carroll Top-Notch Autographs Gold 88 Atlanta Hawks 25 Dennis Schroder Main Exhibit Signatures Rookies 23 Atlanta Hawks 199 Dennis Schroder Rookie Jumbo Jerseys 25 Atlanta Hawks 199 Dennis Schroder Rookie Jumbo Jerseys Prime 25 Atlanta Hawks 25 Jeff Teague Digs and Sigs 4 Atlanta Hawks 15 Jeff Teague Digs and Sigs Prime 4 Atlanta Hawks 10 Jeff Teague Foundations Ink 56 Atlanta Hawks 10 Jeff Teague Foundations Ink Gold 56 Atlanta Hawks 5 Kevin Willis Game Jerseys Autographs 1 Atlanta Hawks 35 Kevin Willis Game Jerseys Autographs Prime 1 Atlanta Hawks 10 Kevin Willis Top-Notch Autographs 4 Atlanta Hawks 25 Kevin Willis Top-Notch Autographs Gold 4 Atlanta Hawks 10 Kyle Korver Digs and Sigs 10 Atlanta Hawks 15 Kyle Korver Digs and Sigs Prime 10 Atlanta Hawks 10 Kyle Korver Foundations Ink 23 Atlanta Hawks 10 Kyle Korver Foundations Ink Gold 23 Atlanta Hawks 5 Pero Antic Main Exhibit Signatures Rookies 43 Atlanta Hawks 299 Spud Webb Main Exhibit Signatures 2 Atlanta Hawks 75 Steve Smith Game Jerseys Autographs 3 Atlanta Hawks 199 Steve Smith Game Jerseys Autographs Prime 3 Atlanta Hawks 25 Steve Smith Top-Notch Autographs 31 Atlanta Hawks 325 Steve Smith Top-Notch Autographs Gold 31 Atlanta Hawks 25 groupbreakchecklists.com 13/14 Innovation Basketball Player Set Card # Team Print Run Bill Sharman Top-Notch Autographs