Electrical Measuring Instruments and Instrumentation

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


U1733C Handheld LCR Meter

Keysight U1731C/U1732C/U1733C Handheld LCR Meter User’s Guide. Notices. © Keysight Technologies 2011 – 2014. No part of this manual may be reproduced in any form or by any means (including electronic storage and retrieval or translation into a foreign language) without prior agreement and written consent from Keysight Technologies as governed by United States and international copyright laws. Manual Part Number: U1731-90077. Edition: Edition 8, August 2014. Keysight Technologies, 1400 Fountaingrove Parkway, Santa Rosa, CA 95403. Warranty: The material contained in this document is provided “as is,” and is subject to change, without notice, in future editions. Further, to the maximum extent permitted by the applicable law, Keysight disclaims all warranties, either express or implied, with regard to this manual and any information contained herein, including but not limited to the implied warranties of merchantability and fitness for a particular purpose. Keysight shall not be liable for errors or for incidental or consequential damages in connection with the furnishing, use, or performance of this document or of any information contained herein. Should Keysight and the user have a separate written agreement with warranty terms covering the material in this document that conflict with these terms, the warranty terms in the separate agreement shall control. Safety Notices: CAUTION: A CAUTION notice denotes a hazard. It calls attention to an operating procedure, practice, or the likes of that, if not correctly performed or adhered to, could result in damage to the product or loss of important data. Do not proceed beyond a CAUTION notice until the indicated conditions are fully understood and met. WARNING -

1920 Precision LCR Meter User and Service Manual

♦ PRECISION INSTRUMENTS FOR TEST AND MEASUREMENT ♦ 1920 Precision LCR Meter User and Service Manual Copyright © 2014 IET Labs, Inc. Visit www.ietlabs.com for manual revision updates 1920 im/February 2014 IET LABS, INC. www.ietlabs.com Long Island, NY • Email: [email protected] TEL: (516) 334-5959 • (800) 899-8438 • FAX: (516) 334-5988 WARRANTY We warrant that this product is free from defects in material and workmanship and, when properly used, will perform in accordance with applicable IET specifications. If within one year after original shipment, it is found not to meet this standard, it will be repaired or, at the option of IET, replaced at no charge when returned to IET. Changes in this product not approved by IET or application of voltages or currents greater than those allowed by the specifications shall void this warranty. IET shall not be liable for any indirect, special, or consequential damages, even if notice has been given to the possibility of such damages. THIS WARRANTY IS IN LIEU OF ALL OTHER WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING BUT NOT LIMITED TO, ANY IMPLIED WARRANTY OF MERCHANTABILITY OR FITNESS FOR ANY PARTICULAR PURPOSE. IET LABS, INC. www.ietlabs.com 534 Main Street, Westbury, NY 11590 WARNING OBSERVE ALL SAFETY RULES WHEN WORKING WITH HIGH VOLTAGES OR LINE VOLTAGES. -

878B and 879B LCR Meter User Manual

Model 878B, 879B Dual Display LCR METER INSTRUCTION MANUAL Safety Summary The following safety precautions apply to both operating and maintenance personnel and must be observed during all phases of operation, service, and repair of this instrument. DO NOT OPERATE IN AN EXPLOSIVE ATMOSPHERE Do not operate the instrument in the presence of flammable gases or fumes. Operation of any electrical instrument in such an environment constitutes a definite safety hazard. KEEP AWAY FROM LIVE CIRCUITS Instrument covers must not be removed by operating personnel. Component replacement and internal adjustments must be made by qualified maintenance personnel. DO NOT SUBSTITUTE PARTS OR MODIFY THE INSTRUMENT Do not install substitute parts or perform any unauthorized modifications to this instrument. Return the instrument to B&K Precision for service and repair to ensure that safety features are maintained. WARNINGS AND CAUTIONS WARNING and CAUTION statements, such as the following examples, denote a hazard and appear throughout this manual. Follow all instructions contained in these statements. A WARNING statement calls attention to an operating procedure, practice, or condition, which, if not followed correctly, could result in injury or death to personnel. A CAUTION statement calls attention to an operating procedure, practice, or condition, which, if not followed correctly, could result in damage to or destruction of part or all of the product. Safety Guidelines To ensure that you use this device safely, follow the safety guidelines listed below: This meter is for indoor use, altitude up to 2,000 m. The warnings and precautions should be read and well understood before the instrument is used. -

AC & DC True RMS Current and Voltage Transducer Wattmeter

AC & DC true RMS current and voltage transducer: wattmeter, voltmeter, ammeter, … Type CPL35 • DC or AC RMS measurement: single-phase or balanced three-phase, 0...440 Hz, PWM, wave train, phase angle variation, AC, all high-level harmonic signals • Multi-sensor current measurement: shunt, transformer, Rogowski coil, Hall effect sensor or direct input 1 A / 5 A • Programmable: voltmeter, ammeter, wattmeter, varmeter, power factor, cos phi, frequency meter • 4-digit measurement display: U, I, Cos, P, Q, Hz, DC+AC • Up to 2 isolated analog outputs and 2 relay outputs • Wide-range universal AC/DC power supply. The CPL35 is a converter for measuring, monitoring and retransmission of electrical parameters. Implementation is fast by simple configuration of transformer ratio or shunt sensitivity. The various output options allow a wide range of applications: measurement, protection, control. Block diagram. Measurement: - DC or AC, single-phase or balanced three-phase network (configurable TP, CT ratio or shunt sensitivity), - 2 voltage ranges: 150 V, 600 V, others on request up to 1000 V, - 3 current ranges: 200 mV (external shunt), 1 A or 5 A internal shunt, - Hall effect current sensor (+/-4 V input), - active (P), reactive (Q), apparent (S) powers, - cos (power factor), frequency 1 Hz … 440 Hz, - configurable integration time from 10 ms to 60 seconds for measurement in slow wave-train applications. Front panel: - 4-digit alphanumeric LED matrix display for the measurement, - 2 red LEDs to display the status of relays, - 2 push buttons for: * the complete configuration of the device, * selecting the displayed value (U, I, Cos, P, Q, S, Hz), * setting of alarm thresholds, ...
Relays (/R option): Up to 2 configurable relays: - In alarm, monitoring measure selection (U, I, Cos, P, Q, S, Hz), - Threshold, direction, hysteresis and delay individually adjustable on each relay (on & off delay), - HOLD function (alarm storage with RESET from the front panel). Associated current sensors: shunt, current transformer, Hall effect sensor, Rogowski coil. -

A Rapid Inductance Estimation Technique by Frequency Manipulation

Latest Trends in Circuits, Control and Signal Processing A Rapid Inductance Estimation Technique By Frequency Manipulation LUM KIN YUN, TAN TIAN SWEE Medical Implant Technology Group, Materials and Manufacturing Research Alliance, Faculty of Biosciences and Medical Engineering, Universiti Teknologi Malaysia, Skudai 81310, Johor, Malaysia [email protected]; [email protected] Abstract: Inductors or coils are one of the basic electronics elements. Measurement of the inductance values does play an important role in circuit designing and evaluation process. However, the inductance cannot be measured easily like resistor or capacitor. Thus, here would like to introduce an inductance estimation technique which can estimate the inductance value in a short time interval. No complex and expensive equipment are involved throughout the measurement. Instead, it just required the oscilloscope and function generator that is commonly available in most electronic laboratory. The governed theory and methods are being stated. The limitation of the method is being discussed alongside with the principles and computer simulation. From the experiment, the error in measurement is within 10%. This method does provide promising result given the series resistance of the inductor is small and being measured using proper frequency. Key-Words: Inductor, Inductance measurement, phasor, series resistance, phase difference, impedance. 1 Introduction There are many inductors being used in electronics circuits. In order to evaluate the performance of electronic system, it is particular important in [...] • Current and voltage method which is based on the determination of impedance. • Bridge and differential method which is based on the relativity of the voltages and currents between the measured and reference impedances until a balancing state is achieved. -
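
The excerpt above does not spell out the authors' procedure, so the following is only a minimal sketch of a generic current-and-voltage estimate built from the key words it lists (phasor, series resistance, phase difference, impedance). It assumes a coil modeled as an inductance in series with a small resistance, a known reference resistor in series with it, and amplitude and phase readings taken from an oscilloscope. The function name, parameter names, and test values are hypothetical.

```python
import math


def estimate_series_rl(v_coil_amp, v_ref_amp, r_ref_ohm, phase_deg, freq_hz):
    """Estimate (L, r) of a coil modeled as L in series with r.

    v_coil_amp : amplitude of the voltage across the coil (V)
    v_ref_amp  : amplitude of the voltage across the reference resistor (V)
    r_ref_ohm  : value of the reference resistor (ohm), assumed known
    phase_deg  : phase by which the coil voltage leads the current (degrees)
    freq_hz    : frequency of the sine drive (Hz)
    """
    current_amp = v_ref_amp / r_ref_ohm           # I = V_ref / R_ref
    z_mag = v_coil_amp / current_amp              # |Z| = |V_coil| / |I|
    phi = math.radians(phase_deg)
    omega = 2.0 * math.pi * freq_hz
    inductance_h = z_mag * math.sin(phi) / omega  # Im(Z) = omega * L
    series_r_ohm = z_mag * math.cos(phi)          # Re(Z) = r
    return inductance_h, series_r_ohm


# Synthetic check: build the readings an ideal 1 mH, 2 ohm coil would give
# at 10 kHz with 10 mA flowing through a 100 ohm reference resistor.
true_l, true_r, f = 1e-3, 2.0, 10e3
z = complex(true_r, 2.0 * math.pi * f * true_l)
i_amp = 0.01
est_l, est_r = estimate_series_rl(
    abs(z) * i_amp, i_amp * 100.0, 100.0,
    math.degrees(math.atan2(z.imag, z.real)), f,
)
print(est_l, est_r)
```

With real oscilloscope readings the phase measurement usually dominates the error, which is consistent with the excerpt's remark that the result is best when the series resistance is small and a suitable frequency is chosen.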

THAM 4-4 Programmable Impedance Transfer Standard to Support LCR Meters

THAM 4-4 Programmable Impedance Transfer Standard to Support LCR Meters N.M. Oldham, Electricity Division, National Institute of Standards and Technology, Gaithersburg, MD 20899-0001; S.R. Booker, Primary Standards Lab, Sandia National Laboratories, Albuquerque, NM. Summary Abstract: A programmable transfer standard for calibrating impedance (LCR) meters is described. The standard makes use of low loss chip components and an electronic impedance generator (to synthesize arbitrary complex impedances) that operate up to 1 MHz. Intercomparison data between several LCR meters, including estimated uncertainties will be provided in the final paper. I. Introduction: Commercial digital impedance meters, often referred to as LCR meters, are used much like digital multimeters (DMMs) as diagnostic and quality control tools in engineering and manufacturing. Like the best DMMs, the accuracies of the best LCR meters are approaching those of the standards that support them. The standards are 2- to 4- [...] II. Programmable Impedance Standards: Miniature impedances: Conventional impedance standards like air-core inductors, gas-dielectric capacitors, and wire-wound resistors have served the standards community for decades. They were designed for stability and a number of other qualities, but not compactness. They are typically mounted in cases that are 10 to 20 cm on a side with large connectors, and are not easily incorporated into a programmable impedance standard. To overcome this problem, chip components were evaluated as possible impedance standards and it was discovered that some of these chips are approaching the quality of the older laboratory standards. For example, [...] ceramic chip capacitors are available with temperature coefficients of 30 ppm/°C compared to 5 ppm/°C for gas capacitors. -

Voltage and Power Measurements Fundamentals, Definitions, Products 60 Years of Competence in Voltage and Power Measurements

Voltage and Power Measurements Fundamentals, Definitions, Products 60 Years of Competence in Voltage and Power Measurements RF measurements go hand in hand with the name of Rohde & Schwarz. This company was one of the founders of this discipline in the thirties and has ever since been strongly influencing it. Voltmeters and power meters have been an integral part of the company's product line right from the very early days and are setting standards worldwide to this day. Rohde & Schwarz produces voltmeters and power meters for all relevant frequency bands and power classes covering a wide range of applications. This brochure presents the current line of products and explains associated fundamentals and definitions. WF 40802-2 Contents: RF Voltage and Power Measurements using Rohde & Schwarz Instruments; RF Millivoltmeters; Terminating Power Meters; Power Sensors for URV/NRV Family; Voltage Sensors for URV/NRV Family; Directional Power Meters; RMS/Peak Voltmeters; Application: PEP Measurement; Peak Power Sensors for Digital Mobile Radio; Fundamentals of RF Power Measurement; Definitions of Voltage and Power Measurements; References. RF Voltage and Power Measurements: The main quality characteristics of a voltmeter or power meter are high measurement accuracy and short measurement time. Both can be achieved through utmost care in the [...] parison with another instrument is hampered by the effect of mismatch. Rohde & Schwarz resorts to a series of measures to ensure that the user can fully rely on the voltmeters and power [...] The frequency range extends from DC to 40 GHz. Several sensors with different frequency and power ratings are required to cover the entire measurement range. -

Circuit-Analysis-With-Multisim.Pdf

BÁEZ-LÓPEZ • GUERRERO-CASTRO Series ISSN: 1932-3166 SYNTHESIS LECTURES ON DIGITAL CIRCUITS AND SYSTEMS Morgan & Claypool Publishers Series Editor: Mitchell Thornton, Southern Methodist University Circuit Analysis with Multisim David Báez-López and Félix E. Guerrero-Castro, Universidad de las Américas-Puebla, México This book is concerned with circuit simulation using National Instruments Multisim. It focuses on the use and comprehension of the working techniques for electrical and electronic circuit simulation. -

LCR Measurement Primer 2nd Edition, August 2002 Comments: [email protected]

LCR MEASUREMENT PRIMER ISO 9001 Certified 5 Clock Tower Place, 210 East, Maynard, Massachusetts 01754 TELE: (800) 253-1230, FAX: (978) 461-4295, INTL: (978) 461-2100 http://www.quadtech.com Preface The intent of this reference primer is to explain the basic definitions and measurement of impedance parameters, also known as LCR. This primer provides a general overview of the impedance characteristics of an AC circuit, mathematical equations, connection methods to the device under test and methods used by measuring instruments to precisely characterize impedance. Inductance, capacitance and resistance measuring techniques associated with passive component testing are presented as well. LCR Measurement Primer 2nd Edition, August 2002 Comments: [email protected] 5 Clock Tower Place, 210 East Maynard, Massachusetts 01754 Tel: (978) 461-2100 Fax: (978) 461-4295 Intl: (800) 253-1230 Web: http://www.quadtech.com This material is for informational purposes only and is subject to change without notice. QuadTech assumes no responsibility for any error or for consequential damages that may result from the misinterpretation of any procedures in this publication. Contents: Impedance: Definitions; Impedance Terms; Phase Diagrams; Series and Parallel. Connection Methods: Two-Terminal Measurements; Four-Terminal Measurements; Three-Terminal (Guarded) [...] Recommended LCR Meter Features: Test Frequency; Test Voltage; Accuracy/Speed; Measurement Parameters; Ranging; Averaging; Median Mode; Computer Interface. -

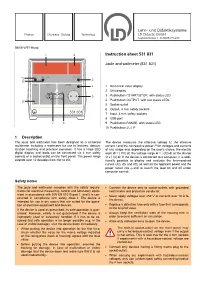

Instruction Sheet 531 831

06/05-W97-Hund Instruction sheet 531 831 Joule and wattmeter (531 831) 1 Numerical value display; 2 Unit display; 3 Pushbutton t START/STOP, with status LED; 4 Pushbutton OUTPUT, with two status LEDs; 5 Socket-outlet; 6 Output, 4 mm safety sockets; 7 Input, 4 mm safety sockets; 8 USB port; 9 Pushbutton RANGE, with status LED; 10 Pushbutton U, I, P. 1 Description The joule and wattmeter has been designed as a universal multimeter including a wattmeter for use in lectures, demonstration teaching and practical exercises. It has a large LED digital display and loads can be connected via 4 mm safety sockets or a socket-outlet on the front panel. The power range extends over 12 decades from nW to kW. The device measures the effective voltage U, the effective current I and the nonreactive power P for voltages and currents of any shape and, depending on the user’s choice, the electric work W = ∫ P(t) dt, the voltage surge Φ = ∫ U(t) dt or the charge Q = ∫ I(t) dt. If the device is connected to a computer, it is additionally possible to display and evaluate the time-resolved curves U(t), I(t) and P(t) as well as the apparent power and the power factor cos ϕ and to switch the load on and off under computer control. Safety notes The joule and wattmeter complies with the safety requirements for electrical measuring, control and laboratory equipment [...] • Connect the device only to socket-outlets with grounded neutral wire and protective conductor. -
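
The three integrals named in the excerpt above (W = ∫ P(t) dt, Φ = ∫ U(t) dt, Q = ∫ I(t) dt) can be approximated from time-resolved samples. The sketch below uses the trapezoidal rule on made-up sample values; it is not the instrument's internal algorithm, which the instruction sheet does not describe, and the function and variable names are hypothetical.

```python
def integrate_trapezoid(times_s, values):
    """Approximate the time integral of sampled values with the trapezoidal rule."""
    if len(times_s) != len(values):
        raise ValueError("times_s and values must have the same length")
    total = 0.0
    for k in range(1, len(times_s)):
        dt = times_s[k] - times_s[k - 1]
        total += 0.5 * (values[k] + values[k - 1]) * dt
    return total


# Made-up samples: constant 2 V, current stepping from 0.5 A to 1 A.
times = [0.0, 1.0, 2.0, 3.0]          # seconds
voltage = [2.0, 2.0, 2.0, 2.0]        # volts
current = [0.5, 0.5, 1.0, 1.0]        # amperes
power = [u * i for u, i in zip(voltage, current)]  # instantaneous P = U * I

work_j = integrate_trapezoid(times, power)       # W = integral of P dt, in joules
charge_c = integrate_trapezoid(times, current)   # Q = integral of I dt, in coulombs
surge_vs = integrate_trapezoid(times, voltage)   # Phi = integral of U dt, in volt-seconds
print(work_j, charge_c, surge_vs)
```

Multiplying the samples before integrating matters for waveforms of arbitrary shape: integrating U and I separately and multiplying the results would not give the electric work.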

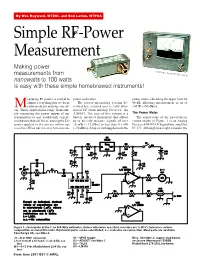

Simple RF-Power Measurement

By Wes Hayward, W7ZOI, and Bob Larkin, W7PUA Simple RF-Power Measurement Making power measurements from nanowatts to 100 watts is easy with these simple homebrewed instruments! PHOTOS BY JOE BOTTIGLIERI, AA1GW Measuring RF power is central to almost everything that we do as radio amateurs and experimenters. Those applications range from simply measuring the power output of our transmitters to our workbench experimentations that call for measuring the LO power applied to the mixers within our receivers. Even our receiver S meters are power indicators. The power-measuring system described here is based on a recently introduced IC from Analog Devices: the AD8307. The core of this system is a battery operated instrument that allows us to directly measure signals of over 20 mW (+13 dBm) to less than 0.1 nW (−70 dBm). A tap circuit supplements the power meter, extending the upper limit by 40 dB, allowing measurement of up to 100 W (+50 dBm). The Power Meter The cornerstone of the power-meter circuit shown in Figure 1 is an Analog Devices AD8307AN logarithmic amplifier IC, U1. Although you might consider the [...] Figure 1—Schematic of the 1- to 500-MHz wattmeter. Unless otherwise specified, resistors are 1/4-W 5%-tolerance carbon-composition or metal-film units. Equivalent parts can be substituted; n.c. indicates no connection. Most parts are available from Kanga US; see Note 2. J1—N or BNC connector; L1—1 turn of a C1 lead, 3/16-inch ID; see [...]; S1—SPST toggle; U1—AD8307; see Note 1; Misc: See Note 2; copper-clad board, [...] -
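
The excerpt above quotes power levels both in watts and in dBm (decibels relative to 1 mW). As a small illustration of that standard conversion, the sketch below checks the figures quoted in the excerpt; the function names are hypothetical and the code is not taken from the article.

```python
import math


def watts_to_dbm(power_w):
    """Convert power in watts to dBm (decibels relative to 1 milliwatt)."""
    if power_w <= 0.0:
        raise ValueError("power must be positive")
    return 10.0 * math.log10(power_w / 1e-3)


def dbm_to_watts(level_dbm):
    """Convert a level in dBm back to power in watts."""
    return 1e-3 * 10.0 ** (level_dbm / 10.0)


# Levels quoted in the excerpt: 20 mW, 0.1 nW and 100 W.
for label, watts in [("20 mW", 20e-3), ("0.1 nW", 0.1e-9), ("100 W", 100.0)]:
    print(label, round(watts_to_dbm(watts), 2), "dBm")
```

Because dBm is logarithmic, an attenuation in dB simply subtracts from the level in dBm: a 100 W (+50 dBm) signal after a 40 dB tap arrives at the meter at +10 dBm, below the roughly +13 dBm directly measurable limit the excerpt mentions.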

A History of Impedance Measurements

A History of Impedance Measurements by Henry P. Hall. Preface: Scope; Acknowledgements. Part I. The Early Experimenters 1775-1915: 1.1 Earliest Measurements, Dc Resistance; 1.2 Dc to Ac, Capacitance and Inductance Measurements; 1.3 An Abundance of Bridges; References, Part I. Part II. The First Commercial Instruments 1900-1945: 2.1 Comment: Putting it All Together; 2.2 Early Dc Bridges; 2.3 Other Early Dc Instruments; 2.4 Early Ac Bridges; 2.5 Other Early Ac Instruments; References Part II. Part III. Electronics Comes of Age 1946-1965: 3.1 Comment: The Post-War Boom; 3.2 General Purpose, “RLC” or “Universal” Bridges; 3.3 Dc Bridges; 3.4 Precision Ac Bridges: The Transformer Ratio-Arm Bridge; 3.5 RF Bridges; 3.6 Special Purpose Bridges; 3.7 Impedance Meters; 3.8 Impedance Comparators; 3.9 Electronics in Instruments; References Part III. Part IV. The Digital Era 1966-Present: 4.1 Comment: Measurements in the Digital Age; 4.2 Digital Dc Meters; 4.3 Ac Digital Meters; 4.4 Automatic Ac Bridges; 4.5 Computer-Bridge Systems; 4.6 Computers in Meters and Bridges; 4.7 Computing Impedance Meters; 4.8 Instruments in Use Today; 4.9 A Long Way from Ohm; References Part IV. Appendices: A. A Transformer Equivalent Circuit; B. LRC or Universal Bridges; C. Microprocessor-Base Impedance Meters. A HISTORY OF IMPEDANCE MEASUREMENTS PART I.