Project #1 Answers STAT 875 Spring 2015

Complete the following problems below. Within each part, include your R program output with code inside of it and any additional information needed to explain your answer. Your R code and output should be formatted in the exact same manner as in the lecture notes.

1) (11 total points) Exercise #3 parts (a)-(c). Use α = 0.05 for part (b). For extra credit, perform the same experiment yourself with Milk Chocolate Hershey’s Kisses and complete part (b) for your data. To prove you actually did the experiment, provide a YouTube link to a video of you performing it! Note that the web address for the paper cited in the problem is http://www.amstat.org/publications/jse/v10n3/haller.html.

a) (3 points) The intent of this problem was for you to go through the five characteristics listed on page 1.5 of the notes. A big assumption for this experiment is that the position of the Kisses in the cup does not affect the result. For example, do the Kisses interfere with each other as they are poured out of the cup? If so, this would violate the independence assumption. Also, there are 10 separate cups rather than 100 trials all at once, so there are actually 10 binomial distributions, each with 10 trials. One therefore needs to assume that there are no differences among the cups or in how they are poured onto the table.

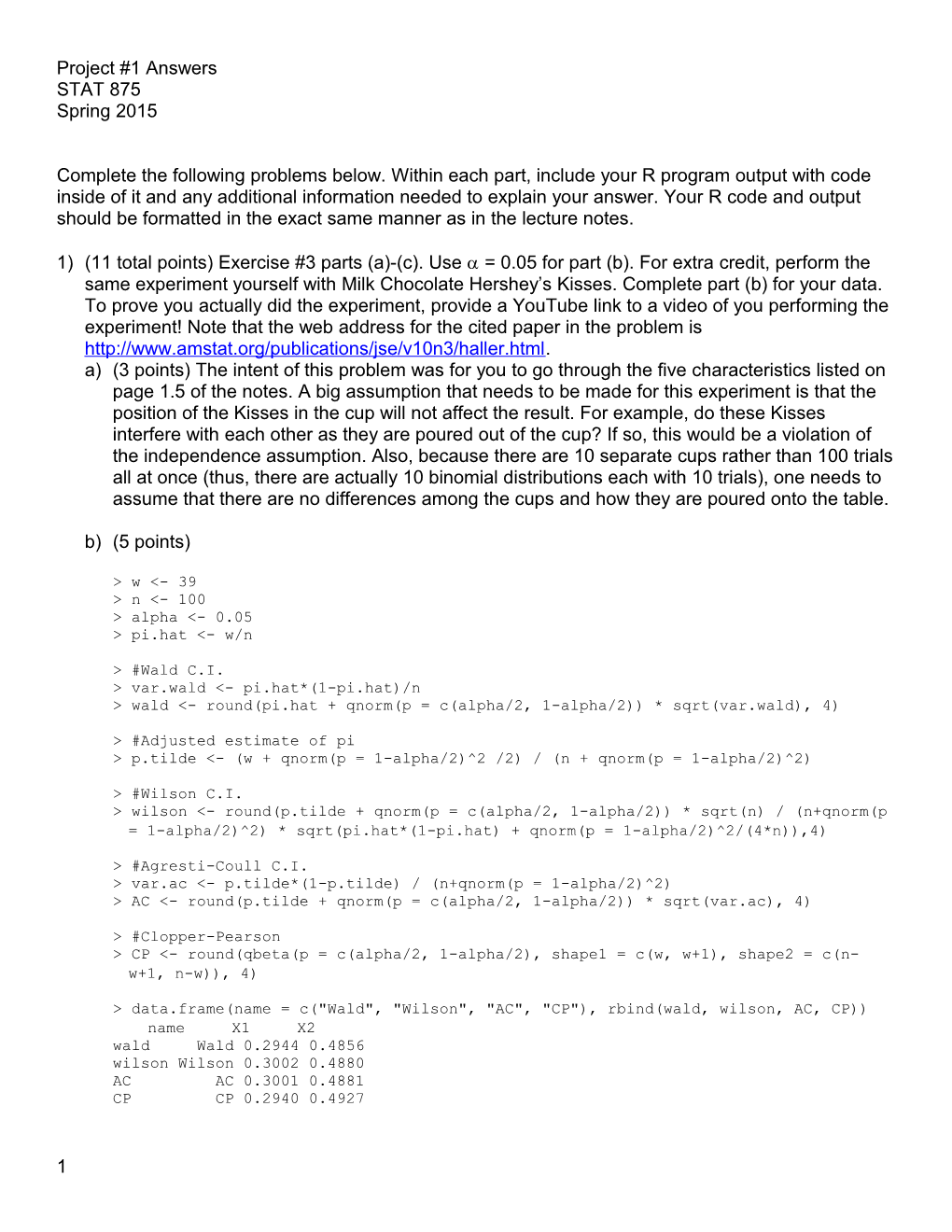
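The equal-cups assumption in part (a) can be made concrete with a short sketch. This is an illustration only and is not part of the original answer. If every cup shares the same probability, then the total count from 10 independent cups of 10 Kisses has exactly a Binomial(100, π) distribution. The common value pi.cup = 0.39 (the estimate from part (b)) and the helper conv.pmf() are assumptions chosen for illustration.

```r
# Sketch (illustration only): with a common probability pi.cup for every cup,
# the total over 10 cups of 10 Kisses is Binomial(100, pi.cup).
# conv.pmf() is a hypothetical helper, not a function from the notes.
conv.pmf <- function(p1, p2) {
  # pmf of X1 + X2 for independent X1, X2 supported on 0, 1, 2, ...
  out <- numeric(length(p1) + length(p2) - 1)
  for (i in seq_along(p1)) {
    idx <- i:(i + length(p2) - 1)
    out[idx] <- out[idx] + p1[i] * p2
  }
  out
}

pi.cup <- 0.39  # assumed common probability, for illustration
cup.pmf <- dbinom(x = 0:10, size = 10, prob = pi.cup)

# Convolve the 10 cup distributions one at a time
total.pmf <- cup.pmf
for (cup in 2:10) {
  total.pmf <- conv.pmf(total.pmf, cup.pmf)
}

# Largest difference from a single Binomial(100, pi.cup) pmf (essentially 0)
max(abs(total.pmf - dbinom(x = 0:100, size = 100, prob = pi.cup)))
```

If the cups had different probabilities, the convolved distribution would no longer be Binomial(100, π). This is why the no-differences-among-cups assumption matters.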

b) (5 points)

> w <- 39
> n <- 100
> alpha <- 0.05
> pi.hat <- w/n

> #Wald C.I.
> var.wald <- pi.hat*(1-pi.hat)/n
> wald <- round(pi.hat + qnorm(p = c(alpha/2, 1-alpha/2)) * sqrt(var.wald), 4)

> #Adjusted estimate of pi
> p.tilde <- (w + qnorm(p = 1-alpha/2)^2 /2) / (n + qnorm(p = 1-alpha/2)^2)

> #Wilson C.I.
> wilson <- round(p.tilde + qnorm(p = c(alpha/2, 1-alpha/2)) * sqrt(n) / (n+qnorm(p = 1-alpha/2)^2) * sqrt(pi.hat*(1-pi.hat) + qnorm(p = 1-alpha/2)^2/(4*n)), 4)

> #Agresti-Coull C.I.
> var.ac <- p.tilde*(1-p.tilde) / (n+qnorm(p = 1-alpha/2)^2)
> AC <- round(p.tilde + qnorm(p = c(alpha/2, 1-alpha/2)) * sqrt(var.ac), 4)

> #Clopper-Pearson
> CP <- round(qbeta(p = c(alpha/2, 1-alpha/2), shape1 = c(w, w+1), shape2 = c(n-w+1, n-w)), 4)

> data.frame(name = c("Wald", "Wilson", "AC", "CP"), rbind(wald, wilson, AC, CP))
         name     X1     X2
wald     Wald 0.2944 0.4856
wilson Wilson 0.3002 0.4880
AC         AC 0.3001 0.4881
CP         CP 0.2940 0.4927

The Wald interval is 0.29 < π < 0.49. We would expect 95% of all similarly constructed intervals to contain π. The same types of statements can be made regarding the other three intervals.
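As a cross-check that is not part of the original answer, base R can reproduce two of the rows above. prop.test() with correct = FALSE reports the Wilson score interval, and binom.test() reports the Clopper-Pearson interval. The sketch assumes the same w = 39 and n = 100.

```r
w <- 39
n <- 100
# Wilson (score) interval: prop.test() without the continuity correction
wilson.check <- prop.test(x = w, n = n, conf.level = 0.95, correct = FALSE)$conf.int
# Clopper-Pearson (exact) interval: binom.test()
cp.check <- binom.test(x = w, n = n, conf.level = 0.95)$conf.int
round(rbind(Wilson = as.numeric(wilson.check), CP = as.numeric(cp.check)), 4)
```

Both rows should agree with the Wilson and CP rows of the data frame above.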

c) (3 points) All except for the Wald interval are o.k. to use. Below are true confidence level plots demonstrating that generally the Agresti-Coull and Wilson intervals are close to the stated confidence level (I used ConfLevel4Intervals.R to construct the plot).

[Figure: True confidence level plots for the Wald, Agresti-Coull, Wilson, and Clopper-Pearson intervals; x-axis: π from 0 to 1; y-axis: true confidence level from 0.85 to 1.00]

The Wald interval actually is not too bad for values of π between 0.3 and 0.7. Given the calculated confidence intervals, I would expect π to be within the range where the Wald interval is relatively o.k. to use. This is likely why the Wald interval is similar to the other intervals here.
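A small sketch can back up this claim. It computes the Wald interval's true confidence level at a few values of π by summing the binomial probabilities of the outcomes whose interval contains π. It assumes n = 100 as in part (b). wald.true.conf() is a hypothetical helper, not the ConfLevel4Intervals.R program.

```r
# Hypothetical helper: true confidence level of the Wald interval at one pi
wald.true.conf <- function(pi, n, alpha = 0.05) {
  w <- 0:n
  pi.hat <- w/n
  pmf <- dbinom(x = w, size = n, prob = pi)
  z <- qnorm(p = 1-alpha/2)
  lower <- pi.hat - z*sqrt(pi.hat*(1-pi.hat)/n)
  upper <- pi.hat + z*sqrt(pi.hat*(1-pi.hat)/n)
  # Sum the probabilities of the outcomes whose interval contains pi
  sum(pmf[pi > lower & pi < upper])
}

pi.values <- c(0.02, 0.1, 0.3, 0.39, 0.5, 0.7)
round(sapply(pi.values, wald.true.conf, n = 100), 4)
```

Near π = 0.02 the level falls well below 0.95, partly because w = 0 gives the degenerate interval [0, 0]. Toward the middle of the range it is much closer to 0.95.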

2) (12 total points) Exercise #13. Do not use binom.plot() to complete the exercise. Hint: The true confidence level at π = 0.157 is 0.9448. I recommend verifying that you can obtain this value first BEFORE calculating ALL of the true confidence levels in part (b)!

a) (3 points)

> w <- 4
> n <- 10
> alpha <- 0.05
> pi.hat <- w/n
> library(binom)
> binom.confint(x = w, n = n, conf.level = 1-alpha, methods = "lrt")
  method x  n mean     lower     upper
1    lrt 4 10  0.4 0.1456425 0.7000216

The 95% LR interval is 0.1456 < π < 0.7000.
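binom.confint() does not show how the LR interval is found. Here is a minimal sketch, assuming 0 < w < n so that the log-likelihood is finite at the MLE w/n. The interval contains every π for which -2[log L(π) - log L(w/n)] does not exceed the 1 - α quantile of a chi-square distribution with 1 df, and uniroot() locates the two crossing points. lr.interval() is a hypothetical helper. The binom package may use a different internal algorithm, but the endpoints should agree with the output above to about four decimal places.

```r
# Hypothetical helper: likelihood-ratio interval for pi (assumes 0 < w < n)
lr.interval <- function(w, n, alpha = 0.05) {
  pi.hat <- w/n
  # Binomial log-likelihood without the constant choose(n, w) term
  loglik <- function(pi) w*log(pi) + (n-w)*log(1-pi)
  crit <- qchisq(p = 1-alpha, df = 1)
  # Negative inside the interval, zero at its endpoints
  f <- function(pi) -2*(loglik(pi) - loglik(pi.hat)) - crit
  lower <- uniroot(f, interval = c(1e-10, pi.hat), tol = 1e-10)$root
  upper <- uniroot(f, interval = c(pi.hat, 1-1e-10), tol = 1e-10)$root
  c(lower = lower, upper = upper)
}

ci <- lr.interval(w = 4, n = 10)
round(ci, 4)
```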

b) (6 points) I decided to re-run the true confidence level code also for the other four intervals.
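Each interval depends only on w, so the true confidence level at a given π is the sum, over w = 0, ..., n, of the binomial probabilities for the outcomes whose interval contains π. Below is a minimal vectorized sketch of that sum. It is not the code from the notes. It assumes n = 40 and α = 0.05, as in the code for this part, and true.conf() is a hypothetical helper name. It should reproduce the Wald and Wilson values at π = 0.157 in the output for this part (0.8759050 and 0.9506532).

```r
# Hypothetical helper: true confidence level at one pi, given the interval
# endpoints for every possible count w = 0, 1, ..., n
true.conf <- function(pi, n, lower, upper) {
  pmf <- dbinom(x = 0:n, size = n, prob = pi)
  sum(pmf[pi > lower & pi < upper])
}

alpha <- 0.05
n <- 40
w <- 0:n
pi.hat <- w/n
z <- qnorm(p = 1-alpha/2)

# Wald endpoints for every w
lower.wald <- pi.hat - z*sqrt(pi.hat*(1-pi.hat)/n)
upper.wald <- pi.hat + z*sqrt(pi.hat*(1-pi.hat)/n)

# Wilson endpoints for every w
p.tilde <- (w + z^2/2) / (n + z^2)
half.wilson <- z*sqrt(n)/(n + z^2) * sqrt(pi.hat*(1-pi.hat) + z^2/(4*n))
lower.wilson <- p.tilde - half.wilson
upper.wilson <- p.tilde + half.wilson

round(c(Wald = true.conf(0.157, n, lower.wald, upper.wald),
        Wilson = true.conf(0.157, n, lower.wilson, upper.wilson)), 7)
```

Passing the lower and upper columns from binom.confint(x = w, n = n, methods = "lrt") in the same way should give the 0.9448 from the hint.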

> #Verify true confidence level for pi = 0.157 is 0.9448
> alpha<-0.05
> n<-40
> pi<-0.157
> w<-0:n
> pi.hat<-w/n
> pmf<-dbinom(x = w, size = n, prob = pi)
> LRT.int<-binom.confint(x = w, n = n, conf.level = 1-alpha, methods = "lrt")
> save.LRT<-ifelse(test = pi>LRT.int$lower, yes = ifelse(test = pi<LRT.int$upper, yes = 1, no = 0), no = 0)
> sum(save.LRT*pmf)

> alpha<-0.05
> n<-40
> sum.y<-0:n
> pi.hat<-sum.y/n
> p.tilde<-(sum.y + qnorm(p = 1-alpha/2)^2 /2) / (n+qnorm(1-alpha/2)^2)
> pi.seq<-seq(from = 0.001, to = 0.999, by = 0.0005)

> save.true.conf<-matrix(data = NA, nrow = length(pi.seq), ncol = 6)

> #Create counter for the loop
> counter<-1

> #Loop over each pi that the true confidence level is calculated on
> for(pi in pi.seq) {
    pmf<-dbinom(x = sum.y, size = n, prob = pi)
    #Wald
    lower.wald<-pi.hat - qnorm(p = 1-alpha/2) * sqrt(pi.hat*(1-pi.hat)/n)
    upper.wald<-pi.hat + qnorm(p = 1-alpha/2) * sqrt(pi.hat*(1-pi.hat)/n)
    save.wald<-ifelse(test = pi>lower.wald, yes = ifelse(test = pi<upper.wald, yes = 1, no = 0), no = 0)
    #Agresti-Coull
    lower.AC<-p.tilde - qnorm(p = 1-alpha/2) * sqrt(p.tilde*(1-p.tilde) / (n+qnorm(1-alpha/2)^2))
    upper.AC<-p.tilde + qnorm(p = 1-alpha/2) * sqrt(p.tilde*(1-p.tilde) / (n+qnorm(1-alpha/2)^2))
    save.AC<-ifelse(test = pi>lower.AC, yes = ifelse(test = pi<upper.AC, yes = 1, no = 0), no = 0)
    #Wilson
    lower.wilson<-p.tilde - qnorm(p = 1-alpha/2) * sqrt(n) / (n+qnorm(1-alpha/2)^2) * sqrt(pi.hat*(1-pi.hat) + qnorm(1-alpha/2)^2/(4*n))
    upper.wilson<-p.tilde + qnorm(p = 1-alpha/2) * sqrt(n) / (n+qnorm(1-alpha/2)^2) * sqrt(pi.hat*(1-pi.hat) + qnorm(1-alpha/2)^2/(4*n))
    save.wilson<-ifelse(test = pi>lower.wilson, yes = ifelse(test = pi<upper.wilson, yes = 1, no = 0), no = 0)
    #Clopper-Pearson
    lower.CP<-qbeta(p = alpha/2, shape1 = sum.y, shape2 = n-sum.y+1)
    upper.CP<-qbeta(p = 1-alpha/2, shape1 = sum.y+1, shape2 = n-sum.y)
    save.CP<-ifelse(test = pi>lower.CP, yes = ifelse(test = pi<upper.CP, yes = 1, no = 0), no = 0)
    #LRT
    LRT.int<-binom.confint(x = sum.y, n = n, conf.level = 1-alpha, methods = "lrt")
    save.LRT<-ifelse(test = pi>LRT.int$lower, yes = ifelse(test = pi<LRT.int$upper, yes = 1, no = 0), no = 0)
    #Columns: pi, Wald, Agresti-Coull, Wilson, Clopper-Pearson, LR
    save.true.conf[counter,]<-c(pi, sum(save.wald*pmf), sum(save.AC*pmf), sum(save.wilson*pmf), sum(save.CP*pmf), sum(save.LRT*pmf))
    counter<-counter+1
  }
> save.true.conf[save.true.conf[,1] == 0.157,]
[1] 0.1570000 0.8759050 0.9506532 0.9506532
[5] 0.9739795 0.9447823
> #Plots
> x11(width = 10, height = 6, pointsize = 12)
> par(mfrow = c(2,3))
> plot(x = save.true.conf[,1], y = save.true.conf[,2], main = "Wald", xlab = expression(pi), ylab = "True confidence level", type = "l", ylim = c(0.85,1))
> abline(h = 1-alpha, lty = "dotted")
> plot(x = save.true.conf[,1], y = save.true.conf[,3], main = "Agresti-Coull", xlab = expression(pi), ylab = "True confidence level", type = "l", ylim = c(0.85,1))
> abline(h = 1-alpha, lty = "dotted")
> plot(x = save.true.conf[,1], y = save.true.conf[,6], main = "LR", xlab = expression(pi), ylab = "True confidence level", type = "l", ylim = c(0.85,1))
> abline(h = 1-alpha, lty = "dotted")
> plot(x = save.true.conf[,1], y = save.true.conf[,4], main = "Wilson", xlab = expression(pi), ylab = "True confidence level", type = "l", ylim = c(0.85,1))
> abline(h = 1-alpha, lty = "dotted")
> plot(x = save.true.conf[,1], y = save.true.conf[,5], main = "Clopper-Pearson", xlab = expression(pi), ylab = "True confidence level", type = "l", ylim = c(0.85,1))
> abline(h = 1-alpha, lty = "dotted")

[Figure: True confidence level vs. π (n = 40, α = 0.05) for the Wald, Agresti-Coull, LR, Wilson, and Clopper-Pearson intervals; y-axis from 0.85 to 1.00, dotted line at 0.95]

c) (3 points) The LR interval's true confidence level is similar to the Wilson interval's for values of π from approximately 0.2 to 0.8. Like the Wilson interval, it shows a large reduction in the true confidence level close to 0 and 1, but this reduction occurs farther away from 0 and 1 than it does for the Wilson interval. Very close to 0 and 1, the LR interval can be quite conservative, like the Agresti-Coull interval. Overall, the LR interval is a good interval (much better than the Wald), but the Wilson interval generally is a little better from 0 to 0.2 and from 0.8 to 1.

3) (18 points) Olestra is a fat substitute that was first used in the late 1990s. It is not used very often now due to gastrointestinal side effects that some people reported after consuming food with Olestra. The paper

Cheskin, L., Miday, R., Zorich, N. and Filloon, T. (1998). Gastrointestinal symptoms following consumption of Olestra or regular triglyceride potato chips: A controlled comparison. Journal of the American Medical Association 279(2), 150-152.

examined a controlled experiment to determine if side effects truly occurred. Below is a 2×2 contingency table summarizing some of their results:

            Side effects   No side effects
Olestra               89               474
Regular               93               436

Using this data, complete the following:

a) (3 points) Examine the paper. Describe the sample used for the study and the intended population.
What assumptions are needed to make this sample representative of the intended population?

The sample included 1,123 individuals aged 13 to 88 in the Chicago area. Because some people did not respond to follow-up questions, a total of 1,092 individuals were actually included in the statistical analysis. The intended population would be everyone in the US, or maybe even in the world! We need to assume that those who volunteered were truly representative of the population. For example, only people from the Chicago area participated, so we need to assume they are representative of the larger population.

b) (9 points) Will Olestra cause side effects for people in the population? Use a Pearson chi-square test for independence, a relative risk, and an odds ratio to answer this question. While normally only one of these would be needed in practice, I want you to examine all three so that I can assess your understanding of these measures. Make sure to FULLY interpret all results! This includes making statements such as “The odds of a …” and “The probability of a …” as shown in the notes.

b.i) Pearson chi-square test: H0: π1 = π2 vs. Ha: π1 ≠ π2; X² = 0.62, p-value = 0.43. Because 0.43 > 0.05, do not reject H0. There is not sufficient evidence to indicate that Olestra causes side effects.

> c.table<-array(data = c(89, 93, 474, 436), dim = c(2,2), dimnames = list(Treatment = c("Olestra", "Regular"), Response = c("Side effects", "No Side effects")))
> c.table
         Response
Treatment Side effects No Side effects
  Olestra           89             474
  Regular           93             436
> chisq.test(x = c.table, correct = FALSE)

        Pearson's Chi-squared test

data:  c.table
X-squared = 0.6167, df = 1, p-value = 0.4323

b.ii) Relative risk: estimated RR = 0.90. The 95% confidence interval for RR is 0.69 < RR < 1.17. With 95% confidence, the probability of having side effects is between 0.69 and 1.17 times as large in the Olestra group as in the Regular group.
Because 1 is within the interval, there is not sufficient evidence to indicate that Olestra causes side effects.

> pi.hat.table<-c.table/rowSums(c.table)
> pi.hat1<-pi.hat.table[1,1]
> pi.hat2<-pi.hat.table[2,1]
> alpha<-0.05
> n1<-sum(c.table[1,])
> n2<-sum(c.table[2,])
> round(pi.hat1/pi.hat2, 2)
[1] 0.9
> # Wald confidence interval
> ci<-exp(log(pi.hat1/pi.hat2) + qnorm(p = c(alpha/2, 1-alpha/2)) * sqrt((1-pi.hat1)/(n1*pi.hat1) + (1-pi.hat2)/(n2*pi.hat2)))
> round(ci, 4)
[1] 0.6897 1.1724
> rev(round(1/ci, 4)) # inverted
[1] 0.853 1.450

b.iii) Odds ratio: estimated OR = 0.88. The 95% confidence interval for OR is 0.64 < OR < 1.21. With 95% confidence, the odds of having side effects are between 0.64 and 1.21 times as large in the Olestra group as in the Regular group. Because 1 is within the interval, there is not sufficient evidence to indicate that Olestra causes side effects.

> OR.hat<-c.table[1,1]*c.table[2,2] / (c.table[2,1]*c.table[1,2])
> round(OR.hat, 2)
[1] 0.88
> round(1/OR.hat, 2)
[1] 1.14
> alpha<-0.05
> var.log.or<-1/c.table[1,1] + 1/c.table[1,2] + 1/c.table[2,1] + 1/c.table[2,2]
> OR.CI<-exp(log(OR.hat) + qnorm(p = c(alpha/2, 1-alpha/2)) * sqrt(var.log.or))
> round(OR.CI, 4)
[1] 0.6402 1.2103
> rev(round(1/OR.CI, 2))
[1] 0.83 1.56

c) (3 points) Answer the question below that corresponds to your answer from part b):

c.i) Olestra causes side effects: Would you recommend that people eat food with Olestra? Explain.

This is not the correct answer!

c.ii) There is not sufficient evidence that Olestra causes side effects: The introduction of the problem said that Olestra is not often used in foods now due to side effects that some people reported. What could be a STATISTICAL reason why your conclusion is not the same?

Possible reasons include:
1) Not enough power (thus, a type II error)
2) There were a number of other factors in the study, such as the amount consumed, that are not included when looking only at the responses in the 2×2 table format.
These other factors could be contributing to the “do not reject H0” results. While not a statistical reason, it is interesting to note that p. 152 indicates that Procter & Gamble, the company that made Olestra at the time, funded the study.

d) (3 points) The news media will often report on results from medical research when it is first published. Suppose this paper on Olestra was just published. Write a one-paragraph report about the study that is at a level appropriate for the Lincoln Journal-Star. Note that this means that you cannot assume someone has had any statistics courses! Focus only on the data analyzed for this problem.

Recent research published in the Journal of the American Medical Association reports that Olestra, the new fat substitute developed by Procter & Gamble, does not show signs of causing gastrointestinal side effects. The study found that 89 of 563 individuals who ate potato chips with Olestra reported side effects, while 93 of 529 individuals who ate potato chips without Olestra reported side effects. While the probability of having side effects was actually 10% lower for the Olestra group, there was not sufficient evidence to indicate that either group would have more or fewer side effects in the general population. Despite anecdotal reports by some individuals that gastrointestinal side effects occur after eating food containing Olestra, there still is not conclusive evidence that this truly occurs.
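As a companion to part (b), here is a minimal sketch that recomputes the Pearson statistic directly from the observed and expected counts, without chisq.test(), alongside the estimated relative risk and odds ratio. It is an illustration using the same 2×2 table and is not code from the notes. The object names expected, X2, RR.hat, and OR.hat are chosen for this sketch.

```r
c.table <- array(data = c(89, 93, 474, 436), dim = c(2,2),
  dimnames = list(Treatment = c("Olestra", "Regular"),
                  Response = c("Side effects", "No side effects")))

# Expected counts under independence: (row total * column total) / grand total
expected <- outer(rowSums(c.table), colSums(c.table)) / sum(c.table)
X2 <- sum((c.table - expected)^2 / expected)
p.value <- pchisq(q = X2, df = 1, lower.tail = FALSE)

# Estimated probability of side effects in each group
pi.hat1 <- c.table[1,1] / sum(c.table[1,])
pi.hat2 <- c.table[2,1] / sum(c.table[2,])
RR.hat <- pi.hat1 / pi.hat2
OR.hat <- (c.table[1,1]*c.table[2,2]) / (c.table[1,2]*c.table[2,1])

round(c(X2 = X2, p.value = p.value, RR = RR.hat, OR = OR.hat), 4)
```

X2 and p.value should match chisq.test(x = c.table, correct = FALSE). An RR below 1 reflects the lower observed proportion with side effects in the Olestra group (89/563 vs. 93/529).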