MEDIUM SIZE MOBILE ROBOT FOR VISITOR GUIDANCE

Philip Norton MEng Cybernetics, [email protected]

ABSTRACT The initial brief of the project is to create a mobile robot which will meet visitors in the department foyer, This paper documents the research, design, and interact with them, and then guide them around the implementation of a mobile robot used as a guide for department; providing relevant information during the visitors to the department. The report begins by outlining tour. the problem, and the initial approaches to solving them. The project is conducted as it would be in industry. Then it looks at the issues of distributed processing, There is the design team of five students, who have intelligent exploration and navigation, sensing, control different administrative and research responsibilities, a and user interaction. Finally, the future developments consultancy team of staff, and a client who has provided and implementations are investigated, and the overall a functional specification and a budget. success of the project evaluated. There are few examples of mobile robots currently being used in this type of application. Rhino [1], Minerva [2] and Sage [3] are robots from Bonn University, which are designed to serve as guides for museums. However, no reference has been found about any robot guiding at university open days, and this is what is addressed in this paper.

2. INITIAL APPROACHES

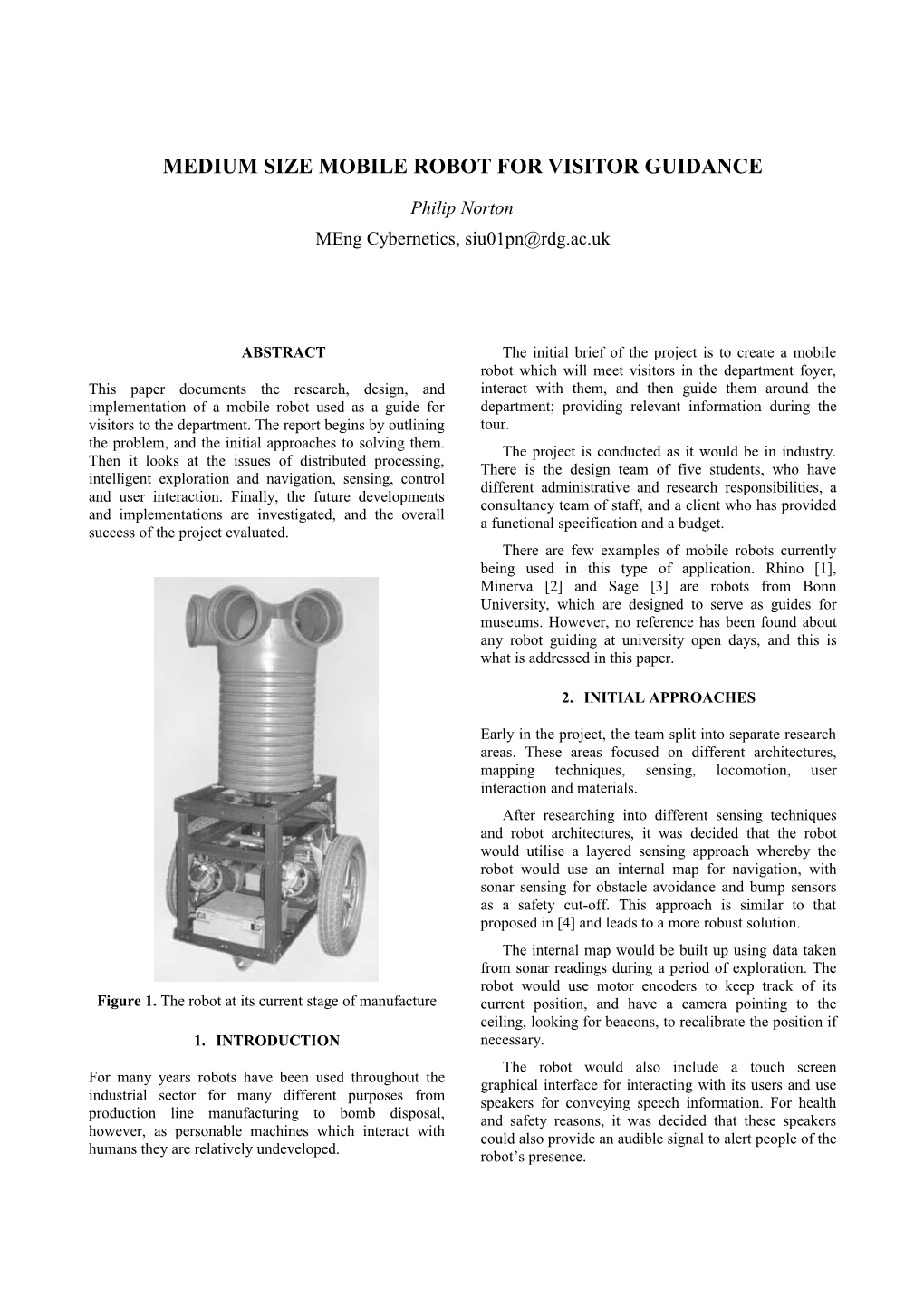

Early in the project, the team split into separate research areas. These areas focused on different architectures, mapping techniques, sensing, locomotion, user interaction and materials. After researching into different sensing techniques and robot architectures, it was decided that the robot would utilise a layered sensing approach whereby the robot would use an internal map for navigation, with sonar sensing for obstacle avoidance and bump sensors as a safety cut-off. This approach is similar to that proposed in [4] and leads to a more robust solution. The internal map would be built up using data taken from sonar readings during a period of exploration. The robot would use motor encoders to keep track of its Figure 1. The robot at its current stage of manufacture current position, and have a camera pointing to the ceiling, looking for beacons, to recalibrate the position if 1. INTRODUCTION necessary. The robot would also include a touch screen For many years robots have been used throughout the graphical interface for interacting with its users and use industrial sector for many different purposes from speakers for conveying speech information. For health production line manufacturing to bomb disposal, and safety reasons, it was decided that these speakers however, as personable machines which interact with could also provide an audible signal to alert people of the humans they are relatively undeveloped. robot’s presence. It was decided that by using distributed, parallel In 9 the concept of frontier based exploration is processing around the robot, the computational costs discussed, for application in cluttered workspaces. Using would be reduced, the speed of processing would be this approach the robot moves to the boundary between increased, and a generally more robust system would open space and uncharted territory, and then moves result. 
This means that the sonar boards, motor boards, along it – therefore charting the area, and pushing bump sensor board, and the computer parts all process forward the frontier. This technique should find a their own data, and only communicate meaningful complete or nearly complete map in a reasonable amount information. Using this approach also separates the of time. project into smaller, less complicated modules for the If the robot is then moved to a new location, or the team to work on. layout of the rooms change, the old map can be erased, and the robot can embark on a new exploration. After 3. DISTRIBUTED PROCESSING creation, the map can then be dynamically updated during each tour to keep it constantly up to date. Controller Area Network (CAN) is a network that was originally designed for the automotive industry, and has 4.2. Map Structure become a popular bus in industrial automation as well as The map is composed of a grid of cells which hold other applications. It is a robust and reliable high-speed values determining the danger of travelling to each network system; which makes it suitable for this adjacent cell. If the robot detects an obstacle in its path application. Due to the high speed of the network it is then it will increase the danger value in the relevant possible to carry out real time control between nodes. direction. If however, at a later time, the robot does not detect an obstacle in that cell and direction, it will decrease the value. This leads to a dynamically changing map which reduces the problem of temporary obstacles such as stools and people.

5. SENSING, ODOMETRY AND CONTROL

5.1. Sensors The robot is using multiple levels of sensing to give it as Figure 2. How the robot components are linked using much real world information as possible. Sonar is used the Canbus for proximity sensing, with sensors at ground level and Using this approach, all the sonar boards, motor top level, facing in different directions. The sonar gives boards and the bump sensor board can process data accurate distance information for the mapping software locally using PICs. This means the robot can process a to record. If the sonar sensors miss an object then bump great deal of data very quickly, and each component only sensors positioned around the robot base should trigger – needs to communicate the information which is of immediately cutting off power to the motors. interest. Also, a computer can be connected to the network via a CAN-bridge, therefore integrating the lower level sensing and actuation with the higher level mapping and interface software. Using a filtering system, each node only considers messages which are meant for it. An example of how it is used in the robot would be when a collision is made. The bump sensor PIC would communicate this to the motor drivers and the computer. Therefore, the motors immediately cut out and reverse, and the computer adjusts the map. (a) (b) Figure 3. Shows positions of sonar sensors and bump 4. MAPPING sensors on the (a) base and (b) top level 5.2. Odometry 4.1. Frontier based exploration for map making Shaft encoders attached to the motors are used to As the robot could be used in museums whose layout is monitor the motor position, and angular velocity. These constantly changing, it is important that it can easily values can then be used to control the motor and provide adapt to new environments. Therefore hard coding a map current location and bearing information to the mapping into the memory is not suitable, and some form of software. 
Although these encoders have a high level of exploration strategy is necessary. accuracy there are still errors between where the robot considers itself to be and where it actually is, therefore Ki some further system of recalibration is needed. G( s ) = K + + K s ps d 5.3. Beacons K= 0.6 K A system of beacon relocation is required to reduce the p u problem of errors in robot positioning and orientation. 2T K The robot has an upward facing camera looking for K = z p beacons on the ceiling. Each beacon is individually i Pu colour coded and directional. When the robot spots a beacon it can then process the colours, and recalibrate Pu K p both position and direction. Kd = 8Tz The PID coefficients are important as they provide the control for the motors. The proportional gain gives the system stability, the integral gain help to reduce any steady state error, and the differential gain improves transient response. By tuning these values, the robot will move to the desired point faster and without error.

Figure 4. Beacon coding Each beacon follows a colour code which can be easily understood. The outer ring is coloured red, so that the camera can easily identify them, the horizontal line indicates east, and the three colour areas can be colour coded to allow for unique identification. As there is very little space for the robot to navigate through doors, special beacons are used to help. These beacons have the horizontal line pointing through the door, which the robot can then follow. Beacons are also positioned in key places to inform the robot of an information point. At these points the robot can give information to the users, much like a human guide would do. Figure 6. Carrying out the Zeigler-Nichols tuning procedure 5.5. Wireless Access The robot includes wireless networking capabilities, which can be used to remotely control the robot, update (a) (b) programs, and extract information from the robot such as user information, current position or battery level. This Figure 5. Shows the beacon identification software wireless access is crucial to the final developments of the processing a beacon (a) before (b) after robot as it allows total manipulation of the robot 5.4. Motor control software remotely. Using the CAN-bridge connection, all of the sonar, motor driver and bump sensor programs Much of the motor control software was already written can also be altered remotely. prior to the project and so does not need to be altered. However, as a cost cutting measure the motors used for In a multi agent environment such as a museum this robot were salvaged from a second hand electric where there could be many such guide robots, this wheelchair. Therefore the PID control coefficients differ wireless access could be vital in keeping track of current from the standard ones set in the motor control software. positions and tour progress.

The method used for tuning the closed loop system 6. HUMAN INTERACTION was the Zeigler-Nichols procedure [6] where the system proportional-only gain is increased until the system 6.1. User Interface becomes marginally stable. The gain Ku and oscillation period Pu at this point are then used to calculate the PID The overall aim of the project is to create a robot which control coefficients, where Tz is the sampling period. can guide humans. To do this it must be engaging and make the user want to follow it. It must provide interesting information at all stages of the tour, be able to interact with humans, and take information from the user which may be of use for market research purposes. The nature of the application means that it will be used for short-term spontaneous interactions, and therefore the user must not need any prior training to use it [7]. The most user friendly method of human-machine interaction currently available is the touch screen, and for this reason it is used here. Interface navigation is very important; it must be intuitive and users of all levels must be able to easily understand what to do, and how to interact with it. In 8 many techniques are given to maximise user friendliness and the point is made that ‘a fundamental reality of application development is that (a) (b) the user interface is the system to the users’ - if a visitor cannot navigate the user interface then the robot Figure 7. CAD drawings (a) ride-able concept design, becomes useless. (b) final shell design Interactive robots can communicate more efficiently Figure 7b shows the final shell design, it is very with humans when they have human like characteristics functional, with a low centre of gravity, almost centrally themselves [7]. For this reason, human voice recordings placed drive wheels and two support casters. This are used throughout the tour, by asking questions and configuration was used to allow the robot to turn on narrating video clips at interest points. 
itself easily, and because it would simplify the mapping and route planning. The sonar positioning was chosen to It is also important that the interface be fully maximise the field of sensing on both levels. customisable for different situations; through some administration software. For instance, in a museum The majority of weight is kept to the bottom of the questions such as ‘which degree course are you most shell. The base structure holds batteries, motors and most interested in’ are not relevant. The software is totally of the electrical components. It is constructed using customisable through a series of text files which stipulate welded steel angle, with an aluminium casing. The upper what beacon codes stand for, which question need to be section houses the screen, speakers, and sonar asked, which information needs to be presented, and in components, and is constructed using plastic tubing. which order the tour should be given. These tour During the course of manufacture, a trade off was customising files can then be modified easily using the made between the strength and weight of the robot. As administration software. large wheelchair motors are used for locomotion, it was Interaction aids interest, if humans do not feel like necessary for a strong structure to be used. This then they have any control over the robot then they will pushed the robot to its maximum weight allowance and quickly lose interest in both the robot and the tour. The many health and safety issues arose. As this robot is an robot engages the visitor by asking them questions about early prototype, the client agreed to increase the weight themselves and allows them to ask for more information specification, however, for future development it should when they want. This enables the robot to maintain the be considered. user’s interest and improve the standard of the tour. 7. FUTURE DEVELOPMENT 6.2. 
Robot Shell Initially a visitor will see the robot stationary, waiting for This project has many aspects which could be developed use. It is important that the visitor can immediately in future years. Greater sensing capabilities could be recognise that it is a robot and that it should be used for added, possibly with the addition of laser guided sonar guidance. It is also important that the robot has some [9], which would greatly enhance the accuracy of form of personality associated with it, which will appeal sensing, whilst retaining the benefits of sonar. The to users and attract attention. present system of collision detection is not very robust, and could be greatly improved by 360° protection using During the design phase the shell concept drawings a more reliable technique. centred on the idea of a ride-able robot (figure 7a), which would drive the visitor around whilst giving the A more sophisticated method for implementing robot tour. However, after further research this idea had to be navigation, involving the use of a neural network [10] abandoned due to health and safety issues and the project could be implemented. This would improve the ability of time-frame constraints. the robot to recognise features, and learn from experience. Also, by involving an element of intelligence to the robot, the future development possibilities become far greater. It would also be interesting to further develop the [2] S. Thrun, M. Bennewitz, W. Burgard, F. Dellaert, character of the robot. By incorporating a kinetic face, D. Fox, D. Haehnel, C. Rosenberg, N. Roy, J. which could display emotions, and respond to user Schulte, and D. Schulz. MINERVA: A second actions, a new level of human interaction would result. generation mobile tour-guide robot., Proc. Many current research projects are looking into this field ICRA’99, May, 1999. [11] and for this type of application, it is ideally suited. Also, speech recognition as a form of user input would [3] I. Nourbakhsh, J. 
Bobenage, S. Grange, R. Lutz, R. greatly improve the human-machine interaction. Meyer, A. Soto. An Affective Mobile Educator with a Full-time Job., Artificial Intelligence, Vol. 114, 8. CONCLUSIONS No. 1 - 2, Oct 1999. [4] Brooks, Rodney A., A Robust Layered Control The project incorporates a wide ranging field of research System for a Mobile Robot, IEEE Journal of and having a team working on it was crucial to the Robotics and Automation vol. RA-2, no. 1, pp. 14- progress. The approach adopted by the team gave the 23, Apr 1986. robot a good level of robustness and adaptability which is crucial in its intended environment. Many new ideas [5] B. Yamauchi, "A frontier-based approach for were utilised in the robot and many problems were autonomous exploration," in Proc. Int. Syrup. on overcome. Computational Intelligence in Robotics and Automation, 1997, pp. 146 151 The robot has three levels of sensing capability, can interact with humans, and can map and navigate an [6] J. G. Ziegler and N. B. Nichols, “Optimum Setting environment. Although some problems with weight for Automatic Controllers,” Trans. ASME,Vol. 64, limitations were encountered during the project, the 759-768, Nov. 1942 specification given by the client has been successfully [7] J. Schulte, C. Rosenberg, and S. Thrun, met. "Spontaneous Short-term Interaction with Mobile The report documents the overall project, and goes Robots in Public Places," 1999 into more depth in sections where I have had particular [8] Ambler, S.W., “The Object Primer 2nd Edition: The involvement. It is written as an introduction to the robot, Application Developer’s Guide to Object and a full talk based on the research will be given at the Orientation,” New York: Cambridge University Scarp Conference. Press, 2001 Acknowledgement: Many people were helpful in the [9] Gregory Dudek, Paul Freedman, and Ioannis M. design and implementation of this robot. Ben Hutt and Rekleitis. 
Just-in-time sensing: efficiently Ian Goodhew have provided useful assistance at all combining sonar and laser range data for exploring stages of the project, and Geoff Pearce has been unknown worlds. In Proc. IEEE Conf. on Robotics instrumental as overall administrator. All the team and Automation, 1996, pages 667--672, April 1996. members also deserve acknowledgement, they are Alex McMahon, David Devereoux, Sean Moran and [10] Puneet Goel and G.S. Sukhatme, "Sonar-based Christopher Kershaw. Feature Recognition and Robot Navigation Using a Neural Network", IEEE/RSJ International 9. REFERENCES Conference on Intelligent Robots and Systems, 2000 [1] W. Burgard, A.B. Cremers, D. Fox, D. Hahnel, G. [11] Breazeal C. and Scassellati, How to Build Robots Lakemeyer, D. Schulz, W. Steiner, and S. Thrun. that Make Friends and Influence People, In Proc. Of The interactive museum tour-guide robot., 15th IROS-99 Kyonju, Korea National Conference on Artificial Intelligence, Madison, Wisconsin, July 1998.
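The frontier based exploration of Section 4.1 relies on finding the boundary between open space and uncharted territory. The sketch below illustrates one way this could be done on a grid. It is not the robot's software: the three cell states (`UNKNOWN`, `FREE`, `OCCUPIED`), the function name `find_frontier_cells` and the 4-neighbour rule are all assumptions made for illustration.

```python
from typing import List, Tuple

# Hypothetical cell states; the robot's real map stores danger values instead.
UNKNOWN, FREE, OCCUPIED = -1, 0, 1


def find_frontier_cells(grid: List[List[int]]) -> List[Tuple[int, int]]:
    """Return (row, col) of every free cell that touches an unknown cell."""
    rows = len(grid)
    cols = len(grid[0]) if rows else 0
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            # A free cell is a frontier if any 4-neighbour is still uncharted.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers
```

An exploring robot would repeatedly drive to one of the returned cells, take new sonar readings, and recompute the list until it is empty.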
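The map structure of Section 4.2, in which each cell holds a danger value per direction that rises when an obstacle is seen and falls when it is not, can be sketched as follows. The class names, the four compass directions, the step size and the clamping limits are assumptions for illustration; the paper does not give these details.

```python
from dataclasses import dataclass, field
from typing import Dict

DIRECTIONS = ("N", "E", "S", "W")  # assumed: four neighbours per cell


@dataclass
class Cell:
    # Danger of travelling from this cell towards each adjacent cell.
    danger: Dict[str, int] = field(
        default_factory=lambda: {d: 0 for d in DIRECTIONS}
    )


class DangerMap:
    def __init__(self, width: int, height: int, step: int = 1, max_danger: int = 10):
        self.cells = [[Cell() for _ in range(width)] for _ in range(height)]
        self.step = step              # assumed increment per observation
        self.max_danger = max_danger  # assumed upper clamp

    def observe(self, x: int, y: int, direction: str, obstacle_seen: bool) -> int:
        """Raise the danger value if an obstacle was seen, otherwise lower it."""
        cell = self.cells[y][x]
        delta = self.step if obstacle_seen else -self.step
        value = cell.danger[direction] + delta
        cell.danger[direction] = max(0, min(self.max_danger, value))
        return cell.danger[direction]
```

Because the value decays whenever the obstacle is no longer detected, a stool or a person standing in a corridor would only raise the danger of that cell temporarily.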
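The Ziegler-Nichols formulas of Section 5.4 can be turned directly into code. The sketch below computes the three coefficients from the measured Ku, Pu and the sampling period Tz, and then uses them in a simple discrete PID update. The function and class names are hypothetical, and the update rule (a running error sum and a backward difference) is an assumed discretisation, not the robot's actual motor control software.

```python
from typing import Tuple


def ziegler_nichols_pid(ku: float, pu: float, tz: float) -> Tuple[float, float, float]:
    """Return (Kp, Ki, Kd) from ultimate gain Ku, period Pu and sample time Tz."""
    if ku <= 0 or pu <= 0 or tz <= 0:
        raise ValueError("ku, pu and tz must all be positive")
    kp = 0.6 * ku
    ki = 2.0 * tz * kp / pu
    kd = pu * kp / (8.0 * tz)
    return kp, ki, kd


class DiscretePID:
    """Assumed discrete form: u = Kp*e + Ki*sum(e) + Kd*(e - e_prev)."""

    def __init__(self, kp: float, ki: float, kd: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.error_sum = 0.0
        self.prev_error = 0.0

    def step(self, error: float) -> float:
        # Ki and Kd already include the sampling period, so no dt appears here.
        self.error_sum += error
        derivative = error - self.prev_error
        self.prev_error = error
        return self.kp * error + self.ki * self.error_sum + self.kd * derivative
```

For example, a measured Ku of 10, Pu of 0.5 s and Tz of 0.01 s gives Kp = 6, Ki = 0.24 and Kd = 37.5, which could then be passed to `DiscretePID`.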