Estimating the Resilience of Networks Against Zero Day Attacks

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

15 Essential Open Source Tools for Windows Admins – Raj Kumar Maurya

1: Wireshark. Wireshark is used for network troubleshooting, analysis, software and communications protocol development, and education. Wireshark is cross-platform, using the Qt widget toolkit in current releases to implement its user interface and pcap to capture packets; it runs on GNU/Linux, OS X, BSD, Solaris, some other Unix-like operating systems, and Microsoft Windows. 2: AMANDA. AMANDA, the Advanced Maryland Automatic Network Disk Archiver, is a backup solution that allows the IT administrator to set up a single master backup server to back up multiple hosts over the network to tape drives/changers, disks, or optical media. Amanda uses native utilities and formats (e.g. dump and/or GNU tar) and can back up a large number of servers and workstations running multiple versions of Linux or Unix. Amanda is a fully integrated business system which contains everything from customer details, offer, order, production, logistics, project, economy, salary to market and personal planning. 3: MailArchiva. MailArchiva is a powerful, full-featured email archiving and compliance solution for mail systems such as Microsoft Exchange. It stores all incoming, outgoing and internal emails for long-term storage. MailArchiva is dual-licensed e-mail archiving software, with a free Open Source Edition and a paid Enterprise Edition. It works with various operating systems and mail server environments and, being multilingual, is used in many countries and regions. 4: Exchange 2010 RBAC Manager. Exchange 2010 RBAC Manager is a tool for admins working with role-based access control and Exchange. (PCQuest, February 2017, page 46, pcquest.com)

Defraggler Windows 10 Download Free - Reviews and Testimonials

It's great to hear that so many people have found Defraggler to be the best defrag tool available. Here's what people are saying in the media: "Defraggler is easy to understand and performs its job well. If you want to improve computer performance, this is a great place to start." Read the full review. LifeHacker. "Freeware file defragmentation utility Defraggler analyzes your hard drive for fragmented files and can selectively defrag the ones you choose. The graphical interface is darn sweet." Read the full review. PC World. "Defraggler will show you all your fragmented files. You can click one to see where on the disk its various pieces lie, or defragment just that one. This can be useful when dealing with very large, performance-critical files such as databases. Piriform Defraggler is free, fast, marginally more interesting to watch than the default, and has useful additional features. What's not to like?" Read the full review. Features: Most defrag tools only allow you to defrag an entire drive. Defraggler lets you specify one or more files, folders, or the whole drive to defragment. Safe and secure: When Defraggler reads or writes a file, it uses the exact same techniques that Windows uses. Using Defraggler is just as safe for your files as using Windows. Compact and portable: Defraggler's tough on your files and light on your system. Interactive drive map: At a glance, you can see how fragmented your hard drive is. Defraggler's drive map shows you blocks that are empty, not fragmented, or needing defragmentation.

Principles and Software: A Reminder of How a Disk Works

http://www.clubic.com/article-92386-1-guide-defragmentation-principe-logiciels.html Contents: Defragmentation: principles and software; A reminder of how a hard drive works; The file system, or how your data is organized; When fragmentation occurs; ... you need to defragment!; The most frequent question: when?; Defragmentation under Windows; What about Windows Vista?; The long list of paid software

UltraDefrag Handbook Enterprise Edition

UltraDefrag Handbook 9.0.0 Enterprise Edition. Chapter 1: UltraDefrag Handbook. Authors: Stefan Pendl, Dmitri Arkhangelski. Table of Contents: 1. Introduction 2. Installation 3. Graphical Interface 4. Console Interface 5. Boot Time Defragmentation 6. Automatic Defragmentation 7. File Fragmentation Reports 8. Tips and Tricks 9. Frequently Asked Questions 10. Troubleshooting 11. Translation 12. Development 13. Credits and License. License: UltraDefrag is licensed under the terms of the End User License Agreement. This documentation is licensed under the terms of the GNU Free Documentation License. Chapter 2: Introduction. UltraDefrag is a powerful disk defragmenter for Windows. It can quickly boost the performance of your computer and is easy to use. It can also defragment your disks automatically, so you won't need to take care of that yourself. UltraDefrag has the following features: • automatic defragmentation • fast and efficient defragmentation algorithms • safe environment preventing file corruption • detailed file fragmentation reports • defragmentation of individual files/folders • defragmentation of locked system files • defragmentation of NTFS metafiles (including MFT) and streams • exclusion of files by path, size and number of fragments • fully configurable disk optimization • disk processing time limit • defragmentation of disks having the specified fragmentation level • automatic hibernation or shutdown after the job completion • multilingual graphical interface (over 60 languages available) • one-click defragmentation via Windows Explorer's context menu • powerful command line interface • easy-to-use portable edition • full support of 64-bit editions of Windows. UltraDefrag can defragment both FAT and NTFS disks with just a couple of restrictions: • It cannot defragment FAT directories, because their first clusters are immovable.

Discovering Program Topoi Carlo Ieva

Unveiling source code latent knowledge: discovering program topoi. Carlo Ieva. To cite this version: Carlo Ieva. Unveiling source code latent knowledge: discovering program topoi. Programming Languages [cs.PL]. Université Montpellier, 2018. English. NNT: 2018MONTS024. tel-01974511. HAL Id: tel-01974511, https://tel.archives-ouvertes.fr/tel-01974511. Submitted on 8 Jan 2019. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Thesis submitted for the degree of Doctor of the Université de Montpellier in Computer Science. Doctoral school: Information, Structures, Systèmes. Research unit: LIRMM. Unveiling Source Code Latent Knowledge: Discovering Program Topoi. Presented by Carlo Ieva on 23 November 2018, under the supervision of Prof. Souhila Kaci, before a jury composed of: Michel Rueher, Professor, Université Côte d’Azur, I3S, Nice (jury president); Yves Le Traon, Professor, Université du Luxembourg, Luxembourg (reviewer); Lakhdar Sais, Professor, Université d’Artois, CRIL, Lens (reviewer)

Freeware-List.Pdf

FreeWare List. A list of free software from www.neowin.net, a great forum with a large number of members! It is full of information, and questions posted are normally answered very quickly.
3D Graphics: 3DVia - http://www.3dvia.com...re/3dvia-shape/ Anim8or - http://www.anim8or.com/ Art Of Illusion - http://www.artofillusion.org/ Blender - http://www.blender3d.org/ CreaToon - http://www.creatoon.com/index.php DAZ Studio - http://www.daz3d.com/program/studio/ Freestyle - http://freestyle.sourceforge.net/ Gelato - http://www.nvidia.co...ge/gz_home.html K-3D - http://www.k-3d.org/wiki/Main_Page Kerkythea - http://www.kerkythea...oomla/index.php Now3D - http://digilander.li...ng/homepage.htm OpenFX - http://www.openfx.org OpenStages - http://www.openstages.co.uk/ Pointshop 3D - http://graphics.ethz...loadPS3D20.html POV-Ray - http://www.povray.org/ SketchUp - http://sketchup.google.com/ Sweet Home 3D - http://sweethome3d.sourceforge.net/ Toxic - http://www.toxicengine.org/ Wings 3D - http://www.wings3d.com/
Anti-Virus: a-squared - http://www.emsisoft..../software/free/ Avast - http://www.avast.com...ast_4_home.html AVG - http://free.grisoft.com/ Avira AntiVir - http://www.free-av.com/ BitDefender - http://www.softpedia...e-Edition.shtml ClamWin - http://www.clamwin.com/ Microsoft Security Essentials - http://www.microsoft...ity_essentials/
Anti-Spyware: Ad-aware SE Personal - http://www.lavasoft....se_personal.php GeSWall - http://www.gentlesec...m/download.html Hijackthis - http://www.softpedia...ijackThis.shtml IObit Security 360 - http://www.iobit.com/beta.html Malwarebytes' -

The Outer Edge

The Outer Edge, August 2012, Vol. 26, No. 1, Whole No. 303, ISSN 1055-4399. The Friendly Computer Club Helping Make Computers Friendly. On the Web at www.cipcug.org.

Attendance at the July general meeting: 44 members and guests.

To Contact CIPCUG: The Outer Edge: 805-485-7121. General Information: 805-289-3960. Mailing Address: P.O. Box 51354, Oxnard, CA 93031-1354. On the Web: cipcug.org. On Facebook: Facebook.com/groups/cipcug.

Costs might force changes in TOE: The time has come to do some serious thinking about our newsletter, The Outer Edge.

COMMAND.COM, by Jim Brown, President. “Promoting the Harmony of Computer Education, and Camaraderie”. It is true: “Time Marches On,” and we are going to celebrate it. At our September regular meeting (Sept. 22, 2012), we will celebrate our computer club’s 26th birthday. The planning for the celebration is ongoing, and we plan to have fun with a look to the past, reconnecting with longtime members, especially those who were instrumental in the establishment of this club, and honoring their dedication and sacrifices. Following the meeting we plan to have a social lunch at the nearby Texas Cattle Company restaurant, which has a moderately priced ($10-$15) menu, which we all can afford. The planning committee does have a few requests for our membership. Please sign up for the luncheon. We just need to know how many people plan to attend (we encourage you to bring your significant other) so that we can give an approximate count to …

D:\My Documents\My Godaddy Website\Pdfs\Word

UTILITY PROGRAMS Jim McKnight www.jimopi.net Utilities1.lwp revised 10-30-2016 My favorite download site for common utilities is www.ninite.com. Always go there first to see if what you want is available there. WARNING: These days, pretty much every available free utility tries to automatically install Potentially Unsafe Programs (PUPs) during the install process of the desired utility. To prevent this, you must be very careful during the install to uncheck any boxes for unwanted programs (like Chrome, Ask Toolbar, Conduit Toolbar, Yahoo Toolbar, etc.). Also, when asked to choose a “Custom” or “Express” install, ALWAYS choose “Custom”, because “Express” will install unwanted programs without even asking you. Lastly, there are also sneaky windows that require you to “Decline” an offer to prevent an unwanted program from installing. After clicking “I Decline”, the program install will continue. This is particularly sneaky. Always read carefully before clicking Next. Both download.com (aka download.cnet.com) and sourceforge.net have turned to the dark side. In other words, don’t trust ANY download website to be PUP or crapware free. CONTENTS: GENERAL TOOLS & UTILITIES; HARD-DRIVE & DATA RECOVERY UTILITIES; MISC PROGRAM TIPS & NOTES - listed by Category; ADDITIONAL PROGRAM TIPS & NOTES - listed by Program Name: ADOBE READER, BELARC ADVISOR, CCLEANER Setup & Use, CODESTUFF STARTER, FAB’s AUTOBACKUP, MOZBACKUP, REVO Uninstaller, SANDBOXIE, SECUNIA Personal Software Inspector, SPEEDFAN, SPINRITE, SYNCBACK, UBCD 4 WINDOWS Tips. GENERAL TOOLS & UTILITIES (Mostly Free) (BOLD = Favorites): ANTI-MALWARE UTILITIES: (See my ANTI~MALWARE TOOLS & TIPS). ABR (Activation Backup & Restore) http://directedge.us/content/abr-activation-backup-and-restore BART PE (Bootable Windows Environment) http://www.snapfiles.com/get/bartpe.html BOOTABLE REPAIR CD's.

50 Essential Open Source Tools for Emerging Enterprises

Software of the Month: 50 ESSENTIAL OPEN SOURCE TOOLS FOR EMERGING ENTERPRISES AND WEB DEVELOPERS. Set up a single master backup web server, do network troubleshooting and analysis, email archiving, deploy security solutions around networks, manage projects, conduct web-based bug tracking and more… Compiled by Sonam Yadav.

15 SECURITY AND BACKUP TOOLS FOR WINDOWS ADMINS

1. WIRESHARK: Wireshark is used for network troubleshooting, analysis, software and communications protocol development, and education. Wireshark is cross-platform, using the Qt widget toolkit in current releases to implement its user interface and pcap to capture packets; it runs on GNU/Linux, OS X, BSD, Solaris, some other Unix-like operating systems, and Microsoft Windows.
2. AMANDA: AMANDA, the Advanced Maryland Automatic Network Disk Archiver, is a backup solution that allows the IT administrator to set up a single master backup server to back up multiple hosts over the network to tape drives/changers, disks, or optical media. Amanda uses native utilities and formats (e.g. dump and/or GNU tar) and can back up a large number of servers and workstations running multiple versions of Linux or Unix. Amanda is a fully integrated business system which contains everything from customer details, offer, order, …
3. MAILARCHIVA: MailArchiva is a powerful, full-featured email archiving and compliance solution for mail systems such as Microsoft Exchange. It stores all incoming, outgoing and internal emails for long-term storage. MailArchiva is dual-licensed e-mail archiving software, with a free Open Source Edition and a paid Enterprise Edition. It works with various operating systems and mail server environments and, being multilingual, is used in many countries and regions.

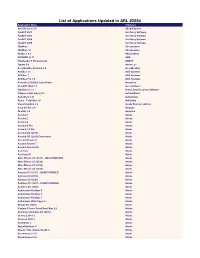

List of Applications Updated in ARL #2554

| Application Name | Publisher |
| --- | --- |
| SyncBackLite 8.6 | 2BrightSparks |
| TaxACT 2001 | 2nd Story Software |
| TaxACT 2003 | 2nd Story Software |
| TaxACT 2004 | 2nd Story Software |
| TaxACT 2005 | 2nd Story Software |
| 3DxWare | 3Dconnexion |
| 3DxWare 10 | 3Dconnexion |
| HotDocs 6.3 | AbacusNext |
| PROMOD IV 11 | ABB |
| FineReader 6 Professional | ABBYY |
| Typora 0.9 | Abner Lee |
| AccuWeather Desktop 1.8 | AccuWeather |
| ACDSee 3.1 | ACD Systems |
| ACDSee 7 | ACD Systems |
| ACDSee Pro 7.0 | ACD Systems |
| Acoustica CD/DVD Label Maker | Acoustica |
| CutePDF Writer 3 | Acro Software |
| ISO Burner 1.1 | Active Data Recovery Software |
| FlySpeed SQL Query 3.3 | ActiveDBSoft |
| ActivePerl 5.16 | ActiveState |
| Rome - Total War 1.0 | Activision |
| Visual Lighting 2.4 | Acuity Brands Lighting |
| Easy-CD Pro 2.0 | Adaptec |
| OneSite 5.6 | Adaptiva |
| Acrobat 1 | Adobe |
| Acrobat 2 | Adobe |
| Acrobat 4 | Adobe |
| Acrobat 6 Pro | Adobe |
| Acrobat 7.0 Pro | Adobe |
| Acrobat DC (2019) | Adobe |
| Acrobat DC (2020) Continuous | Adobe |
| Acrobat Reader 6 | Adobe |
| Acrobat Reader 7 | Adobe |
| Acrobat Reader DC | Adobe |
| AcroTray | Adobe |
| AcroTray XI | Adobe |
| After Effects CC (2015) - UNAUTHORIZED | Adobe |
| After Effects CC (2018) | Adobe |
| After Effects CC (2019) | Adobe |
| After Effects CC (2020) | Adobe |
| Animate CC (2017) - UNAUTHORIZED | Adobe |
| Animate CC (2019) | Adobe |
| Animate CC (2020) | Adobe |
| Audition CC (2015) - UNAUTHORIZED | Adobe |
| Audition CC (2020) | Adobe |
| Authorware Runtime 4 | Adobe |
| Authorware Runtime 5 | Adobe |
| Authorware Runtime 7 | Adobe |
| Authorware Web Player 6 | Adobe |
| Bridge CC (2020) | Adobe |
| Capture Classic FormFlow Filler 2.2 | Adobe |
| Character Animator CC (2020) | Adobe |
| Connect 2019.3 | Adobe |
| Connect 2020.1 | Adobe |
| Contribute … | |

Defragment Software Freeware

Reviews of the best free disk defragmenter programs for Windows. Defrag software programs are tools that arrange the bits of data that make up the files on your computer so they're stored closer together. Piriform's Defraggler tool is easily the best free defrag software program. A Full Review of Defraggler · Auslogics Disk Defrag · Smart Defrag. Disk Defrag Free is a product of Auslogics, a certified Microsoft® Gold Application Developer. The solution: at the click of a button, Auslogics Disk Defrag Free will quickly defragment files on your hard drive, optimize file placement and consolidate free space to ensure the highest … Disk Defrag Pro · Version History · Disk-defrag-pro/compare. Defraggler - Defragment and optimize hard disks and individual files for more … Softpedia; Clean Download; www.doorway.ru; Chip Online; Software Informer; PC. Looking for a defragmentation software download from a trusted, free source? Then visit FileHippo today. Our software programs are from official sources. Auslogics Disk Defrag · Defraggler · Deutsch · Français. Top 5 best free defrag, defragmenters or defragmentation software for Windows 10/8/7. Download these freeware defragmentation tools here. Defrag your Windows disks with Smart Defrag freeware, your first choice for defragging Windows 10, 8, 7, XP and Vista. Download the free disk defragmenter now! Formerly JKDefrag, MyDefrag is a disk defragmentation tool that's easy to use and difficult to master. The app is simple enough that you can fire … UltraDefrag is an open source disk defragmenter for Windows. Get UltraDefrag at www.doorway.ru. Fast, secure and free open source software downloads.

Troubleshooting Computers

There are basic rules for troubleshooting a problem, whether it involves a hard drive or anything else.

1. Identify the problem (gather information from the customer to identify the nature of the problem).
   o Can the customer show you the problem?
   o How often does the problem occur?
   o Has any new hardware or software been installed recently?
   o Does the problem occur in a specific application?
2. Isolate the problem.
3. Correct the problem.
4. Verify the corrective action taken.
5. Document your steps. This is useful for two reasons: you or others can refer back to the notes on the next service call for the same equipment, and you may not be the only one servicing the computer, so the next person will need to know what steps you have taken.
6. Follow up: contact the customer after they take back the equipment and see if they are problem-free.

*** Some problems are hard to duplicate, especially intermittent hardware or software problems. Refer to the customer's information on exactly what error message or problem occurred, which applications the customer was using, how many applications were open, etc. Sometimes the computer works fine in the shop, but when the customer takes it back, the problem returns. This is always frustrating to the customer and the technician. Could an externally connected peripheral that the customer has have caused the problem? Communicate with the customer as often as needed.

Overheat issues? *** If the laptop or desktop overheats at the customer site but not in the shop, ask whether the computer is kept in an area where the air vents could be blocked.