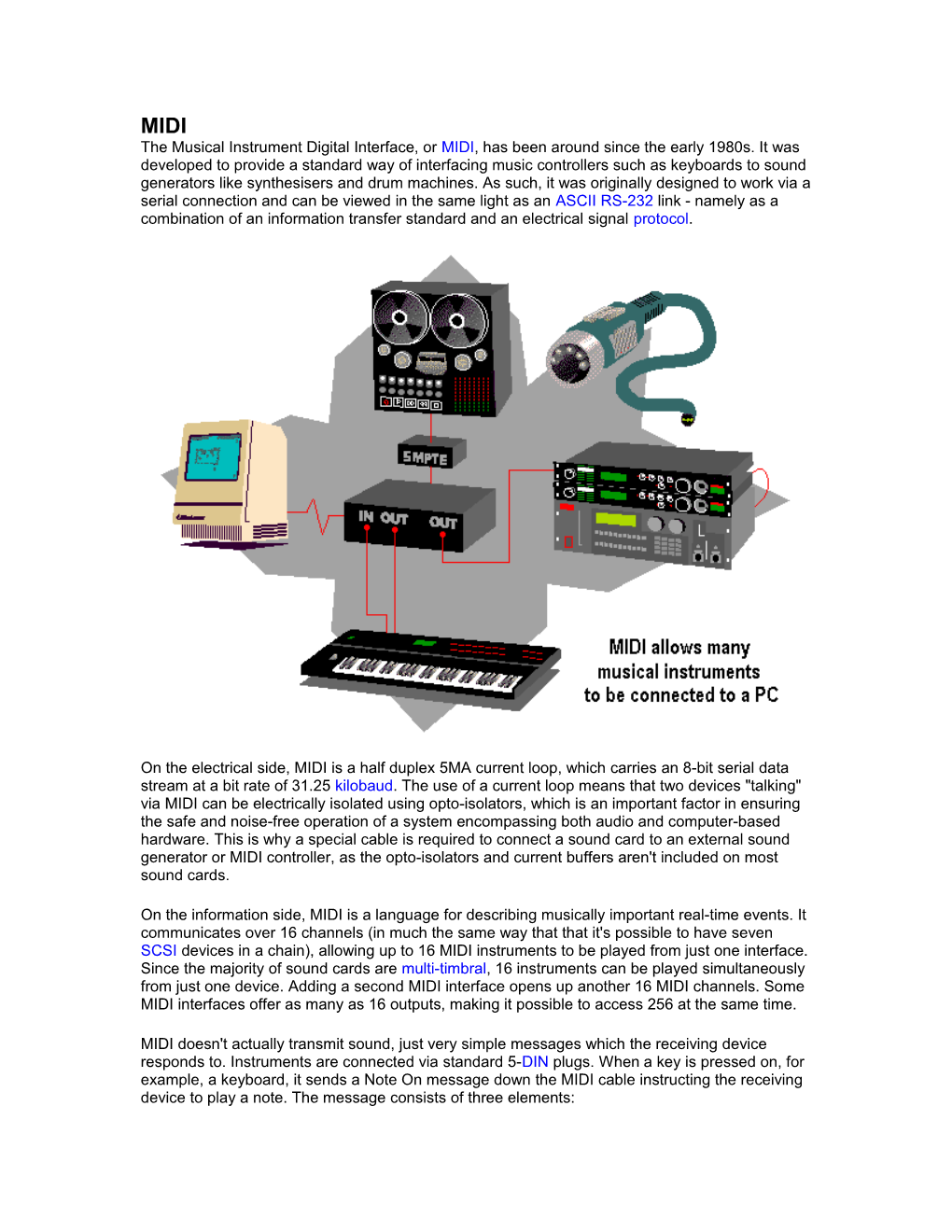

MIDI

The Musical Instrument Digital Interface, or MIDI, has been around since the early 1980s. It was developed to provide a standard way of connecting music controllers such as keyboards to sound generators such as synthesisers and drum machines. It was originally designed to work over a serial connection. It can therefore be viewed in the same light as an RS-232 link carrying ASCII text: as a combination of an information transfer standard and an electrical signal protocol.

On the electrical side, MIDI is a unidirectional 5 mA current loop. Each cable carries data one way only, so two-way communication needs two cables. It transmits an asynchronous serial stream of 8-bit bytes at 31.25 kilobaud. The use of a current loop means that two devices "talking" via MIDI can be electrically isolated using opto-isolators. This is an important factor in ensuring safe and noise-free operation in a system that combines audio and computer-based hardware. It is also why a special cable is required to connect a sound card to an external sound generator or MIDI controller: most sound cards don't include the opto-isolators and current buffers.
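The bit rate above determines how long each message takes on the wire. The sketch below assumes standard asynchronous framing: one start bit, eight data bits and one stop bit, giving ten bits per byte. The names `BIT_RATE` and `transmission_time_us` are illustrative and do not come from any MIDI library.

```python
# MIDI serial framing: each byte is sent as 1 start bit + 8 data bits + 1 stop bit.
BIT_RATE = 31_250      # bits per second (31.25 kilobaud)
BITS_PER_BYTE = 10     # start + 8 data + stop

def transmission_time_us(num_bytes: int) -> float:
    """Time in microseconds needed to clock num_bytes onto a MIDI cable."""
    return num_bytes * BITS_PER_BYTE * 1_000_000 / BIT_RATE

bytes_per_second = BIT_RATE // BITS_PER_BYTE

print(bytes_per_second)          # 3125
print(transmission_time_us(1))   # 320.0
print(transmission_time_us(3))   # 960.0 (one three-byte Note On message)
```

At 320 µs per byte, a ten-note chord sent as ten separate three-byte messages takes about 9.6 ms to leave the cable.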

On the information side, MIDI is a language for describing musically important real-time events. It communicates over 16 channels (in much the same way that it's possible to have seven SCSI devices in a chain), so up to 16 MIDI instruments can be played from a single interface. Since most sound cards are multi-timbral, a single card can play 16 instruments simultaneously. Adding a second MIDI interface opens up another 16 MIDI channels. Some MIDI interfaces offer as many as 16 outputs, which makes it possible to address 256 channels at the same time.
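Each output of a multi-port interface carries its own independent set of 16 channels, so capacity grows with the number of outputs. The following minimal sketch shows how a (port, channel) pair could be mapped onto the 256 channels of a 16-output interface. It assumes a hypothetical scheme in which ports and channels are both numbered from 1, and the function name is illustrative.

```python
CHANNELS_PER_PORT = 16

def flat_channel(port: int, channel: int) -> int:
    """Map a 1-based (port, channel) pair to a 0-based index across all ports.

    'port' is a hypothetical label for one output of a multi-port interface.
    """
    if not 1 <= channel <= CHANNELS_PER_PORT:
        raise ValueError(f"MIDI channels run from 1 to 16, got {channel}")
    if port < 1:
        raise ValueError(f"ports are numbered from 1, got {port}")
    return (port - 1) * CHANNELS_PER_PORT + (channel - 1)

ports = 16
print(ports * CHANNELS_PER_PORT)  # 256 addressable channels
print(flat_channel(1, 1))         # 0
print(flat_channel(16, 16))       # 255
```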

MIDI doesn't actually transmit sound, just very simple messages to which the receiving device responds. Instruments are connected via standard 5-pin DIN plugs. When a key is pressed on a keyboard, for example, the keyboard sends a Note On message down the MIDI cable instructing the receiving device to play a note. The message consists of three elements: a Status Byte, a Note Number and a Velocity Value.

The Status Byte contains the event type (in this case a Note On) and the channel it is sent on (1-16). The Note Number identifies the key that was pressed, say middle C, and the Velocity Value indicates how hard the key was struck. The receiving device plays the note until it receives a Note Off message for the same channel and note number.
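The three elements map directly onto three bytes. The status byte packs the event type into its upper four bits and the channel into its lower four bits. Channels 1-16 are sent as 0-15. The two data bytes are limited to 7 bits (0-127). In this minimal sketch, note number 60 is used for middle C, which is the usual convention. The function names are illustrative rather than taken from a particular library.

```python
# Status bytes have the top bit set (0x80-0xFF); data bytes are 7-bit (0-127).
NOTE_OFF = 0x80  # upper nibble 0x8 = Note Off
NOTE_ON = 0x90   # upper nibble 0x9 = Note On
MIDDLE_C = 60    # note number 60 is conventionally middle C

def _status(kind: int, channel: int) -> int:
    """Combine the event type (upper nibble) with the channel (lower nibble)."""
    if not 1 <= channel <= 16:
        raise ValueError(f"channel must be 1-16, got {channel}")
    return kind | (channel - 1)  # channels 1-16 are encoded as 0-15

def _data(value: int, name: str) -> int:
    """Check that a data byte fits in 7 bits."""
    if not 0 <= value <= 127:
        raise ValueError(f"{name} must be 0-127, got {value}")
    return value

def note_on(channel: int, note: int, velocity: int) -> bytes:
    return bytes([_status(NOTE_ON, channel), _data(note, "note"), _data(velocity, "velocity")])

def note_off(channel: int, note: int, velocity: int = 64) -> bytes:
    # 64 is the customary release velocity for keyboards that don't sense it.
    return bytes([_status(NOTE_OFF, channel), _data(note, "note"), _data(velocity, "velocity")])

print(note_on(1, MIDDLE_C, 100).hex(" "))  # 90 3c 64
print(note_off(1, MIDDLE_C).hex(" "))      # 80 3c 40
```

Many devices send a Note On with velocity 0 in place of a Note Off, and receivers treat the two the same way.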

Depending on what sound is being played, synthesisers will respond differently to velocity. A piano sound, for example, will get louder as the key is struck more firmly. Tonal qualities also change. Professional synthesisers often introduce extra timbres to imitate the sound of the hammers striking the strings.

Continuous Controllers (CCs) are used to control settings such as volume, effects levels and pan (the positioning of sound across a stereo field). Many MIDI devices make it possible to assign internal parameters to a CC, and there are 128 controller numbers to choose from. The MMA (MIDI Manufacturers Association) also developed a specification for synthesisers known as General MIDI. Among other things, it defines how compliant devices respond to a core set of these controllers.
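A CC is sent as a three-byte Control Change message: a status byte (0xB0 plus the channel), a controller number and a 7-bit value. The sketch below uses controllers 7 (Channel Volume), 10 (Pan) and 91 (Effects 1 Depth, commonly used for reverb), which are defined by the MIDI specification. The function name is illustrative. Controller numbers 120 to 127 are reserved for Channel Mode messages, so not all 128 are free for general assignment.

```python
CONTROL_CHANGE = 0xB0  # upper nibble 0xB = Control Change

# A few controller numbers defined by the MIDI specification.
CC_VOLUME = 7    # Channel Volume
CC_PAN = 10      # 0 = hard left, 64 = centre, 127 = hard right
CC_REVERB = 91   # Effects 1 Depth, conventionally used as the reverb send level

def control_change(channel: int, controller: int, value: int) -> bytes:
    """Build a three-byte Control Change (channel 1-16, controller and value 0-127)."""
    if not 1 <= channel <= 16:
        raise ValueError(f"channel must be 1-16, got {channel}")
    for name, v in (("controller", controller), ("value", value)):
        if not 0 <= v <= 127:
            raise ValueError(f"{name} must be 0-127, got {v}")
    return bytes([CONTROL_CHANGE | (channel - 1), controller, value])

print(control_change(1, CC_VOLUME, 100).hex(" "))  # b0 07 64
print(control_change(1, CC_PAN, 64).hex(" "))      # b0 0a 40
```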

The first MIDI application was to allow keyboard players to "layer" the sounds produced by several synthesisers. Today, though, it is used mainly for sequencing.

Essentially, a sequencer is a digital tape recorder that records and plays MIDI messages rather than audio signals. The first sequencers had very little memory, which limited the amount of information they could store: most could hold only one or two thousand events. As sequencers became more advanced, so did MIDI implementations. Not content with just playing notes over MIDI, manufacturers developed ways to control individual sound parameters and onboard digital effects using Continuous Controllers. Most sequencers today are PC-based applications that can adjust these parameters using graphical sliders. Most also have an extensive array of features for editing and fine-tuning performances, so it's not necessary to be an expert keyboard player to produce good music.
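To make the tape-recorder analogy concrete, here is a toy sequencer. It stores MIDI messages against a time position and can quantise them to a grid, which is a common editing feature that tidies up imprecise playing. It then plays them back in time order. The resolution of 96 ticks per quarter note, the 120 BPM tempo and all names are assumptions for illustration. A real sequencer would also wait until each event's time and send the bytes to a MIDI port.

```python
from dataclasses import dataclass, field

@dataclass(order=True)
class Event:
    tick: int                              # position in sequencer ticks
    message: bytes = field(compare=False)  # raw MIDI bytes; not used for ordering

class Sequencer:
    """Toy sequencer: records timestamped MIDI messages and plays them back in order."""

    def __init__(self, ticks_per_quarter: int = 96, bpm: float = 120.0) -> None:
        self.ticks_per_quarter = ticks_per_quarter
        self.bpm = bpm
        self.events: list[Event] = []

    def record(self, tick: int, message: bytes) -> None:
        self.events.append(Event(tick, message))

    def quantise(self, grid: int) -> None:
        """Move every event to the nearest multiple of `grid` ticks."""
        self.events = [Event(round(e.tick / grid) * grid, e.message) for e in self.events]

    def tick_to_seconds(self, tick: int) -> float:
        return tick * (60.0 / self.bpm) / self.ticks_per_quarter

    def playback(self) -> list[tuple[float, bytes]]:
        """Return (time in seconds, message) pairs sorted by time."""
        return [(self.tick_to_seconds(e.tick), e.message) for e in sorted(self.events)]

seq = Sequencer()
seq.record(95, bytes([0x80, 60, 64]))   # Note Off for middle C, released slightly early
seq.record(50, bytes([0x90, 60, 100]))  # Note On for middle C, played slightly late
seq.quantise(48)                        # snap to eighth notes (48 ticks at 96 per quarter)
print([t for t, _ in seq.playback()])   # [0.25, 0.5]
```

Because only the event list is stored, edits such as quantising rewrite timestamps without touching any audio.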

MIDI hasn't just affected the way musicians and programmers work; it has also changed the way lighting and sound engineers work. Because almost any electronic device can be made to respond to MIDI in some way, the automation of mixing desks and lighting equipment has evolved around it. MIDI has also been widely adopted by theatrical lighting companies as a convenient way of controlling light shows and projection systems. When MIDI is used with a sequencer, every action on a recording desk can be recorded, edited and synchronised to music or film. MIDI also gives multimedia authors an economical way to deliver high-quality audio to the listener. The alternative is to deliver the music as sampled audio, but at around 10 MB per minute a 40 GB hard disk would soon become a necessity. MIDI data requires only a fraction of this.
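The 10 MB per minute figure follows from CD-quality audio, assumed here to mean 44.1 kHz, 16-bit stereo. For comparison, even a MIDI cable running flat out carries only 3125 bytes per second (ten bits per byte at 31.25 kilobaud). Stored MIDI data grows only when events actually occur. The constants below are assumptions chosen to match those figures.

```python
# Assumed sampled-audio format: CD quality (44.1 kHz, 16-bit, stereo).
SAMPLE_RATE = 44_100
BYTES_PER_SAMPLE = 2
AUDIO_CHANNELS = 2

audio_bytes_per_min = SAMPLE_RATE * BYTES_PER_SAMPLE * AUDIO_CHANNELS * 60

# Ceiling for MIDI: 31,250 bits/s with 10 bits per byte (start + 8 data + stop).
midi_max_bytes_per_min = 31_250 // 10 * 60

print(audio_bytes_per_min)                                  # 10584000 (about 10 MB)
print(midi_max_bytes_per_min)                               # 187500
print(round(audio_bytes_per_min / midi_max_bytes_per_min))  # 56
```

Even at its theoretical maximum, then, MIDI uses less than a fiftieth of the space of CD-quality audio.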

General MIDI

In September 1991 the MIDI Manufacturers Association (MMA) and the Japan MIDI Standards Committee (JMSC) marked the beginning of a new era in MIDI technology by adopting the "General MIDI System Level 1", referred to as GM or GM1. The specification is designed to provide a minimum level of performance compatibility among MIDI instruments, and it has helped pave the way for MIDI in the growing consumer and multimedia markets.

The specification imposes a number of requirements on compliant sound-generating devices (keyboard, sound module, sound card, IC, software program or other product), including that:

- a minimum of either 24 fully dynamically allocated voices are available simultaneously for both melodic and percussive sounds, or 16 dynamically allocated voices are available for melody plus 8 for percussion;
- all 16 MIDI channels are supported, each capable of playing a variable number of voices (polyphony) and a different instrument (sound/patch/timbre);
- a minimum of 16 simultaneous and different timbres playing various instruments are supported;
- a minimum of 128 preset instruments (MIDI program numbers) conforming to the GM1 Instrument Patch Map are supported, along with 47 percussion sounds conforming to the GM1 Percussion Key Map.
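Two details of the GM1 percussion requirement are easy to show in code. Percussion is played on MIDI channel 10. The 47 sounds of the Percussion Key Map occupy note numbers 35 to 81, and each key selects a drum sound rather than a pitch. The values below are taken from GM1. The function name and the choice of named keys are illustrative.

```python
NOTE_ON = 0x90
GM_PERCUSSION_CHANNEL = 10               # GM1 reserves channel 10 for percussion
FIRST_DRUM_KEY, LAST_DRUM_KEY = 35, 81   # GM1 Percussion Key Map range

# A few entries from the GM1 Percussion Key Map.
ACOUSTIC_BASS_DRUM = 35
ACOUSTIC_SNARE = 38
CLOSED_HI_HAT = 42

def drum_hit(key: int, velocity: int = 100) -> bytes:
    """Build a Note On that plays one GM1 percussion sound on channel 10."""
    if not FIRST_DRUM_KEY <= key <= LAST_DRUM_KEY:
        raise ValueError(f"key {key} is outside the GM1 Percussion Key Map")
    if not 0 <= velocity <= 127:
        raise ValueError(f"velocity must be 0-127, got {velocity}")
    return bytes([NOTE_ON | (GM_PERCUSSION_CHANNEL - 1), key, velocity])

print(LAST_DRUM_KEY - FIRST_DRUM_KEY + 1)  # 47 percussion sounds
print(drum_hit(ACOUSTIC_SNARE).hex(" "))   # 99 26 64
```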

When MIDI first evolved, it allowed musicians to piece together musical arrangements using whatever MIDI instruments they had. When the files were played on other synthesisers, however, there was no guarantee that they would sound the same. Different manufacturers might have assigned instruments to different program numbers, so a piano on the original synthesiser might play back as a trumpet on another. General MIDI compliant modules now allow music to be produced and played back with the same instrument assignments regardless of manufacturer or product.
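General MIDI fixes which instrument belongs to each program number. A sequence selects an instrument with a two-byte Program Change message: a status byte (0xC0 plus the channel) followed by the program. GM1 lists programs as 1 to 128, while the byte actually sent is 0 to 127. In that list, program 1 is Acoustic Grand Piano and program 57 is Trumpet. The function name is illustrative.

```python
PROGRAM_CHANGE = 0xC0  # upper nibble 0xC = Program Change

# GM1 Instrument Patch Map numbers, as printed in the specification (1-128).
GM_ACOUSTIC_GRAND_PIANO = 1
GM_TRUMPET = 57

def program_change(channel: int, gm_program: int) -> bytes:
    """Build a two-byte Program Change, converting the 1-128 GM number to 0-127."""
    if not 1 <= channel <= 16:
        raise ValueError(f"channel must be 1-16, got {channel}")
    if not 1 <= gm_program <= 128:
        raise ValueError(f"GM program must be 1-128, got {gm_program}")
    return bytes([PROGRAM_CHANGE | (channel - 1), gm_program - 1])

print(program_change(1, GM_ACOUSTIC_GRAND_PIANO).hex(" "))  # c0 00
print(program_change(2, GM_TRUMPET).hex(" "))               # c1 38
```

On a GM-compliant module, the second message selects a trumpet sound on channel 2 whoever made the module. That is the guarantee that was missing before GM.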