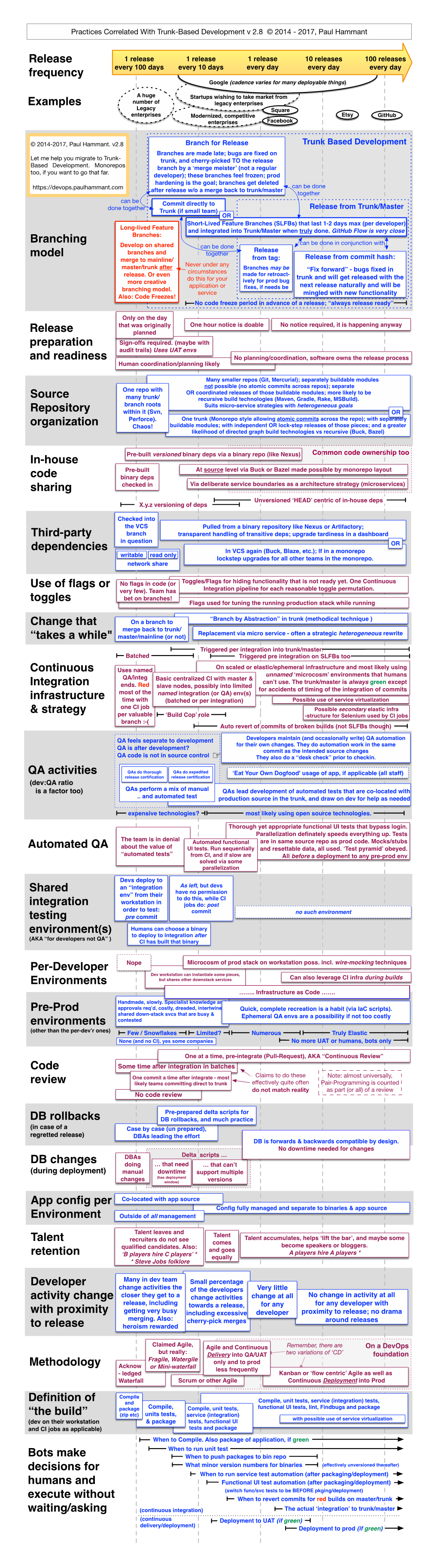

Branching Model Use of Flags Or Toggles In-House Code Sharing

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


TortoiseMerge: A Diff/Merge Tool for Windows, Version 1.11

TortoiseMerge: A diff/merge tool for Windows, Version 1.11, by Stefan Küng, Lübbe Onken, and Simon Large. Publication date 2018/09/22 18:28:22 (r28377). Table of Contents: Preface (1. TortoiseMerge is free!; 2. Acknowledgments); 1. Introduction (1.1. Overview; 1.2. TortoiseMerge's History); 2. Basic Concepts (2.1. Viewing and Merging Differences; 2.2. Editing Conflicts; 2.3. Applying Patches) -

A Dynamic Software Configuration Management System

A DYNAMIC SOFTWARE CONFIGURATION MANAGEMENT SYSTEM. A THESIS SUBMITTED TO THE GRADUATE SCHOOL OF MIDDLE EAST TECHNICAL UNIVERSITY BY FATMA GÜLŞAH KANDEMİR IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTER OF SCIENCE IN COMPUTER ENGINEERING, SEPTEMBER 2012. Approval of the thesis: A DYNAMIC SOFTWARE CONFIGURATION MANAGEMENT SYSTEM, submitted by FATMA GÜLŞAH KANDEMİR in partial fulfillment of the requirements for the degree of Master of Science in Computer Engineering Department, Middle East Technical University by: Prof. Dr. Canan Özgen, Dean, Graduate School of Natural and Applied Sciences; Prof. Dr. Adnan Yazıcı, Head of Department, Computer Engineering; Assoc. Prof. Ali Hikmet Doğru, Supervisor, Computer Engineering Dept., METU; Dr. Cengiz Erbaş, Co-supervisor, ASELSAN. Examining Committee Members: Assoc. Prof. Ahmet Coşar, Computer Engineering Dept., METU; Assoc. Prof. Ali Hikmet Doğru, Computer Engineering Dept., METU; Dr. Cengiz Erbaş, ASELSAN; Assoc. Prof. Pınar Şenkul, Computer Engineering Dept., METU; Assoc. Prof. Halit Oğuztüzün, Computer Engineering Dept., METU. Date: I hereby declare that all information in this document has been obtained and presented in accordance with academic rules and ethical conduct. I also declare that, as required by these rules and conduct, I have fully cited and referenced all material and results that are not original to this work. Name, Last Name: FATMA GÜLŞAH KANDEMİR. Signature: ABSTRACT. A DYNAMIC SOFTWARE CONFIGURATION MANAGEMENT SYSTEM. Kandemir, Fatma Gülşah. M.S., Department of Computer Engineering. Supervisor: Assoc. Prof. Ali Hikmet Doğru. Co-Supervisor: Dr. Cengiz Erbaş. September 2012, 70 pages. Each software project requires a specialized management to handle software development activities throughout the project life cycle successfully and efficiently. -

Software Development Process Improvements - Case QPR Software Plc

Software development process improvements - Case QPR Software Plc. Lidia Zalevskaya. Master’s Thesis, Degree Programme in Information Systems Management, 2019. Abstract. Date: 2019.11.24. Author(s): Lidia Zalevskaya. Degree programme: Information Systems Management, Master’s Degree. Thesis title: Software development process improvements - Case QPR Software Plc. Number of pages and appendix pages: 98 + 26. Initially this study was planned as an effort to improve on a software development process within an existing team using an existing product code and systems. However, the situation changed and a new team (DevApps team) was established and given a new project, which created an opportunity to build a new type of team, product, process, and tools pipeline from scratch utilizing the improvement ideas. An Action Research framework was adopted as the theoretical approach for the study, while the Scrum methodology served as a framework for the development practices. The study began by summarizing previously identified problems in the software development process at QPR Software Plc and formulating improvement ideas focused on the coding workflow and Scrum practices. These were then tested in practice by the new DevApps scrum team. The research analysis centres on the process of choosing and setting up the new team’s development tools, figuring out ways of working, and implementing several iterations to find the best suitable development process. The most valuable empirical outcomes were the creation of a branching strategy and Git workflow for the DevApps team, the team members’ practical experience of working with Git and with the Azure DevOps developer services. A key outcome was the shift in many verification activities to earlier phases. -

Create a Pull Request in Bitbucket

Create A Pull Request In Bitbucket. Let your team see their branches, commit messages, and pull requests in context with their Jira issues. You can also use the Commits tab at the top of a pull request to see which commits are included, which is helpful for reviewing big pull requests. Keep your team up to speed with things like when a pull request got approved, when the build finished, and much more. Learn the basics of submitting a pull request, merging, and more. Now we are ready to send a pull request from our branch. Workzone can merge the pull request automatically when all or a percentage of reviewers have approved and/or on successful build results. To set up the webhook and other integration parameters, you need to set some options in Collaborator and in Bitbucket. Go ahead and add a quote of your choosing. If you delete your fork after you make a pull request, the receiver can still decline your request, but the repository to pull from is gone. Many teams use Jira as the final source of truth for project management. -

Simple Version Control of SAS Programs and SAS Data Sets Magnus Mengelbier, Limelogic Ltd, United Kingdom

Simple Version Control of SAS Programs and SAS Data Sets. Magnus Mengelbier, Limelogic Ltd, United Kingdom. ABSTRACT: SAS data sets and programs that reside on the local network are most often stored using a simple file system with no capability of version control, audit trail of changes and all the benefits. We consider the possibility to capitalise on the capabilities of Subversion and other simple straightforward conventions to provide version control and an audit trail for SAS data sets, standard macro libraries and programs without changing the SAS environment. INTRODUCTION: Most organisations will use the benefits of a local network drive, a mounted share or a dedicated SAS server file system to store and archive study data in multiple formats, analytical programs and their respective logs, outputs and deliverables. A manual process is most often implemented to retain versions and snapshots of data, programs and deliverables with varying degrees of success most often. Although not perfect, the process is sufficient to a degree. SUBVERSION AND LIFE SCIENCES: Subversion can fit very well within Life Sciences and with a tweak here and there, the version and revision control can be a foundation for a standard and compliant analytics environment and process. TRUNK – BRANCHES – TAGS OR DEV – QC – PROD: The approach with trunk, branches and tags can also be used within reporting clinical trials, if outputs are standardized for a specific study and used in multiple reporting events. trunk: Pre-lock data and programs for reporting purposes. branch: Deliverables for a specific reporting event such as Investigator Brochure (IB), Investigational New Drug (IND), Clinical Study Reports (CSRs), etc. tag: Dry run, Database Lock, Draft Outputs, Final Outputs. -

Trunk Based Development

Trunk Based Development. Tony Bjerstedt. What are you going to learn? The problem with traditional branching strategies; what is Trunk Based Development; how does this help; how does it work; some tools and techniques. About Me: Family Guy, Photographer, Architect & Developer, Leader. LinkedIn: https://www.linkedin.com/in/tbjerstedt Email: [email protected] Twitter: @tbjerstedt. LinkedIn Code. Kawishiwi Falls, Ely, MN. Branches. Why Branch: to separate development from support; maintain releases separately; release no code before its time. “Continuous Integration is a software development practice where members of a team integrate their work frequently, usually each person integrates at least daily - leading to multiple integrations per day. Each integration is verified by an automated build (including test) to detect integration errors as quickly as possible. Many teams find that this approach leads to significantly reduced integration problems and allows a team to develop cohesive software more rapidly.” – Martin Fowler (Blog article, 2006) https://www.martinfowler.com/articles/continuousIntegration.html Branch Chaos: long-lived branches, never-ending sprints, code freezes, merge day hell. Approaches to Branching: Microsoft Recommended Standard Branch Plan https://www.infoq.com/news/2012/04/Branching-Guide/; Microsoft Recommended Code Promotion Plan https://www.infoq.com/news/2012/04/Branching-Guide/; Gitflow https://fpy.cz/pub/slides/git-workshop/#/step-1; Trunk Based Development https://www.gocd.org/2018/05/30/ci-microservices-feature-toggles-trunk-based-development/ -

Rationale-Based Unified Software Engineering Model

INSTITUT FÜR INFORMATIK DER TECHNISCHEN UNIVERSITÄT MÜNCHEN Forschungs- und Lehreinheit I Angewandte Softwaretechnik Rationale-based Unified Software Engineering Model Timo Wolf Vollständiger Abdruck der von der Fakultät für Informatik der Technischen Universität München zur Erlangung des akademischen Grades eines Doktors der Naturwissenschaften (Dr. rer. nat.) genehmigten Dissertation. Vorsitzender: Univ.-Prof. Dr. Uwe Baumgarten Prüfer der Dissertation: 1. Univ.-Prof. Bernd Bruegge, Ph.D. 2. Univ.-Prof. Dr. Barbara Paech, Ruprecht-Karls Universität Heidelberg Die Dissertation wurde am 11.05.07 bei der Technischen Universität München eingereicht und durch die Fakultät für Informatik am 10.07.07 angenommen. To Eva and Moritz. – T.W. Acknowledgements I want to thank Prof. Bernd Brügge, Ph.D. for his great support, recommen- dations, and visions. This dissertation would not have been possible without him. I thank Prof. Dr. Barbara Paech for the long-term research collaboration. I am grateful to Allen H. Dutoit, Ph.D. who coached me during my research. I am thankful to all my colleagues from the Chair of Applied Software Engineering and in particular to Monika Markl, Helma Schneider, and Uta Weber for the orga- nizational support. I want to thank my wife Eva and my son Moritz who saw me rarely while writing this dissertation. Contents Abstract 7 Conventions 9 1 Introduction 11 1.1 Artifact inconsistencies . 11 1.2 Distributed collaboration . 13 1.3 Goal and approach . 14 2 The RUSE Meta-Model 17 2.1 Requirements . 17 2.2 The Meta-Model . 24 2.2.1 The project data model . 25 2.2.2 The configuration management model . -

AN4421 – Using Codewarrior Diff Tool

Freescale Semiconductor. Document Number: AN4421. Application Note: Using the CodeWarrior Diff Tool. Contents: 1. Introduction; 2. Comparing Files; 3. Comparing File Edits; 4. Comparison within Version Control Systems; 5. Merge. 1. Introduction: CodeWarrior for Microcontrollers v10.x leverages the features of the Eclipse framework and thereby provides a sophisticated and visually clear, graphical "diff" tool for comparing differences between revisions of source code files. This document explores the use of the diff tool available in CodeWarrior. 2. Comparing Files: The simplest case to use the CodeWarrior diff tool is to compare any two files in a project. To compare files: 1. Select any two files in the CodeWarrior Project Explorer view. You can use Ctrl-click or Shift-click to select the files. 2. Right-click on one of the files and select Compare With > Each Other from the context menu. © Freescale Semiconductor, Inc., 2012. All rights reserved. Figure 1. Comparison of two files in a project. Notice in the trivial example in Figure 1 above that hello.c and hello.cpp are (faintly) selected. The differences include: • Code outline view, which can also be used to limit the scope of what is displayed in the differences below it. • Compare viewer, or the comparison window at the bottom, which contains the two files side-by-side with the line-oriented differences highlighted by outline and the specific differences with a background highlight. The icons at the top right of the source comparison are tool buttons that are helpful for managing a merge. For more information on managing a merge, refer to the topic Merge in the document. -
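
The line-oriented comparison described in this excerpt can be approximated outside the IDE. The sketch below is not the CodeWarrior or Eclipse implementation; it uses Python's standard `difflib` module as a stand-in, and the contents of `hello.c` and `hello.cpp`, as well as the helper name `line_diff`, are made up for illustration.

```python
import difflib
from typing import List

# Hypothetical file contents; the application note does not show them.
HELLO_C = r"""#include <stdio.h>

int main(void) {
    printf("hello\n");
    return 0;
}
"""

HELLO_CPP = r"""#include <iostream>

int main() {
    std::cout << "hello\n";
    return 0;
}
"""


def line_diff(left: str, right: str, left_name: str, right_name: str) -> List[str]:
    """Return a unified, line-by-line diff of two texts as a list of lines."""
    return list(
        difflib.unified_diff(
            left.splitlines(),
            right.splitlines(),
            fromfile=left_name,
            tofile=right_name,
            lineterm="",  # inputs have no trailing newlines after splitlines()
        )
    )


if __name__ == "__main__":
    for line in line_diff(HELLO_C, HELLO_CPP, "hello.c", "hello.cpp"):
        print(line)
```

Lines prefixed with `-` exist only in the first file and lines prefixed with `+` only in the second; unchanged context lines start with a space. A graphical compare viewer presents the same information side by side.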

Getting Started with Subversion (Migrating from CVS to SVN) Version 1.0 Frank C

Getting Started with SubVersion (Migrating from CVS to SVN), Version 1.0. Frank C. Anderson, Department of Mechanical Engineering, Stanford University, [email protected]. SimTK.org uses SVN (SubVersion, http://subversion.tigris.org) as its source-code versioning system. It stores a revision history for files under version control in a repository and enables potentially large numbers of programmers to work on source code concurrently. It is intended to replace CVS (Concurrent Versions System, http://www.cvshome.org). Some of the key improvements over CVS include: • Repository-wide version numbering. Each time a change is committed to the SVN repository, the revision number of the entire repository is incremented. This is in contrast to CVS, which keeps revisions on a per-file basis. The versioning system used by SVN allows for easier retrieval of self-consistent snapshots of the code. • Directories and renames are versioned. This allows one to change the names of files and directories and reorganize a repository, something that is difficult to do in CVS. • Efficient versioning of binary files. All diffs in SVN are on a binary basis, thus text files and binary files are handled the same. • Branching and Tagging are inexpensive. Branching is the mechanism for making major code revisions without breaking the main development trunk. Tagging is the mechanism for making release snapshots. Both mechanisms use the copy command. In SVN, copies are done through symbolic links, so disk space is needed only for changes that are made in the files. Using SVN is much like using CVS; many of the commands are the same. -
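
The difference between per-file and repository-wide revision numbering can be illustrated with a toy model. This is only a sketch: the class names `PerFileRepo` and `RepoWideRepo` are hypothetical, and neither class reflects how CVS or SVN actually store data.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PerFileRepo:
    """CVS-style toy model: every file carries its own revision counter."""

    revisions: Dict[str, int] = field(default_factory=dict)

    def commit(self, changed_files: List[str]) -> None:
        for name in changed_files:
            self.revisions[name] = self.revisions.get(name, 0) + 1


@dataclass
class RepoWideRepo:
    """SVN-style toy model: one counter for the whole repository."""

    revision: int = 0
    # history[r] maps each file to the revision in which it last changed,
    # as seen at repository revision r. Revision 0 is the empty repository.
    history: List[Dict[str, int]] = field(default_factory=lambda: [{}])

    def commit(self, changed_files: List[str]) -> int:
        self.revision += 1
        snapshot = dict(self.history[-1])
        for name in changed_files:
            snapshot[name] = self.revision
        self.history.append(snapshot)
        return self.revision

    def snapshot(self, revision: int) -> Dict[str, int]:
        """A single number identifies a self-consistent state of every file."""
        return self.history[revision]


if __name__ == "__main__":
    cvs = PerFileRepo()
    cvs.commit(["a.c", "b.c"])
    cvs.commit(["a.c"])
    print(cvs.revisions)      # each file has its own number

    svn = RepoWideRepo()
    svn.commit(["a.c", "b.c"])
    svn.commit(["a.c"])
    print(svn.snapshot(1))    # the whole tree as of revision 1
```

In the per-file model, reconstructing a consistent state means knowing the right revision of every file separately; in the repository-wide model a single revision number is enough, which is the "easier retrieval of self-consistent snapshots" the excerpt refers to.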

Helix Core Vs. Microsoft TFS/TFVC

COMPARISON: Helix Core vs. Microsoft TFS/TFVC. In this document, we will compare Perforce Helix Core to Microsoft Team Foundation Server (TFS). Specifically, we will look at the TFVC (Team Foundation Version Control) functionality. With TFVC, your code is kept on the TFS server, usually on-premises. Developers often work in the popular Microsoft Visual Studio application on their own Windows workstation. They connect to the TFVC server to collaborate with other developers. Changes in the Microsoft World: When TFS version control originally shipped, it was exclusively an on-premises solution – Visual Studio 2005 Team System. Since then, of course, Microsoft has regularly made significant updates. In 2016, Microsoft released a cloud-based service, Visual Studio Team Services (VSTS). This marked a different direction for TFS on-premises customers. More recently, VSTS has been rebranded as Azure DevOps, with a variety of developer tools available on Microsoft’s Azure cloud platform. Although Azure DevOps continues to provide support for TFVC repositories, the Microsoft vision has a new iteration. What’s more important? Winning the hearts and minds of some 31 million+ GitHub users, and keeping them away from the very popular AWS cloud. Because Microsoft’s new Azure and Git strategy is so different from the way TFS and TFVC users work today, teams have a big decision looming. Should they move to the cloud and embrace Microsoft’s DevOps pipelines and other Azure-based technologies? Or should they choose another VCS that will work on-premises and deliver on their DevOps priorities? Microsoft will continue mainstream support of TFS -

Windows and CVS Suite

Windows and CVS Suite INSTALL GUIDE 2009 Build 7799 April 2021 March Hare Software Ltd. Legal Notices: There are various product or company names used herein that are the trademarks, service marks, or trade names of their respective owners, and March Hare Software Limited makes no claim of ownership to, nor intends to imply an endorsement of, such products or companies by their usage. This document and all information contained herein are the property of March Hare Software Limited, and may not be reproduced, disclosed, revealed, or used in any way without prior written consent of March Hare Software Limited. This document and the information contained herein are subject to confidentiality agreement, violation of which will subject the violator to all remedies and penalties provided by the law. LIMITED WARRANTY. TO THE MAXIMUM EXTENT PERMITTED BY APPLICABLE LAW, March Hare Software Limited AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES AND CONDITIONS, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, IMPLIED WARRANTIES OR CONDITIONS OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, TITLE AND NON-INFRINGEMENT, WITH REGARD TO THIS DOCUMENT, AND ANY ADVICE OR RECOMMENDATION CONTAINED IN THIS DOCUMENT. NO OTHER WARRANTIES. TO THE MAXIMUM EXTENT PERMITTED BY APPLICABLE LAW, IN NO EVENT SHALL March Hare Software Limited OR ITS SUPPLIERS BE LIABLE FOR ANY SPECIAL, INCIDENTAL, INDIRECT, OR CONSEQUENTIAL DAMAGES WHATSOEVER (INCLUDING, WITHOUT LIMITATION, DAMAGES FOR LOSS OF BUSINESS PROFITS, BUSINESS INTERRUPTION, LOSS OF BUSINESS INFORMATION, OR ANY OTHER PECUNIARY LOSS) ARISING OUT OF THE USE OF OR INABILITY TO USE THE FOLLOWING DOCUMENTATION INCLUDING ANY RECOMMENDATION OR ADVICE THEREIN, EVEN IF March Hare Software Limited HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES. -

Version Control

Génie Logiciel Avancé (Advanced Software Engineering), Lecture 7: Version Control. Stefano Zacchiroli, [email protected], Laboratoire PPS, Université Paris Diderot - Paris 7, 5 May 2011. URL http://upsilon.cc/zack/teaching/1011/gla/ Copyright © 2011 Stefano Zacchiroli. License: Creative Commons Attribution-ShareAlike 3.0 Unported License http://creativecommons.org/licenses/by-sa/3.0/ Stefano Zacchiroli (Paris 7) Version Control 5 May 2011 1 / 58. Disclaimer: slides in English; interactive demos. Outline: 1 Version control (Configuration management; diff & patch; Version control concepts; Brief history of version control systems); 2 Revision Control System (RCS); 3 Concurrent Versions System (CVS); 4 Subversion; 5 Git; 6 References. Change: During the lifetime of a software project, everything changes: bugs are discovered and have to be fixed (code); system requirements change and need to be implemented; external dependencies (e.g. new version of hardware and software you depend upon) change; competitors might catch up. Most software systems can be thought of as a set of evolving versions; potentially, each of them has to be maintained concurrently with the others. Configuration management. Definition (Configuration Management): Configuration Management (CM) is concerned with the policies, processes, and tools for managing changing software systems.