The Software Testing Challenges and Methods

Ljubomir Lazić, SIEMENS d.o.o, Radoja Dakića 7, 11070 Beograd, Serbia & Montenegro, http://www.siemens.co.yu

Abstract:- The Software Testing Process (STP) has raised many challenging issues over past decades of software development practice, several of which remain open. The System/Software under test (SUT) continually increases in complexity of applied technology, of the software application domain model, and of the corresponding process knowledge and experience. Today’s SUTs have billions of possible inputs and outputs. How does one obtain adequate test coverage with a reasonable or even optimal number of test events, i.e. test cases? How does one measure test effectiveness, efficacy, benefits, risks (confidence) of project success, availability of resources, and the budget and time allocated to the STP? How does one plan, estimate, predict, control, evaluate and choose “the best” test scenario among hundreds of possible (considered, available, feasible) sets of test events (test cases)? How does one judge whether program behavior is satisfactory and reach a Pass/Fail result or a Go/No-go decision after a test run, i.e. does one have a Test Oracle? This paper describes the major issues encountered while developing the framework of the Integrated and Optimized Software Testing Process (IOSTP). The IOSTP framework combines several engineering and scientific areas, such as Design of Experiments, Modeling & Simulation, integrated practical software measurement, Six Sigma strategy, Earned (Economic) Value Management (EVM) and Risk Management (RM) methodology, through simulation-based software testing scenarios at various abstraction levels of the SUT, to manage a stable (predictable and controllable) software testing process at lowest risk, at an affordable price and time. The framework aims to significantly improve software testing efficiency and effectiveness for the detection and removal of requirements and design defects; over 3 years of deploying the IOSTP framework in our STP, we calculated the overall value returned on each dollar invested, i.e. an ROI of 100:1.

Key-Words:- software testing, optimization, simulation, continuous risk management, earned value management, test evaluation, measurement.

1 Introduction

The increasing cost and complexity of software development is leading software organizations in the industry to search for new ways, through process methodology and tools, of improving the quality of the software they develop and deliver. However, the overall process is only as strong as its weakest link. This critical link is software quality engineering as an activity and as a process. Testing is the key instrument for making this process happen.

Software testing has traditionally been viewed by many as a necessary evil, dreaded by both software developers and management alike, and not as an integrated and parallel activity staged across the entire software development life cycle. One thing is clear - by definition, testing is still considered by many as only a negative step usually occurring at the end of the software development process, while others now view testing as a “competitive edge” practice and strategy.

Solutions in software engineering are more complex: they interconnect more and more intricate technologies across multiple operating environments. With the increasing business demand for more software, coupled with the advent of newer, more productive languages and tools, more code is being produced in very short periods of time.

In software development organizations, increased complexity of product, shortened development cycles, and higher customer expectations of quality prove that software testing has become an extremely important software engineering activity. Software development activities, in every phase, are error prone, so defects play a crucial role in software development. We usually think of testing in software development as something we do when we run out of time or after we have developed code. However, the fundamental approach presented here focuses on testing as a fully integrated but independent activity with a lifecycle all its own, in which the people, the process and the appropriate automated technology are crucial for the successful delivery of the software-based system. Planning, managing, executing, and documenting testing as a key process activity during all stages of development is an incredibly difficult process.

Software vendors typically spend 30 to 70 percent of their total development budget, i.e. of an organization’s software development resources, on testing. Software engineers generally agree that the cost to correct a defect increases several times as the time elapsed between error injection and detection increases, depending on defect severity and software testing process maturity level [1,2].

Until the coding phase of software development, testing activities are mainly test planning and test case design. Computer-based Modeling and Simulation (M&S) is a valuable technique in test process planning when testing a complex Software/System under test (SUT), to evaluate the interactions of large, complex systems with many hardware, user, and other interfacing software components, such as Spacecraft Software and Air Traffic Control Systems, and in DoD Test and Evaluation (T&E) activities [4-6].

There is strong demand for increases in software testing effectiveness and efficiency. Software/System testing effectiveness is mainly measured by percentage of defect detection and defect leakage (containment), i.e. late defect discovery. Software testing efficiency is mainly measured by dollars spent per defect found and hours spent per defect found. To reach ever more demanding goals for effectiveness and efficiency, software developers and testers should apply new techniques such as computer-based modeling and simulation - M&S [6-9].

The results of computer-based simulation experiments with a particular embedded software system, an automated target tracking radar system (ATTRS), are presented in our paper [6]. The aim is to raise awareness about the usefulness and importance of computer-based simulation in support of software testing.

At the beginning of the software testing task the following question arises: how should the results of test execution be inspected in order to reveal failures? Testing by nature is measurement, i.e. test results must be analyzed and compared with desired behavior.

This paper is a contribution to software testing engineering, presenting challenges and corresponding methods implemented in the Integrated and Optimized Software Testing Process framework (IOSTP). The IOSTP framework combines several engineering and scientific areas, such as Design of Experiments, Modeling & Simulation, integrated practical software measurement, Six Sigma strategy, Earned (Economic) Value Management (EVM) and Risk Management (RM) methodology, through simulation-based software testing scenarios at various abstraction levels of the SUT, to manage a stable (predictable and controllable) software testing process at lowest risk, at an affordable price and time [6-22]. In order to significantly improve software testing efficiency and effectiveness for the detection and removal of requirements and design defects in our framework of IOSTP, during 3 years of IOSTP framework deployment to the STP of an embedded-software critical system such as the Automated Target Tracking Radar System [6,16,19], we calculated the overall value returned on each dollar invested, i.e. an ROI of 100:1.

The paper begins with an outline of fundamental challenges in software testing in section 2; then the problems with software testing and the implementation of state-of-the-art methods in the Integrated and Optimized Software Testing Process framework are described in section 3. In section 4, some details and experience of the methods implemented are presented. Finally, in section 5, some concluding remarks are given.

2 Fundamental challenges in software testing

2.1 Software quality engineering - as a discipline and as a practice (process and product)

Software Quality Engineering is composed of two primary activities - process level quality, which is normally called quality assurance, and product oriented quality, which is normally called testing. Process level quality establishes the techniques, procedures, and tools that help promote, encourage, facilitate, and create a software development environment in which efficient, optimized, acceptable, and as fault-free as possible software code is produced. Product level quality focuses on ensuring that the software delivered is as error-free as possible, functionally sound, and meets or exceeds the real user’s needs. Testing is normally done as a vehicle for finding errors in order to get rid of them. This raises an important point - then just what is testing?

Common definitions for testing - A Set of Testing Myths:

“Testing is the process of demonstrating that defects are not present in the application that was developed.”

“Testing is the activity or process which shows or demonstrates that a program or system performs all intended functions correctly.”

“Testing is the activity of establishing the necessary “confidence” that a program or system does what it is supposed to do, based on the set of requirements that the user has specified.”

All of the above myths are very common and still prevalent definitions of testing. However, there is something fundamentally wrong with each of these myths. The problem is this - each of these myths takes a positive approach towards testing. In other words, each of these testing myths represents an activity that proves that something works.

However, it is very easy to prove that something works but not so easy to prove that it does not work! In fact, if one were to use formal logic, it is nearly impossible to prove that defects are not present. Just because a particular test does not find a defect does not prove that a defect is not present. What it does mean is that the test did not find it.

These myths are still entrenched in much of how we collectively view testing, and this mind-set sets us up for failure even before we really start testing! So what is the real definition of testing?

“Testing is the process of executing a program/system with the intent of finding errors.”

The emphasis is on the deliberate intent of finding errors. This is much different from simply proving that a program or system works. This definition of testing comes from The Art of Software Testing by Glenford Myers. It was his opinion that computer software is one of the most complex products to come out of the human mind.
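The point that a passing test does not prove the absence of defects can be illustrated with a minimal sketch in Python. The function `is_leap_year`, its deliberately seeded defect, and both sets of test cases are hypothetical and are not taken from the IOSTP work; they only contrast tests chosen to show that a program works with tests chosen with the intent of finding errors.

```python
def is_leap_year(year: int) -> bool:
    """Hypothetical function under test; deliberately contains a defect."""
    # Seeded defect: the century rule (divisible by 100 but not by 400)
    # is missing, so 1900 and 2100 are wrongly reported as leap years.
    return year % 4 == 0


def run_tests(cases):
    """Return the (input, expected, actual) triples that reveal a failure."""
    failures = []
    for year, expected in cases:
        actual = is_leap_year(year)
        if actual != expected:
            failures.append((year, expected, actual))
    return failures


# "Positive" tests, chosen to demonstrate that the program works.
confirming_cases = [(2004, True), (2001, False), (2000, True)]

# Tests chosen with the intent of finding errors (century boundary).
probing_cases = [(1900, False), (2100, False)]

confirming_failures = run_tests(confirming_cases)
probing_failures = run_tests(probing_cases)

print("confirming tests revealed", len(confirming_failures), "failure(s)")
print("probing tests revealed", len(probing_failures), "failure(s)")
```

In this sketch every confirming test passes although the defect is present, while both probing tests at the century boundary reveal it.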

So why test in the first place? You know you can’t find all of the bugs. You know you can’t prove the code is correct. And you know that you will not win any popularity contests finding bugs in the first place. So why even bother testing when there are all these constraints? The fundamental purpose of software testing is to find problems in the software. Finding problems and having them fixed is the core of what a test engineer does. A test engineer should WANT to find as many problems as possible, and the more serious the problems the better. So it becomes critical that the testing process is made as efficient and as cost-effective as possible in finding those software problems. The primary axiom for the testing equation within software development is this:

“A test when executed that reveals a problem in the software is a success.”

The purpose of finding problems is to get them fixed. The benefit is code that is more reliable, more robust, more stable, and more closely matches what the real end-user wanted or thought they asked for in the first place! A tester must take a destructive attitude toward the code, knowing that this activity is, in the end, constructive. Testing is a negative activity conducted with the explicit intent and purpose of creating a stronger software product and is operatively focused on the “weak links” in the software. So if a larger software quality engineering process is established to prevent and find errors, we can then change our collective mind-set about how to ensure the quality of the software developed.

The other problem is that you will really never have enough time to test. We need to change our understanding and use the testing time we do have, by applying it to the earlier phases of the software development life cycle. You need to think about testing the first day you think about the system. Rather than viewing testing as something that takes place after development, focus instead on the testing of everything as you go along, to include the concept of operations, the requirements and specifications, the design, the code, and of course, the tests!

What’s So Special About Testing?
• Wide array of issues: technical, psychological, project management, marketing, application domain.
• Toward the end of the project, there is little slack left. Decisions have impact now. The difficult decisions must be faced and made.
• Testing plays a make-or-break role on the project.
  o An effective test manager and senior testers can facilitate the release of a high-quality product.
  o Less skilled testing staff creates more discord than their technical contributions (such as they are) are worth.

Five Fundamental Challenges to Competent Testing
• Complete testing is impossible (budget, schedule, resources, quality constraints)
• Testers misallocate resources because they fall for the company’s process myths
• Test groups operate under multiple missions, often conflicting, rarely articulated
• Test groups often lack skilled programmers, and a vision of appropriate projects that would keep programming testers challenged
• Software testing, as a part of the software development process, is human intensive work with high uncertainty, i.e. risks.

2.2 Complete testing is impossible

There are enormous numbers of possible tests. To test everything, you would have to (and this list is not complete):
• Test every possible input to every variable (physical, operator input).
• Test every possible combination of inputs to every combination of variables, because of input interactions.
• Test every possible sequence through the program.
• Test every hardware / software configuration, including configurations of servers not under your control.
• Test every way in which the user might try to use the program (use case scenarios).

One approach to the problem has been to (attempt to) simplify it away, by saying that you achieve “complete testing” if you achieve “complete coverage”.

What is coverage?
• Extent of testing of certain attributes or pieces of the program, such as statement coverage or branch coverage or condition coverage (If, Case, While and other structures).
• Extent of testing completed, compared to a population of possible tests, software requirements, specifications, environment characteristics etc.

Typical definitions are oversimplified. They miss, for example:
  o Interrupts and other parallel operations
  o Interesting data values and data combinations
  o Missing code

In practice, the number of variables we might measure is stunning, but many we cannot control, such as environment characteristics.

Coverage measurement is an interesting way to tell that you are far away from complete testing, but testing in order to achieve a “high” coverage is likely to result in development of a mass of low-power tests. People optimize what we measure them against, at the expense of what we don’t measure. Brian Marick raises this and several other issues in his papers at www.testing.com (e.g. How to Misuse Code Coverage). Brian has been involved in development of several of the commercial coverage tools.

Another way people measure completeness, or extent, of testing is by plotting bug curves, such as:
• New bugs found per week
• Bugs still open (each week)
• Ratio of bugs found to bugs fixed (per week)

We fit the curve to a theoretical curve, often a probability distribution, and read our position from the curve. At some point, it is “clear” from the curve that we’re done.

A Common Model (Weibull) and its Assumptions
1. Testing occurs in a way that is similar to the way the software will be operated.
2. All defects are equally likely to be encountered.
3. All defects are independent.
4. There is a fixed, finite number of defects in the software at the start of testing.
5. The time to arrival of a defect follows the Weibull distribution.
6. The number of defects detected in a testing interval is independent of the number detected in other testing intervals for any finite collection of intervals.

In reality, it is absurd to rely on a distributional model when every assumption it makes about testing is obviously false for an actual project.

BUT WHAT DOES THAT TELL US? HOW SHOULD WE INTERPRET IT?

Earlier in testing history: Pressure is to increase bug counts
  o Run tests of features known to be broken or incomplete.
  o Run multiple related tests to find multiple related bugs.
  o Look for easy bugs in high quantities rather than hard bugs.
  o Less emphasis on infrastructure, automation architecture, tools and more emphasis on bug finding (short term payoff but long term inefficiency).

Later in testing history: Pressure is to decrease new bug rate
• Run lots of already-run regression tests
• Don’t look as hard for new bugs.
• Shift focus to appraisal, status reporting.
• Classify unrelated bugs as duplicates
• Classify related bugs as duplicates (and close them), hiding key data about the symptoms / causes of the problem.
• Postpone bug reporting until after the measurement checkpoint (milestone). (Some bugs are lost.)
• Report bugs informally, keeping them out of the tracking system
• Testers get sent to the movies before measurement checkpoints.
• Programmers ignore bugs they find until testers report them.
• Bugs are taken personally.
• More bugs are rejected.

When you get past the simplistic answers, you realize that the time needed for test-related tasks is infinitely larger than the time available. Example: the time you spend on analyzing, troubleshooting, and effectively describing a failure is no longer available for:
• Designing tests; Documenting tests; Executing tests; Automating tests; Reviews, inspections; Supporting tech support; Retooling; Training other staff.

Today’s business mantra of better, faster, cheaper requires even more tradeoffs:
• From an infinitely large population of tests, we can only run a few. Which few do we select?
• Competing characteristics of good tests. One test is better than another if it is:
  o More powerful
  o More likely to yield significant (more motivating, more persuasive) results
  o More credible
  o Representative of events more likely to be encountered by the user
  o Easier to evaluate
  o More useful for troubleshooting
  o More informative
  o More appropriately complex
  o More likely to help the tester or the programmer develop insight into some aspect of the product, the customer, or the environment

No test satisfies all of these characteristics. How do we balance them?

2.3 Process myths in which we trust too much

Every actor in the software development process (SDP) says, you can trust me on this:
• We follow the waterfall lifecycle
• We collect all of the product requirements at the start of the project, and we can rely on the requirements document throughout the project.
• We write thorough, correct specifications and keep them up to date.
• The customer will accept a program whose behavior exactly matches the specification.
• We fix every bug of severity (or priority) level X and we never lower the severity level to avoid having to fix the bug.
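As an aside on the earlier argument that complete testing is impossible, the size of the test population can be sketched with simple arithmetic. The input variables, their domain sizes, and the execution rate below are hypothetical values chosen for illustration, not measurements from any project.

```python
import math

# Hypothetical input variables of a SUT and the number of distinct
# values each one can take (illustrative assumptions only).
domain_sizes = {
    "operating_mode": 4,
    "sensor_reading": 2 ** 16,      # a 16-bit value
    "operator_command": 50,
    "hardware_configuration": 12,
}


def exhaustive_test_count(sizes, sequence_length=1):
    """Tests needed to cover every combination of input values,
    for every ordered sequence of `sequence_length` consecutive steps."""
    single_step = math.prod(sizes.values())
    return single_step ** sequence_length


def years_to_run(test_count, tests_per_second):
    """Wall-clock years needed to execute test_count tests at a fixed rate."""
    seconds_per_year = 365 * 24 * 3600
    return test_count / tests_per_second / seconds_per_year


single = exhaustive_test_count(domain_sizes)
pairs = exhaustive_test_count(domain_sizes, sequence_length=2)

print("combinations for one step:", single)
print("combinations for two-step sequences:", pairs)
print("years at 1000 tests/s (two steps):", years_to_run(pairs, 1000))
```

Even with only four variables and sequences of two steps, exhaustive execution in this sketch would take far longer than any project schedule, which is why the selection of a few tests from the population becomes the real problem.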

Amazingly, many testers believe process myths like these, Project after Project, and rely on them, Project after Project.

Effects of relying on process myths are:
• Testers design their tests from the specs / requirements, long before they get the code. After all, we know what the program will be like.
• Testers evaluate program capability in terms of conformance to the written requirements, suspending their own judgment. After all, we know what the customer wants.
• Testers evaluate program correctness only in terms of conformance to specification, suspending their own judgment. After all, this is what the customer wants.
• Testers build extensive, fragile, GUI-level regression test suites. After all, the UI is fully specified. We know it’s not going to change.

The oracle problem is not considered at all, in spite of the difficulty of finding a method that lets you determine whether a program passed or failed a test.

2.4. Multiple Missions

If your Testing Maturity Model (TMM) level is low, the testers’ multiple missions are rarely articulated, or are stated wrongly, for example:
• Find defects
• Block premature product releases
• Help managers make ship / no-ship decisions
• Minimize technical support costs
• Assess conformance to specification
• Conform to regulations
• Minimize safety-related lawsuit risk
• Find safe scenarios for use of the product
• Assess quality
• Verify correctness of the product
• Assure quality

Do you know what your group’s mission is? Does everyone in your company agree?

2.5. Weak team composition

A systematic approach to product development (acquisition) which increases customer satisfaction through a timely collaboration of necessary disciplines throughout the life cycle is required, such as Integrated Product and Process Development (IPPD) [23]. Particular note should be made of the Team sub-process, as the creation and implementation of an Integrated Product Team (IPT) is, perhaps, the most critical ingredient for the success of the entire IPPD process. Some wrong understandings of team composition cause the several confusions listed below.

The optimal test group has diversity of skills and knowledge. This is easily misunderstood:
• Weakness in programming skill is seen as weakness in testing skill (and vice-versa).
• Strength in programming is seen as assuring strength in testing.
• Many common testing practices do not require programming knowledge or skill.
• People who want to be in Product Development but who can’t code have nowhere else to go.
• People who are skilled programmers are afraid of dead-ending in a test group.

The capabilities of the testing team can greatly affect the success, or failure, of the testing effort. An effective testing team includes a mixture of technical and domain expertise relevant to the software problem at hand. It is not enough for a testing team to be technically proficient with the testing techniques and tools necessary to perform the actual tests. Depending on the complexity of the domain, a test team should also include members who have a detailed understanding of the problem domain. This knowledge enables them to create effective test artifacts and data and to effectively implement test scripts and other test mechanisms.

In addition, the testing team must be properly structured, with defined roles and responsibilities that allow the testers to perform their functions with minimal overlap and without uncertainty regarding which team member should perform which duties. One way to divide testing resources is by specialization in particular application areas and non-functional areas. The testing team may also have more role requirements than it has members, which must be considered by the test manager.

As with any team, continual evaluation of the effectiveness of each test team member is important to ensuring a successful test effort. Evaluation of testers is performed by examining a variety of areas, including the types of defects generated, and the number and types of defects missed. It is never good practice to evaluate a test engineer's performance using numbers of defects generated alone, since this metric by itself does not tell the whole story. Many factors must be considered during this type of evaluation, such as complexity of functionality tested, time constraints, test engineer role and responsibilities, experience, and so on. Regularly evaluating team members using valid criteria makes it possible to implement improvements that increase the effectiveness of the overall effort.

2.6. Software testing, as a part of software development process, is human intensive work with high uncertainty i.e. risks

There are at least four domains of software engineering where uncertainty is evident: uncertainty in requirements analysis, uncertainty in the transition from system requirements to design and code, uncertainty in software re-engineering and uncertainty in software reuse [24]. Software testing, like other development activities, is human intensive and thus introduces uncertainties and obeys the Maxim of Uncertainty in Software Engineering (MUSE) [24]. The aforementioned uncertainties may affect the development effort and should therefore be accounted for in the test plan. We identify three aspects of test planning where uncertainty is present: the artifacts under test, the test activities planned, and the plans and their fulfillment themselves. According to MUSE, uncertainty permeates these processes and products. Plans to test these artifacts, therefore, will carry their uncertainties forward. In particular, many testing activities, such as test result checking, are highly routine and repetitious and thus are likely to be error-prone if done manually, which introduces additional uncertainty. Humans carry out test planning activities at an early stage of development, thereby introducing uncertainties into the resulting test plan. Also, test plans are likely to reflect uncertainties that are, as described above, inherent in software artifacts and activities. Care must be taken during test planning to decide on the method of results comparison. Oracles, also, are required for validation, and the nature of an oracle depends on several factors under the control of the test designer and automation architect [14,20]. Different oracles may be used for a single automated test and a single oracle may serve many test cases. If test results are to be analyzed, some type of oracle is required: a) an oracle that gives the exact outcome for every program case, b) an oracle that provides a range of program outcomes, and c) an oracle that cannot provide the program outcome for some test cases.

3. Integrated and Optimized Software Testing Process Framework

3.1 The problems with testing

Testing is inefficient for the detection and removal of requirements and design defects. As a result, lessons learned in testing can only help prevent defects in the development of subsequent software and subsequent process improvement. Unlike other engineering disciplines, software development produces products of undetermined quality. Testing is then used to find defects to be corrected. Instead of testing to produce a quality product, software engineers should design in quality [13,18,25]. The purpose of testing should not be to identify defects inserted in earlier phases, but to demonstrate, validate, and certify the absence of defects.

Beginning with the Industrial Revolution, many technical fields evolved into engineering fields, but sometimes not until after considerable damage and loss of life. In each case, the less scientific, less systematic, and less mathematically rigorous approaches resulted in designs deficient in safety, reliability, efficiency, or cost. Furthermore, while other engineering practices characteristically attempt to consciously prevent mistakes, software engineering seems only to correct defects after testing has uncovered them [26].

Many software professionals have espoused the opinion that there are "always defects in software [27]." Yet in the context of electrical, mechanical, or civil engineering the world has come to expect defect-free circuit boards, appliances, vehicles, machines, buildings, bridges, etc.

3.1.1 Follow the basics

All models of the software development life cycle center upon four phases: requirements analysis, design, implementation, and testing. The waterfall model requires each phase to act on the entire project. Other models use the same phases, but for intermediate releases or individual software components.

Software components should not be designed until their requirements have been identified and analyzed. Software components should not be implemented until they have been designed. Even if a software component contains experimental features for a prototype, or contains only some of the final system's features as an increment, that prototype or incremental software component should be designed before it is implemented.

Software components cannot be tested until after they have been implemented. Defects in software cannot be removed until they have been identified. Defects are often injected during requirements analysis or design, but testing cannot detect them until after implementation. Testing is therefore inefficient for the detection of requirements and design defects, and thus inefficient for their removal.

3.1.2 Testing in the life cycle

Burnstein, et al. have developed a Testing Maturity Model (TMM) [28] similar to the Capability Maturity Model® [29]. The TMM states that to view testing as the fourth phase of software development is at best Level 2. However, it is physically impossible to test a software component until it has been implemented.

The solution to this difference of viewpoint can be found in TMM Level 3, which states that one should analyze test requirements at the same time as analyzing software requirements, design tests at the same time as designing software, and write tests at the same time as implementing code. Thus, test development parallels software development. Nevertheless, the tests themselves can only identify defects after the fact.

Furthermore, testing can only prove the existence of defects, not their absence. If testing finds few or no defects, it is either because there are no defects, or because the testing is not adequate. If testing finds too many defects, it may be the fault of the product, or of the testing procedures themselves.

Branch coverage testing cannot exercise all paths under all states with all possible data. Regression testing can only exercise portions of the software, essentially sampling usage in the search for defects.

The clean-room methodology uses statistical quality certification and testing based on anticipated usage. Usage probability distributions are developed to determine which systems are most likely to be used most often [30]. However, clean-room testing is predicated upon mathematical proof of each software product; testing is supposed to confirm quality, not locate defects. This scenario-based method of simulation and statistically driven testing has been reported as 30 times better than classical coverage testing [31].

Page-Jones dismisses mathematical proofs of correctness because they must be based on assumptions [32], yet both testing and correctness verification are done against the software's requirements. Both are therefore based on the same assumptions; incorrect assumptions result in incorrect conclusions. This indictment of proofs of correctness must also condemn testing for the same reason.

The clean-room methodology's rigorous correctness verification approaches zero defects prior to any execution [33], and therefore prior to any testing. Correctness verification by mathematical proof seems better than testing to answer the question, "Does the software product meet requirements?"

Properly done test requirements analysis, design, and implementation that parallels the same phases of software development may help in early defect detection. However, done improperly (as when developers test their own software), this practice may result in tests that only test the parts that work, and in software that passes its tests but nevertheless contains defects. Increasingly frustrated users insist that there are serious defects in the software, while increasingly adversarial developers insist that the tests reveal no defects.

Test requirements analysis done separately from software requirements analysis can make successful testing impossible. A multi-million dollar project was only given high-level requirements, from which the software developers derived their own set of (often undocumented) lower-level requirements, to which they designed and implemented the software. After the software had been implemented, a test manager derived his own set of lower-level requirements, one of which had not even been considered by the developers. The design and test requirements were mutually exclusive in this area, so it was impossible for the software to pass testing. This failure scrapped the entire project and destroyed several careers [34].

3.1.3 Defect removal and prevention

Test-result-driven defect removal is detective work; the maintenance programmer must identify and track down the cause within the software. Defect removal is also similar to archeology, since all previous versions of the software, and documentation of all previous phases of the development, may have to be researched, if available. Using testing to validate that software is not defective [33], rather than to identify and remove defects, moves their removal from detection to comparative analysis [31].

TMM Level 3 integrates testing into the software lifecycle. This includes testing each procedure or module as soon as possible after each is written. Integration testing is also done as soon as possible after the components are integrated. Nevertheless, the concept of defect prevention is not addressed until TMM Level 4, and then only as a philosophy for the entire testing process.

Testing cannot prevent the occurrence of defects; it can only aid in the prevention of their recurrence in future components. This is why neither CMM nor TMM discusses actual defect prevention, or more accurately, subsequent defect prevention, until Level 5. Waiting until one has reached Level 5 before trying to prevent defects can be very costly, both in terms of correcting defects not prevented and in lost business and goodwill from providing defective software.

Waiting until implementation to test a component for defects caused in much earlier phases seems too much of a delay; yet, an emphasis on testing for defect prevention is exactly that. An ounce of prevention may be worth a pound of cure, but one cannot use a cure as if it were a preventative.

There are several methods currently available to accomplish defect prevention at earlier levels of maturity, such as Cleanroom Software Engineering [33], Zero Defect Software [35], and other provably correct software methods [36].

3.1.4 Software quality and process improvement

Gene Krinz, Mission Operations' director for the NASA space shuttle, is quoted as saying about the quality of the flight software, "You can't test quality into the software [35]." Clean-room methods teach that one can neither test in nor debug in quality [33]. If quality was not present in the requirements analysis, design, or implementation, testing cannot put it there.

One of TMM's Level 3 maturity goals is software quality evaluation. While many quality attributes may be measured by testing, and many quality goals may be linked to testing's ultimate objectives, most aspects of software quality come from the quality of its design. Procedure coupling and cohesion [32], measures of object-oriented design quality such as encapsulation, inheritance, encumbrance, class cohesion, type conformance, closed behavior, and other quantitative measures of software quality, are established in the design phase. They should be measured soon after each component is designed; do not wait until after implementation to measure them with testing.

Some authors have suggested that analyzing, designing, and implementing tests in parallel with the products to be tested will somehow improve the processes used to develop those products. However, since the software product testers should be different from those who

developed it, there needs to be some way for the testers to communicate their process improvement lessons learned to the developers. Testers and developers should communicate effectively; every developer should also act as a tester (but only for components developed by others).

3.1.5 Designing in quality

One of the maturity subgoals of subsequent defect prevention is establishing a causal analysis mechanism to identify the root causes of defects. Already there is evidence that most defects are created in the requirements analysis and design phases. Some have put the percentage of defects caused in these two phases at 70 percent [27].

Clear communication and careful documentation are required to prevent injecting defects during the requirements analysis phase. Requirements are characteristically inconsistent, incomplete, ambiguous, nonspecific, duplicate, and inconstant. Interface descriptions, prototypes, use cases, models, contracts, pre- and post-conditions, etc. are all useful tools.

To prevent injecting defects during the design phase, software components must never be designed until a large part of their requirements have been identified and analyzed. The design should be thorough, using such things as entities and relationships, data and control flow, state transitions, algorithms, etc. Peer reviews, correctness proofs and verifications, etc. are good ways to demonstrate that a design satisfies its requirements.

Preventing the injection of defects during the implementation phase requires that software components never be implemented until they have been designed. It is far too easy to implement a software component while the design is still evolving, sometimes just in the developer's mind. Poor documentation and a lack of structure in the code usually accompany an increased number of defects per 100 lines of code [27]. As I mentioned earlier, this applies even to prototype and incremental software components; those experimental or partial features should be designed before implementation.

The clean-room method has an excellent track record of near defect-free software development, as documented by the Software Technology Support Center, Hill AFB, Utah, regardless of Daich's statements to the contrary [27]. Clean-room is compatible with CMM Levels 2 through 5 [33], and can be implemented in phases at all these levels [30].

3.1.6 Manage testing as a continuous process

The test program is one of the most valuable resources available to a program manager. Like a diamond mine or an oil field, the test program can be the source of vast riches for the program manager trying to gauge the success of his/her program. Unfortunately, it is often a resource that goes untapped. Few program managers exploit the information available to them through their test program. Rather, they view testing as a necessary evil - something that must be "endured" with as little pain as possible. This attitude often clouds their horizon and effectively shrouds the valuable information available via the test program.

Used effectively, the test program can provide a rapid and effective feedback mechanism for the development process, identifying failures in the software construction process and allowing for early correction and process improvement. These benefits only accrue, however, if the test program is managed like a "program", with the discipline, rigor, and structure expected of a program. This includes establishing objectives, devising an implementation strategy, assigning resources and monitoring results.

Used in this context, testing becomes a pervasive, systematic, objective confirmation that the other parts of the development process are achieving the desired results. The program manager who exploits the information available through testing recognizes that the test program not only evaluates the product, it also evaluates the process by which the product was created. If a defect is found in testing, other checks and balances installed further upstream failed. This information can then be used for real-time improvement of the process.

This is not to say that testing is a panacea for all program problems. For software systems of any significance, it is a practical impossibility to test every possible sequence of inputs and situations that might be encountered in an operational environment. The objective of a test program is to instill confidence in users and developers that the software product will operate as expected when put into service. Because it is impossible to check all possible situations, testing is, by its very nature, a sampling discipline. A typically small number of test cases will be executed and, from the results, the performance of the system will be forecast. Testing is by its very nature a focused snapshot of a system. It is also inherently inefficient. Like the oil field mentioned earlier, for all the logic and analysis done beforehand, many probes come up "dry", with the system operating exactly as expected.

This is not to slight the value of testing. A test that is tailored to and consistent with development methodologies provides a traceable and structured approach to verifying requirements and quantifiable performance.

Preparation of test cases is something that should start very early in a program. The best place to start them is during requirements definition. During the infancy stages of a program, focus on testing provides significant advantages. Not only does it ensure that the requirements are testable, but the process of constructing test cases forces the developer to clarify the requirement, providing for better communication and understanding. As test cases are designed, they should

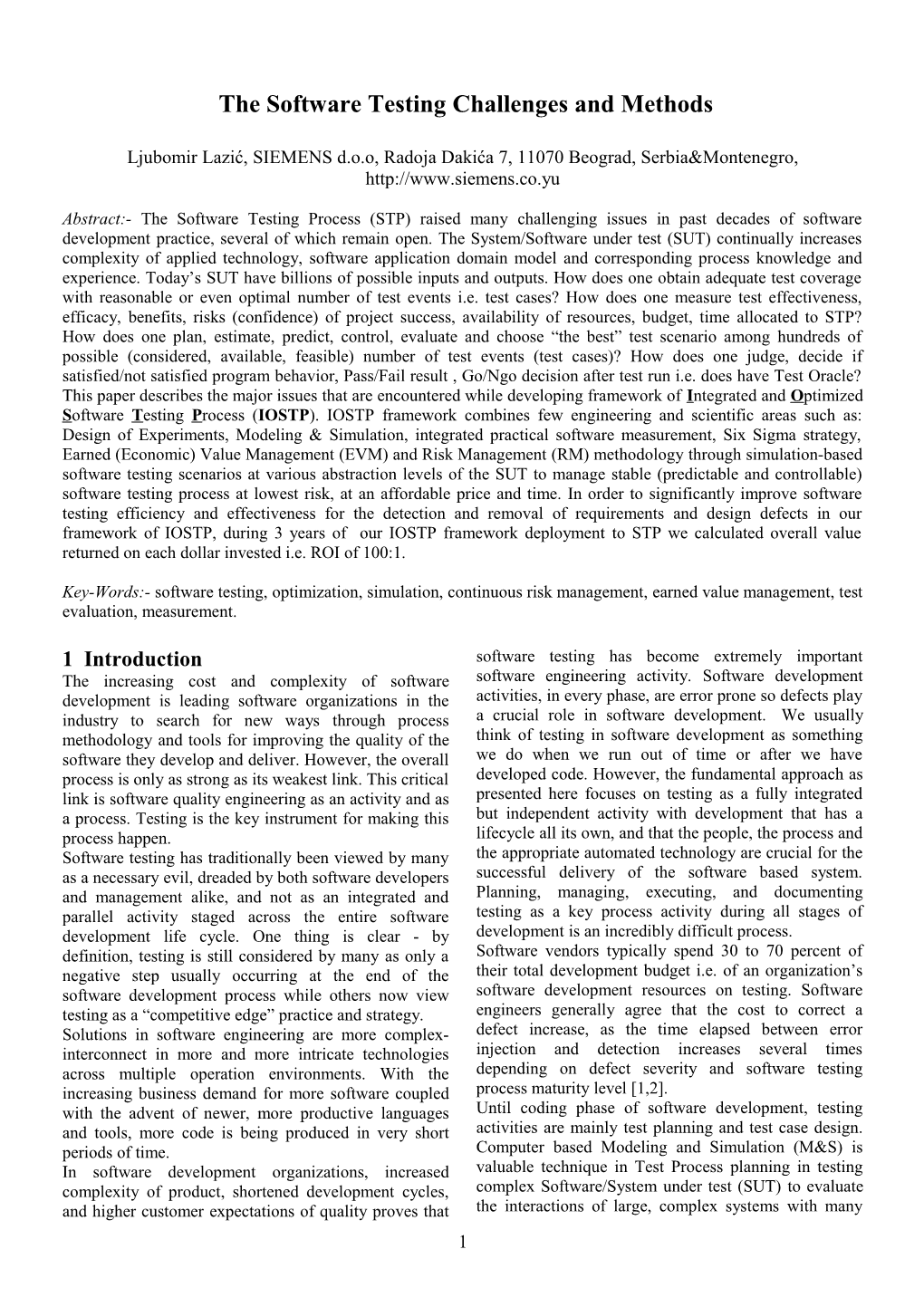

be documented along with their assumptions. These products should become contract deliverables and should form the basis for regression tests needed during the maintenance phase of the software life cycle. However, test cases are not immune to errors. As such they, too, should be inspected to eliminate defects.

Failed software projects are usually easy enough to analyze after the fact. You can almost always spot some fundamental sound practice that wasn't followed; if it had been followed, the project would have succeeded, or at least failed less completely. Often the good practices that weren't followed are basic ones that practically everyone knows are essential. When that happens, project management can always cite a reason why the practice wasn't followed, an excuse or rationalization. To save everyone a lot of time, we have collected some of the most common bad excuses for not using good practices... The next time you're considering managing a project without a careful risk management plan.

Think of a test case as a question you ask of the program. What are the attributes of an excellent question?
• Information, because?
» Help to reduce project’s uncertainty i.e. STP risks. How much do you expect to learn from this test?
• Power, because?
» If two tests have the potential to expose the same type of error, one test is more powerful if it is more likely to expose the error.
• Credibility, because?
» Failure of the test should motivate someone to fix it, not to dismiss it.
• Feasibility, because?
» Must give answer, how hard is it to set up the test, run the test, and evaluate the result?

3.1.7 Estimating quality

One of the reasons why projects are late and exceed their budgets is because they have so many defects that they cannot be released to users. Many of these defects escape detection until late in the testing cycle when it is difficult to repair them easily. Then, the testing process stretches out indefinitely. The cost and effort for finding and fixing defects may be the largest identifiable IT system cost estimate, so defects cannot be ignored in the estimating process.

Defect potentials are the sum of errors that occur because of:
• Requirements errors
• Design errors
• Coding errors
• User documentation errors
• Bad fixes or secondary errors.

Defect levels affect IT project costs and schedules. The number and efficiency of defect removal operations can have major impacts on schedules, cost, effort, quality, test and maintenance. Quality estimates must consider defect potentials, defect removal efficiency, and maintenance.

All system defects are not equally easy to remove. Requirements errors, design problems, and bad fixes tend to be the most difficult. At the time of delivery, defects originating in requirements and design tend to far outnumber coding defects. While a number of approaches can be used to help remove defects, formal design and code inspections have been found to have the highest defect removal efficiency.

Typically, more than 50% of the global IT population is engaged in modifying existing applications rather than developing new applications. Estimates are required for maintenance for initial releases to repair defects in software applications to correct errors and for enhancements to add new features to software applications. Enhancements can be major, in which features are added or changed in response to explicit user requests, or minor, in which some small improvement from the user’s point of view is added. Major enhancements are formal and rigorous, while minor enhancements may not be budgeted or charged back to users.

Maintenance or normal defect repairs involve keeping software in operational condition after a crash or defect report. Warranty repairs are technically similar to normal maintenance defect repairs, but they differ in a business or legal sense because the clients who report the defects are covered by an explicit warranty.

Maintenance or defect repairs have many complicating factors. These include the severity level of the defect, which affects the turnaround time required for the maintenance activity, and other factors such as:
• Abeyant defects: the maintenance team is unable to make the same problem occur
• Invalid defects: the error is not actually caused by the software application
• Bad fix injection: the repair itself actually contains a new defect
• Duplicate defects: defects are reported by multiple users that only need to be repaired once but involve a substantial amount of customer support time.

Customer support estimates are also required. They are based in part on the anticipated number of bugs in the application, but also on the number of users or clients. Typically, the customer-support personnel do not fix the problems themselves, but record the symptoms and route the problem to the correct repair group. However, the same problems tend to occur repeatedly. Therefore, after a problem has been fixed, customer-support personnel are often able to guide clients through workarounds or temporary repairs.

Error-prone module removal also is a significant cost. Research has shown that software bugs in large systems are seldom randomly distributed and instead tend to accumulate in a small number of portions, called error-prone modules. They may never stabilize because the bad fix injection rate can approach 100%, which means that each attempt to repair a bug may introduce a new bug. The cost of defect repairs then may exceed the original development cost and may continue to grow indefinitely until the error-prone module is totally eliminated. The use of code inspections is recommended.

Other maintenance efforts involve code restructuring, performance optimization, migration across platforms, conversion to new architectures, reverse engineering, restructuring, dead code removal, dormant application removal, and retirement or withdrawal of the application from active service.

To estimate maintenance requirements, analogy, parametric models, or a fixed budget can be used.

Overall, it is recommended to:
• Detect and repair defects early
• Collect and analyze defect metrics
• Recognize the tradeoffs between productivity and quality
• Tailor the quality measurements to fit the project and the phase of development.

Define quality carefully and often and agree upon acceptable defect rates early in the process. Then, include defect repair rates in estimates.
o Reduced cycle time of product development is critical to companies’ success
o Testing accounts for 40-75% of the effort
o Requirement defects account for 40-50% of failures detected during testing
o Members spend 50% of test time debugging test scripts
o A member reported that feature interaction problems have resulted in as many as 30 test iterations prior to release

3.2 IOSTP framework state-of-the-art methods implementation

Unlike conventional approaches to software testing (e.g. structural and functional testing) which are applied to the software under test without an explicit optimization goal, the IOSTP with embedded Risk Based Optimized STP (RBOSTP) approach designs an optimal testing strategy to achieve an explicit optimization goal, given a priori [6,22]. This leads to an adaptive software testing strategy. A non-adaptive software testing strategy specifies what test suite or what next test case should be generated, e.g. random testing methods, whereas an adaptive software testing strategy specifies what testing policy should be employed next and thus, in turn, what test suite or test case should be generated next in accordance with the new testing policy to maximize test activity efficacy and efficiency subject to time-schedule and budget constraints. The process is based on a foundation of operations research, experimental design, mathematical optimization, statistical analyses, as well as validation, verification, and accreditation techniques. The use of state-of-the-art methods and tools for planning, information, management, design, cost trade-off analysis, modeling and simulation, and Six Sigma strategy significantly improves STP effectiveness. Figure 1 graphically illustrates a generic IOSTP framework [12].

The focus of this paper is a description of IOSTP with embedded RBOSTP Approach to Testing Services that:
• Integrate testing into the entire development process
• Implement test planning early in the life cycle via Simulation based assessment of test scenarios
• Automate testing, where practical to increase testing efficiency
• Measure and manage testing process to maximize risk reduction
• Exploit Design of Experiments techniques (optimized design plans, Orthogonal Arrays etc.)
• Apply Modeling and Simulation combined with Prototyping
• Continually improve testing process by pro-active, preventive (failure mode analysis) Six Sigma DMAIC model
• Continually monitor Cost-Performance Trade-Offs (Risk-based Optimization model, Economic Value and ROI driven STP)

Fig. 1 Integrated and optimized software testing process (IOSTP) framework [12]

Framework models are similar to the structural view, but their primary emphasis is on the (usually singular) coherent structure of the whole system, as opposed to concentrating on its composition. The IOSTP framework model targets the specific software testing domains or problem classes described above. IOSTP is a systematic approach to product development (acquisition) which increases customer satisfaction through a timely collaboration of necessary disciplines throughout the life cycle. Successful definition and implementation of IOSTP can result in:
• Reduced Cycle Time to Deliver a Product
• Reduced System and Product Costs
• Reduced Risk
• Improved Quality

4 IOSTP framework state-of-the-art methods implementation experiences

The use of state-of-the-art methods and tools for planning, information, management, design, cost trade-off analysis, and modeling and simulation significantly improves STP effectiveness. These IOSTP methods and tools include:

Information Technology and Decision Support
Document management, process documentation and configuration control (i.e., Configuration Management) are critical activities in the successful implementation of IOSTP framework [12]. A common information system is needed to provide opportunities for team members to access product or process information at any time, from any location. Access should be provided to design information; automated tools; specifications and standards; schedule and cost data; documentation; process methodologies; program tracking; program and process metrics; etc.

Trade-Off Studies and Prioritization
To fully leverage the IOSTP framework, design trade-offs that optimize system requirements vs. cost should be performed during the earliest phases of the software life cycle. Product/process performance parameters can be traded-off against design, development, testing, operations and support, training requirements, and overall life cycle costs. System attributes such as mission capability, operational safety, system readiness, survivability, reliability, testability, maintainability, supportability, interoperability and affordability also need to be considered. Quality Function Deployment (QFD) is one technique for evaluating trade-off scenarios [13,18]. It is predicated on gaining an understanding of what the end user really needs and expects. The QFD methodology allows for tracking/tracing trade-offs through various levels of the project hierarchy, from requirements analysis, through the software development process, to operational and maintenance support. Testing represents a significant portion of the software development efforts that must be integrated in parallel to QFD.

Risk-Based Optimization of Software Testing Process i.e. RBOSTP is part of a proven and documented IOSTP [12,17] designed to improve the efficiency and effectiveness of the testing effort assuring the low project risk of developing and maintaining high quality complex software systems within schedule and budget constraints [21,22]. Basic considerations of RBOSTP are described in [21], and some RBOSTP implementation issues and experience results are presented in [22]. In our article [22], we describe how RBOSTP combines Earned (Economic) Value Management (EVM) and Risk Management (RM) methodology through simulation-based software testing scenarios at various abstraction levels of the system/software under test activities to manage stable (predictable and controllable) software testing process at lowest risk, at an affordable price and time.

Cost-Performance trade-offs i.e. IOSTP optimization model
Activity-Based Costing (ABC), which focuses on those activities that bring a product to fruition, is considered a valuable tool for IOSTP framework cost analysis. Costs are traced from IOSTP activities to products, based on the consumption of each product of those activities. The cost of the product is measured as the sum of all activities performed, including overheads, capital costs, etc. Stakeholders are most interested in the benefits that are available and the objectives that can be achieved. The benefit-based test reports present this clearly. Project management is most interested in the risks that block the benefits and objectives. The benefits-based test reports focus attention on the blocking risks so that the project manager can push harder to get the tests that matter through [22]. If testers present risk and benefits based test reports, the pressure on testers is simply to execute the outstanding tests that provide information on risk. The pressure on developers is to fix the faults that block the tests and the risks of most concern. Testers need not worry so much about justifying doing more testing, completing the test plan or downgrading “high severity” incidents to get through the acceptance criteria. The case for completing testing is always self-evident: has enough test evidence been produced to satisfy the stakeholders’ need to deem the risks of most concern closed? The information required by stakeholders to make a release decision with confidence might only be completely available when testing is completed. Otherwise, they have to take the known risks of release.

How good is our testing? Our testing is good if we present good test evidence. Rather than getting so excited about the number of faults we find, our performance as testers is judged on how clear is the test evidence that we produce. If we can provide evidence to stakeholders for them to make a decision at an acceptable cost and we can squeeze this effort into the time we are given, we are doing a good testing job. This is a different way of thinking about testing. The definition of good testing changes from one based on faults found to one based on the quality of information provided. Consider what might happen if, during a test stage, a regression test detects a fault. Because the test fails, the risk that this test partially addresses becomes open again. The risk-based test report may show risks being closed and then re-opened because regression faults are occurring. The report provides a clear indication that things are going wrong: bug fixes or enhancements are causing problems. The report brings these anomalies directly to the attention of management.

The main task is the development of a versatile Optimization Model (OM) for assessing the cost and effectiveness of alternative test, simulation, and evaluation strategies. The System/Software under test and corresponding testing strategy-scenario make up a closed-loop feedback control system. At the beginning of software testing our knowledge of the software under test is limited. As the system/software testing proceeds, more testing data are collected and our understanding of the software under test is improved. Software development process parameters (e.g. software quality, defect detection rates, cost etc.) of concern may be estimated and updated, and the software testing strategy is accordingly adjusted on-line. The important ingredients in successful implementation of IOSTP with embedded RBOSTP are (1) a thoughtful and thorough evaluation plan that covers the entire life cycle process, (2) early identification of all the tools and resources needed to execute that software test and evaluation process plan and timely investment in those resources, (3) assuring the credibility of the tools to be employed, and (4) once testing is accomplished, using the resulting data to improve the efficacy of the test event, models and simulations.

In order to provide stable (controlled and predictable) IOSTP we integrated two of the leading approaches: Earned (Economic) Value Management (EVM) and Risk Management (RM). These stand out from other decision support techniques because both EVM and RM can and should be applied in an integrated way across the organization, which some authors [40,41] have recently recognized as Value-Based Testing. Starting at the project level, both EVM and RM offer powerful insights into factors affecting project performance. While this information is invaluable in assisting the project management task, it can also be rolled up to portfolio, program, departmental or corporate levels, through the use of consistent assessment and reporting frameworks. This integration methodology operates at two levels with exchange of information. The higher, decision making level takes into account the efficacy and costs of models, simulations, and other testing techniques in devising effective programs for acquiring necessary knowledge about the system under test. The lower, execution level considers the detailed dimensions of the system knowledge sought and the attributes of the models, simulations, and other testing techniques that make them more or less suitable to gather that knowledge. The OM is designed to allow planners to select combinations of M&S and/or tests that meet the knowledge acquisition objectives of the program. The model is designed to consider the system as a whole and to allocate resources to maximize the benefits and credibility of applied M&S class associated with the overall IOSTP with embedded RBOSTP program [22].

Prototyping
Prototypes provide a representation of a product/system under development. They facilitate communications between software designers and product/system users by allowing users to better describe or gauge actual needs. For IOSTP, there is a need to rapidly develop prototypes as early as possible during software design and development. Customer manipulation helps clarify requirements and correct misconceptions about what the product should or should not do, thereby reducing the time to complete a fully functional design, and reducing the risk of delivering an unacceptable product. Software prototyping must be controlled to eliminate unnecessary prototyping cycles that cause cost and schedule overruns. It is also necessary to guard against scope creep, in that users may want to add features/enhancements that go beyond the scope of contracted requirements.

Modeling and Simulation
Modeling and simulation (M&S) techniques are a cost-effective approach to reduce the time, resources and risks, and to improve the quality, of acquired systems. Validated M&S can support all phases of IOSTP, and should be appropriately applied throughout the software life cycle for requirements definition; program management; design and engineering; test and evaluation; and field operation and maintenance presented in our works [6-9, 14-22]. The model of SUT is designed to consider the system as a whole and to allocate resources to maximize the benefits and credibility of applied M&S class associated with the overall IOSTP with embedded RBOSTP program.
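The idea of allocating limited resources among models, simulations and tests can be illustrated with a very small sketch. It is not the Optimization Model of [22], whose formulation is not given here; it only assumes that each candidate activity has a cost and an expected risk reduction, and it searches all affordable combinations for the one with the largest total benefit. The activity names and numbers are hypothetical.

```python
from itertools import combinations

# Hypothetical candidate activities: (name, cost, expected risk reduction).
# The figures are illustrative only and are not taken from the paper.
ACTIVITIES = [
    ("model review", 2, 2.5),
    ("simulation run", 4, 5.0),
    ("prototype trial", 5, 6.0),
    ("system test", 7, 9.0),
]


def best_plan(activities, budget):
    """Return (names, total_cost, total_benefit) of the best affordable subset."""
    best = ((), 0, 0.0)
    # Exhaustive search over all subsets; acceptable for a handful of candidates.
    for size in range(1, len(activities) + 1):
        for subset in combinations(activities, size):
            cost = sum(item[1] for item in subset)
            benefit = sum(item[2] for item in subset)
            if cost <= budget and benefit > best[2]:
                best = (tuple(item[0] for item in subset), cost, benefit)
    return best


if __name__ == "__main__":
    names, cost, benefit = best_plan(ACTIVITIES, budget=10)
    print(names, cost, benefit)
```

For realistic numbers of activities an exhaustive search becomes impractical, and a knapsack-style or simulation-based optimization would be the more natural choice.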

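The EVM side of the integration can be made concrete with the standard earned value indicators. The sketch below is an illustration under assumptions: the paper does not list the formulas it uses, so the usual definitions are taken (cost variance EV − AC, schedule variance EV − PV, CPI = EV/AC, SPI = EV/PV, and an estimate at completion of BAC/CPI), and the class name and sample figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EffortStatus:
    """Cumulative status of a testing effort, in any consistent cost unit."""
    planned_value: float  # PV: budgeted cost of the test work scheduled so far
    earned_value: float   # EV: budgeted cost of the test work actually completed
    actual_cost: float    # AC: actual cost of the completed test work

    @property
    def cost_variance(self) -> float:
        return self.earned_value - self.actual_cost

    @property
    def schedule_variance(self) -> float:
        return self.earned_value - self.planned_value

    @property
    def cpi(self) -> float:
        # Cost performance index; assumes some cost has already been incurred.
        return self.earned_value / self.actual_cost

    @property
    def spi(self) -> float:
        # Schedule performance index; assumes some work was already planned.
        return self.earned_value / self.planned_value

    def estimate_at_completion(self, budget_at_completion: float) -> float:
        # Assumes the cost efficiency observed so far persists: EAC = BAC / CPI.
        return budget_at_completion * self.actual_cost / self.earned_value


if __name__ == "__main__":
    status = EffortStatus(planned_value=100.0, earned_value=80.0, actual_cost=100.0)
    print(status.cost_variance, status.schedule_variance)
    print(status.cpi, status.spi)
    print(status.estimate_at_completion(500.0))
```

Indices below 1 signal that the testing effort is over cost or behind schedule, which is the kind of project-level signal that can then be rolled up to higher organizational levels.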
Test engineers and operational evaluators play a key role in evaluating system/software performance throughout the life cycle. They identify:
• The information needed and when it must be available. This includes understanding the performance drivers and the critical issues to be resolved.
• The exact priority for what must be modeled first, then simulated, and then tested. This includes learning about the subcomponent level, the components, and the system level.
• The analysis method to be used for each issue to be resolved. Timing may have a significant effect on this. The design function can use models before any hardware is available. It will always be more expedient to use models and simulations at the early stage of system design. However, the design itself may be affected by operational considerations that require examination of real tests or exercise data. It will, given the training and logistic information required of systems today, be prudent in the long run to develop appropriate models and simulations.
• The data requirements and format for the analysis chosen. Included in this determination is the availability of instrumentation, not only for collecting performance data, but also for validating appropriate models and simulations.

Models and simulations can vary significantly in size and complexity and can be useful tools in several respects. They can be used to conduct predictive analyses for developing plans for test activities, for assisting test planners in anticipating problem areas, and for comparison of predictions to collected data. Validated models and simulations can also be used to examine test article and instrumentation configurations, scenario differences, conduct what-if tradeoffs and sensitivity analyses, and to extend test results.

Testing usually provides highly credible data, but safety, environmental, and other constraints can limit operational realism, and range cost and scheduling can be limiting factors. Modeling, especially credible model building, may be very expensive although M&S can be available before hardware is ready to test. A prudent mix of simulation and testing is needed to ensure that some redesign is possible (based on M&S) before manufacturing begins.

While developing the Software Test strategy, the program office with test-team must also develop a plan to identify and fund resources that support the evaluation. In determining the best source of data to support analyses, IOSTP with embedded RBOSTP considers credibility and cost. Resources for simulations and software test events are weighed against desired confidence levels and the limitations of both the resources and the analysis methods. The program manager works with the test engineers to use IOSTP with embedded RBOSTP to develop a comprehensive evaluation strategy that uses data from the most cost-effective sources; this may be a combination of archived, simulation, and software test event data, each one contributing to addressing the issues for which it is best suited.

Success with IOSTP with embedded RBOSTP does not come easy, nor is it free. IOSTP with embedded RBOSTP, by integrating M&S with software testing techniques, provides additional sources of early data and alternative analysis methods, not generally available in software tests by themselves. It seeks the total integration of IOSTP with embedded RBOSTP resources to optimize the evaluation of system/software worth throughout the life cycle. The central elements of IOSTP with embedded RBOSTP are: the acquisition of information that is credible; avoiding duplication throughout the life cycle; and the reuse of data, tools, and information.

Measurement and Metrics
The IOSTP approach stresses defining processes and establishing strategic checkpoints to determine process health using accurate measurement and open communications. Defining and using process-focused metrics provides for early feedback and continuous monitoring and management of IOSTP activities [38,39]. Metrics should be structured to identify the overall effects of IOSTP implementation. Measures of success can include schedule, communications, responsiveness and timeliness. Additional important measures include productivity, customer satisfaction and cycle time. Defect metrics are drivers of an IOSTP with embedded RBOSTP. A defect is defined as an instance where the product does not meet a specified characteristic. The finding and correcting of defects is a normal part of the software development process. Defects should be tracked formally at each project phase. Data should be collected on effectiveness of methods used to discover defects and to correct the defects. Through defect tracking, an organization can estimate the number and severity of software defects and then focus their resources (staffing, tools, test labs and facilities), release, and decision-making appropriately.

Two metrics provide a top-level summary of defect-related progress and potential problems for a project: defect profile and defect age. The defect profile chart provides a quick summary of the time in the development cycle when the defects were found and the number of defects still open. It is a cumulative graph. The defect age chart provides summary information regarding the defects identified and the average time to fix defects throughout a project. The metric is a snapshot rather than a rate chart reported on a frequent basis. In addition, this measure provides a top-level summary of the ability of the organization to successfully resolve identified defects in an efficient and predictable manner. If this metric indicates that problems are accumulating in the longer time periods, a follow-up investigation should be initiated to determine the cause. The metric evaluates the rolling wave risk where a project defers difficult problems while correcting easier or less complex problems. In addition this measure will indicate the ability of the organization to successfully resolve identified defects in an efficient and predictable manner. If this metric indicates that problems are taking longer than expected to close, the schedule and cost risks increase in likelihood and a problem may be indicated in the process used to correct problems and potentially in the resources assigned. If a large number of fixes are ineffective, then the process used for corrections should be analyzed and corrected.

The final test metric relates to technical performance testing. The issues in this area vary by type of software being developed, but top-level metrics should be collected and displayed related to performance for any medium- or high-technical risk areas in the development. The maximum rework rate was in the requirements which were not inspected and which were the most subject to interpretation. Resolution of the defects and after the fact inspections reduced the rework dramatically because of Defect Containment. Defect

Project at www.cs-solutions.com. We suggest, also, @RISK for Project Professional from Palisade Corporation, also an add-in to Project at www.palisade.com. Pertmaster from Pertmaster LTD (UK) at www.pertmaster.com reads MS Project and Primavera files and performs simulations. Pertmaster is substituting for an older product, Monte Carlo from Primavera Systems, which links to Primavera Project Planner (P3) www.primavera.com. Risk+ User’s Guide provides a basic introduction to the risk analysis process. The risk analysis process is divided into the following five steps.
1.
The first step is to plan our IOSTP with containment metric tracks the persistence of software embedded RBOSTP project. It is important to note defects through the life cycle. It measures the that the project must be a complete critical path effectiveness of development and verification activities. network to achieve meaningful risk analysis results. Defects that survive across multiple life-cycle phases Characteristics of a good critical path network suggest the need to improve the processes applied model are: during those phases. The Defect Age Metric will There are no constraint dates. summarize the average time to fix defects. The purpose Lowest level tasks have both of this metric is to determine the efficiency of the defect predecessors and successors. removal process and, more importantly, the risk, Over 80% of the relationships are finish difficulty and focus on correcting difficult defects in a to start. timely fashion. The metric is a snapshot rather than rate In the Risk + tutorial, we use the DEMO.MPP project chart reported on a frequent basis. The metric evaluates file, which has the characteristics of a good critical path the rolling wave risk where a project defers difficult network model. Since the scheduling process itself is problems while correcting easier or less complex well covered in the Project manual we won't repeat it problems. In addition this measure will indicate the here. ability of the organization to successfully resolve 2. The second step is to identify the key or high identified defects in an efficient and predictable manner. risk tasks for which statistical data will be If this metric indicates that problems are taking longer collected. Risk + calls these Reporting Tasks. 
than expected to close the schedule and cost risks Collecting data on every task is possible; however, increase in likelihood and a problem may be indicated it adds little value and consumes valuable system in the process used to correct problems and in resources. In this step you should also identify the potentially in the resources assigned [22]. Preview Task to be displayed during simulation processing. Risk Management implemented in IOSTP 3. The third step requires the entry of risk In order to implement RBOST we use one of parameters for each non-summary task. For each favorite schedule risk software includes RISK+ non-summary task enter a low, high, and a most from C/S Solutions, Inc an add-in to Microsoft likely estimate for duration and/or cost. Next,

assign a probability distribution curve to the cost and duration ranges. The probability distribution curve guides Risk+ in the selection of sample costs and durations within the specified range. See the section titled "Selecting a Probability Distribution Curve" in the Risk+ manual for more information on selecting a curve type. Update options such as "Quick Setup" and "Global Edit" can dramatically reduce the effort required to update the risk parameters.
4. The fourth step is to run the risk analysis. Enter the number of iterations to run for the simulation, and select the options related to the collection of schedule and cost data. For each iteration of the simulation, the Monte Carlo engine will select a random duration and cost for each task (based upon its range of inputs and its probability distribution curve), and recalculate the entire schedule network. Results from each iteration are stored for later analysis.
5. The fifth and final step is to analyze the simulation results. Depending on the options selected, Risk+ will generate one or more of the following outputs:
   - Earliest, expected, and latest completion date for each reporting task
   - Graphical and tabular displays of the completion date distribution for each reporting task
   - The standard deviation and confidence interval for the completion date distribution for each reporting task
   - The criticality index (percentage of time on the critical path) for each task
   - The duration mean and standard deviation for each task
   - Minimum, expected, and maximum cost for the total project
   - Graphical and tabular displays of cost distribution for the total project
   - The standard deviation and confidence interval for cost at the total project level

Risk+ provides a number of predefined reports and views to assist in analyzing these outputs. In addition, you can use Project's reporting facilities to generate custom reports to suit your particular needs.

Project cost and schedule estimates often seem to be disconnected. When the optimistic estimate of schedule is retained, in the face of facts to the contrary, while producing an estimate of cost, cost is underestimated. Further, when the risk of schedule is disregarded in estimating cost risk, that cost risk is underestimated. In reality cost and schedule are related, and both estimates must include the risk factors of the estimating process, because the cost and time estimates of test tasks are uncertain; the RBOSTP optimization model includes this uncertainty through the constraints of equation (1). The strategy for integration of schedule and risk begins with an analysis of the risk of the schedule [21,22].

The role of the Six Sigma strategy in software development/testing process

In order to assure a controlled and stable (predictive) testing process in the time, budget and software quality space, we need to model, measure and analyze the software testing process by applying Six Sigma methodology across the IOSTP solution, as presented in our works [15-19]. The name, Six Sigma, derives from a statistical measure of a process’s capability relative to customer specifications. Six Sigma is a mantra that many of the most successful organizations in the world swear by, and the trend is getting hotter by the day. Six Sigma insists on active management engagement and involvement, it insists on a financial business case for every improvement, it insists on focusing on only the most important business problems, and it provides clearly defined methodology, tools, role definitions, and metrics to ensure success.

So, what has this to do with software? The key idea to be examined in this article is the notion that estimated costs, schedule delays, software functionality or quality of software projects are often different than expected based on industry experience. Six Sigma tools and methods can reduce these risks dramatically. Six Sigma (6σ) deployment in SDP/STP follows DMAIC, for “Define, Measure, Analyze, Improve, and Control”, which organizes the intelligent control and improvement of the existing software test process [15-16]. Experience with 6σ has demonstrated, in many different businesses and industry segments, that the payoff can be quite substantial, but that it is also critically dependent on how it is deployed. In this paper we concentrate on our successful approach to STP improvement by applying Six Sigma. The importance of an effective deployment strategy is no less in software than in manufacturing or transactional environments.

The main contribution of our works [15-19] is mapping best practices in Software Engineering, Design of Experiments, Statistical Process Control, Risk Management, Modeling & Simulation, Robust Test and V&V etc. to deploy Six Sigma to the Software Testing Process (STP). With the Six Sigma strategy deployed in our IOSTP framework to significantly improve software testing efficiency and effectiveness in detecting and removing requirements and design defects, over 3 years of 6σ deployment to STP we calculated the overall value returned on each dollar invested, i.e. an ROI of 100:1.

5 Conclusions

In software development organizations, increased complexity of product, shortened development cycles, and higher customer expectations of quality prove that software testing has become extremely important