Why isn't Oracle using my index ?!

The question in the title of this piece is probably the single most frequently occurring question that appears in the Metalink forums and Usenet newsgroups. This article uses a test case that you can rebuild on your own systems to demonstrate the most fundamental issues with how cost-based optimisation works. And at the end of the article, you should be much better equipped to give an answer the next time you hear that dreaded question.

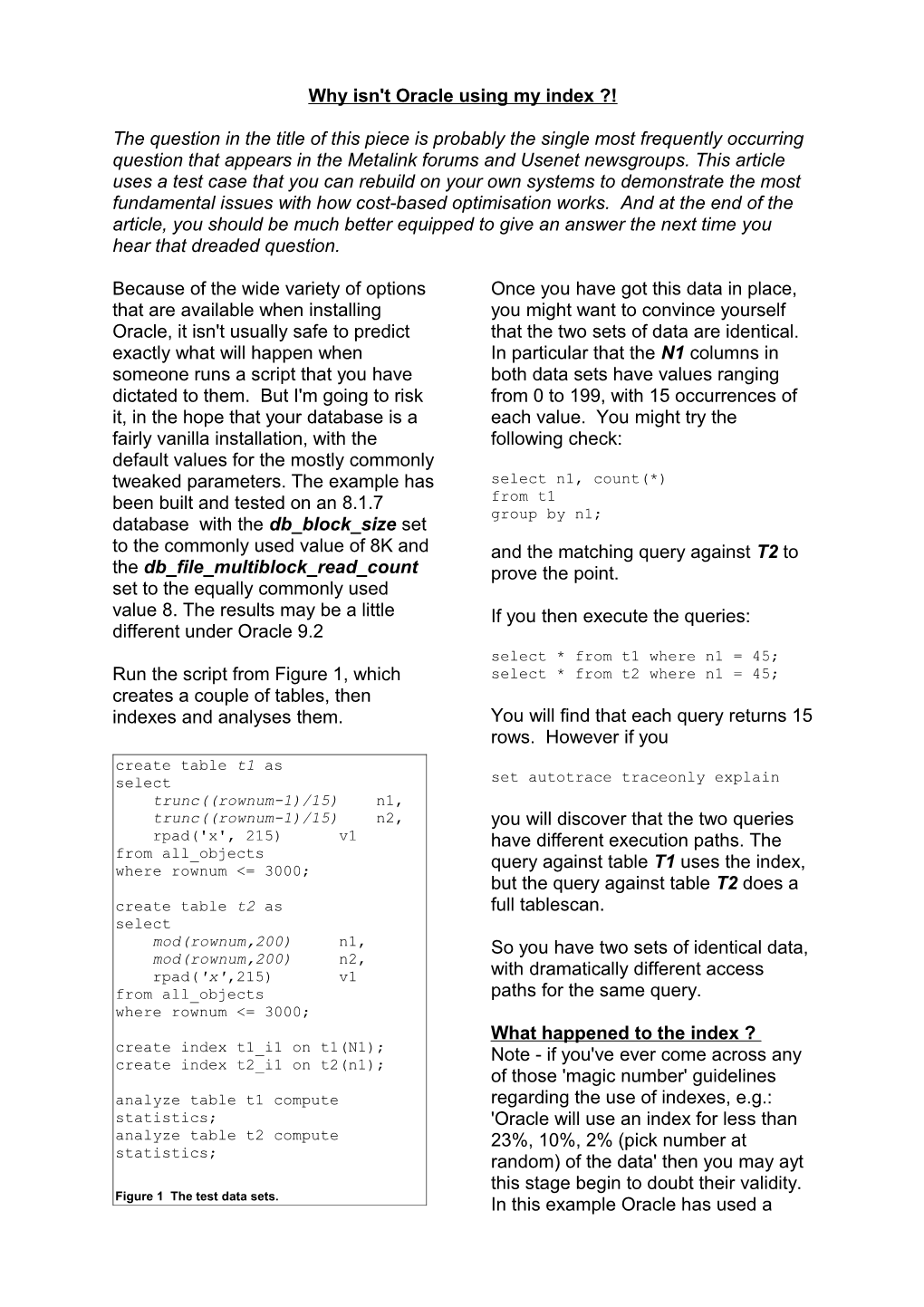

Because of the wide variety of options that are available when installing Oracle, it isn't usually safe to predict exactly what will happen when someone runs a script that you have dictated to them. But I'm going to risk it, in the hope that your database is a fairly vanilla installation, with the default values for the most commonly tweaked parameters. The example has been built and tested on an 8.1.7 database with the db_block_size set to the commonly used value of 8K and the db_file_multiblock_read_count set to the equally commonly used value 8. The results may be a little different under Oracle 9.2.

Run the script from Figure 1, which creates a couple of tables, then indexes and analyses them.

    create table t1 as
    select
        trunc((rownum-1)/15)  n1,
        trunc((rownum-1)/15)  n2,
        rpad('x', 215)        v1
    from all_objects
    where rownum <= 3000;

    create table t2 as
    select
        mod(rownum,200)       n1,
        mod(rownum,200)       n2,
        rpad('x',215)         v1
    from all_objects
    where rownum <= 3000;

    create index t1_i1 on t1(n1);
    create index t2_i1 on t2(n1);

    analyze table t1 compute statistics;
    analyze table t2 compute statistics;

Figure 1 The test data sets.

Once you have got this data in place, you might want to convince yourself that the two sets of data are identical. In particular, check that the N1 columns in both data sets have values ranging from 0 to 199, with 15 occurrences of each value. You might try the following check:

    select n1, count(*)
    from t1
    group by n1;

and the matching query against T2 to prove the point.

If you then execute the queries:

    select * from t1 where n1 = 45;
    select * from t2 where n1 = 45;

you will find that each query returns 15 rows. However, if you

    set autotrace traceonly explain

you will discover that the two queries have different execution paths. The query against table T1 uses the index, but the query against table T2 does a full tablescan.

So you have two sets of identical data, with dramatically different access paths for the same query.

What happened to the index ?

Note - if you've ever come across any of those 'magic number' guidelines regarding the use of indexes, e.g.: 'Oracle will use an index for less than 23%, 10%, 2% (pick number at random) of the data' then you may at this stage begin to doubt their validity. In this example Oracle has used a tablescan for 15 rows out of 3,000, i.e. for just one half of one percent of the data !

To investigate problems like this, there is one very simple ploy that I always try as the first step. Put in some hints to make Oracle do what I think it ought to be doing, and see if that gives me any clues.

In this case, a simple hint:

    /*+ index(t2, t2_i1) */

is sufficient to switch Oracle from the full tablescan to the indexed access path. The three paths with costs (abbreviated to C=nnn) are shown in Figure 2:

    select * from t1 where n1 = 45;

    EXECUTION PLAN
    ------
    TABLE ACCESS BY INDEX ROWID OF T1 (C=2)
      INDEX(RANGE SCAN) OF T1_I1 (C=1)

    select * from t2 where n1 = 45;

    EXECUTION PLAN
    ------
    TABLE ACCESS FULL OF T2 (C=15)

    select /*+ index(t2 t2_i1) */
        *
    from t2
    where n1 = 45;

    EXECUTION PLAN
    ------
    TABLE ACCESS BY INDEX ROWID OF T2 (C=16)
      INDEX(RANGE SCAN) OF T2_I1 (C=1)

Figure 2 The different queries and their costs.

So why hasn't Oracle used the index by default for the T2 query ? Easy - as the execution plan shows, the cost of doing the tablescan is cheaper than the cost of using the index.

Why is the tablescan cheaper ?

This, of course, is simply begging the question. Why is the cost of the tablescan cheaper than the cost of using the index ?

By looking into this question you uncover the key mechanisms (and critically erroneous assumptions) of the Cost Based Optimiser.

Let's start by examining the indexes by running the query:

    select
        table_name,
        blevel,
        avg_data_blocks_per_key,
        avg_leaf_blocks_per_key,
        clustering_factor
    from user_indexes;

The results are given in the table below:

                          T1      T2
    Blevel                 1       1
    Data block / key       1      15
    Leaf block / key       1       1
    Clustering factor     96    3000

Note particularly the value for 'data blocks per key'. This is the number of different blocks in the table that Oracle thinks it will have to visit if you execute a query that contains an equality test on a complete key value for this index.

So where do the costs for our queries come from? As far as Oracle is concerned, if we fire in the key value 45 we get the data from table T1 by hitting one index leaf block and one table block - two blocks, so a cost of two.

If we try the same with table T2, we have to hit one index leaf block and fifteen table blocks - a total of 16 blocks, so a cost of 16.

Clearly, according to this viewpoint, the index on table T1 is much more desirable than the index on table T2. This leaves two questions outstanding though.

Where does the tablescan cost come from, and why are the figures for the avg_data_blocks_per_key so different between the two tables ?

The answer to the second question is simple. Look back at the definition of table T1 - it uses the trunc() function to generate the N1 values, dividing "rownum - 1" by 15 and truncating.

    Trunc(675/15) = 45
    Trunc(676/15) = 45
    …
    Trunc(689/15) = 45

All the rows with the value 45 do actually appear one after the other in a tight little clump (probably all fitting in one data block) in the table.

Table T2 uses the mod() function to generate the N1 values, using modulus 200 on the rownum:

    mod(45,200) = 45
    mod(245,200) = 45
    …
    mod(2845,200) = 45

The rows with the value 45 appear at every two hundredth position in the table (probably resulting in no more than one row in every relevant block).

By doing the analyze, Oracle was able to get a perfect description of the data scatter in our table. So the optimiser was able to work out exactly how many blocks Oracle would have to visit to answer our query - and, in simple cases, the number of block visits is the cost of the query.

But why the tablescan ?

So we see that an indexed access into T2 is more expensive than the same path into T1, but why has Oracle switched to the tablescan ?

This brings us to the two simple-minded, and rather inappropriate, assumptions that Oracle makes.

The first is that every block acquisition equates to a physical disk read, and the second is that a multiblock read is just as quick as a single block read.

So what impact do these assumptions have on our experiment ?

If you query the user_tables view with the following SQL:

    select table_name, blocks
    from user_tables;

you will find that our two tables each cover 96 blocks.

At the start of the article, I pointed out that the test case was running a version 8 system with the value 8 for the db_file_multiblock_read_count.

Roughly speaking, Oracle has decided that it can read the entire 96 block table in 96/8 = 12 disk read requests.

Since it takes 16 block (= disk read) requests to access the table by index, it is clearly quicker (from Oracle's sadly deluded perspective) to scan the table - after all 12 is less than 16.

Voila ! If the data you are targeting is suitably scattered across the table, you get tablescans even for a very small percentage of the data - a problem that can be exaggerated in the case of very big blocks and very small rows.

Correction

In fact you will have noticed that my calculated number of scan reads was 12, whilst the cost reported in the execution plan was 15. It is a slight simplification to say that the cost of a tablescan (or an index fast full scan for that matter) is

    'number of blocks' / db_file_multiblock_read_count.

Oracle uses an 'adjusted' multi-block read value for the calculation (although it then tries to use the actual requested size when the scan starts to run).

For reference, the following table compares a few of the actual and adjusted values:

    Actual    Adjusted
         4       4.175
         8       6.589
        16      10.398
        32      16.409
        64      25.895
       128      40.865

As you can see, Oracle makes some attempt to protect you from the error of supplying an unfeasibly large value for this parameter.

There is a minor change in version 9, by the way, where the tablescan cost is further adjusted by adding one to the result of the division - which means tablescans in v9 are generally just a little more expensive than in v8, so indexes are just a little more likely to be used.

Adjustments:

We have seen that there are two assumptions built into the optimizer that are not very sensible.

- A single block read costs just as much as a multi-block read - (not really likely, particularly when running on file systems without directio)
- A block access will be a physical disk read - (so what is the buffer cache for ?)

Since the early days of Oracle 8.1, there have been a couple of parameters that allow us to correct these assumptions in a reasonably truthful way.

See Tim Gorman's article for a proper description of these parameters, but briefly:

Optimizer_index_cost_adj takes a value between 1 and 10000 with a default of 100. Effectively, this parameter describes how cheap a single block read is compared to a multiblock read. For example the value 30 (which is often a suitable first guess for an OLTP system) would tell Oracle that a single block read costs 30% of a multiblock read. Oracle would therefore incline towards using indexed access paths for low values of this parameter.

Optimizer_index_caching takes a value between 0 and 100 with a default of 0. This tells Oracle to assume that that percentage of index blocks will be found in the buffer cache. In this case, setting values close to 100 encourages the use of indexes over tablescans.

The really nice thing about both these parameters is that they can be set to 'truthful' values.

Set the optimizer_index_caching to something in the region of the 'buffer cache hit ratio'. (You have to make your own choice about whether this should be the figure derived from the default pool, keep pool or both).

The optimizer_index_cost_adj is a little more complicated. Check the typical wait times in v$system_event for the events 'db file scattered read' (multi block reads) and 'db file sequential read' (single block reads). Divide the latter by the former and multiply by one hundred.

Improvements

Don't forget that the two parameters may need to be adjusted at different times of the day and week to reflect the end-user work-load. You can't just derive one pair of figures, and use them for ever.

Happily, in Oracle 9, things have improved. You can now collect system statistics, which originally included just four:

- Average single block read time
- Average multi block read time
- Average actual multiblock read
- Notional usable CPU speed.

Suffice it to say that this feature is worth an article in its own right - but do note that the first three allow Oracle to discover the truth about the cost of multi block reads. And in fact, the CPU speed allows Oracle to work out the CPU cost of unsuitable access mechanisms like reading every single row in a block to find a specific data value and behave accordingly.

When you migrate to version 9, one of the first things you should investigate is the correct use of system statistics. This one feature alone may reduce the amount of time you spend trying to 'tune' awkward SQL.

In passing, despite the wonderful effect of system statistics both of the optimizer adjusting parameters still apply - although the exact formula for their use seems to have changed between version 8 and version 9.

Variations on a theme.

Of course, I have picked one very special case - equality on a single column non-unique index, where there are no nulls in the table - and treated it very simply. (I haven't even mentioned the relevance of the index blevel and clustering_factor yet). There are numerous different strategies that Oracle uses to work out more general cases.

Consider some of the cases I have conveniently overlooked:

- Multi-column indexes
- Part-used multi-column indexes
- Range scans
- Unique indexes
- Non-unique indexes representing unique constraints
- Index skip scans
- Index only queries
- Bitmap indexes
- Effects of nulls

The list goes on and on. There is no one simple formula that tells you how Oracle works out a cost - there is only a general guideline that gives you the flavour of the approach and a list of different formulae that apply in different cases.

However, the purpose of this article was to make you aware of the general approach and the two assumptions built into the optimiser's strategy. And I hope that this may be enough to take you a long way down the path of understanding the (apparently) strange things that the optimiser has been known to do.

Further Reading:

Tim Gorman: www.evdbt.com. The search for Intelligent Life in the Cost Based Optimiser.

Wolfgang Breitling: www.centrexcc.com. Looking under the hood of the CBO.
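The cost arithmetic used in the article (an index cost of 2 for T1 against 16 for T2, and a tablescan estimate of 12 that the execution plan reports as 15) can be replayed with a small Python sketch. It is only an illustration of the simplified model described in the text, not Oracle's actual algorithm. The function names are hypothetical, the adjusted read values are copied from the table in the article, and rounding the tablescan cost up to a whole number is an assumption made here to match the reported figure of 15.

```python
import math

# Adjusted multiblock read values quoted in the article (actual -> adjusted).
ADJUSTED_MBRC = {4: 4.175, 8: 6.589, 16: 10.398,
                 32: 16.409, 64: 25.895, 128: 40.865}


def index_cost(leaf_blocks_per_key, data_blocks_per_key):
    """Simplified equality-probe cost: one visit per leaf block and table block."""
    return leaf_blocks_per_key + data_blocks_per_key


def naive_scan_cost(table_blocks, mbrc):
    """First approximation from the text: blocks / db_file_multiblock_read_count."""
    return math.ceil(table_blocks / mbrc)


def adjusted_scan_cost(table_blocks, mbrc):
    """Tablescan cost using the adjusted read size (rounding up is an assumption)."""
    return math.ceil(table_blocks / ADJUSTED_MBRC[mbrc])


def suggested_index_cost_adj(avg_single_block_wait, avg_multi_block_wait):
    """Single block wait as a percentage of multiblock wait, per the article."""
    return round(100 * avg_single_block_wait / avg_multi_block_wait)


if __name__ == "__main__":
    print("T1 via index :", index_cost(1, 1))
    print("T2 via index :", index_cost(1, 15))
    print("Scan (naive) :", naive_scan_cost(96, 8))
    print("Scan (adj.)  :", adjusted_scan_cost(96, 8))
```

With the figures from the test case, the adjusted tablescan cost still comes out below the indexed cost for T2, which is why the optimiser picks the scan. The last helper takes whatever average wait times you have measured for single block and multiblock reads; the units do not matter as long as both use the same one.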

Jonathan Lewis is a freelance consultant with more than 17 years' experience of Oracle. He specialises in physical database design and the strategic use of the Oracle database engine, is author of 'Practical Oracle 8i - Designing Efficient Databases' published by Addison-Wesley, and is one of the best-known speakers on the UK Oracle circuit. Further details of his published papers, presentations, seminars and tutorials can be found at www.jlcomp.demon.co.uk, which also hosts The Co-operative Oracle Users' FAQ for the Oracle-related Usenet newsgroups.