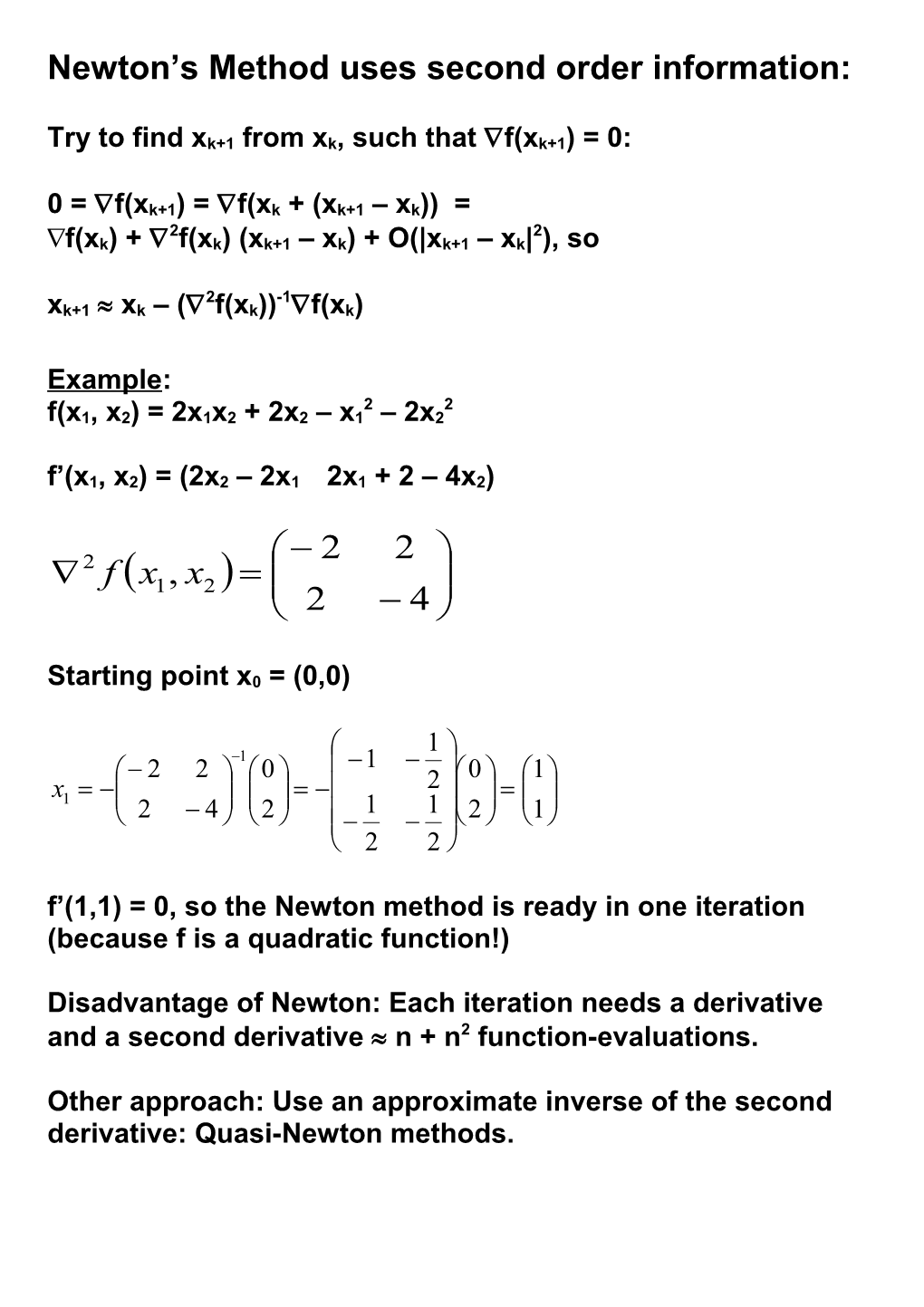

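Newton's method uses second-order information. Starting from $x_k$, try to find $x_{k+1}$ such that $\nabla f(x_{k+1}) = 0$. A Taylor expansion of the gradient around $x_k$ gives

$$0 = \nabla f(x_{k+1}) = \nabla f\big(x_k + (x_{k+1} - x_k)\big) = \nabla f(x_k) + \nabla^2 f(x_k)\,(x_{k+1} - x_k) + O\big(\|x_{k+1} - x_k\|^2\big),$$

so, dropping the remainder term,

$$x_{k+1} = x_k - \big(\nabla^2 f(x_k)\big)^{-1} \nabla f(x_k).$$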

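Example: $f(x_1, x_2) = 2x_1x_2 + 2x_2 - x_1^2 - 2x_2^2$, with

$$\nabla f(x_1, x_2) = \begin{pmatrix} 2x_2 - 2x_1 \\ 2x_1 + 2 - 4x_2 \end{pmatrix}, \qquad \nabla^2 f(x_1, x_2) = \begin{pmatrix} -2 & 2 \\ 2 & -4 \end{pmatrix}.$$

Starting point $x_0 = (0, 0)$:

$$x_1 = \begin{pmatrix} 0 \\ 0 \end{pmatrix} - \begin{pmatrix} -2 & 2 \\ 2 & -4 \end{pmatrix}^{-1} \begin{pmatrix} 0 \\ 2 \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \end{pmatrix} - \begin{pmatrix} -1 & -\tfrac{1}{2} \\ -\tfrac{1}{2} & -\tfrac{1}{2} \end{pmatrix} \begin{pmatrix} 0 \\ 2 \end{pmatrix} = \begin{pmatrix} 1 \\ 1 \end{pmatrix}.$$

$\nabla f(1, 1) = 0$, so Newton's method converges in one iteration (because $f$ is a quadratic function, its second-order Taylor model is exact).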
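The Newton iteration for this example can be sketched in Python with NumPy. This is a minimal illustration, not a general solver: the gradient and the (constant) Hessian are hard-coded for this particular $f$, and the sketch solves the linear system $\nabla^2 f(x_k)\,s = \nabla f(x_k)$ instead of forming the inverse explicitly, which is a common numerical choice rather than something the notes prescribe. The names `grad`, `hessian` and `newton` are illustrative.

```python
import numpy as np

def grad(x):
    """Gradient of f(x1, x2) = 2*x1*x2 + 2*x2 - x1**2 - 2*x2**2."""
    x1, x2 = x
    return np.array([2 * x2 - 2 * x1, 2 * x1 + 2 - 4 * x2])

def hessian(x):
    """Hessian of f; it is constant because f is quadratic, so x is unused."""
    return np.array([[-2.0, 2.0], [2.0, -4.0]])

def newton(x0, tol=1e-10, max_iter=50):
    """Iterate x_{k+1} = x_k - H(x_k)^{-1} grad(x_k) until the gradient vanishes.

    Returns the final point and the number of Newton steps taken.
    """
    x = np.asarray(x0, dtype=float)
    for k in range(max_iter):
        g = grad(x)
        if np.linalg.norm(g) < tol:
            return x, k
        # Solve H s = g rather than inverting H explicitly
        x = x - np.linalg.solve(hessian(x), g)
    return x, max_iter

x_star, steps = newton([0.0, 0.0])
print(x_star, steps)  # a single step lands on the stationary point (1, 1)
```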

Disadvantage of Newton's method: each iteration needs the gradient and the Hessian, i.e. $n + n^2$ function evaluations.

Another approach is to use an approximation of the inverse of the Hessian: quasi-Newton methods.

Multidimensional optimization without derivatives:

Downhill simplex (Nelder-Mead, 1965) (“amoeba”)

Start with n+1 points in n dimensions (a simplex).

Reflect the highest point in the opposite face (through the centroid of the other n points); call the reflected point $P_r$:

If the value at $P_r$ lies between the lowest and the second highest vertex values, replace the highest point by $P_r$. If the value is lower than at the lowest point, the value at $P_{rr}$, further along the same direction, is also checked (expansion), and the lower of the two replaces the highest point. If the value at $P_r$ is larger than the highest value, the value at $P_{rr'}$ (between the highest point and the opposite face) is checked, and it replaces the highest point if it is lower (contraction).

If the value at $P_r$ lies between the highest and the second highest value, the value at $P_{rr''}$ (between the opposite face and $P_r$) is checked, and it replaces the highest point if it is lower.

If no replacement takes place, contract the whole simplex towards the lowest point (multiple contraction).
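The rules above can be sketched as a short Python function. The coefficients for the trial points (reflection 1, expansion 2, contraction 1/2, shrinking by 1/2 towards the lowest point) are the usual textbook values and are an assumption here, since the notes do not fix where $P_{rr}$, $P_{rr'}$ and $P_{rr''}$ lie. The notes accept a contracted point "if it is lower"; this sketch compares against $f(P_r)$ for $P_{rr''}$ and against the highest value for $P_{rr'}$, which is the common convention. The function `nelder_mead` and its parameters are illustrative names, and the test function is minus the $f$ from the Newton example (its minimum $-1$ is at $(1, 1)$).

```python
import numpy as np

def nelder_mead(f, x0, step=1.0, tol=1e-10, max_iter=2000):
    """Minimize f with the downhill simplex rules described above (a sketch)."""
    n = len(x0)
    # Initial simplex: x0 plus one step along each coordinate axis (n+1 points)
    simplex = [np.asarray(x0, dtype=float)]
    for i in range(n):
        p = simplex[0].copy()
        p[i] += step
        simplex.append(p)
    values = [f(p) for p in simplex]

    for _ in range(max_iter):
        # Sort so that index 0 is the lowest, -2 the second highest, -1 the highest
        order = np.argsort(values)
        simplex = [simplex[i] for i in order]
        values = [values[i] for i in order]
        if values[-1] - values[0] < tol:
            break
        # Centroid of the face opposite the highest point
        centroid = np.mean(simplex[:-1], axis=0)
        direction = centroid - simplex[-1]
        f_low, f_second, f_high = values[0], values[-2], values[-1]

        p_r = centroid + direction                  # reflection P_r
        f_r = f(p_r)
        if f_low <= f_r <= f_second:
            simplex[-1], values[-1] = p_r, f_r
            continue
        if f_r < f_low:
            p_rr = centroid + 2.0 * direction       # expansion P_rr
            f_rr = f(p_rr)
            if f_rr < f_r:
                simplex[-1], values[-1] = p_rr, f_rr
            else:
                simplex[-1], values[-1] = p_r, f_r
            continue
        if f_r < f_high:
            p_c = centroid + 0.5 * direction        # P_rr'': between the face and P_r
            f_accept = f_r
        else:
            p_c = centroid - 0.5 * direction        # P_rr': between the highest point and the face
            f_accept = f_high
        f_c = f(p_c)
        if f_c < f_accept:
            simplex[-1], values[-1] = p_c, f_c
            continue
        # No replacement: shrink every vertex halfway towards the lowest point
        low = simplex[0]
        simplex = [low] + [low + 0.5 * (p - low) for p in simplex[1:]]
        values = [values[0]] + [f(p) for p in simplex[1:]]

    best = int(np.argmin(values))
    return simplex[best], values[best]

def neg_f(x):
    """Minus f from the Newton example; its minimum -1 is at (1, 1)."""
    x1, x2 = x
    return -(2 * x1 * x2 + 2 * x2 - x1**2 - 2 * x2**2)

x_best, f_best = nelder_mead(neg_f, [0.0, 0.0])
print(x_best, f_best)
```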

This algorithm is slow, but robust in practice, and it needs no derivatives.

Types of NLP problems (Ch. 12.3)

- NLP without constraints. Only a non-linear objective function is given, without constraints, and an optimum must be found. Necessary condition: $\nabla f(x) = 0$. In general, this is a system of non-linear equations that cannot be solved analytically.

- NLP with linear constraints. Non-linear objective function with linear constraints.

- Quadratic programming. The objective function is quadratic and the constraints are linear.

- Convex programming. The objective function is convex (for minimization) or concave (for maximization), and the constraints are of the form $g_i(x) \le b_i$ with $g_i$ convex, so the feasible region is convex. Any local optimum is then a global optimum.

- Separable programming. A convex programming problem in which the objective function and the constraint functions are separable, i.e. each is a sum of functions of the individual coordinates: $f(x_1, \dots, x_n) = \sum_{i=1}^{n} f_i(x_i)$.

- Non-convex programming. All other non-linear programming problems. These can have many local optima.

- Fractional programming. The objective function is of the form $f(x) = f_1(x)/f_2(x)$. These problems can sometimes be transformed into simpler problems.

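Example:

$$\max\ f(x) = \frac{c^T x + c_0}{d^T x + d_0} \quad \text{s.t.} \quad Ax \le b,\ x \ge 0.$$

Assuming $d^T x + d_0 > 0$ on the feasible region, let

$$y = \frac{x}{d^T x + d_0}, \qquad t = \frac{1}{d^T x + d_0}$$

($y$ is a vector, $t$ is a scalar). Then the problem becomes

$$\max\ Z = c^T y + c_0 t \quad \text{s.t.} \quad Ay - bt \le 0,\ d^T y + d_0 t = 1,\ y \ge 0,\ t \ge 0.$$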

This is an LP problem and can be solved with the simplex method.
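A quick check of the substitution, written as a Python sketch with exact fractions: it maps one feasible point of a small fractional program to $(y, t)$ and confirms that the LP constraints hold, that $Z$ equals $f(x)$, and that $x = y/t$ recovers the original point. The data $c, c_0, d, d_0, A, b$, the point $x$, and the helper names are hypothetical, chosen only for illustration, and the sketch assumes $d^T x + d_0 > 0$; it does not solve the LP itself.

```python
from fractions import Fraction as Fr

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def to_lp_variables(x, d, d0):
    """Map x to (y, t) with t = 1/(d^T x + d0) and y = t*x."""
    denom = dot(d, x) + d0
    assert denom > 0, "the transformation assumes a positive denominator"
    t = 1 / denom
    return [t * xi for xi in x], t

def from_lp_variables(y, t):
    """Recover x = y / t (requires t > 0)."""
    return [yi / t for yi in y]

# Hypothetical problem data (not from the notes): one constraint x1 + x2 <= 4
c, c0 = [Fr(2), Fr(1)], Fr(1)
d, d0 = [Fr(1), Fr(1)], Fr(2)
A, b = [[Fr(1), Fr(1)]], [Fr(4)]

x = [Fr(1), Fr(2)]                              # feasible for the original problem
y, t = to_lp_variables(x, d, d0)

ratio = (dot(c, x) + c0) / (dot(d, x) + d0)     # original objective f(x)
z = dot(c, y) + c0 * t                          # LP objective Z
lp_rows = [dot(row, y) - bi * t for row, bi in zip(A, b)]   # Ay - bt, must be <= 0
normalization = dot(d, y) + d0 * t              # d^T y + d0 t, must equal 1
print(y, t, ratio, z, lp_rows, normalization)
```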

- Complementarity problems

Find a feasible solution to $w = F(z)$, $w \ge 0$, $z \ge 0$, $w^T z = 0$ (complementarity condition).
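To make the definition concrete, here is a brute-force Python sketch for the special case where $F$ is affine, $F(z) = Mz + q$ (the linear complementarity problem); that restriction is an assumption, since the notes allow a general $F$. For every index it chooses whether $w_i = 0$ or $z_i = 0$, solves the resulting linear system, and keeps the first choice that also satisfies $w \ge 0$ and $z \ge 0$. This takes $2^n$ trials, so it is only usable for very small $n$. The matrix $M$, the vector $q$, and the function name are hypothetical.

```python
from itertools import product

import numpy as np

def solve_lcp_by_enumeration(M, q, tol=1e-12):
    """Find z >= 0 with w = M z + q >= 0 and w^T z = 0 by trying every pattern."""
    M = np.asarray(M, dtype=float)
    q = np.asarray(q, dtype=float)
    n = len(q)
    for pattern in product([False, True], repeat=n):
        # Indices where z_i may be nonzero; for these, w_i is forced to 0
        basic = [i for i in range(n) if pattern[i]]
        z = np.zeros(n)
        if basic:
            sub = M[np.ix_(basic, basic)]
            try:
                z[basic] = np.linalg.solve(sub, -q[basic])
            except np.linalg.LinAlgError:
                continue                    # singular subsystem: skip this pattern
        w = M @ z + q
        if np.all(z >= -tol) and np.all(w >= -tol):
            return z, w
    return None

M = [[2.0, 1.0], [1.0, 2.0]]                # hypothetical data
q = [-5.0, -6.0]
z, w = solve_lcp_by_enumeration(M, q)
print(z, w, w @ z)
```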

Because $w, z \ge 0$, the condition $w^T z = 0$ means that for each $i$, $w_i = 0$ or $z_i = 0$. There is no objective function.

Frank-Wolfe algorithm (Ch. 12.9)

The sequential linear approximation algorithm (Frank-Wolfe) can be applied to non-linear optimization problems with linear constraints:

$$\max\ f(x) \quad \text{s.t.} \quad Ax \le b,\ x \ge 0$$

The idea is to approximate the function linearly by means of its Taylor expansion around the current approximation $x^k$:

$$f(x) \approx f(x^k) + \nabla f(x^k)^T (x - x^k).$$

Up to a constant, $f$ is thus replaced by its linear approximation $c^T x$, with

$$c_i = \frac{\partial f(x^k)}{\partial x_i}.$$

The resulting LP problem is then solved, and the LP solution is used to get a better approximation to the non-linear problem: a line search finds the best point on the segment between the old approximation and the LP solution.

Example:

$$\max\ f(x_1, x_2) = 5x_1 - x_1^2 + 8x_2 - 2x_2^2 \quad \text{s.t.} \quad 3x_1 + 2x_2 \le 6,\ x_1, x_2 \ge 0$$

Starting point $x^0 = (0, 0)$ is feasible, with objective value 0. The gradient of $f$ is

$$\nabla f(x_1, x_2) = \begin{pmatrix} 5 - 2x_1 \\ 8 - 4x_2 \end{pmatrix}.$$

Without constraints the solution would be $(5/2, 2)$, but this point is not feasible, since $3 \cdot 5/2 + 2 \cdot 2 = 11.5 > 6$.

The linear approximation at $(0, 0)$ is $5x_1 + 8x_2$, so the LP problem

$$\max\ 5x_1 + 8x_2 \quad \text{s.t.} \quad 3x_1 + 2x_2 \le 6,\ x_1, x_2 \ge 0$$

has the optimal solution $x_{LP}^1 = (0, 3)$.

The next approximation is found on the line segment between the starting solution and the LP solution: $(1-t)(0, 0) + t(0, 3) = (0, 3t)$. Now $f(0, 3t) = 24t - 18t^2$ is maximal for $t = 2/3$, so the first approximation is $x^1 = (0, 2)$, with objective value 8. At this point the gradient is $(5, 0)^T$, so the new linear approximation leads to the LP problem

$$\max\ 5x_1 \quad \text{s.t.} \quad 3x_1 + 2x_2 \le 6,\ x_1, x_2 \ge 0$$

The optimal LP solution is $x_{LP}^2 = (2, 0)$.

Now find the best point between $x^1$ and $x_{LP}^2$: $(1-t)(0, 2) + t(2, 0) = (2t, 2-2t)$. The objective value is $f(2t, 2-2t) = 8 + 10t - 12t^2$, which is maximal at $t = 5/12$, so $x^2 = (5/6, 7/6)$, with objective value $121/12 \approx 10.0833$.

The iterates approach the optimal solution $(1, 3/2)$, with objective value 11.5.
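The iterations of this example can be reproduced with a short Python sketch. Two simplifications are assumed: the LP subproblem is solved by comparing the three corners $(0,0)$, $(2,0)$, $(0,3)$ of the feasible triangle (an LP over a bounded polygon attains its optimum at a corner), and the line search uses the closed-form maximizer of a quadratic along the segment. With exact fractions it reproduces $x^1 = (0, 2)$ and $x^2 = (5/6, 7/6)$; a long floating-point run moves the iterates towards $(1, 3/2)$. Names such as `solve_lp` and `line_search` are illustrative, not from the notes.

```python
from fractions import Fraction as Fr

# Example from the notes: maximize f(x1, x2) = 5*x1 - x1**2 + 8*x2 - 2*x2**2
# subject to 3*x1 + 2*x2 <= 6 and x1, x2 >= 0.

def f(x):
    x1, x2 = x
    return 5 * x1 - x1**2 + 8 * x2 - 2 * x2**2

def grad(x):
    x1, x2 = x
    return (5 - 2 * x1, 8 - 4 * x2)

# Corners of the feasible triangle; the LP optimum is attained at one of them
VERTICES = [(0, 0), (2, 0), (0, 3)]

def solve_lp(c):
    """Maximize c^T x over the triangle by comparing its corners."""
    return max(VERTICES, key=lambda v: c[0] * v[0] + c[1] * v[1])

def line_search(x, x_lp):
    """Exactly maximize f(x + t*(x_lp - x)) over t in [0, 1].

    f has Hessian diag(-2, -4), so along the segment it is a concave
    parabola in t; its vertex is clipped to [0, 1].
    """
    d = (x_lp[0] - x[0], x_lp[1] - x[1])
    g = grad(x)
    slope = g[0] * d[0] + g[1] * d[1]       # derivative at t = 0
    curvature = -2 * d[0]**2 - 4 * d[1]**2  # constant second derivative
    if curvature == 0:                      # x already equals x_lp
        return 0
    return min(max(-slope / curvature, 0), 1)

def frank_wolfe(x0, iterations):
    history = [x0]
    x = x0
    for _ in range(iterations):
        x_lp = solve_lp(grad(x))            # maximize the linear approximation
        t = line_search(x, x_lp)
        x = ((1 - t) * x[0] + t * x_lp[0], (1 - t) * x[1] + t * x_lp[1])
        history.append(x)
    return history

# Exact arithmetic: fw_steps[1] == (0, 2), fw_steps[2] == (5/6, 7/6)
fw_steps = frank_wolfe((Fr(0), Fr(0)), iterations=2)
print(fw_steps[1], fw_steps[2], f(fw_steps[2]))

# Floating point for a longer run towards the optimum (1, 3/2)
run = frank_wolfe((0.0, 0.0), iterations=5000)
print(run[-1], f(run[-1]))
```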