Software Appendix

Chapter 3

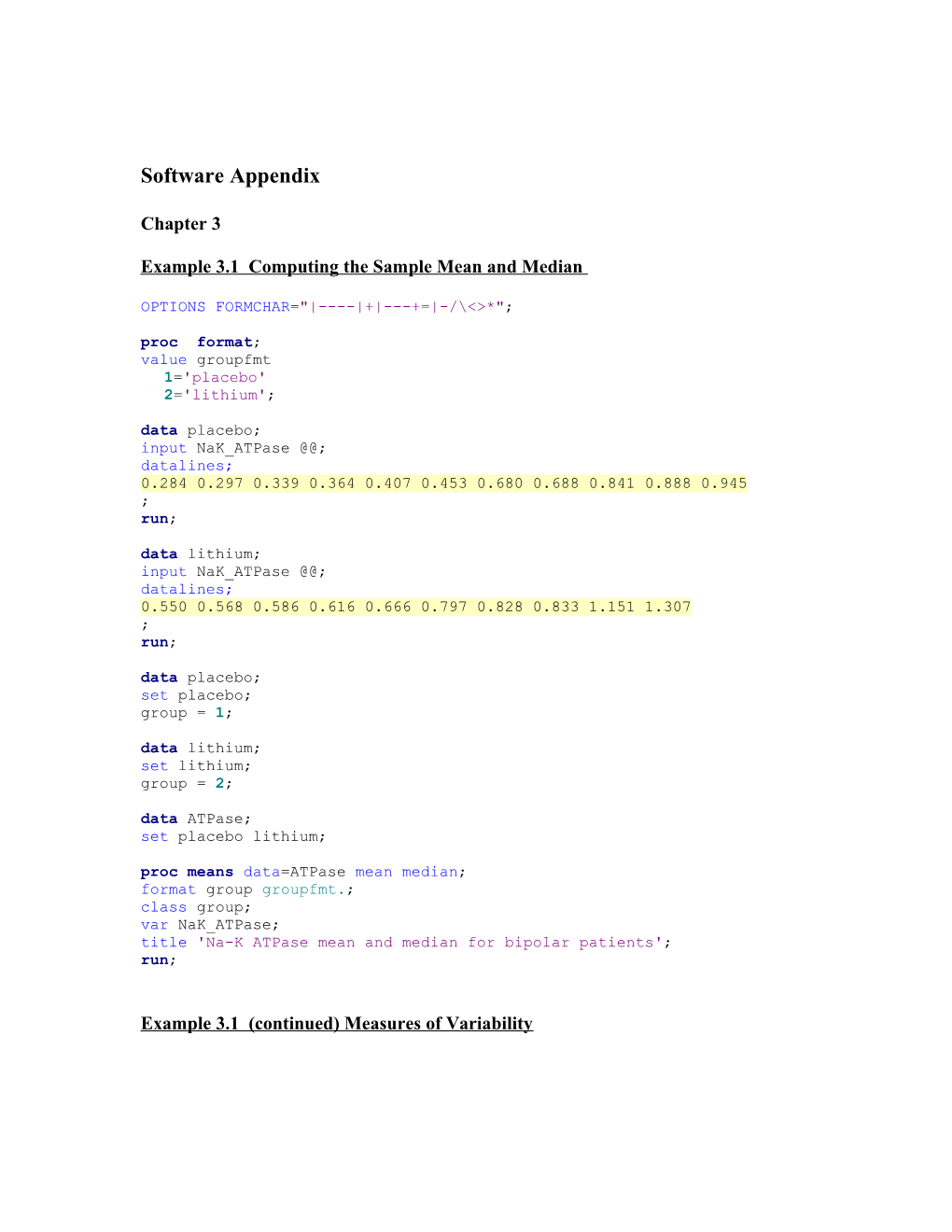

Example 3.1 Computing the Sample Mean and Median

    OPTIONS FORMCHAR="|----|+|---+=|-/\<>*";

    proc format;
      value groupfmt 1='placebo' 2='lithium';

    data placebo;
      input NaK_ATPase @@;
      datalines;
    0.284 0.297 0.339 0.364 0.407 0.453 0.680 0.688 0.841 0.888 0.945
    ;
    run;

    data lithium;
      input NaK_ATPase @@;
      datalines;
    0.550 0.568 0.586 0.616 0.666 0.797 0.828 0.833 1.151 1.307
    ;
    run;

    data placebo;
      set placebo;
      group = 1;

    data lithium;
      set lithium;
      group = 2;

    data ATPase;
      set placebo lithium;

    proc means data=ATPase mean median;
      format group groupfmt.;
      class group;
      var NaK_ATPase;
      title 'Na-K ATPase mean and median for bipolar patients';
    run;

Example 3.1 (continued) Measures of Variability

    proc means data=ATPase mean var std stderr cv p25 p75;
      format group groupfmt.;
      class group;
      var NaK_ATPase;
      title 'Measures of variability for Na-K ATPase for bipolar patients';
    run;

Example 3.2 Computing the Mode

    OPTIONS FORMCHAR="|----|+|---+=|-/\<>*";

    data PA;
      input ESR @@;
      datalines;
    63 23 9 4 1 53 22 4 4 12 6 17 2 4 14 27 38 2
    ;
    run;

    proc means data=PA mode;
      var ESR;
      title 'ESR mode for Psoriatic Arthritis patients';
    run;

Example 3.4 Confidence Intervals on a Binomial Proportion

    data Men;
      input under$ count;
      datalines;
    Y 102
    N 159
    ;
    run;

    title1 'Exact and Approximate Confidence Intervals for the Binomial Proportion';

    proc freq data=Men order=data;
      tables under / binomial;
      weight count;
      title2 'Proportion of Men Underreporting Protein Intake';
    run;

    data Women;
      input under$ count;
      datalines;
    Y 65
    N 158
    ;
    run;

    proc freq data=Women order=data;
      tables under / binomial;
      weight count;
      title2 'Proportion of Women Underreporting Protein Intake';
    run;

Example 3.6 Comparison of Two Means

    proc ttest data=ATPase;
      class group;
      var NaK_ATPase;
      title 't-test comparing bipolar patients on lithium and placebo';
    run;

Examples 3.7 and 3.8 1-Way ANOVA and Tukey-Kramer Method

    data Caucasian;
      input coil @@;
      ethnicity = 'Caucasian';
      datalines;
    1.88 1.94 2.46 3.23 1.72 1.12 2.27 0.95 1.74 2.96
    1.60 2.32 5.43 1.94 1.64 1.52 2.06 1.97 5.36 2.83
    ;
    run;

    data AA;
      input coil @@;
      ethnicity = 'AA';
      datalines;
    2.63 4.94 2.36 4.72 2.22 4.50 2.92 4.40 1.52 4.38
    4.44 6.01 4.47 2.25 4.67 2.21 4.83 1.28 4.86 3.75
    ;
    run;

    data Hispanic;
      input coil @@;
      ethnicity = 'Hispanic';
      datalines;
    2.16 1.78 3.07 5.89 2.87 3.95 1.71 1.75 5.36 1.96
    4.03 1.98 2.88 1.27 6.31 1.63 1.53 1.22 7.91 1.64
    ;
    run;

    data all;
      /* Set the length first so that 'Caucasian' (9 characters) is not
         truncated to the 8-character length inherited from Hispanic. */
      length ethnicity $ 9;
      set Hispanic AA Caucasian;
    run;

    proc glm data=all;
      class ethnicity;
      model coil = ethnicity;
      means ethnicity / bon tukey lsd;
      lsmeans ethnicity / pdiff;
      title 'comparison of coil lengths among ethnic groups';
    run;

Example 3.9 Simple Linear Regression

    data heart;
      input Hemoglobin gender $ age;
      datalines;
    11.1 male 5.1
    10.5 female 19.9
    12.2 male 15.8
    12.8 male 21.6
    10.8 male 7.0
    9.4 male 1.0
    11.9 male 3.5
    10.8 male 14.3
    13.0 female 3.7
    14.2 male 3.9
    6.7 female 1.6
    8.4 female 1.6
    10.2 male 0.5
    15.6 male 15.1
    9.3 female 0.4
    10.2 female 22.2
    10.4 male 0.7
    12.1 female 11.6
    15.2 male 17.4
    13.1 male 16.0
    8.1 female 12.4
    8.4 female 17.5
    11.0 female 16.2
    11.0 female 0.3
    11.0 female 1.6
    11.2 male 4.3
    11.4 female 18.0
    12.1 male 8.5
    12.8 female 18.3
    16.5 male 19.3
    ;
    run;

    proc reg data=heart;
      model Hemoglobin = age / clb;
    run;
    quit;

Example 3.10 ANCOVA

    data heart;
      input Hemoglobin gender $ age;
      if gender = "male" then sex = 1;
      else if gender = "female" then sex = 0;
      gender_age = sex*age;
      datalines;
    11.1 male 5.1
    10.5 female 19.9
    12.2 male 15.8
    12.8 male 21.6
    10.8 male 7.0
    9.4 male 1.0
    11.9 male 3.5
    10.8 male 14.3
    13.0 female 3.7
    14.2 male 3.9
    6.7 female 1.6
    8.4 female 1.6
    10.2 male 0.5
    15.6 male 15.1
    9.3 female 0.4
    10.2 female 22.2
    10.4 male 0.7
    12.1 female 11.6
    15.2 male 17.4
    13.1 male 16.0
    8.1 female 12.4
    8.4 female 17.5
    11.0 female 16.2
    11.0 female 0.3
    11.0 female 1.6
    11.2 male 4.3
    11.4 female 18.0
    12.1 male 8.5
    12.8 female 18.3
    16.5 male 19.3
    ;
    run;

    proc reg data=heart;
      model Hemoglobin = sex age gender_age;
    run;
    quit;

    proc reg data=heart;
      model Hemoglobin = sex age / influence vif collin;
    run;
    quit;

Example 3.11 The χ² Test

    data Ayadi;
      input PR $ ER $ count;
      datalines;
    + + 33
    + - 5
    - + 4
    - - 15
    ;
    run;

    proc freq data=Ayadi;
      tables PR*ER / chisq;
      weight count;
      title1 'ER and PR Expression in Ovarian Cancer Patients';
      title2 'Chi-square Test for Independence';
    run;

Example 3.12 The Odds Ratio

    data Hobbs;
      input methionine $ heart $ count;
      datalines;
    low defect 103
    low normal 27
    normal defect 120
    normal normal 63
    ;
    run;

    proc freq data=Hobbs;
      tables methionine*heart / relrisk;
      weight count;
      title1 'Methionine Levels and Heart Defect Status';
      title2 'Odds Ratio';
    run;

Chapter 4

Example 4.1 Probability Plot and Correlation Coefficient Test

    data SEMA;
      input semaphorin @@;
      datalines;
    310.0 185.0 237.0 166.5 328.5 319.5 739.5 149.3 168.0 159.8
    153.0 322.9 430.5 353.0 130.0 88.0 32.0 121.5 662.5 199.5
    388.5 139.0 146.0 269.0 433.0 570.0 173.0 66.0 104.0 170.0
    146.0 605.0 370.0 194.0
    ;
    run;

    proc rank data = SEMA normal = blom out = plot_pos;
      var semaphorin;
      ranks quantile;
    run;

    ods listing close;
    ods html;

    proc sgscatter data = plot_pos;
      plot quantile * semaphorin / reg;
      title1 'Normal Probability Plot';
    run;

    ods html close;
    ods listing;

    proc corr data = plot_pos nosimple noprob;
      var quantile;
      with semaphorin;
      title2 'Correlation Coefficient Test Statistic';
    run;
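As a reading aid, the normal scores that the normal = blom option of proc rank writes to the variable quantile can be stated explicitly. The notation below is introduced here and is an assumption, not the book's: r_i is the rank of the i-th semaphorin value among the n = 34 observations, and \Phi^{-1} is the standard normal quantile function.

```latex
q_i = \Phi^{-1}\!\left( \frac{r_i - 3/8}{\,n + 1/4\,} \right), \qquad i = 1, \dots, n
```

The correlation reported by proc corr is then the ordinary Pearson correlation between the q_i and the observed semaphorin values.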

Example 4.3 Measures of Skewness and Kurtosis

Note that the SAS code used to create the SAS data set SEMA is provided in Example 4.1.

    proc univariate data = SEMA;
      var semaphorin;
      title 'Measures of Skewness and Kurtosis';
    run;

    data sema;
      set sema;
      log_sema = log(semaphorin);
    run;

    proc univariate data = SEMA;
      var log_sema;
      title 'Measures of Skewness and Kurtosis for log-transformed data';
    run;

Example 4.4 Shapiro-Wilk Test for Normality

    proc univariate normal data = SEMA;
      var semaphorin;
      title 'Shapiro-Wilk Test Adjusted for Ties';
    run;

Example 4.5 Finding a Transformation

SAS macro bctrans

Source: Adapted from Dimakos (1995). Reproduced here with permission of the author.

    %macro bctrans(data=, out=, var=, r=, min=, max=, step=);

    /* Evaluate l(lambda) on a grid of lambda values */
    proc iml;
      use &data;
      read all var {&var} into x;
      n = nrow(x);
      one = j(n,1,1);
      mat = j(&r,2,0);
      lnx = log(x);
      sumlog = sum(lnx);
      start;
        i = 0;
        min1 = &min;
        max1 = &max;
        step1 = &step;
        r1 = &r;
        do lam = &min to &max by &step;
          i = i+1;
          lambda = round(lam, .01);
          x2 = x##lambda;
          x3 = x##lambda - one;
          x4 = ((x##lambda) - one)/lambda;
          mean2 = x4[:];
          if lambda = 0 then xl = log(x);
          else xl = ((x##lambda) - one)/lambda;
          mean = xl[:];
          d = xl - mean;
          ss = ssq(d)/n;
          l = -.5*n*log(ss) + ((lambda - 1)*sumlog);
          mat[i,1] = lambda;
          mat[i,2] = l;
        end;
      finish;
      run;
      print "Lambdas and their l(lambda) values", mat[format=8.3];
      create lambdas from mat;
      append from mat;
    quit;

    data lambdas;
      set lambdas;
      rename col1=lambda col2=l;
    run;

    proc plot data=lambdas nolegend;
      plot l*lambda;
      title 'lambda vs. l(lambda) values';
    run;
    quit;

    /* Keep only the row with the largest l(lambda) */
    proc sort data=lambdas;
      by descending l;
    run;

    data &out;
      set lambdas;
      if _n_ > 1 then delete;
    run;

    proc print data=&out;
      title 'Highest lambda and l(lambda) value';
    run;

    /* Apply the selected power to the original variable */
    proc iml;
      use &data;
      read all var {&var} into old;
      use &out;
      read all var {lambda l} into power;
      /* power = {lambda l}; take logs when lambda = 0 */
      if power[1] = 0 then new = log(old);
      else new = old##power[1];
      create final from new;
      append from new;
    quit;

    data final;
      set final;
      rename col1=&var;
    run;

    %mend bctrans;

    %bctrans(data=sema,out=boxcox,var=semaphorin,r=31,min=-2,max=1,step=.1)
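As a reading aid, the quantity that bctrans evaluates at each grid value of lambda can be written out from the IML statements above (xl, ss, sumlog, and l). This is a transcription of the macro's arithmetic rather than an independent derivation, and the symbols x_i^{(\lambda)}, \hat{\sigma}^2(\lambda), and \ell(\lambda) are notation introduced here.

```latex
x_i^{(\lambda)} =
\begin{cases}
  \dfrac{x_i^{\lambda} - 1}{\lambda}, & \lambda \neq 0, \\[1.5ex]
  \log x_i, & \lambda = 0,
\end{cases}
\qquad
\hat{\sigma}^2(\lambda) = \frac{1}{n} \sum_{i=1}^{n}
  \left( x_i^{(\lambda)} - \bar{x}^{(\lambda)} \right)^2,
\qquad
\ell(\lambda) = -\frac{n}{2} \log \hat{\sigma}^2(\lambda)
  + (\lambda - 1) \sum_{i=1}^{n} \log x_i .
```

In the call shown, lambda runs from -2 to 1 in steps of 0.1, which gives the 31 grid points passed as r=31. The macro stores the grid value with the largest \ell(\lambda) in the data set named by out=.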

SAS macro fastbc

Source: Adapted from Coleman (2004). Public Domain.

    %macro fastbc(data=, out=, var=, min=, max=);
    proc iml;
      use &data;
      read all var {&var} into y;
      n = nrow(y);
      sumlog = sum(log(y));
      tol = 1e-7;
      r = 0.61803399;
      a = &min;
      b = &max;