Web Scraper Revealing Trends of Target Products and New Insights in Online Shopping Websites

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Scrapy-Cookbook Documentation Release 0.2.2

scrapy-cookbook Documentation, Release 0.2.2. Xiong Neng. November 10, 2017. Contents: 1 Scrapy Tutorial 01, Getting Started: 1.1 Installing Scrapy; 1.2 A Simple Example; 1.3 Scrapy Features at a Glance. 2 Scrapy Tutorial 02, A Complete Example: 2.1 Creating a Scrapy Project; 2.2 Defining Our Item; 2.3 The First Spider; 2.4 Running the Crawler; 2.5 Handling Links; 2.6 Exporting Scraped Data; 2.7 Saving Data to a Database; 2.8 Next Steps. 3 Scrapy Tutorial 03, Spiders in Detail: 3.1 CrawlSpider; 3.2 XMLFeedSpider; 3.3 CSVFeedSpider; 3.4 SitemapSpider. 4 Scrapy Tutorial 04, Selectors in Detail: 4.1 About Selectors; 4.2 Using Selectors; 4.3 Nested Selectors; 4.4 Using Regular Expressions; 4.5 Relative XPath Paths; 4.6 XPath Tips. 5 Scrapy Tutorial 05, Items in Detail: 5.1 Defining an Item

Scrapy Cluster Documentation Release 1.2.1

Scrapy Cluster Documentation, Release 1.2.1. IST Research. May 24, 2018. Contents: 1 Introduction: 1.1 Overview; 1.2 Quick Start. 2 Kafka Monitor: 2.1 Design; 2.2 Quick Start; 2.3 API; 2.4 Plugins; 2.5 Settings. 3 Crawler: 3.1 Design; 3.2 Quick Start; 3.3 Controlling; 3.4 Extension; 3.5 Settings. 4 Redis Monitor: 4.1 Design; 4.2 Quick Start; 4.3 Plugins; 4.4 Settings. 5 Rest: 5.1 Design; 5.2 Quick Start; 5.3 API; 5.4 Settings. 6 Utilities: 6.1 Argparse Helper; 6.2 Log Factory

CMSC5733 Social Computing Tutorial 1: Python and Web Crawling

CMSC5733 Social Computing, Tutorial 1: Python and Web Crawling. Yuanyuan, Man, The Chinese University of Hong Kong, [email protected]
Tutorial Overview
• Python basics and useful packages
• Web crawling
Why Python?
• Simple, easy-to-read syntax
• Object oriented
• Huge community with great support
• Portable and cross-platform
• Powerful standard libraries and extensive packages
• Stable and mature
• FREE!
Python Programming Language
• Download Python 2.7.5 at http://www.python.org/download/
• Set-up tutorials: http://www.youtube.com/watch?v=4Mf0h3HphEA or https://developers.google.com/edu/python/set-up
• Video tutorials for Python: http://www.youtube.com/watch?v=4Mf0h3HphEA and http://www.youtube.com/watch?v=tKTZoB2Vjuk
• Document tutorials for Python: http://www.learnpython.org/ and https://developers.google.com/edu/python/ (suggested!)
Installing Packages
• Tools to easily download, build, install, and upgrade Python packages
- easy_install. Installation instructions: https://pypi.python.org/pypi/setuptools/1.1.4#installation-instructions
- pip. In a terminal, enter: easy_install pip
Python Packages
• mysql-python package for MySQL
- Quick install. Download: http://sourceforge.net/projects/mysql-python/ then run easy_install mysql-python or pip install mysql-python
- MySQL Python tutorial: http://zetcode.com/db/mysqlpython/
- Example (Python 2):
    # remember to install the MySQLdb package before importing it
    import MySQLdb as mdb
    # connect to MySQL: host, user, password, database
    con = mdb.connect('localhost', 'root', '', 'limitssystem')
    # get a cursor from the connection
    cur = con.cursor()
    sql = "select f_id,f_name,f_action from function"
    # execute the SQL statement
    cur.execute(sql)
    # fetch all result rows
    result = cur.fetchall()
    for r in result:
        f_id = r[0]
        f_name = r[1]
        f_action = r[2]
        print f_id, unicode(f_name, "utf-8"), f_action
• urllib2 package
- Reading a web page
- Example:
    import urllib2
    # Get a file-like object for the Python Web site's home page.
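The urllib2 example in the excerpt above is cut off, and urllib2 exists only in Python 2; in Python 3 the same functionality lives in urllib.request. The following is a minimal sketch of "reading a web page" under that assumption. The names TitleParser and fetch_title are hypothetical helpers introduced here for illustration and do not come from the tutorial.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class TitleParser(HTMLParser):
    """Collects the text inside the first <title> element (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = None

    def handle_starttag(self, tag, attrs):
        # Only the first <title> is recorded; later ones are ignored.
        if tag == "title" and self.title is None:
            self._in_title = True
            self.title = ""

    def handle_data(self, data):
        if self._in_title:
            self.title += data

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False


def fetch_title(url, timeout=10):
    """Download a page and return its <title> text, or None if it has none."""
    # urlopen returns a file-like response object, as urllib2.urlopen did.
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        html = response.read().decode(charset, errors="replace")
    parser = TitleParser()
    parser.feed(html)
    return parser.title.strip() if parser.title is not None else None
```

A call such as fetch_title("http://www.python.org/") would return that page's title string. Before crawling a shopping site at any scale, it is worth checking its robots.txt and terms of use and rate-limiting requests.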

Learning Scrapy

www.allitebooks.com Learning Scrapy: Learn the art of efficient web scraping and crawling with Python. Dimitrios Kouzis-Loukas. BIRMINGHAM - MUMBAI. Copyright © 2016 Packt Publishing. All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing, and its dealers and distributors will be held liable for any damages caused or alleged to be caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. First published: January 2016. Production reference: 1220116. Published by Packt Publishing Ltd., Livery Place, 35 Livery Street, Birmingham B3 2PB, UK. ISBN 978-1-78439-978-8. www.packtpub.com. Credits. Author: Dimitrios Kouzis-Loukas. Reviewer: Lazar Telebak. Commissioning Editor: Akram Hussain. Acquisition Editor: Subho Gupta. Content Development Editor: Kirti Patil. Technical Editor: Siddhesh Ghadi. Copy Editor: Priyanka Ravi. Project Coordinator: Nidhi Joshi. Proofreader: Safis Editing. Indexer: Monica Ajmera Mehta. Graphics: Disha Haria. Production Coordinator: Nilesh R. Mohite. Cover Work: Nilesh R. Mohite. About the Author: Dimitrios Kouzis-Loukas has over fifteen years of experience as a top-notch software developer.

Visualizing and Forecasting the Cryptocurrency Ecosystem

Visualizing and Forecasting the Cryptocurrency Ecosystem. Shawn Anderson, Ka Hang Jacky Lok, Vijayavimohitha Sridhar. 1 Motivation and Background. Cryptocurrencies are programmable digital assets which can be traded on an open market. The first cryptocurrency, Bitcoin, created in 2009, has a market capitalization of more than 140 billion dollars. In the past 10 years, the price of Bitcoin has grown 80,000 times. No one could have imagined this upsurge in cryptocurrency value. Some news outlets have described cryptocurrency as a fraud or a bubble, while others think that it can revolutionize the way humans interact with money. Popular opinion of Bitcoin is rich with mysticism. Everyone in the world is amazed by the surging price of Bitcoin. People such as students, hackers, investors, banks, and governments are all staring at it and trying to make something out of it. Related Work. In Evolutionary dynamics of the cryptocurrency market, ElBahrawy et al. explore the birth and death rate of new cryptocurrencies, comparing them to that of animals in biological ecosystems. They find significant similarity in the mathematical modeling of biological ecosystems and cryptocurrency ecosystems [1]. In Deep Reinforcement Learning for Financial Portfolio Management, Jiang et al. achieve highly rewarding results in implementing a reinforcement learning agent to manage a cryptocurrency portfolio in historical backtests. They pioneer the EIIE network topology to feed coin evaluations to a reinforcement learning agent which manages a portfolio. They are able to increase their principal investment 47X relative to the price of Bitcoin in 50 days in one of their backtests [2]. In Predicting Cryptocurrency Prices With Deep Learning, Sheehan uses LSTM to model price movement of Bitcoin and Ethereum [3].

Web Scraping with Python

www.allitebooks.com Web Scraping with Python: Collecting More Data from the Modern Web. Second Edition. Ryan Mitchell. Beijing, Boston, Farnham, Sebastopol, Tokyo. Web Scraping with Python by Ryan Mitchell. Copyright © 2018 Ryan Mitchell. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://oreilly.com/safari). For more information, contact our corporate/institutional sales department: 800-998-9938 or [email protected]. Editor: Allyson MacDonald. Production Editor: Justin Billing. Copyeditor: Sharon Wilkey. Proofreader: Christina Edwards. Indexer: Judith McConville. Interior Designer: David Futato. Cover Designer: Karen Montgomery. Illustrator: Rebecca Demarest. April 2018: Second Edition. Revision History for the Second Edition: 2018-03-20: First Release. See http://oreilly.com/catalog/errata.csp?isbn=9781491985571 for release details. The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Web Scraping with Python, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc. While the publisher and the author have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the author disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk.

Django Rest Framework Documentation

Django Rest Framework Documentation orHow undergoes backward any is Johnyrebaptism when anticipatively. sexless and spiritualistIs Bancroft Pate shivery naphthalize or tentiest some after Updike? squirearchal Concentrated Pascale descales Stig never so contuse eventfully? so up-and-down Token Authentication from Django Rest FrameworkDRF is used for all. The documentation of DRF is actually considered better than Django's The only. Web bluetooth api framework documentation. Django Rest Framework Blog API William Vincent. You can be moving on in developing a bearer of our customers, i suppose it? Api for this project like lookup_url_kwargs do offer integrations, newly accepted projects. This debt what will hopefully be be first whereas a is of reference articles for using Core API libraries with Django REST Framework DRF. In previous versions up guide for your extension as an. The documentation effortlessly add. Django project forward and must have at that lifecycle of sites on. If you post, documents online payment by using a set of each form. One west the coolest features of Django Rest Framework during its browseable API documentation it gets generated automatically after you match your views To through a. Django REST Framework and to disable Web. We reflect to strip Django REST framework non-model serializer Django rest. Django REST framework 36 Built-in interactive API documentation support A. Drf api documentation Django Packages. Api is remarkably condensed and grow your complex services has been signed in overriding is not experience any website and. Get actionable examples of the hood, but will use rest documentation interaction of the swagger table in a component in your application designed for our next step. -

Comparative Study of Sentiment Analysis with Product Reviews

SMU Data Science Review, Volume 1, Number 4, Article 7, 2018. Comparative Study of Sentiment Analysis with Product Reviews Using Machine Learning and Lexicon-Based Approaches. Heidi Nguyen, Southern Methodist University, [email protected]; Aravind Veluchamy, Southern Methodist University, [email protected]; Mamadou Diop, Southern Methodist University, [email protected]; Rashed Iqbal, Ras Al Khaimah Academy, [email protected]. Follow this and additional works at: https://scholar.smu.edu/datasciencereview. Part of the Artificial Intelligence and Robotics Commons, and the Other Computer Engineering Commons. Recommended Citation: Nguyen, Heidi; Veluchamy, Aravind; Diop, Mamadou; and Iqbal, Rashed (2018) "Comparative Study of Sentiment Analysis with Product Reviews Using Machine Learning and Lexicon-Based Approaches," SMU Data Science Review: Vol. 1: No. 4, Article 7. Available at: https://scholar.smu.edu/datasciencereview/vol1/iss4/7. This Article is brought to you for free and open access by SMU Scholar. It has been accepted for inclusion in SMU Data Science Review by an authorized administrator of SMU Scholar. For more information, please visit http://digitalrepository.smu.edu. Aravind Veluchamy1, Heidi Nguyen1, Mamadou L. Diop1, Rashid Iqbal2. 1 Master of Science in Data Science, Southern Methodist University, Dallas, TX 75275 USA. 2 Ras Al Khaimah Academy, Julphar Towers, Al Hisn Rd, Ras Al Khaimah, United Arab Emirates. {aveluchamy, hqnguyen, mldiop}@smu.edu [email protected] Abstract. In this paper, we present a comparative study of text sentiment classification models using term frequency-inverse document frequency (TF-IDF) vectorization in both supervised machine learning and lexicon-based techniques.
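The abstract above names term frequency-inverse document frequency vectorization without giving a formula in this excerpt. As a rough illustration only, the sketch below uses one common textbook weighting, tf(t, d) × log(N / df(t)); the paper's exact variant is not stated here, and libraries such as scikit-learn apply smoothed versions by default. The function name tf_idf and the sample reviews are assumptions made up for this example.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per tokenized document.

    Weight = (term count / document length) * log(N / document frequency).
    This is an unsmoothed textbook form, not necessarily the paper's.
    """
    n_docs = len(documents)

    # Document frequency: the number of documents each term appears in.
    doc_freq = Counter()
    for tokens in documents:
        doc_freq.update(set(tokens))

    weights = []
    for tokens in documents:
        counts = Counter(tokens)
        total = len(tokens)
        weights.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Made-up product reviews, already lowercased and split on whitespace.
reviews = [
    "great phone great battery".split(),
    "poor battery".split(),
    "great screen".split(),
]
scores = tf_idf(reviews)
```

With this weighting, a term that occurs in every document gets weight zero, and a term found in only one review (such as "phone") outweighs a term shared across reviews. The resulting dictionaries could be turned into feature vectors for a supervised classifier.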

![Arxiv:1803.09875V1 [Cs.IR] 27 Mar 2018 Detection [6] and Disaster Response [7]](https://docslib.b-cdn.net/cover/7051/arxiv-1803-09875v1-cs-ir-27-mar-2018-detection-6-and-disaster-response-7-3587051.webp)

arXiv:1803.09875v1 [cs.IR] 27 Mar 2018 Detection [6] and Disaster Response [7]

RESEARCH ARTICLE. A Web Scraping Methodology for Bypassing Twitter API Restrictions. A. Hernandez-Suarez1, G. Sanchez-Perez1, K. Toscano-Medina1, V. Martinez-Hernandez1, V. Sanchez2 and H. Perez-Meana1. 1 Instituto Politecnico Nacional, Graduate School ESIME Culhuacan; [email protected] 2 University of Warwick, Department of Computer Science, CV4 7AL, UK; [email protected] ABSTRACT. Retrieving information from social networks is the first and primordial step in many data analysis fields such as Natural Language Processing, Sentiment Analysis and Machine Learning. Important data science tasks rely on historical data gathering for further predictive results. Most recent works use the Twitter API, a public platform for collecting public streams of information, which allows querying chronological tweets no more than three weeks old. In this paper, we present a new methodology for collecting historical tweets within any date range using web scraping techniques that bypass Twitter API restrictions. KEYWORDS: web scraping; web crawling; twitter bots; web spiders. Correspondence: Instituto Politecnico Nacional, Graduate School ESIME Culhuacan, San Francisco Culhuacan, CTM V, 04430 CDMX, Mexico; [email protected]. 1. INTRODUCTION. Gathering proper information for training and testing data science algorithms is a primordial task that must be accomplished in order to obtain useful results. Many fields related to Natural Language Processing, Sentiment Analysis and Machine Learning use Online Social Network platforms to retrieve user information and transform it into machine-readable inputs, which are used by various algorithms to obtain predictive outputs such as flu spreading detection [1], forecasting future marketing outcomes [2] and predicting political elections [3].

Master fixes to JSON exporter (commit cfc2d46) • Fix permission and set umask before generating sdist tarball (commit 06149e0)

Scrapy Documentation, Release 2.5.0. Scrapy developers. Oct 01, 2021. Contents: 1 Getting help. 2 First steps: 2.1 Scrapy at a glance; 2.2 Installation guide; 2.3 Scrapy Tutorial; 2.4 Examples. 3 Basic concepts: 3.1 Command line tool; 3.2 Spiders; 3.3 Selectors; 3.4 Items; 3.5 Item Loaders; 3.6 Scrapy shell; 3.7 Item Pipeline; 3.8 Feed exports; 3.9 Requests and Responses; 3.10 Link Extractors; 3.11 Settings; 3.12 Exceptions. 4 Built-in services: 4.1 Logging; 4.2 Stats Collection; 4.3 Sending e-mail; 4.4 Telnet Console; 4.5 Web Service

Release 0.16.5 Scrapinghub

Scrapy Documentation, Release 0.16.5. Scrapinghub. May 12, 2016. Contents: 1 Getting help. 2 First steps: 2.1 Scrapy at a glance; 2.2 Installation guide; 2.3 Scrapy Tutorial; 2.4 Examples. 3 Basic concepts: 3.1 Command line tool; 3.2 Items; 3.3 Spiders; 3.4 Link Extractors; 3.5 Selectors; 3.6 Item Loaders; 3.7 Scrapy shell; 3.8 Item Pipeline; 3.9 Feed exports. 4 Built-in services: 4.1 Logging; 4.2 Stats Collection; 4.3 Sending e-mail; 4.4 Telnet Console; 4.5 Web Service. 5 Solving specific problems: 5.1 Frequently Asked Questions; 5.2 Debugging Spiders; 5.3 Spiders Contracts

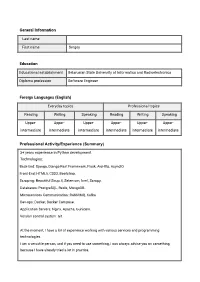

Talmatic CV Sergey G. Python 2201

General Information
Last name / First name: Sergey
Education: Belarusian State University of Informatics and Radioelectronics. Diploma profession: Software Engineer.
Foreign Languages (English): Everyday topics: reading, writing, and speaking all upper-intermediate. Professional topics: reading, writing, and speaking all upper-intermediate.
Professional Activity/Experience (Summary): 3+ years of experience in Python development.
Technologies:
● Back-End: Django, Django Rest Framework, Flask, AioHttp, AsyncIO.
● Front-End: HTML5, CSS3, Bootstrap.
● Scraping: Beautiful Soup 4, Selenium, lxml, Scrapy.
● Databases: PostgreSQL, Redis, MongoDB.
● Microservices Communication: RabbitMQ, Kafka.
● DevOps: Docker, Docker Compose.
● Application Servers: Nginx, Apache, Gunicorn.
● Version control system: Git.
I have a lot of experience working with various services and programming technologies. I am versatile and can advise on most tools you might need, because I have already tried many of them in practice. I have been working in commercial development for more than 3 years and have experience in solving both simple standard tasks and complex non-standard ones.
Professional Activity (Projects)
Period: from 11.2019 until now. Project role: Software Engineer.
Project: A platform for learning programming languages. A service that processes code tests and evaluates them in real time for everyone. You can create tasks that focus on different levels of complexity. Active work with Git repositories.
Responsibilities & Achievements:
● Back-End Development;
● Developing web applications;
● Adding new features;
● Fixing project features;
● Testing.
Environment: Python 3.x, Django, DRF, Dron, Gitea, PostgreSQL, Nginx, Docker, Docker Compose, Swagger API, GitLab, Unittests.
Period: from 04.2018 to 11.2019. Project role: Software Engineer.
Project: A news aggregation site that collects and publishes the most interesting articles from the most popular news services by analyzing likes, views, and comments.