Xpath-Tutorial

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

In-Depth Evaluation of Redirect Tracking and Link Usage

Martin Koop*, Erik Tews, and Stefan Katzenbeisser. Proceedings on Privacy Enhancing Technologies; 2020 (4):394–413.

Abstract: In today's web, information gathering on users' online behavior takes a major role. Advertisers use different tracking techniques that invade users' privacy by collecting data on their browsing activities and interests. To prevent this threat, various privacy tools are available that try to block third-party elements. However, there exist various tracking techniques that are not covered by those tools, such as redirect link tracking. Here, tracking is hidden in ordinary website links pointing to further content. By clicking those links, or by automatic URL redirects, the user is being redirected through a chain of potential tracking servers not visible to the user. In this scenario, the tracker collects valuable data about the content, topic, or user interests of the website. Additionally, the tracker sets not only third-party but also first-party tracking cookies which are far …

1 Introduction

It is common practice to use different tracking techniques on websites. This covers the web advertisement infrastructure like banners, so-called web beacons or social media buttons to gather data on the users' online behavior as well as privacy-sensitive information [52, 69, 73]. Among others, those include information on the user's real name, address, gender, shopping behavior or location [4, 19]. Connecting this data with information gathered from search queries, mobile devices [17] or content published in online social networks [5, 79] allows revealing further privacy-sensitive information [62]. This includes personal interests, problems or desires of users, political or religious views, as well as the financial status.

Bibliography of Erik Wilde

Erik Wilde's Bibliography (dretbiblio), http://github.com/dret/biblio (August 29, 2018)

References

[1] AFIPS Fall Joint Computer Conference, San Francisco, California, December 1968.
[2] Seventeenth IEEE Conference on Computer Communication Networks, Washington, D.C., 1978.
[3] ACM SIGACT-SIGMOD Symposium on Principles of Database Systems, Los Angeles, California, March 1982. ACM Press.
[4] First Conference on Computer-Supported Cooperative Work, 1986.
[5] 1987 ACM Conference on Hypertext, Chapel Hill, North Carolina, November 1987. ACM Press.
[6] 18th IEEE International Symposium on Fault-Tolerant Computing, Tokyo, Japan, 1988. IEEE Computer Society Press.
[7] Conference on Computer-Supported Cooperative Work, Portland, Oregon, 1988. ACM Press.
[8] Conference on Office Information Systems, Palo Alto, California, March 1988.
[9] 1989 ACM Conference on Hypertext, Pittsburgh, Pennsylvania, November 1989. ACM Press.
[10] UNIX - The Legend Evolves. Summer 1990 UKUUG Conference, Buntingford, UK, 1990. UKUUG.
[11] Fourth ACM Symposium on User Interface Software and Technology, Hilton Head, South Carolina, November 1991.
[12] GLOBECOM'91 Conference, Phoenix, Arizona, 1991. IEEE Computer Society Press.
[13] IEEE INFOCOM '91 Conference on Computer Communications, Bal Harbour, Florida, 1991. IEEE Computer Society Press.
[14] IEEE International Conference on Communications, Denver, Colorado, June 1991.
[15] International Workshop on CSCW, Berlin, Germany, April 1991.
[16] Third ACM Conference on Hypertext, San Antonio, Texas, December 1991. ACM Press.
[17] 11th Symposium on Reliable Distributed Systems, Houston, Texas, 1992. IEEE Computer Society Press.
[18] 3rd Joint European Networking Conference, Innsbruck, Austria, May 1992.
[19] Fourth ACM Conference on Hypertext, Milano, Italy, November 1992. ACM Press.
[20] GLOBECOM'92 Conference, Orlando, Florida, December 1992. IEEE Computer Society Press.
[21] IEEE INFOCOM '92 Conference on Computer Communications, Florence, Italy, 1992.

IBM Cognos Analytics - Reporting Version 11.1

IBM Cognos Analytics - Reporting Version 11.1 User Guide

Product Information

This document applies to IBM Cognos Analytics version 11.1.0 and may also apply to subsequent releases.

Copyright

Licensed Materials - Property of IBM. © Copyright IBM Corp. 2005, 2021. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

IBM, the IBM logo and ibm.com are trademarks or registered trademarks of International Business Machines Corp., registered in many jurisdictions worldwide. Other product and service names might be trademarks of IBM or other companies. A current list of IBM trademarks is available on the Web at "Copyright and trademark information" at www.ibm.com/legal/copytrade.shtml.

The following terms are trademarks or registered trademarks of other companies:
• Adobe, the Adobe logo, PostScript, and the PostScript logo are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States, and/or other countries.
• Microsoft, Windows, Windows NT, and the Windows logo are trademarks of Microsoft Corporation in the United States, other countries, or both.
• Intel, Intel logo, Intel Inside, Intel Inside logo, Intel Centrino, Intel Centrino logo, Celeron, Intel Xeon, Intel SpeedStep, Itanium, and Pentium are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.
• Linux is a registered trademark of Linus Torvalds in the United States, other countries, or both.
• UNIX is a registered trademark of The Open Group in the United States and other countries.
• Java and all Java-based trademarks and logos are trademarks or registered trademarks of Oracle and/or its affiliates.

QUERYING JSON and XML Performance Evaluation of Querying Tools for Offline-Enabled Web Applications

QUERYING JSON AND XML: Performance evaluation of querying tools for offline-enabled web applications

Master Degree Project in Informatics, One year Level, 30 ECTS, Spring term 2012
Adrian Hellström
Supervisor: Henrik Gustavsson
Examiner: Birgitta Lindström

Submitted by Adrian Hellström to the University of Skövde as a final year project towards the degree of M.Sc. in the School of Humanities and Informatics. The project has been supervised by Henrik Gustavsson.

2012-06-03

I hereby certify that all material in this final year project which is not my own work has been identified and that no work is included for which a degree has already been conferred on me.

Abstract

This article explores the viability of third-party JSON tools as an alternative to XML when an application requires querying and filtering of data, as well as how the application deviates between browsers. We examine and describe the querying alternatives as well as the technologies we worked with and used in the application. The application is built using HTML5 features such as local storage and canvas, and is benchmarked in Internet Explorer, Chrome and Firefox. The application built is an animated infographical display that uses querying functions in JSON and XML to filter values from a dataset and then display them through the HTML5 canvas technology. The results were in favor of JSON and suggested that using third-party tools did not impact performance compared to native XML functions. In addition, the usage of JSON enabled easier development and cross-browser compatibility. Further research is proposed to examine document-based data filtering as well as investigating why performance deviated between toolsets.

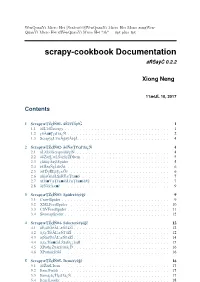

Scrapy-Cookbook Documentation Release 0.2.2

scrapy-cookbook Documentation, Release 0.2.2
Xiong Neng
November 10, 2017

Contents

1 Scrapy Tutorial 01: Getting Started
  1.1 Installing Scrapy
  1.2 A Simple Example
  1.3 Scrapy Features at a Glance
2 Scrapy Tutorial 02: A Complete Example
  2.1 Creating a Scrapy Project
  2.2 Defining Our Item
  2.3 The First Spider
  2.4 Running the Crawler
  2.5 Handling Links
  2.6 Exporting Scraped Data
  2.7 Saving Data to a Database
  2.8 Next Steps
3 Scrapy Tutorial 03: Spiders in Detail
  3.1 CrawlSpider
  3.2 XMLFeedSpider
  3.3 CSVFeedSpider
  3.4 SitemapSpider
4 Scrapy Tutorial 04: Selectors in Detail
  4.1 About Selectors
  4.2 Using Selectors
  4.3 Nested Selectors
  4.4 Using Regular Expressions
  4.5 XPath Relative Paths
  4.6 XPath Tips
5 Scrapy Tutorial 05: Items in Detail
  5.1 Defining Items

Scraping HTML with Xpath Stéphane Ducasse, Peter Kenny

To cite this version: Stéphane Ducasse, Peter Kenny. Scraping HTML with XPath. published by the authors, pp.26, 2017. hal-01612689

HAL Id: hal-01612689
https://hal.inria.fr/hal-01612689
Submitted on 7 Oct 2017

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Scraping HTML with XPath
Stéphane Ducasse and Peter Kenny
Square Bracket tutorials
September 28, 2017
master @ a0267b2

Copyright 2017 by Stéphane Ducasse and Peter Kenny. The contents of this book are protected under the Creative Commons Attribution-ShareAlike 3.0 Unported license.

You are free:
• to Share: to copy, distribute and transmit the work,
• to Remix: to adapt the work,

Under the following conditions:

Attribution. You must attribute the work in the manner specified by the author or licensor (but not in any way that suggests that they endorse you or your use of the work).

Share Alike. If you alter, transform, or build upon this work, you may distribute the resulting work only under the same, similar or a compatible license.

For any reuse or distribution, you must make clear to others the license terms of this work.

SVG-Based Knowledge Visualization

MASARYK UNIVERSITY, FACULTY OF INFORMATICS

SVG-based Knowledge Visualization
DIPLOMA THESIS
Miloš Kaláb
Brno, spring 2012

Declaration

Hereby I declare that this paper is my original authorial work, which I have worked out on my own. All sources, references and literature used or excerpted during elaboration of this work are properly cited and listed in complete reference to the due source.

Advisor: RNDr. Tomáš Gregar Ph.D.

Acknowledgement

I would like to thank RNDr. Tomáš Gregar Ph.D. for supervising the thesis. His opinions, comments and advice helped me a lot in accomplishing this work. I would also like to thank Dr. Daniel Sonntag from DFKI GmbH, Saarbrücken, Germany, for the opportunity to work for him on the Medico project and for his supervision of the thesis during my Erasmus exchange in Germany. Big thanks also to Jochen Setz from Dr. Sonntag's team, who worked on the server background used by my visualization. Last but not least, I would like to thank my family and friends for being extraordinarily supportive.

Abstract

The aim of this thesis is to analyze the visualization of semantic data and suggest an approach to general visualization into the SVG format. Afterwards, the approach is to be implemented in a visualizer allowing the user to customize the visualization according to the nature of the data. The visualizer was integrated as an extension of Fresnel Editor.

Keywords

Semantic knowledge, SVG, Visualization, JavaScript, Java, XML, Fresnel, XSLT

Contents

Introduction
1 Brief Introduction to the Related Technologies
  1.1 XML – Extensible Markup Language
    1.1.1 XSLT – Extensible Stylesheet Lang.

XPATH in NETCONF and YANG Table of Contents

XPATH IN NETCONF AND YANG

Table of Contents

1. Introduction
2. XPath 1.0 Introduction
3. The Use of XPath in NETCONF
4. The Use of XPath in YANG
5. XPath and ConfD
6. Conclusion
7. Additional Resources

1. Introduction

XPath is a powerful tool used by NETCONF and YANG. This application note will help you to understand and utilize this advanced feature of NETCONF and YANG. It gives a brief introduction to XPath, then describes how XPath is used in NETCONF and YANG, and finishes with a discussion of XPath in ConfD.

The XPath 1.0 standard was defined by the W3C in 1999. It is a language used to address the parts of an XML document and was originally designed to be used by XML Transformations. XPath gets its name from its use of path notation for navigating through the hierarchical structure of an XML document. Since XML serves as the encoding format for NETCONF and a data model defined in YANG is represented in XML, it was natural for NETCONF and YANG to utilize XPath.

2. XPath 1.0 Introduction

XML Path Language, or XPath 1.0, is a W3C recommendation first introduced in 1999. It is a language that is used to address and match parts of an XML document. XPath sees the XML document as a tree containing different kinds of nodes. The types of nodes can be root, element, text, attribute, namespace, processing instruction, and comment nodes.
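The tree-of-nodes view described above can be sketched in Python. This is a minimal illustration, not taken from the application note: the XML document and its element names (`interfaces`, `interface`, `mtu`) are invented for the example, and the standard-library `xml.etree.ElementTree` module supports only a limited subset of XPath 1.0 path syntax.

```python
import xml.etree.ElementTree as ET

# Hypothetical configuration document, invented for illustration only.
DOC = """
<interfaces>
  <interface name="eth0"><mtu>1500</mtu></interface>
  <interface name="eth1"><mtu>9000</mtu></interface>
</interfaces>
"""

root = ET.fromstring(DOC)

# Element nodes: every <interface> child of the root element.
interfaces = root.findall("./interface")

# Attribute nodes: the name attribute of each selected element.
names = [iface.get("name") for iface in interfaces]

# A predicate narrows the path to the element whose attribute matches.
eth1 = root.find("./interface[@name='eth1']")

# Text nodes: the character data inside the <mtu> child.
eth1_mtu = eth1.findtext("mtu")

print(names, eth1_mtu)
```

The path strings read like file-system paths, which is the notation the excerpt says XPath is named after; a full XPath 1.0 engine would additionally expose comment, namespace, and processing-instruction nodes.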

Pearls of XSLT/Xpath 3.0 Design

PEARLS OF XSLT AND XPATH 3.0 DESIGN

PREFACE

XSLT 3.0 and XPath 3.0 contain a lot of powerful and exciting new capabilities. The purpose of this paper is to highlight the new capabilities. Have you got a pearl that you would like to share? Please send me an email and I will add it to this paper (and credit you). I ask three things:
1. The pearl highlights a capability that is new to XSLT 3.0 or XPath 3.0.
2. Provide a short, complete, working stylesheet with a sample input document.
3. Provide a brief description of the code.
This is an evolving paper. As new pearls are found, they will be added.

TABLE OF CONTENTS

1. XPath 3.0 is a composable language
2. Higher-order functions
3. Partial functions
4. Function composition
5. Recursion with anonymous functions
6. Closures
7. Binary search trees
8. -- next pearl is? --

CHAPTER 1: XPATH 3.0 IS A COMPOSABLE LANGUAGE

The XPath 3.0 specification says this: XPath 3.0 is a composable language. What does that mean? It means that every operator and language construct allows any XPath expression to appear as its operand (subject only to operator precedence and data typing constraints). For example, take this expression:

    3 + ____

The plus (+) operator has a left-operand, 3. What can the right-operand be? Answer: any XPath expression! Let's use the max() function as the right-operand:

    3 + max(___)

Now, what can the argument to the max() function be? Answer: any XPath expression! Let's use a for-loop as its argument:

    3 + max(for $i in 1 to 10 return ___)

Now, what can the return value of the for-loop be? Answer: any XPath expression! Let's use an if-statement:

    3 + max(for $i in 1 to 10 return (if ($i gt 5) then ___ else ___))

And so forth.
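The same nesting idea can be tried out in Python, whose expressions compose in a similar way. This is only an analogue, not XPath: the source leaves the two branch values blank, so the values used here (`i * 2` and `0`) are hypothetical choices made purely so the expression can be evaluated.

```python
# Python analogue of the nested XPath expression
#   3 + max(for $i in 1 to 10 return (if ($i gt 5) then A else B))
# A and B are blank in the source; i * 2 and 0 are hypothetical stand-ins.
result = 3 + max((i * 2 if i > 5 else 0) for i in range(1, 11))

print(result)
```

Each blank in the XPath version corresponds to a slot that accepts any expression: the operand of `+`, the argument of `max`, the body of the loop, and each branch of the conditional.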

Download Forecheck Guide

Forecheck

Content

1 Welcome & Introduction
2 Overview: What Forecheck Can Do
3 First Steps
4 Projects and Analyses
5 Scheduler and Queue
6 Important Details
  6.1 Languages, Character Sets and Unicode
  6.2 Choosing the correct Font
  6.3 Storage Location of Data
  6.4 Forecheck User-Agent and Web Analysis Tools
  6.5 Error Handling
  6.6 Robots.txt, noindex, nofollow
  6.7 Complete Analysis of large Websites
  6.8 Finding all pages of a Website
  6.9 Google Analytics

Lxmldoc-4.5.0.Pdf

lxml
2020-01-29

Contents

I lxml
1 lxml
  Introduction
  Documentation
  Download
  Mailing list
  Bug tracker
  License
  Old Versions
2 Why lxml?
  Motto
  Aims
3 Installing lxml
  Where to get it
  Requirements
  Installation
    MS Windows
    Linux
    MacOS-X
  Building lxml from dev sources
  Using lxml with python-libxml2
  Source builds on MS Windows
  Source builds on MacOS-X
4 Benchmarks and Speed
  General notes
  How to read the timings
  Parsing and Serialising
  The ElementTree

Scrapy Cluster Documentation Release 1.2.1

Scrapy Cluster Documentation, Release 1.2.1
IST Research
May 24, 2018

Contents

1 Introduction
  1.1 Overview
  1.2 Quick Start
2 Kafka Monitor
  2.1 Design
  2.2 Quick Start
  2.3 API
  2.4 Plugins
  2.5 Settings
3 Crawler
  3.1 Design
  3.2 Quick Start
  3.3 Controlling
  3.4 Extension
  3.5 Settings
4 Redis Monitor
  4.1 Design
  4.2 Quick Start
  4.3 Plugins
  4.4 Settings
5 Rest
  5.1 Design
  5.2 Quick Start
  5.3 API
  5.4 Settings
6 Utilities
  6.1 Argparse Helper
  6.2 Log Factory